Lecture

Consider the stationary random function  which has an ergodic property, and suppose that we have only one implementation of this random function, but on a sufficiently large time interval

which has an ergodic property, and suppose that we have only one implementation of this random function, but on a sufficiently large time interval  . For an ergodic stationary random function, one implementation of a sufficiently long duration is practically equivalent (in terms of the amount of information about the random function) to the set of realizations of the same total duration; characteristics of a random function can be approximated not as averages over a variety of observations, but as averages over time.

. For an ergodic stationary random function, one implementation of a sufficiently long duration is practically equivalent (in terms of the amount of information about the random function) to the set of realizations of the same total duration; characteristics of a random function can be approximated not as averages over a variety of observations, but as averages over time.  . In particular, with a sufficiently large

. In particular, with a sufficiently large  expected value

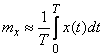

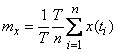

expected value  can be approximately calculated by the formula

can be approximately calculated by the formula

. (17.8.1)

. (17.8.1)

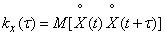
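Below is a minimal Python sketch of formula (17.8.1). It assumes the realization can be sampled at any time $t$, so here it is a Python function, and it approximates the integral by the trapezoidal rule. The test signal $x(t) = 3 + \cos(2t + 0.7)$ is a hypothetical realization, not data from the text. Its exact time average over $[0, T]$ differs from 3 by at most $1/T$, so the estimate should settle near 3 as $T$ grows. The function names are illustrative only.

```python
import math
from typing import Callable


def time_average(x: Callable[[float], float], T: float, steps: int = 100_000) -> float:
    """Approximate m_x ~ (1/T) * integral_0^T x(t) dt (formula 17.8.1)
    with the trapezoidal rule on `steps` equal subintervals."""
    h = T / steps
    total = 0.5 * (x(0.0) + x(T))
    for k in range(1, steps):
        total += x(k * h)
    return total * h / T


def realization(t: float) -> float:
    """Hypothetical single realization: level 3 plus a harmonic with fixed phase 0.7."""
    return 3.0 + math.cos(2.0 * t + 0.7)


for T in (10.0, 100.0, 1000.0):
    print(f"T = {T:6.0f}: m_x estimate = {time_average(realization, T):.4f}")
```

For this signal the deviation from 3 stays within $1/T$. That bound is what "sufficiently long" amounts to in this particular case.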

Similarly, the correlation function $K_x(\tau)$ can be approximately found for any $\tau$. Indeed, the correlation function is, by definition, nothing more than the expectation of the random function $\mathring{X}(t)\,\mathring{X}(t+\tau)$:

$$K_x(\tau) = M\left[\mathring{X}(t)\,\mathring{X}(t+\tau)\right]. \qquad (17.8.2)$$

This expectation can also, obviously, be approximately calculated as a time average.

We fix some value  and calculate in this way the correlation function

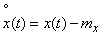

and calculate in this way the correlation function  . For this, it is convenient to pre-center this implementation.

. For this, it is convenient to pre-center this implementation.  , i.e., subtract the expectation from it (17.8.1):

, i.e., subtract the expectation from it (17.8.1):

. (17.8.3)

. (17.8.3)

Calculate for a given  expectation of a random function

expectation of a random function  as time average. In this case, obviously, we will have to take into account not the whole time interval from 0 to

as time average. In this case, obviously, we will have to take into account not the whole time interval from 0 to  , and somewhat smaller, since the second factor

, and somewhat smaller, since the second factor  we are not known for everyone

we are not known for everyone  , but only for those for whom

, but only for those for whom  .

.

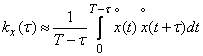

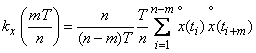

Calculating the time average in the way described above, we get:

$$K_x(\tau) \approx \frac{1}{T-\tau}\int_0^{T-\tau} \mathring{x}(t)\,\mathring{x}(t+\tau)\,dt. \qquad (17.8.4)$$

By calculating the integral (17.8.4) for a number of values  , you can approximately reproduce point by point the entire course of the correlation function.

, you can approximately reproduce point by point the entire course of the correlation function.

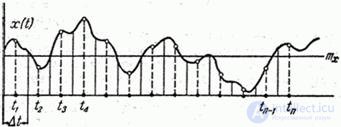
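Formula (17.8.4) can be sketched the same way. The function below assumes the realization has already been centered as in (17.8.3). It integrates only over $[0, T-\tau]$, the range where both factors are known. The hypothetical centered test signal $\mathring{x}(t) = \cos(2t + 0.7)$ is chosen because a harmonic oscillation of unit amplitude with random phase has the correlation function $K_x(\tau) = \tfrac12\cos 2\tau$, which gives the time average something to be compared against. Names are illustrative.

```python
import math
from typing import Callable


def time_avg_correlation(xc: Callable[[float], float], T: float, tau: float,
                         steps: int = 100_000) -> float:
    """Approximate K_x(tau) ~ 1/(T - tau) * integral_0^{T - tau} xc(t) xc(t + tau) dt
    (formula 17.8.4) by the trapezoidal rule; `xc` must be the centered realization."""
    if not 0.0 <= tau < T:
        raise ValueError("tau must satisfy 0 <= tau < T")
    length = T - tau  # xc(t + tau) is known only for t + tau <= T

    def product(t: float) -> float:
        return xc(t) * xc(t + tau)

    h = length / steps
    total = 0.5 * (product(0.0) + product(length))
    for k in range(1, steps):
        total += product(k * h)
    return total * h / length


def centered_realization(t: float) -> float:
    """Hypothetical centered realization: a harmonic with fixed phase 0.7."""
    return math.cos(2.0 * t + 0.7)


for tau in (0.0, 0.5, 1.0, 1.5):
    estimate = time_avg_correlation(centered_realization, T=1000.0, tau=tau)
    print(f"tau = {tau}: time average {estimate:+.4f}, "
          f"0.5*cos(2*tau) = {0.5 * math.cos(2 * tau):+.4f}")
```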

In practice, the integrals (17.8.1) and (17.8.4) are usually replaced by finite sums. Let us show how this is done. Divide the recording interval of the random function into $n$ equal parts of length $\Delta t$, and denote the midpoints of the resulting sections by $t_1, t_2, \dots, t_n$ (Fig. 17.8.1).

Fig. 17.8.1.

Provide integral (17.8.1) as the sum of integrals over elementary parts  and on each of them we will carry out the function

and on each of them we will carry out the function  from the integral sign, the average value corresponding to the center of the interval

from the integral sign, the average value corresponding to the center of the interval  . We get approximately:

. We get approximately:

,

,

or

. (17.8.5)

. (17.8.5)

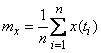
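Because $T = n\,\Delta t$, the midpoint-rule form $\frac{1}{T}\sum x(t_i)\,\Delta t$ and the plain average (17.8.5) coincide, and the step $\Delta t$ cancels. The short sketch below checks this numerically. The four sample values are just the first four readings of Table 17.8.1, used here only to exercise the code, and the function names are hypothetical.

```python
from typing import Sequence


def mean_from_midpoints(values: Sequence[float], dt: float) -> float:
    """m_x ~ (1/T) * sum x(t_i) * dt, with record length T = n * dt."""
    T = len(values) * dt
    return sum(v * dt for v in values) / T


def mean_simple(values: Sequence[float]) -> float:
    """Formula (17.8.5): m_x ~ (1/n) * sum x(t_i)."""
    return sum(values) / len(values)


sample = [1.0, 1.3, 1.1, 0.7]
print(mean_from_midpoints(sample, dt=2.0), mean_simple(sample))  # both about 1.025
```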

Similarly, you can calculate the correlation function for the values  equal

equal  . We give, for example, the value

. We give, for example, the value  value

value

calculate the integral (17.8.4), dividing the integration interval

on  equal length plots

equal length plots  and putting on each of them a function

and putting on each of them a function  over the integral sign of the mean value. We get:

over the integral sign of the mean value. We get:

,

,

or finally

. (17.8.6)

. (17.8.6)

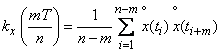
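Here is a direct transcription of (17.8.3) and (17.8.6) for equally spaced readings, offered as a sketch. Indices are 0-based in the code, so `xc[i + m]` plays the role of $\mathring{x}(t_{i+m})$, and the lag $\tau = m\,\Delta t$ is passed as the integer `m`. The function names and the alternating test readings are hypothetical.

```python
from typing import List, Sequence


def center(values: Sequence[float]) -> List[float]:
    """Formula (17.8.3): subtract the sample mean (17.8.5) from every reading."""
    m_x = sum(values) / len(values)
    return [v - m_x for v in values]


def correlation_at_lag(xc: Sequence[float], m: int) -> float:
    """Formula (17.8.6): K_x(m*dt) ~ 1/(n - m) * sum_{i=1}^{n-m} xc(t_i) * xc(t_{i+m})."""
    n = len(xc)
    if not 0 <= m < n:
        raise ValueError("lag index m must satisfy 0 <= m < n")
    return sum(xc[i] * xc[i + m] for i in range(n - m)) / (n - m)


readings = [2.0, 0.0, 2.0, 0.0, 2.0, 0.0]  # hypothetical readings with mean 1
xc = center(readings)                       # [1.0, -1.0, 1.0, -1.0, 1.0, -1.0]
print([correlation_at_lag(xc, m) for m in range(3)])  # [1.0, -1.0, 1.0]
```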

The correlation function is calculated by formula (17.8.6) successively for $m = 0, 1, 2, \dots$, up to values of $m$ at which it becomes almost zero or begins to make small irregular oscillations around zero. The general course of the function $K_x(\tau)$ is reproduced from the individual points (Fig. 17.8.2).

Fig. 17.8.2.
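The successive computation for $m = 0, 1, 2, \dots$ can be sketched as a loop. The text leaves "almost zero" to the judgment of the person processing the data. The code therefore assumes a hypothetical criterion: it stops at the first lag where $|K_x| \le$ `rel_tol` $\cdot K_x(0)$. It also assumes a hypothetical cap `max_m = n // 4`, so that the sums in (17.8.6) keep a reasonable number of terms. Both choices, and the test series, are illustrative rather than taken from the text.

```python
from typing import List, Optional, Sequence


def correlation_sequence(xc: Sequence[float], rel_tol: float = 0.05,
                         max_m: Optional[int] = None) -> List[float]:
    """Evaluate formula (17.8.6) for m = 0, 1, 2, ... on a centered series `xc`.
    Stops at the first m with |K(m)| <= rel_tol * K(0) (hypothetical rule)
    or when m reaches max_m (hypothetical default n // 4)."""
    n = len(xc)
    if max_m is None:
        max_m = n // 4
    k0 = sum(v * v for v in xc) / n
    values = [k0]
    for m in range(1, max_m + 1):
        k = sum(xc[i] * xc[i + m] for i in range(n - m)) / (n - m)
        values.append(k)
        if abs(k) <= rel_tol * k0:
            break
    return values


# Hypothetical centered series: pairs of equal values with alternating sign.
series = [1.0, 1.0, -1.0, -1.0] * 5
print(correlation_sequence(series, rel_tol=0.1))  # stops after m = 1
```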

For the expectation $m_x$ and the correlation function $K_x(\tau)$ to be determined with satisfactory accuracy, the number of points $n$ must be large enough: on the order of a hundred, and in some cases even several hundred. The length of the elementary section $\Delta t$ is chosen according to how the random function varies. If the random function varies relatively smoothly, $\Delta t$ can be chosen larger than when the function makes sharp and frequent oscillations. The higher the frequency content of the oscillations that make up the random function, the more densely the reference points must be placed during processing. As a rough guide, choose the elementary section $\Delta t$ so that there are about 5 to 10 reference points per full period of the highest-frequency harmonic present in the random function.
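The rule of thumb of 5 to 10 reference points per period is simple arithmetic. The sketch below assumes the highest-frequency harmonic is known as an angular frequency `omega_max` in rad/sec. That is a hypothetical input; in practice it has to be estimated. With 5 to 10 points per period $2\pi/\omega_{\max}$, the step lies between $2\pi/(10\,\omega_{\max})$ and $2\pi/(5\,\omega_{\max})$, and the number of points in a record of length $T$ is $n = T/\Delta t$. Function names are illustrative.

```python
import math
from typing import Tuple


def step_bounds(omega_max: float,
                points_per_period: Tuple[int, int] = (5, 10)) -> Tuple[float, float]:
    """Return (smallest, largest) step dt that gives 5-10 reference points
    per full period 2*pi/omega_max of the highest-frequency harmonic."""
    period = 2.0 * math.pi / omega_max
    fewest, most = points_per_period
    return period / most, period / fewest


def number_of_points(T: float, dt: float) -> int:
    """Number n of elementary sections of length dt in a record of length T."""
    return round(T / dt)


# Hypothetical highest harmonic with a period of 2 sec (omega_max = pi rad/sec):
print(step_bounds(math.pi))          # roughly (0.2, 0.4) sec
# A 200-sec record sampled every 2 sec, as in the example below:
print(number_of_points(200.0, 2.0))  # 100
```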

Often the choice of reference points does not depend on the person processing the data at all, but is dictated by the rate of the recording equipment. In this case the material obtained directly from the experiment should be processed as is, without trying to insert intermediate values between the observed ones: this cannot improve the accuracy of the result and only complicates the processing unnecessarily.

Example. During horizontal flight of an aircraft, the vertical overload acting on the aircraft was recorded over a time interval of 200 sec at intervals of 2 sec. The results are given in Table 17.8.1.

Table 17.8.1

| $t$ (sec) | Overload $N$ | $t$ (sec) | Overload $N$ | $t$ (sec) | Overload $N$ | $t$ (sec) | Overload $N$ |
|---|---|---|---|---|---|---|---|
| 0 | 1.0 | 50 | 1.0 | 100 | 1.2 | 150 | 0.8 |
| 2 | 1.3 | 52 | 1.1 | 102 | 1.4 | 152 | 0.6 |
| 4 | 1.1 | 54 | 1.5 | 104 | 0.8 | 154 | 0.9 |
| 6 | 0.7 | 56 | 1.0 | 106 | 0.9 | 156 | 1.2 |
| 8 | 0.7 | 58 | 0.8 | 108 | 1.0 | 158 | 1.3 |
| 10 | 1.1 | 60 | 1.1 | 110 | 0.8 | 160 | 0.9 |
| 12 | 1.3 | 62 | 1.1 | 112 | 0.8 | 162 | 1.3 |
| 14 | 0.8 | 64 | 1.2 | 114 | 1.4 | 164 | 1.5 |
| 16 | 0.8 | 66 | 1.0 | 116 | 1.6 | 166 | 1.2 |
| 18 | 0.4 | 68 | 0.8 | 118 | 1.7 | 168 | 1.4 |
| 20 | 0.3 | 70 | 0.8 | 120 | 1.3 | 170 | 1.4 |
| 22 | 0.3 | 72 | 1.2 | 122 | 1.6 | 172 | 0.8 |
| 24 | 0.6 | 74 | 0.7 | 124 | 0.8 | 174 | 0.8 |
| 26 | 0.3 | 76 | 0.7 | 126 | 1.2 | 176 | 1.3 |
| 28 | 0.5 | 78 | 1.1 | 128 | 0.6 | 178 | 1.0 |
| 30 | 0.5 | 80 | 1.2 | 130 | 1.0 | 180 | 0.7 |
| 32 | 0.7 | 82 | 1.0 | 132 | 0.3 | 182 | 1.1 |
| 34 | 0.8 | 84 | 0.6 | 134 | 0.8 | 184 | 0.9 |
| 36 | 0.6 | 86 | 0.9 | 136 | 0.7 | 186 | 0.9 |
| 38 | 1.0 | 88 | 0.8 | 138 | 0.9 | 188 | 1.1 |
| 40 | 0.5 | 90 | 0.8 | 140 | 1.3 | 190 | 1.2 |
| 42 | 1.0 | 92 | 0.9 | 142 | 1.5 | 192 | 1.3 |
| 44 | 0.9 | 94 | 0.9 | 144 | 1.1 | 194 | 1.3 |
| 46 | 1.4 | 96 | 0.6 | 146 | 0.7 | 196 | 1.6 |
| 48 | 1.4 | 98 | 0.4 | 148 | 1.0 | 198 | 1.5 |

Considering the process of changing overload stationary, to determine the approximate expectation of overload  variance

variance  and normalized correlation function

and normalized correlation function  . Approximate

. Approximate  any analytical function, find and build the spectral density of a random process.

any analytical function, find and build the spectral density of a random process.

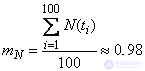

Solution. By formula (17.8.5) we have:

$$m_N \approx \frac{1}{100}\sum_{i=1}^{100} N(t_i) \approx 0.98.$$
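The same computation in code serves as a check on the arithmetic. The list below is Table 17.8.1 read column by column, so the readings are in time order $t = 0, 2, \dots, 198$ sec.

```python
# Table 17.8.1, read column by column (t = 0, 2, ..., 198 sec).
N = [
    1.0, 1.3, 1.1, 0.7, 0.7, 1.1, 1.3, 0.8, 0.8, 0.4, 0.3, 0.3, 0.6,  # t = 0..24
    0.3, 0.5, 0.5, 0.7, 0.8, 0.6, 1.0, 0.5, 1.0, 0.9, 1.4, 1.4,       # t = 26..48
    1.0, 1.1, 1.5, 1.0, 0.8, 1.1, 1.1, 1.2, 1.0, 0.8, 0.8, 1.2, 0.7,  # t = 50..74
    0.7, 1.1, 1.2, 1.0, 0.6, 0.9, 0.8, 0.8, 0.9, 0.9, 0.6, 0.4,       # t = 76..98
    1.2, 1.4, 0.8, 0.9, 1.0, 0.8, 0.8, 1.4, 1.6, 1.7, 1.3, 1.6, 0.8,  # t = 100..124
    1.2, 0.6, 1.0, 0.3, 0.8, 0.7, 0.9, 1.3, 1.5, 1.1, 0.7, 1.0,       # t = 126..148
    0.8, 0.6, 0.9, 1.2, 1.3, 0.9, 1.3, 1.5, 1.2, 1.4, 1.4, 0.8, 0.8,  # t = 150..174
    1.3, 1.0, 0.7, 1.1, 0.9, 0.9, 1.1, 1.2, 1.3, 1.3, 1.6, 1.5,       # t = 176..198
]

# Formula (17.8.5): plain average of the n = 100 readings.
m_N = sum(N) / len(N)
print(f"n = {len(N)}, m_N = {m_N:.3f}")  # m_N = 0.976, i.e. about 0.98
```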

We center the random function (Table 17.8.2).

Table 17.8.2

| $t$ (sec) | $\mathring{N}(t)$ | $t$ (sec) | $\mathring{N}(t)$ | $t$ (sec) | $\mathring{N}(t)$ | $t$ (sec) | $\mathring{N}(t)$ |
|---|---|---|---|---|---|---|---|
| 0 | 0.02 | 50 | 0.02 | 100 | 0.22 | 150 | -0.18 |
| 2 | 0.32 | 52 | 0.12 | 102 | 0.42 | 152 | -0.38 |
| 4 | 0.12 | 54 | 0.52 | 104 | -0.18 | 154 | -0.08 |
| 6 | -0.28 | 56 | 0.02 | 106 | -0.08 | 156 | 0.22 |
| 8 | -0.28 | 58 | -0.18 | 108 | 0.02 | 158 | 0.32 |
| 10 | 0.12 | 60 | 0.12 | 110 | -0.18 | 160 | -0.08 |
| 12 | 0.32 | 62 | 0.12 | 112 | -0.18 | 162 | 0.32 |
| 14 | -0.18 | 64 | 0.22 | 114 | 0.42 | 164 | 0.52 |
| 16 | -0.18 | 66 | 0.02 | 116 | 0.62 | 166 | 0.22 |
| 18 | -0.58 | 68 | -0.18 | 118 | 0.72 | 168 | 0.42 |
| 20 | -0.68 | 70 | -0.18 | 120 | 0.32 | 170 | 0.42 |
| 22 | -0.68 | 72 | 0.22 | 122 | 0.62 | 172 | -0.18 |
| 24 | -0.38 | 74 | -0.28 | 124 | -0.18 | 174 | -0.18 |
| 26 | -0.68 | 76 | -0.28 | 126 | 0.22 | 176 | 0.32 |
| 28 | -0.48 | 78 | 0.12 | 128 | -0.38 | 178 | 0.02 |
| 30 | -0.48 | 80 | 0.52 | 130 | 0.02 | 180 | -0.28 |
| 32 | -0.28 | 82 | 0.02 | 132 | -0.38 | 182 | 0.12 |
| 34 | -0.18 | 84 | -0.38 | 134 | -0.18 | 184 | -0.08 |
| 36 | -0.38 | 86 | -0.08 | 136 | -0.28 | 186 | -0.08 |
| 38 | 0.02 | 88 | -0.18 | 138 | -0.08 | 188 | 0.12 |
| 40 | -0.48 | 90 | -0.18 | 140 | 0.32 | 190 | 0.22 |
| 42 | 0.02 | 92 | -0.08 | 142 | 0.52 | 192 | 0.32 |
| 44 | -0.08 | 94 | -0.08 | 144 | 0.12 | 194 | 0.32 |
| 46 | 0.42 | 96 | -0.38 | 146 | -0.28 | 196 | 0.62 |
| 48 | 0.42 | 98 | -0.58 | 148 | 0.02 | 198 | 0.52 |

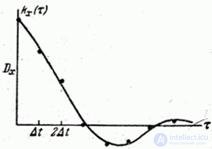

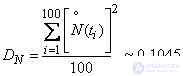

Squaring all values  and dividing the amount by

and dividing the amount by  we obtain approximately the variance of the random function

we obtain approximately the variance of the random function  :

:

and standard deviation:

.

.
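Here is a sketch of the variance computation. The list holds the centered values of Table 17.8.2 exactly as printed, read column by column. As in the text, the sum of squares is divided by $n = 100$.

```python
import math

# Table 17.8.2 (centered overload), read column by column: t = 0, 2, ..., 198 sec.
Nc = [
    0.02, 0.32, 0.12, -0.28, -0.28, 0.12, 0.32, -0.18, -0.18, -0.58, -0.68, -0.68, -0.38,
    -0.68, -0.48, -0.48, -0.28, -0.18, -0.38, 0.02, -0.48, 0.02, -0.08, 0.42, 0.42,
    0.02, 0.12, 0.52, 0.02, -0.18, 0.12, 0.12, 0.22, 0.02, -0.18, -0.18, 0.22, -0.28,
    -0.28, 0.12, 0.52, 0.02, -0.38, -0.08, -0.18, -0.18, -0.08, -0.08, -0.38, -0.58,
    0.22, 0.42, -0.18, -0.08, 0.02, -0.18, -0.18, 0.42, 0.62, 0.72, 0.32, 0.62, -0.18,
    0.22, -0.38, 0.02, -0.38, -0.18, -0.28, -0.08, 0.32, 0.52, 0.12, -0.28, 0.02,
    -0.18, -0.38, -0.08, 0.22, 0.32, -0.08, 0.32, 0.52, 0.22, 0.42, 0.42, -0.18, -0.18,
    0.32, 0.02, -0.28, 0.12, -0.08, -0.08, 0.12, 0.22, 0.32, 0.32, 0.62, 0.52,
]

D_N = sum(v * v for v in Nc) / len(Nc)  # sum of squares divided by n = 100
sigma_N = math.sqrt(D_N)
print(f"D_N = {D_N:.4f}, sigma_N = {sigma_N:.3f}")  # about 0.1045 and 0.323
```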

Multiplying the values $\mathring{N}(t)$ separated by the interval $\tau = 2, 4, 6, \dots$ sec and dividing the sums of the products by $n-1 = 99$, $n-2 = 98$, $n-3 = 97$, ..., respectively, we obtain the values of the correlation function $K_N(\tau)$. Normalizing the correlation function by dividing it by $D_N = K_N(0)$, we obtain the table of values of the function $r_N(\tau)$ (Table 17.8.3).

Table 17.8.3

|

|

|

0 | 1,000 | 1,000 |

2 | 0,505 | 0.598 |

four | 0.276 | 0.358 |

6 | 0.277 | 0.214 |

eight | 0,231 | 0.128 |

ten | -0,015 | 0.077 |

12 | 0.014 | 0.046 |

14 | 0.071 | 0.027 |

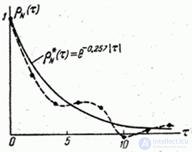
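The computation behind the $r_N(\tau)$ column of Table 17.8.3 can be sketched the same way. The code applies formula (17.8.6) to the centered values of Table 17.8.2, then divides by $D_N = K_N(0)$. The data list repeats Table 17.8.2 so that the block runs on its own. With these values the lag $\tau = 2$ sec gives $r_N \approx 0.505$, as in the table. The helper name `corr` is illustrative.

```python
# Table 17.8.2 (centered overload), column by column: t = 0, 2, ..., 198 sec.
Nc = [
    0.02, 0.32, 0.12, -0.28, -0.28, 0.12, 0.32, -0.18, -0.18, -0.58, -0.68, -0.68, -0.38,
    -0.68, -0.48, -0.48, -0.28, -0.18, -0.38, 0.02, -0.48, 0.02, -0.08, 0.42, 0.42,
    0.02, 0.12, 0.52, 0.02, -0.18, 0.12, 0.12, 0.22, 0.02, -0.18, -0.18, 0.22, -0.28,
    -0.28, 0.12, 0.52, 0.02, -0.38, -0.08, -0.18, -0.18, -0.08, -0.08, -0.38, -0.58,
    0.22, 0.42, -0.18, -0.08, 0.02, -0.18, -0.18, 0.42, 0.62, 0.72, 0.32, 0.62, -0.18,
    0.22, -0.38, 0.02, -0.38, -0.18, -0.28, -0.08, 0.32, 0.52, 0.12, -0.28, 0.02,
    -0.18, -0.38, -0.08, 0.22, 0.32, -0.08, 0.32, 0.52, 0.22, 0.42, 0.42, -0.18, -0.18,
    0.32, 0.02, -0.28, 0.12, -0.08, -0.08, 0.12, 0.22, 0.32, 0.32, 0.62, 0.52,
]

DT = 2.0  # sampling interval, sec


def corr(xc, m):
    """Formula (17.8.6): mean product of values m steps (m * DT sec) apart."""
    n = len(xc)
    return sum(xc[i] * xc[i + m] for i in range(n - m)) / (n - m)


D_N = corr(Nc, 0)                             # K_N(0) = D_N
r_N = [corr(Nc, m) / D_N for m in range(8)]   # tau = 0, 2, ..., 14 sec
for m, r in enumerate(r_N):
    print(f"tau = {m * DT:4.0f} sec   r_N = {r:+.3f}")
```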

The graph of the function $r_N(\tau)$ is shown in Fig. 17.8.3 as points connected by a dashed line.

Fig. 17.8.3.

The not entirely smooth course of the correlation function can be explained by the insufficient amount of experimental data (the experiment was not long enough), so random irregularities in the course of the function do not have time to smooth out. The calculation of $r_N(\tau)$ is continued up to values of $\tau$ at which the correlation practically disappears.

To smooth out the obviously irregular oscillations of the experimentally found function $r_N(\tau)$, we approximately replace it by a function of the form

$$\rho(\tau) = e^{-\alpha|\tau|},$$

where the parameter $\alpha$ is selected by the method of least squares (see 14.5).

Using this method, we find  . Calculating function values

. Calculating function values  at

at  Let's build a graph of a smoothing curve. In fig. 17.8.3 he held a solid line. The last column of the table 17.8.3 shows the values of the function.

Let's build a graph of a smoothing curve. In fig. 17.8.3 he held a solid line. The last column of the table 17.8.3 shows the values of the function.  .

.

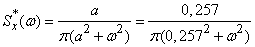
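The smoothing step can be sketched in two parts. First, the code recomputes $\rho(\tau) = e^{-0.257\tau}$ at $\tau = 0, 2, \dots, 14$, which reproduces the last column of Table 17.8.3. Second, it fits $\alpha$ by an unweighted least-squares grid search over the eight tabulated values of $r_N(\tau)$. This second part is an assumed, simplified version of the procedure: the text does not say which points or weights were used, so the fitted value lands near, but need not coincide exactly with, $\alpha = 0.257$. The grid and helper names are hypothetical.

```python
import math

TAU = [0, 2, 4, 6, 8, 10, 12, 14]                                # sec
R_N = [1.000, 0.505, 0.276, 0.277, 0.231, -0.015, 0.014, 0.071]  # Table 17.8.3


def rho(tau: float, alpha: float) -> float:
    """Smoothing function rho(tau) = exp(-alpha * |tau|)."""
    return math.exp(-alpha * abs(tau))


def sse(alpha: float) -> float:
    """Sum of squared deviations of rho from the experimental r_N values."""
    return sum((r - rho(t, alpha)) ** 2 for t, r in zip(TAU, R_N))


# Last column of Table 17.8.3, recomputed from alpha = 0.257:
print([round(rho(t, 0.257), 3) for t in TAU])
# [1.0, 0.598, 0.358, 0.214, 0.128, 0.077, 0.046, 0.027]

# Hypothetical simplified least-squares fit: grid search over alpha in (0, 2].
grid = [k / 1000 for k in range(1, 2001)]
alpha_fit = min(grid, key=sse)
print(f"unweighted least-squares alpha = {alpha_fit:.3f}")
```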

Using the approximate expression of the correlation function (17.8.6), we obtain (see  17.4, example 1) the normalized spectral density of a random process in the form:

17.4, example 1) the normalized spectral density of a random process in the form:

.

.

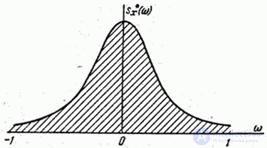

The graph of the normalized spectral density is presented in Fig. 17.8.4.

Fig. 17.8.4.
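As a numerical check of the closed form $s(\omega) = 2\alpha/\bigl(\pi(\alpha^2 + \omega^2)\bigr)$ for $\rho(\tau) = e^{-\alpha|\tau|}$ with $\alpha = 0.257$, the sketch below evaluates the cosine transform $s(\omega) = \frac{2}{\pi}\int_0^\infty e^{-\alpha\tau}\cos\omega\tau\,d\tau$ by the trapezoidal rule and compares the two. It assumes the one-sided convention on $0 \le \omega < \infty$, under which this density integrates to 1. The truncation point `tau_max` and the step count are hypothetical numerical choices.

```python
import math

ALPHA = 0.257  # fitted parameter from the example


def s_closed(omega: float, alpha: float = ALPHA) -> float:
    """Normalized spectral density 2*alpha / (pi * (alpha^2 + omega^2))."""
    return 2.0 * alpha / (math.pi * (alpha ** 2 + omega ** 2))


def s_numeric(omega: float, alpha: float = ALPHA,
              tau_max: float = 100.0, steps: int = 100_000) -> float:
    """(2/pi) * integral_0^tau_max exp(-alpha*tau) * cos(omega*tau) dtau, trapezoidal rule.
    exp(-alpha * tau_max) is about 7e-12 here, so the truncation error is negligible."""
    def integrand(tau: float) -> float:
        return math.exp(-alpha * tau) * math.cos(omega * tau)

    h = tau_max / steps
    total = 0.5 * (integrand(0.0) + integrand(tau_max))
    for k in range(1, steps):
        total += integrand(k * h)
    return 2.0 / math.pi * total * h


for omega in (0.0, 0.1, 0.25, 0.5, 1.0):
    print(f"omega = {omega:4.2f}: closed form {s_closed(omega):.4f}, "
          f"numeric {s_numeric(omega):.4f}")
```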


Probability theory. Mathematical Statistics and Stochastic Analysis
