Lecture

Many people have run into the problem of a slow blog or site. The usual answer is to optimize the scripts so the site runs faster, but many forget that even a site with little traffic can generate a lot of work, and the hosting will not always cope adequately.

To deal with this, you can enable page caching or database query caching. Handling the consequences of load this way is undoubtedly necessary.

However, part of the load on the site comes from search engine robots and ordinary spammers, and each of them contributes its share.

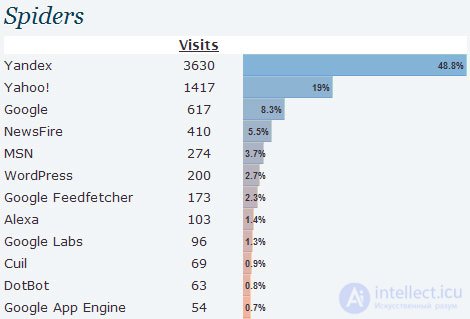

Let's look at the statistics collected by the StatPress plugin for WordPress. It does not recognize everything, but it reliably identifies the major search engines and their bots:

As we can see, the load comes not only from users; robots account for a huge share of it.

There are all kinds of bots here, and this is only the tip of the iceberg:

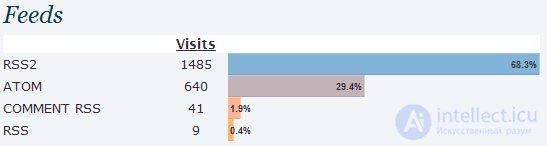

The plugin provides a lot of useful data, including the number of RSS feed requests.

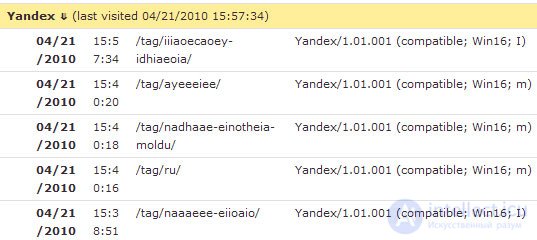

From this data we can determine exactly which search robots are useful to us:

The data on the screen shows that the main Yandex indexer visited (Yandex/1.01.001 (compatible; Win16; I)), as did the robot that indexes multimedia content (Yandex/1.01.001 (compatible; Win16; m)).

When promoting a site, you should analyze this data to understand how search engines index it, what they index, and how often. Your forecasts of the effect of any change to the site will then be more accurate.

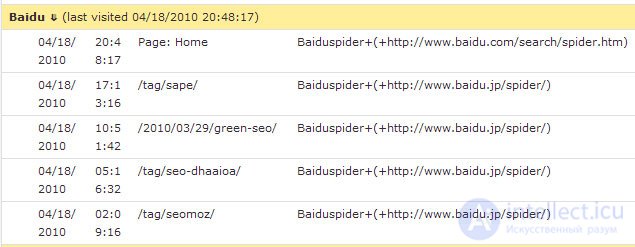

We also see robots that are of little interest to us: they load the site, but we receive little or no traffic from them, and what traffic they do bring is poorly targeted:

And here comes the most interesting point, one that many people miss.

This report shows the exact user agent. It can, of course, be disallowed in the robots.txt file, but that measure is not reliable, because search engines have repeatedly shown that they can ignore this file and its directives.

Instead, we need to turn robots away with the .htaccess file, that is, block them at the server level so they never get access to the site at all. This can be done either by user agent or by IP address.
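A minimal sketch of both approaches, in the same Apache 2.2-style syntax used later in this article. The user agent "ExampleBot" and the address 192.0.2.10 (a range reserved for documentation) are placeholders, not real visitors to block:

```apache
# Placeholder values: replace "ExampleBot" and 192.0.2.10 with what your own logs show.

# 1. By user agent: tag matching requests with an environment variable
#    (case-insensitive regular-expression match on the User-Agent header).
SetEnvIfNoCase User-Agent "ExampleBot" bad_bot

# 2. Allow everyone, then deny the tagged requests and one IP address.
Order Allow,Deny
Allow from all
Deny from env=bad_bot
Deny from 192.0.2.10
```

Requests refused this way receive a 403 Forbidden response instead of the page.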

Naturally, you need to be absolutely sure that you are blocking only unwanted visitors, or you can do serious damage out of ignorance.

Google's robot alone comes under at least six different user agents.

Analyzing the site logs also lets you check whether the hosting is working correctly.

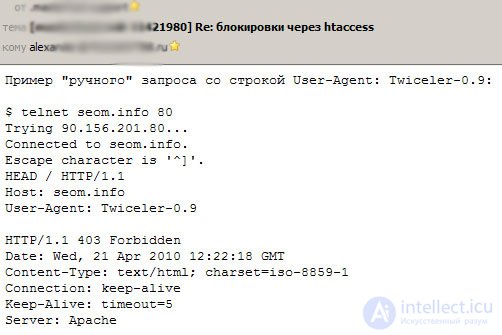

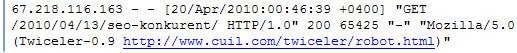

As you can see, a manual request with the Twiceler-0.9 search robot's user agent should in theory be refused, because the blocking directive is written in .htaccess, but the server logs show a different picture:

You therefore have solid grounds to write to the support service and ask them to fix the problem: the robot was not blocked, since the log shows status code 200 instead of the expected 403.
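For reference, a rule of the kind described for Twiceler-0.9 might look like the sketch below; the variable name block_twiceler is hypothetical. To check it, request any page while sending that user agent (for example with curl's -A option) and confirm that the access log records 403 rather than 200:

```apache
# Hypothetical rule: refuse any client whose User-Agent contains "Twiceler"
# (matches "Twiceler-0.9" and later versions; NoCase ignores letter case).
SetEnvIfNoCase User-Agent "Twiceler" block_twiceler
Order Allow,Deny
Allow from all
Deny from env=block_twiceler
```

If the log still shows 200, one possible cause is that the server does not let .htaccess use these directives (SetEnvIf needs AllowOverride FileInfo, and Order/Allow/Deny need AllowOverride Limit), which is worth mentioning to support.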

By blocking robots in .htaccess, you reduce the load on the server.

The user agent does not always reflect reality: a client can set it to anything and even pose as Yandex, so the safer option is to block the IP address. If you need to block someone with complete certainty, it is better to use both directives.
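A sketch of duplicating both directives for one unwanted crawler, so it is refused whether it is recognized by its user agent or by its address. "ExampleBot" and the 192.0.2.0/24 range (reserved for documentation) are placeholders:

```apache
# Placeholders: "ExampleBot" and 192.0.2.0/24 stand in for a real bot's
# user agent and the network it crawls from.
SetEnvIfNoCase User-Agent "ExampleBot" bad_bot
Order Allow,Deny
Allow from all
# Refused if the User-Agent matches...
Deny from env=bad_bot
# ...or if the request comes from the bot's network, whatever it calls itself.
Deny from 192.0.2.0/24
```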

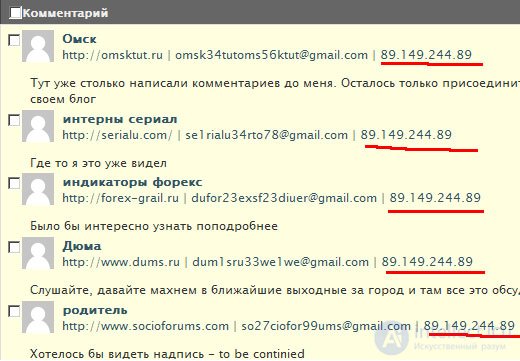

You can deal in the same way not only with search engine robots but also with spammers. Let's look at who is spamming us.

Identify patterns and IP addresses, then block them. If spam comes from Chinese servers, for example, you can even block whole subnets.
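Apache 2.2's Deny accepts a subnet in several forms. A sketch using ranges reserved for documentation as placeholders (they are not real spam sources):

```apache
Order Allow,Deny
Allow from all
# Partial address: every IP that starts with 203.0.113.
Deny from 203.0.113
# CIDR notation: 198.51.100.0 through 198.51.100.255
Deny from 198.51.100.0/24
# Network/netmask pair, equivalent to 192.0.2.0/24
Deny from 192.0.2.0/255.255.255.0
```

Before blocking a whole subnet, check the logs for legitimate visitors from the same range, since they will be refused too.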

Spam blogs can even be useful to you. How?

You collect statistics on the sites that inflate their metrics this way, and then confidently add them to the blacklist on the link exchange (one more way to fill it). These sites will not pass on link weight, however authoritative they may seem, and sooner or later they will be sanctioned. Besides, it is not worth overpaying for artificially inflated metrics, since those metrics determine the price of a link.

My .htaccess file currently has more than 300 lines of restrictions.

All of the above was a lyrical digression; now on to practice and how I do it.

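```apache
# Assumed: how the search_bot variable is set (this step is not in the listing);
# tags ia_archiver and msnbot by User-Agent, ignoring letter case.
SetEnvIfNoCase User-Agent "ia_archiver" search_bot
SetEnvIfNoCase User-Agent "msnbot" search_bot

# Applies only to GET, POST and HEAD requests.
<Limit GET POST HEAD>
# Allow rules are evaluated first, then Deny rules override them.
Order Allow,Deny
Allow from all
Deny from env=search_bot
Deny from 87.118.86.131
# Partial addresses block the whole 89.149.241.x to 89.149.244.x ranges.
Deny from 89.149.241
Deny from 89.149.242
Deny from 89.149.243
Deny from 89.149.244
</Limit>
```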

To explain: these rules block the ia_archiver (Web Archive) and msnbot (MSN/Bing search engine) robots, as well as several IP addresses that are 100% spammers.
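Order, Allow and Deny are Apache 2.2 directives; Apache 2.4 supports them only through the mod_access_compat module. A sketch of the same restrictions in 2.4's Require syntax, assuming search_bot is set by matching the ia_archiver and msnbot user agents:

```apache
# Apache 2.4 (mod_authz_core / mod_authz_host) sketch of the same restrictions.
# Assumed: search_bot is set by User-Agent matching, as below.
SetEnvIfNoCase User-Agent "ia_archiver" search_bot
SetEnvIfNoCase User-Agent "msnbot" search_bot

<RequireAll>
    # Start from "everyone may enter"...
    Require all granted
    # ...then exclude the tagged bots and the spammer addresses.
    Require not env search_bot
    Require not ip 87.118.86.131
    Require not ip 89.149.241 89.149.242 89.149.243 89.149.244
</RequireAll>
```

Unlike the version above, this applies to all request methods, not only GET, POST and HEAD. It is safer not to mix the old and new directive styles in the same file.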

Naturally, I will not publish my entire .htaccess file, but by collecting your own statistics you can not only reduce the load but also keep out unwanted visitors that scrape the site and send spam.

Analyze your site logs often: you can find many useful things there, and the data has many uses.

seo, smo, monetization, basics of internet marketing