Lecture

Link buying should be automated as much as possible, but that requires a lot of preparatory work. Any robot will follow your instructions, but you can make a number of mistakes and ruin what seemed like a finished, excellent idea.

It may seem that a link exchange offers plenty of donors, but in fact they have to be spent carefully. That is why correctly classifying the parameters used to reject links is such a pressing question.

In fact, the filters you create correlate rather weakly with effectiveness; more precisely, it is not the choice of filters that determines how effective the purchased links will be.

Across all my Sape accounts, including client accounts, I have more than 50 different filters, yet none of them determines the donor selection strategy.

A site with zero metrics can work no worse than one with impressive vanity metrics, and an off-topic site can work better than one that matches the topic 100%.

If you do not have a large sample of sites from which to build whitelists, you will have to act a little differently (note that whitelisted sites often turn gray or black, so they need to be rechecked constantly).

The main focus is on building blacklists, and for that you need a clear classification of parameters.

I know quite a few people whose blacklists grow to 70,000 sites in a year or two; many of them consider this perfectly normal and do not bother classifying parameters.

Blacklisting a site on the strength of a single parameter is neither a correct nor a rational approach.

Collecting the parameters for classification takes manual work with your hands and eyes, and partly your brain. After that, all the results should go into automatic processing, because automation saves a great deal of time.
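Once the parameters are collected, the automatic part can be pictured as a chain of barriers applied in order. The sketch below is a minimal illustration under stated assumptions, not the author's actual setup: the `Verdict` values, the `Candidate` record and the two toy barriers (`too_deep`, `adult_content`) are hypothetical names. The key idea is that each barrier returns a verdict of a specific scope (cancel one page, blacklist for one project, or blacklist globally), and the first non-passing verdict decides.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable


class Verdict(Enum):
    PASS = "pass"              # the link survives this barrier
    CANCEL = "cancel"          # reject this page only; the exchange will offer another
    PROJECT_BL = "project_bl"  # blacklist the site for one project
    GLOBAL_BL = "global_bl"    # blacklist the site for all projects


@dataclass
class Candidate:
    url: str
    # Hypothetical: whatever metrics you collected for this page/site.
    params: dict = field(default_factory=dict)


# A barrier inspects a candidate and returns a verdict.
Barrier = Callable[[Candidate], Verdict]


def run_pipeline(candidate: Candidate, barriers: list[Barrier]) -> Verdict:
    """Apply barriers in order; the first non-PASS verdict decides the outcome."""
    for barrier in barriers:
        verdict = barrier(candidate)
        if verdict is not Verdict.PASS:
            return verdict
    return Verdict.PASS


# Two toy barriers (illustrative rules only).
def too_deep(c: Candidate) -> Verdict:
    # Page-level sign: never blacklist, only cancel.
    return Verdict.CANCEL if c.params.get("depth", 0) > 3 else Verdict.PASS


def adult_content(c: Candidate) -> Verdict:
    # Site-level sign: strong enough for the global blacklist.
    return Verdict.GLOBAL_BL if c.params.get("adult") else Verdict.PASS


barriers = [adult_content, too_deep]
print(run_pipeline(Candidate("http://example.com/a", {"depth": 5}), barriers))  # → Verdict.CANCEL
```

Keeping the verdict scope explicit in the return type is what prevents a page-level sign from silently turning into a blacklist entry.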

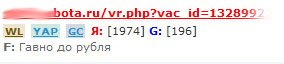

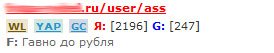

The first barrier sites pass through is the URL filter (LF), with which we try to identify in advance both sites that are quite likely to drop out of the index and undesirable pages.

As we can see, we already have two classifiers:

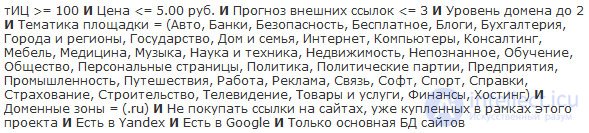

Quite a few sites are likely to be banned or filtered, for example a gallery site created specifically for selling links on Sape. For such sites we entered the following parameters:

We mark these sites and can safely add them to the global blacklist or to the project's blacklist.

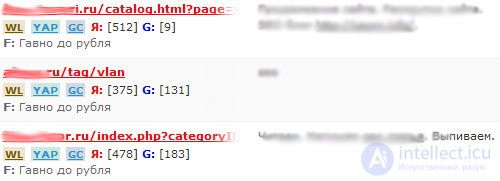

The following parameters are not tied to the characteristics of the site as a whole; they describe individual pages:

With these parameters we can screen out system pages that may be in the index but will sooner or later drop out as duplicate content: tag and archive pages, user profiles, registration pages and other system pages.

This classifier does not give us the right to blacklist the site, because these are not characteristics of the whole site. So we cancel these pages without adding the site to any blacklist; next time we will be offered other pages of the same site.
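The two URL classifiers can be sketched as two separate pattern groups: one matched against the host (a sign of the whole site) and one matched against the path (a sign of a single page). This is a hypothetical illustration; the patterns in `SITE_PATTERNS` and `PAGE_PATTERNS` are placeholders, not the parameters actually entered in the account.

```python
import re
from urllib.parse import urlparse

# Hypothetical patterns: replace them with the signs you collect by hand.
# Site-level signs: the host suggests a link-selling site
# (for example, a gallery built specifically for Sape).
SITE_PATTERNS = [re.compile(p) for p in (r"gallery", r"foto")]
# Page-level signs: system pages that tend to drop out of the index as duplicates.
PAGE_PATTERNS = [re.compile(p) for p in (r"/tags?/", r"/archive/", r"/user/", r"/profile", r"/register")]


def url_filter(url: str) -> str:
    """Return 'blacklist' for a site-level hit, 'cancel' for a page-level hit, else 'pass'."""
    parsed = urlparse(url.lower())
    if any(p.search(parsed.netloc) for p in SITE_PATTERNS):
        return "blacklist"  # describes the whole site, so blacklisting is justified
    if any(p.search(parsed.path) for p in PAGE_PATTERNS):
        return "cancel"     # describes only this page: reject it, keep the site
    return "pass"


for u in ("http://best-gallery.ru/page1.html",
          "http://example.ru/tag/seo/",
          "http://example.ru/articles/1.html"):
    print(u, "->", url_filter(u))
```

Matching host and path separately is the point of the design: a `/tag/` page tells you nothing about the rest of the site, so it only ever produces `cancel`.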

I hope I have made it clear that there are site-level and page-level signs and that they must be clearly distinguished; otherwise you will not spend your donors wisely.

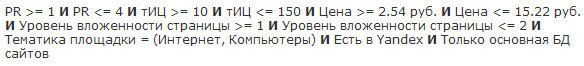

The second barrier is the number of pages in the index, and here you need to be careful. Its main goal is to weed out sites hit by Yandex's AGS filter, judging by the number of indexed pages.
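A minimal sketch of this barrier, assuming (as is commonly observed) that a site under AGS keeps only a handful of pages in the index. The threshold `MIN_INDEXED_PAGES = 10` is a hypothetical value to be tuned on your own data. The "be careful" part is modelled by refusing to blacklist when the index count could not be obtained.

```python
from typing import Optional

# Hypothetical threshold; tune it on your own data.
MIN_INDEXED_PAGES = 10


def index_filter(indexed_pages: Optional[int], min_indexed: int = MIN_INDEXED_PAGES) -> str:
    """Site-level check: too few indexed pages suggests the site is under AGS."""
    if indexed_pages is None:
        # The check failed (e.g. timeout or captcha): do not punish the site, recheck later.
        return "recheck"
    if indexed_pages < min_indexed:
        return "blacklist"
    return "pass"


print(index_filter(3), index_filter(None), index_filter(500))
```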

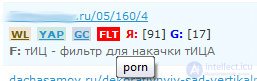

The third barrier is the content filter (TF). I have already partly described the methodology: donors can be filtered by many different parameters. Suppose a site contains porn and we have detected it. Without going into details, such a site deserves a blacklist.

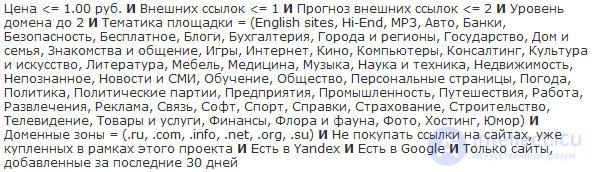

But not every site that matches the parameters listed below deserves a blacklist:

Here we can either cancel the page and be offered new ones, or add the site to a specific project's blacklist (BL), because it may not deserve the global one. I have already covered TF, so I will not go into detail here; I will answer any questions in the comments.
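The difference between global and project-level rejection can be sketched with two stop-word lists. Both lists below are hypothetical examples, not the author's real lists; only "porno" comes from the text. Words that are unacceptable anywhere send the site to the global blacklist, while words that are merely wrong for one project send it to that project's blacklist.

```python
import re

# Hypothetical stop-word lists; real lists are collected by hand.
GLOBAL_STOP_WORDS = frozenset({"porno"})              # unacceptable for any project
PROJECT_STOP_WORDS = frozenset({"alcohol", "vodka"})  # unacceptable only for this project


def text_filter(page_text: str,
                global_words: frozenset = GLOBAL_STOP_WORDS,
                project_words: frozenset = PROJECT_STOP_WORDS) -> str:
    """Return 'global_bl', 'project_bl' or 'pass' for a page's text."""
    words = set(re.findall(r"\w+", page_text.lower()))
    if words & global_words:
        return "global_bl"
    if words & project_words:
        # The softer case: blacklist for this project only (cancelling the page is the other option).
        return "project_bl"
    return "pass"


print(text_filter("Cheap vodka delivery"))
```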

The fourth barrier covers the number of external links, the number of internal links and the amount of content per page (BC).

Everything is simple here: you need to understand clearly that these are characteristics of the page, so they do not belong in the global blacklist. It is best to cancel the page, and we will be offered a new one.
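Because these are page metrics, the corresponding check only ever cancels. The sketch below assumes hypothetical limits (`MAX_EXTERNAL`, `MAX_INTERNAL`, `MIN_TEXT_CHARS`) and measures content in characters; the real thresholds are whatever you set in your filters.

```python
from dataclasses import dataclass


@dataclass
class PageMetrics:
    external_links: int
    internal_links: int
    text_chars: int  # amount of content on the page, in characters


# Hypothetical limits; tune them per project.
MAX_EXTERNAL = 10
MAX_INTERNAL = 150
MIN_TEXT_CHARS = 1000


def page_metrics_filter(m: PageMetrics) -> str:
    """Page-level check: never blacklist, only cancel so the exchange offers another page."""
    if (m.external_links > MAX_EXTERNAL
            or m.internal_links > MAX_INTERNAL
            or m.text_chars < MIN_TEXT_CHARS):
        return "cancel"
    return "pass"


print(page_metrics_filter(PageMetrics(external_links=50, internal_links=100, text_chars=3000)))
```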

The last stage is the nesting level (HC), as well as whether the page is in the Yandex index (YAP) and in the Yandex cache (YAC). These are also page characteristics, so we have no right to add such sites to blacklists: we would be drawing conclusions from a single page.
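A sketch of the final page-level stage, assuming the nesting level is counted with 1 as the home page and that the index and cache flags have already been obtained by some other means (how you check YAP and YAC is outside this example). `MAX_DEPTH = 3` is a hypothetical limit. As in the text, a failure here cancels the page and never blacklists the site.

```python
from dataclasses import dataclass


@dataclass
class PageStatus:
    depth: int      # nesting level (HC); assumed 1 = home page
    in_index: bool  # YAP: page found in the Yandex index
    in_cache: bool  # YAC: page found in the Yandex cache


MAX_DEPTH = 3  # hypothetical limit


def depth_and_index_filter(p: PageStatus, max_depth: int = MAX_DEPTH) -> str:
    """Page-level check: a bad result cancels the page, the site stays available."""
    if p.depth > max_depth or not p.in_index or not p.in_cache:
        return "cancel"
    return "pass"


print(depth_and_index_filter(PageStatus(depth=2, in_index=True, in_cache=False)))
```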

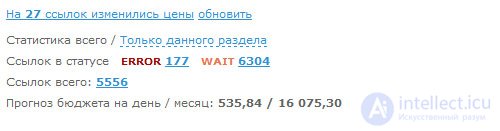

As a result of this balanced approach, we blacklisted 361 sites and 4,076 sites were cancelled. That means that out of 5,413 links we eliminated 4,976, keeping 437 links for more detailed review.
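The totals above can be checked with a couple of lines of arithmetic. This uses only the offered and eliminated counts from the text (5,413 and 4,976); `summarize` is a hypothetical helper name.

```python
def summarize(offered: int, eliminated: int) -> tuple[int, float]:
    """Return how many links remain for review and the share eliminated."""
    kept = offered - eliminated
    return kept, eliminated / offered


kept, share = summarize(5413, 4976)
print(kept, f"{share:.1%}")  # → 437 91.9%
```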

If we had wasted the donor pool and blacklisted everything without classifying the factors, we would have added 4,976 sites to the blacklist in a single day.

In my opinion this is unreasonable, yet I know many people who do exactly that without separating and classifying the parameters.

To date, over several years, only 41,000 sites have accumulated in my personal account's blacklist, all screened out by very strict parameters; had I blacklisted everything indiscriminately, there would already be 3 to 4 times as many.

Client accounts have smaller blacklists, but they have also been running for a shorter time.
