Lecture

The topic for this statistical post came up by itself when I decided to check the blacklist of sites on one of the SAPE accounts.

I have written about this topic many times: how to filter link mass properly. Naturally, the blog has the materials on which this blacklist was built.

All of those articles are based on experience gained through trial and error. Well... I think it is time to "audit" the account, which has a blacklist of 68,701 sites. This is my personal account, not a client one, where other rejection criteria are added on top. I described my filtering principles just above. Now let's look at the results.

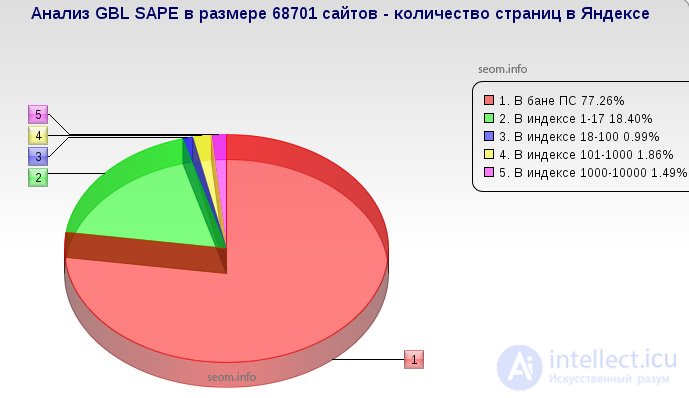

First of all, I wanted to find out what percentage of the base is banned or under the AGS filter, i.e. how well the preliminary screening parameters that flag sites for blacklisting actually work.

To do this, all 68,701 sites were checked in Yandex to find out how many indexed pages each of them has.

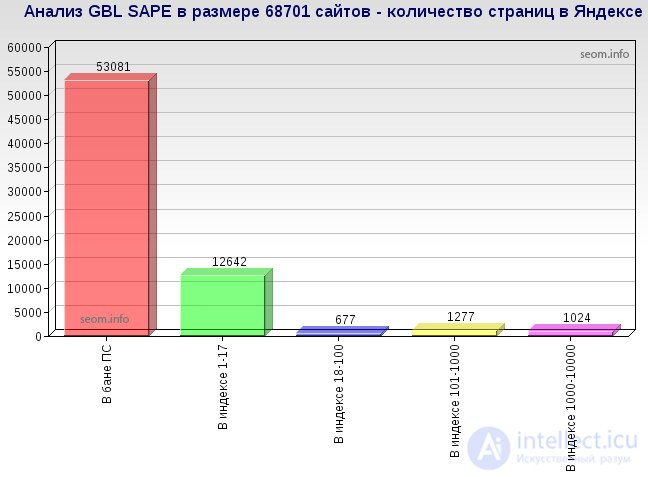

In absolute numbers it looks like this.

More than 53 thousand sites do not have a single page in the index at all, more than 12 thousand are under filters, and almost 700 do not qualify by page count. Is the rest an error?

Did the filters fail, so that more than 2 thousand sites with thousands of pages in the index were blacklisted for no reason? That's it! All is lost!

Everything is fine, though not quite. It is important to know by what criteria they got there; only then can more realistic conclusions be drawn.
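The breakdown by indexed page count can be sketched roughly as follows. This is only an illustration: the post does not state the exact cut-offs, so the thresholds `FILTER_MAX_PAGES` and `MIN_USEFUL_PAGES`, the bucket names, and the helper functions are all assumptions, and the input is simply a mapping from site to its indexed page count.

```python
from collections import Counter

# Hypothetical thresholds: the post does not give the real cut-offs.
FILTER_MAX_PAGES = 10    # assumed: a handful of indexed pages suggests a filter
MIN_USEFUL_PAGES = 100   # assumed: minimum page count for a site to qualify


def bucket(indexed_pages: int) -> str:
    """Assign a site to a bucket based on its indexed page count."""
    if indexed_pages == 0:
        return "not indexed"
    if indexed_pages <= FILTER_MAX_PAGES:
        return "likely under a filter"
    if indexed_pages < MIN_USEFUL_PAGES:
        return "too few pages"
    return "needs manual review"


def summarize(index_counts: dict[str, int]) -> dict[str, tuple[int, float]]:
    """Return {bucket: (number of sites, share of the list in percent)}."""
    counts = Counter(bucket(n) for n in index_counts.values())
    total = len(index_counts)
    return {name: (c, round(100 * c / total, 2)) for name, c in counts.items()}
```

Sites that land in the last bucket are the ones worth re-examining by hand, since they are indexed normally yet still sit on the blacklist.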

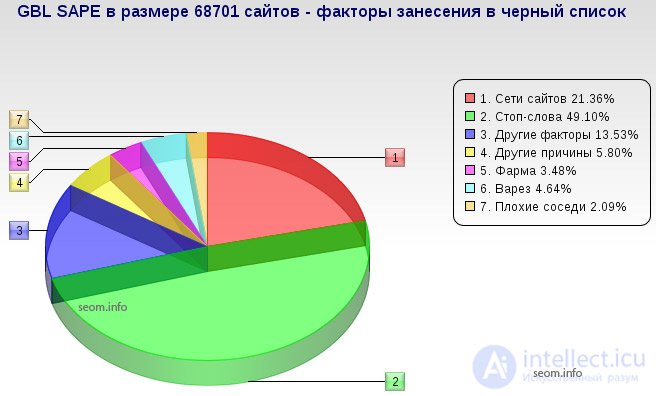

These are the factors that led to sites being added to the blacklist (GBL).

It is worth noting that "other reasons", as well as the Pharma (3.48%) and Warez (4.64%) items, are only a classification and not a reason for blacklisting; I simply singled out for myself how many sites showed signs of one segment or another. Bad neighbors (2.09%) is also a relative factor, although it is always worth watching which links are sold next to yours.

The second slice, by classifier, has a margin of error: for blacklisting, specific internal pages were analyzed, while the current analysis took into account only the main pages of the sites, which is the format in which SAPE presents the GBL.

Other factors and reasons include unreasonable price increases on links, constant unavailability, and so on. I cannot sort these cleanly into separate segments, because the analysis was done after the fact rather than at the moment of blacklisting with a clear rule recorded for each site.

In general, I am satisfied with the filters in use. All that remains is to adjust them based on the couple of thousand "lucky" sites that are in the index yet still sit on my blacklist. After the adjustment, it will be possible to identify candidates for rejection more precisely.

I think you should run this kind of monitoring too, in order to adjust your link-buying strategy.

The analysis took a day. About 19 hours of that went on getting through Yandex (the captcha came up 1,506 times; the analysis ran from a single IP address), and then the sites themselves were checked, one after another rather than in parallel. Here, of course, a full set of antivirus protection came in handy...
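A sequential check from a single IP address with a pause after every captcha could look something like the sketch below. It is not the tool used for this analysis: `fetch_index_count` is a hypothetical callable you would supply yourself (it should return the number of indexed pages for a site and raise `CaptchaError` when a captcha appears), and the delay values are arbitrary assumptions.

```python
import time
from typing import Callable, Iterable


class CaptchaError(Exception):
    """Raised by the (hypothetical) checker when a captcha is shown."""


def check_sites(
    sites: Iterable[str],
    fetch_index_count: Callable[[str], int],
    base_delay: float = 2.0,      # assumed initial pause, in seconds
    max_delay: float = 300.0,     # assumed upper bound for the pause
    max_retries: int = 5,
    sleep: Callable[[float], None] = time.sleep,
) -> tuple[dict[str, int], int]:
    """Check sites one by one; back off exponentially after each captcha."""
    results: dict[str, int] = {}
    captchas = 0
    for site in sites:
        delay = base_delay
        for _ in range(max_retries):
            try:
                results[site] = fetch_index_count(site)
                break
            except CaptchaError:
                captchas += 1
                sleep(delay)
                delay = min(delay * 2, max_delay)
        # A site that exhausts its retries is left out of the results.
    return results, captchas
```

The function returns both the collected page counts and the number of captchas hit, so the second value can be logged to see how much of the run time was lost to waiting.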


Terms: seo, smo, monetization, basics of internet marketing