Lecture

Not long ago, at the SMX East conference, Joachim Kupke (head of the engineering team in Google's indexing department) said in his talk: "…".

I have never interrupted an article like this, right after the introduction, with my own words, but I want to prepare readers from the outset to analyze this information carefully. The materials published on this blog should help at a very important stage of work on a site: building the link mass. This article, together with several published earlier ("Duplicate content within a site: a worked example" and "Duplicate content: myths and a solution"), sets a direction for your work, so analyze them. It was also no accident that the article "Link filtering and satellite errors" was written earlier, since it touched on some aspects of working with link mass as well. In my opinion, this series of articles contains a number of fundamental principles that will let you work with links more effectively. Of course, I will try to highlight the main points.

I remember his words well. After the conference I discussed this issue with other experts, and everyone agreed with him. Now, some time later, I want to refine this view somewhat and put it a little differently: "most pages on the Internet do not live long".

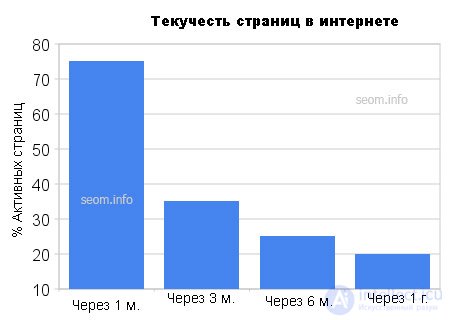

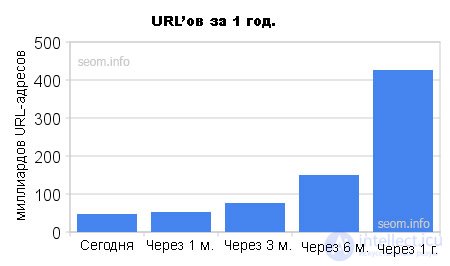

After just one month, more than 25% of URLs become what we call "inaccessible". By this I mean that the content turned out to be duplicated, the session parameters were invalid, or the page became unavailable for some other reason (for example, 404 or 5xx errors).

Six months later, 75% of the ten billion remaining URLs have disappeared, and after a year only 20% of the pages are still accessible. As Rand Fishkin noted in his previous article, Google does a great job of filtering out poor-quality pages.

To see how dramatically pages turn over, first imagine what the Internet looked like 6 months ago (shown very simply in the figure):

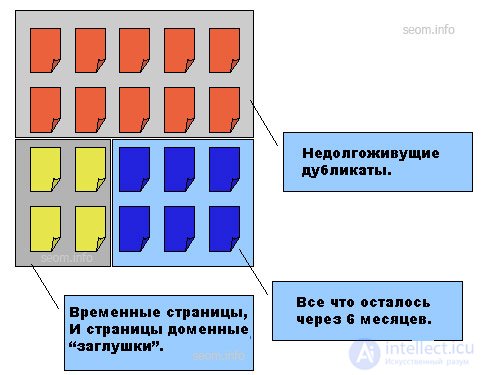

Based on Joachim's view and my own further conclusions on the matter, the content six months later looks roughly like this:

What does this mean for us as marketers who have invested in building link mass, only to find that our links do not live as long as we expected?

If your link mass comes from such short-lived pages, your positions in the SERPs may fluctuate widely. When building link mass, you need to be able to tell long-lived sites from short-lived ones.

You can use the former to build a stable link mass for the future, and the latter for a quick rise at the start of promotion.

Both types of sites are undoubtedly useful, but if your entire link mass is concentrated on short-lived sites, you risk seriously losing your positions in search results after a while. Never forget this.
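The risk of concentrating links on short-lived sites can be made concrete with a little arithmetic. The sketch below is a hypothetical illustration, not a tool from the article: it assumes each group of backlinks has a fixed one-year survival rate, borrows the article's figure of about 20% of pages surviving a year for short-lived sites, and uses 90% for long-lived sites purely as an illustrative assumption. The names `LinkSource`, `expected_surviving_links` and `short_lived_share`, and the 0.5 threshold, are made up for this example.

```python
from dataclasses import dataclass


@dataclass
class LinkSource:
    """A group of backlinks that share an assumed one-year survival rate."""
    name: str
    links: int
    survival_1y: float  # assumed fraction of links still live after 12 months


def expected_surviving_links(sources):
    """Expected number of backlinks still live after one year."""
    return sum(s.links * s.survival_1y for s in sources)


def short_lived_share(sources, threshold=0.5):
    """Share of all links that come from sources surviving below `threshold`."""
    total = sum(s.links for s in sources)
    short = sum(s.links for s in sources if s.survival_1y < threshold)
    return short / total if total else 0.0


if __name__ == "__main__":
    # 0.20 echoes the article's "only 20% remain after a year" figure;
    # 0.90 for long-lived sites is a hypothetical value for illustration.
    concentrated = [LinkSource("short-lived", 1000, 0.20)]
    mixed = [
        LinkSource("short-lived", 400, 0.20),
        LinkSource("long-lived", 600, 0.90),
    ]
    for label, profile in (("concentrated", concentrated), ("mixed", mixed)):
        print(label, expected_surviving_links(profile), short_lived_share(profile))
```

Under these assumptions, a profile built entirely on short-lived sites keeps about 200 of its 1,000 links after a year, while the mixed profile keeps about 620. The real survival rates of your donors are unknown and would have to be estimated from your own link monitoring.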

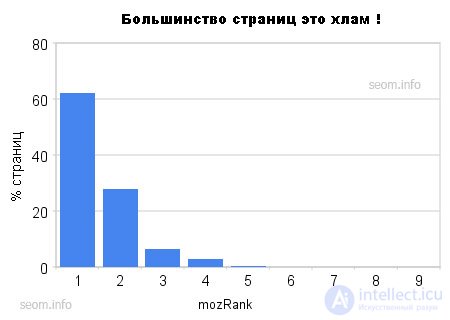

As for the Linkscape index, we see the following:

To make this clearer, imagine that the quality of every page on the web is measured, for example, in mozRank:

I think this chart speaks for itself. The overwhelming majority of pages have very low "importance" in terms of the weight they pass on. So it does not surprise me that, after a short time on the web, these SEO dummy pages simply disappear. Of course, we should not forget that there is also a huge number of high-quality pages.

What does this say about the pages we store?

But first, let's agree not to count the pages we saw a year ago (as mentioned above, fewer than 1/5 of them "survived"). Over the past 12 months, we counted between 500 billion and 1 trillion pages on the web, depending on the counting method.

So in just one year, we added over 500 billion unique URLs to Linkscape and to the tools built on top of it (Competitive Link Finder, Visualization, Backlink Analysis, etc.).

More importantly, fewer than half of the URLs that exist are represented here. We had to cut off 50% of the pages because they passed no weight and were simply useless (spam pages, duplicate content, non-canonical URLs, and other reasons).

I think there are several trillion more pages, but neither the search engines nor even Linkscape want this garbage in their index.

According to the latest index (compiled over the past 30 days), we have:

We checked all of these links and URLs, and I can say with some confidence that they are "verified". Here are some of the reasons why I think so:

I hope you agree. At least most of the specialists I know do.
