Lecture

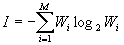

Let us show that Shannon's formula for information (1) has the same form as the expression for entropy.

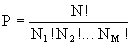

We express the factorials $N!$ and $N_i!$ in formula (1) using Stirling's formula:

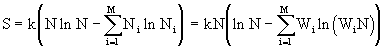

After these transformations we get:

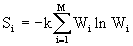
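The formulas of this derivation are only available as images, so the following is a sketch of the usual steps rather than a copy of the original. It assumes that the starting point is the Boltzmann entropy $S = k \ln P$ with statistical weight $P = N! / \prod_i N_i!$ and $\sum_i N_i = N$; the symbol $P$ and this starting form are assumptions, not taken from the text.

```latex
\begin{align*}
  % Stirling's formula for large N
  \ln N! &\approx N \ln N - N, \\
  % Apply it to N! and to every N_i!; the linear terms cancel because \sum_i N_i = N
  \ln \frac{N!}{\prod_i N_i!}
    &\approx N \ln N - N - \sum_i \left( N_i \ln N_i - N_i \right)
     = N \ln N - \sum_i N_i \ln N_i, \\
  % Use N \ln N = \sum_i N_i \ln N to collect the logarithms
  S = k \ln \frac{N!}{\prod_i N_i!}
    &\approx -k \sum_i N_i \ln \frac{N_i}{N}
     = -k N \sum_i \frac{N_i}{N} \ln \frac{N_i}{N}.
\end{align*}
```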

The entropy per molecule, $S_i = S / N$, follows from the last formula:

$$S_i = -k \sum_i W_i \ln W_i, \qquad W_i = N_i / N. \qquad (2)$$

Consider what causes the similarity between the expressions for information (1) and entropy (2). Entropy is a measure of disorder, a measure of the lack of information about the system. This can be seen from the following example: when water freezes, its entropy decreases, but the information about the location of the molecules at the lattice sites increases. At the same time, when the liquid is cooled, the temperature and entropy of the refrigerator increase, so the second law of thermodynamics is satisfied. It follows that every bit of information has an entropic price. To go from formula (1) to formula (2), the information must be multiplied by $k \ln 2 \approx 10^{-23}$ J/K.

In thermodynamic units, a bit is very cheap.

Let us estimate how many bits are contained in human culture. Over the existence of mankind, approximately $10^8$ books have been written, each 25 sheets long, with $4 \times 10^4$ characters per sheet. If each character carries 5 bits of information, then $10^8$ books contain $5 \times 10^{14}$ bits, which is equivalent to a decrease in entropy of $5 \times 10^{-9}$ J/K.
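The arithmetic of this estimate can be checked with a short script. It is only a sketch: it assumes that one bit corresponds to $k \ln 2$ in entropy units, and the names `K_B` and `bits_to_entropy` are illustrative, not part of the lecture.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K


def bits_to_entropy(bits):
    """Convert information in bits to entropy in J/K, assuming 1 bit = k ln 2."""
    return bits * K_B * math.log(2)


# Figures quoted in the text
books = 1e8
sheets_per_book = 25
chars_per_sheet = 4e4
bits_per_char = 5

total_bits = books * sheets_per_book * chars_per_sheet * bits_per_char
entropy = bits_to_entropy(total_bits)

print(f"total information: {total_bits:.1e} bits")
print(f"entropy equivalent: {entropy:.1e} J/K")
```

With these inputs the total is $5 \times 10^{14}$ bits and the entropy equivalent is a little under $5 \times 10^{-9}$ J/K, which agrees with the rounded value in the text.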

The change in entropy is many times greater than the amount of information received. When a coin is tossed, 1 bit of information is obtained, but the size of the coin and the muscular work spent on tossing it are not taken into account. The coin can be very small or very large (as large as Mont Blanc), and the information is the same. Therefore, the above estimate of the content of books in J/K is meaningless.
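The coin example can be illustrated numerically. The sketch below assumes the standard form of Shannon's formula, $I = -\sum_i p_i \log_2 p_i$; the function name `shannon_information` is hypothetical. Note that the calculation uses only the probabilities of the outcomes, so nothing about the size of the coin enters it.

```python
import math


def shannon_information(probabilities):
    """Average information per outcome in bits: -sum(p * log2(p))."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


fair_coin = shannon_information([0.5, 0.5])    # two equally likely outcomes
biased_coin = shannon_information([0.9, 0.1])  # a less uncertain toss

print(f"fair coin:   {fair_coin:.3f} bits")
print(f"biased coin: {biased_coin:.3f} bits")
```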

So, obtaining information has to be paid for with an increase in entropy. The minimum work $\Delta A$ is done when a fluctuation occurs, and at the same time the information changes by $\Delta I$. It has been shown that the minimum energy cost of 1 bit of information is determined by a dependence on the temperature $T$.

Consequently, the minimum energy cost of one bit of information at $T = 300$ K, calculated from this dependence, is about $2 \times 10^{-21}$ J.
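The relation itself is not preserved in this text. As a rough check, the sketch below assumes the standard bound of $kT \ln 2$ per bit; this is an assumption, and the function name `min_energy_per_bit` is illustrative.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K


def min_energy_per_bit(temperature_kelvin):
    """Minimum energy per bit in joules, assuming the bound k * T * ln 2."""
    return K_B * temperature_kelvin * math.log(2)


energy_300 = min_energy_per_bit(300.0)
print(f"minimum energy per bit at 300 K: {energy_300:.2e} J")
```

Under this assumption the result is close to $2.9 \times 10^{-21}$ J, the same order of magnitude as the figure quoted above.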
