Lecture

Modern science pays attention to various transfer processes: transfer of mass, energy, and momentum (quantity of motion). All processes occurring in time are, one way or another, connected with transfer phenomena and are studied in various fields of science.

Consider the phenomenon of energy transfer. Energy is a common measure of various processes and types of interaction: all forms of motion are transformed into one another in strictly defined quantitative ratios, and it is this circumstance that made it possible to introduce the concept of energy and the law of its conservation. Energy is transferred by various carriers and always flows from a higher to a lower energy level. Energy transfer is often characterized by the specific flux (flux density): the amount of energy (J) transferred through a unit area (1 m²) normal to the flow per unit time (1 s), measured in J/(m²·s), or W/m².
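As a quick arithmetic check of this definition, the sketch below computes the specific energy flux q = E / (A·t). It assumes the energy crosses a flat area normal to the flow uniformly over the time interval; the function name `energy_flux_density` and the sample numbers are illustrative, not taken from the lecture.

```python
def energy_flux_density(energy_j: float, area_m2: float, time_s: float) -> float:
    """Specific energy flux q = E / (A * t), in J/(m^2*s), i.e. W/m^2.

    Assumption: the energy passes uniformly through a flat area
    oriented normal to the flow.
    """
    if area_m2 <= 0 or time_s <= 0:
        raise ValueError("area and time must be positive")
    return energy_j / (area_m2 * time_s)


# Hypothetical example: 600 J crossing 2 m^2 in 3 s.
q = energy_flux_density(600.0, 2.0, 3.0)
print(f"{q} W/m^2")  # prints "100.0 W/m^2"
```

The result says nothing about the wavelength, coherence, or source of the energy, which is exactly the limitation the next paragraph points out.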

In addition to various elementary particles, energy carriers include electromagnetic waves, gravitational fields, elastic waves, and bulk or perturbed masses. What matters is the differing qualitative character of the process, which is sometimes identified with the concept of "information". For example, one may state that the measured electromagnetic energy flux density is 100 W/m², yet this energy can differ greatly in wavelength, coherence, polarization, nature of oscillations, and so on: a flux of 100 W/m² can be recorded from a heated body, a laser, or a living organism.

Mass transfer can occur by diffusion, by emission of particles of a substance (atoms, electrons, etc.), and by bulk flow. It is measured as a specific mass flux in kg/(m²·s), which varies by more than ten orders of magnitude from one process to another. Classical mechanics formulates the law of conservation of mass; the mass transfer model also contains parameters characterizing the quality of the transferred mass, that is, the individual nature of its components and the conditions of the processes. A change in the momentum of a flowing mass of the medium, or of individual bodies and particles, results in momentum transfer.

Momentum per unit volume is the product of the density and the flow velocity vector, ρv. From the law of conservation of momentum one can derive the equation of motion, in which parameters determining the quality of the process also appear.
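A minimal sketch of the product ρv, assuming a single uniform velocity vector and considering only transfer by bulk flow (diffusion and particle emission are ignored). The function names and the water example are hypothetical illustrations.

```python
import math


def momentum_density(rho: float, velocity: tuple[float, ...]) -> tuple[float, ...]:
    """Momentum per unit volume, rho * v, component by component, in kg/(m^2*s)."""
    return tuple(rho * v for v in velocity)


def bulk_mass_flux(rho: float, velocity: tuple[float, ...]) -> float:
    """Magnitude of the bulk-flow mass flux |rho * v|, in kg/(m^2*s).

    Assumption: only convective (bulk-flow) transfer is counted.
    """
    return rho * math.hypot(*velocity)


# Hypothetical example: water (rho = 1000 kg/m^3) moving at v = (3, 4, 0) m/s.
rho_water = 1000.0
v = (3.0, 4.0, 0.0)
print(momentum_density(rho_water, v))  # (3000.0, 4000.0, 0.0)
print(bulk_mass_flux(rho_water, v))
```

Both quantities are built from ρv and therefore share the unit kg/(m²·s): the bulk mass flux is simply the magnitude of the momentum density vector.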

Thus, the processes of energy, mass, and momentum transfer have a qualitative character (diversity) that can be identified with the term "information". This is still a very vague concept, about which Norbert Wiener, the founder of cybernetics, wrote: "Information is information, not matter and not energy." Different researchers give this term different meanings, ranging from a universal fundamental concept of the kind "all laws of physics can be regarded as information embedded in matter by nature" to academician N. N. Moiseev's claim that it is a historical concept. In his view, the need to introduce it arises only at those stages in the development of the material world when living nature and society appear and it becomes necessary to study purposeful actions, decision-making procedures under changing external conditions, and so on. In all other cases, according to Moiseev, one can do without the term "information" and describe the processes using the laws of physics and chemistry.

Ashby's classic definition of information as a measure of structural diversity is well known. We emphasize: not merely diversity, but structural diversity. This is essential, since it points to the connection of the concept with structure, i.e., with a certain order. For large systems, the structure is determined by their functional purpose, so the chain of connections is: information, functional purpose, order. These intuitive considerations become clearer through a joint analysis of entropy and information.

The French physicist Léon Brillouin (1889-1969) formulated the so-called negentropy principle of information: the amount of information $\Delta I$ accumulated and stored in the structure of an information system is equal to the decrease in its entropy $\Delta S$. Near thermodynamic equilibrium, the entropy $S$ is usually expressed through a measure of chaos $X$:

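$S = kX, \qquad X = \ln W,$

where $k$ is the Boltzmann constant and $W$ is the thermodynamic probability, i.e., the number of microstates realizing the given macrostate.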
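To make the negentropy principle concrete, here is a minimal sketch assuming $S = k \ln W$, where $W$ is the number of microstates inside the logarithm. Shrinking $W$ from `w_before` to `w_after` lowers the entropy by $k \ln(W_{before}/W_{after})$; counting the same reduction in bits (dividing $\ln$ by $\ln 2$) gives the information gained, so each bit corresponds to an entropy decrease of $k \ln 2$. The function names are hypothetical.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K (exact value in the 2019 SI)


def boltzmann_entropy(w: int) -> float:
    """S = k * X with X = ln W, where W is the number of microstates."""
    return K_B * math.log(w)


def information_from_entropy_drop(w_before: int, w_after: int) -> tuple[float, float]:
    """Return (entropy decrease in J/K, information gained in bits).

    Assumption: information is counted as log2(W_before / W_after),
    the bit-valued counterpart of the drop in ln W.
    """
    delta_s = boltzmann_entropy(w_before) - boltzmann_entropy(w_after)
    bits = math.log2(w_before / w_after)
    return delta_s, bits


# Hypothetical example: a system is narrowed from 1024 possible microstates to one.
delta_s, bits = information_from_entropy_drop(1024, 1)
print(bits)     # 10.0
print(delta_s)  # about 9.57e-23 J/K, i.e. 10 * k * ln 2
```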

As shown earlier, the second law of thermodynamics implies an irreversible loss of energy quality. However, real evolution leads not only to the growth of disorder but also to the growth of order, and this process is associated with the processing of information. We relate the concept of information to uncertainty, and the amount of information to a decrease in uncertainty. The informational measure of order $P$ is the difference between the maximum value $X_{\max}$ and the current value $X$ of the measure of chaos:

$P = X_{\max} - X.$

In other words, the measure of chaos and the measure of order are complementary. If all states are equally probable, then $X = X_{\max}$ and $P = 0$; conversely, with complete order, $X = 0$ and $P = X_{\max}$. Hence, for a fixed number of microstates, any increase $dP$ in the measure of order is matched by an equal decrease in the measure of disorder, i.e.,

$dX = -dP, \qquad X + P = X_{\max} = \mathrm{const}.$

Consequently, the two opposites, harmony and chaos, are in unstable equilibrium, and their sum is constant.
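The complementarity of chaos and order can be checked numerically. This sketch swaps in the Gibbs-Shannon form $X = -\sum_i p_i \ln p_i$ as the measure of chaos (an assumption on our part; it reduces to $X = \ln W$ when all $W$ states are equally likely), takes $X_{\max} = \ln N$ for $N$ states, and computes $P = X_{\max} - X$. The function names and example distributions are hypothetical.

```python
import math


def chaos_measure(probs: list[float]) -> float:
    """X = -sum p_i ln p_i (in nats); equals ln N when all N states are equally likely.

    Assumption: the Gibbs-Shannon form is used as the measure of chaos.
    """
    if not math.isclose(sum(probs), 1.0):
        raise ValueError("probabilities must sum to 1")
    return sum(-p * math.log(p) for p in probs if p > 0)


def order_measure(probs: list[float]) -> float:
    """P = X_max - X, with X_max = ln N for N possible states."""
    x_max = math.log(len(probs))
    return x_max - chaos_measure(probs)


uniform = [0.25, 0.25, 0.25, 0.25]  # all states equally probable: P should be 0
ordered = [1.0, 0.0, 0.0, 0.0]      # complete order: X should be 0
partial = [0.7, 0.1, 0.1, 0.1]      # somewhere in between

for probs in (uniform, ordered, partial):
    x, p = chaos_measure(probs), order_measure(probs)
    print(f"X = {x:.4f}, P = {p:.4f}, X + P = {x + p:.4f}")
```

For every distribution over the same four states, X + P comes out equal to ln 4: whatever order gains, chaos loses.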

Note that inanimate nature, acting aimlessly and indifferently, tends to choose outcomes that yield little information. The purposeful action of a living system sharply narrows the field of choice. At the same time, the amount of information grows at an ever-increasing rate, and more and more matter and energy are drawn into circulation. The activity of a rational and spiritually developed person is aimed at increasing the orderliness of the environment. If this activity stops, the "blind" forces of nature increase disorder and erase the traces of human labor. In the process of ordering, a person reduces the entropy of the environment; in other words, a person in effect extracts negentropy from the environment and uses it to build his own tissues and sustain vital processes. Along with other measures, the result of labor can be characterized by the increase in orderliness, that is, by the amount of control information, or negentropy, that humans introduce into the environment.

Thus, information is evidently a time-varying factor that interacts in complex ways with various states of matter and energy. These considerations allow us to extend Ashby's definition: information is a measure of the change, in time and space, of the structural diversity of systems.

The above reflections show that information is a complex concept, not yet fully clarified, which appears to be as fundamental as the natural science categories of matter, energy, and time.

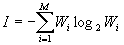

At the beginning of this section it was pointed out that the American scientist C. Shannon proposed using the probabilistic formula for thermodynamic entropy to measure the amount of information. Interested readers may consult the paragraph on the relationship between Shannon information and thermodynamic entropy. At first, the Shannon formula was adopted for computational convenience, but the deeper meaning of this step gradually became clear. Information is contained everywhere: in a living cell and in a lifeless crystal, in the memory of living beings and in computer memory. Information interactions are inherent in both the material and the spiritual worlds. This understanding opened another way for natural science to comprehend the world through the probabilistic formula

$I = -\sum_{i=1}^{N} p_i \log_2 p_i,$

where $p_i$ is the probability of the $i$-th of $N$ possible messages (states).
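A minimal sketch of Shannon's measure, assuming the probabilistic formula meant here is $I = -\sum_i p_i \log_2 p_i$ in bits. For $N$ equally likely states it gives $\log_2 N$, which differs from the Boltzmann form $\ln W$ only by the base of the logarithm (and the constant $k$). The function name is hypothetical.

```python
import math


def shannon_information_bits(probs: list[float]) -> float:
    """I = -sum p_i log2 p_i, in bits; zero-probability states contribute nothing."""
    if not math.isclose(sum(probs), 1.0):
        raise ValueError("probabilities must sum to 1")
    return sum(-p * math.log2(p) for p in probs if p > 0)


print(shannon_information_bits([1 / 8] * 8))         # 3.0 (= log2 8, equiprobable case)
print(shannon_information_bits([0.5, 0.25, 0.25]))  # 1.5
```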

What was fundamentally new was Shannon's measure of the quantity of information, built on this statistical formula for entropy. The generality of the approach is shown by the use of the Boltzmann-Shannon formula not only in technical works but also in the works of biologists, psychologists, linguists, art historians, philosophers, and others. As Shannon wrote: "Information theory, like a fashionable intoxicating drink, turns everyone's head." The "fashionable drink" did not turn Shannon's own head. He understood that his theory is not universal and that the new units he proposed for measuring the amount of information (bits) do not account for such important properties of information as its value and meaning. Yet there is deep sense in setting aside the specific content of information so that the quantities of information in different messages can be compared. Shannon's bits are suitable for evaluating any message, be it news of a baby's birth, a television program, or astronomical observations, just as numbers can be used to count sheep, trees, or steps alike.
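The content-independence of the bit can be illustrated with the information carried by a single message of probability p, namely -log2 p bits. The messages and their probabilities below are hypothetical examples chosen only to show that the same unit applies whatever the message is about; the function name is also an illustration.

```python
import math


def self_information_bits(p: float) -> float:
    """Information carried by a message of probability p: -log2 p bits."""
    if not 0 < p <= 1:
        raise ValueError("p must be in (0, 1]")
    return -math.log2(p)


# Hypothetical messages with assumed probabilities.
messages = {
    "a baby is born, boy or girl (assumed 1/2)": 0.5,
    "a fair die shows a six (1/6)": 1 / 6,
    "a star is found at one of 1024 equally likely positions": 1 / 1024,
}
for text, p in messages.items():
    print(f"{text}: {self_information_bits(p):.3f} bits")
```

The measure sees only the probability of each message, not its meaning or value to the recipient, which is precisely the trade-off described above.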

Building on Shannon's theory, many scientists tried to find measures of the amount of information that would take its value and meaning into account. However, no universal measure could be created: the criteria of value differ from process to process and are subjective, since they depend on who uses the information. A smell that carries a great deal of information for a dog is imperceptible to a human. Shannon's measure ignores all such particular differences, and for that very reason it is equally suitable for studying all kinds of information processes.

Information theory is a major step in the development of scientific thought: it deepens our understanding of the essence of information and entropy and of the unity of these opposite concepts, and, as a fruitful new method, it helps uncover the secrets of nature.
