Lecture

How can we ensure that points of physical consistency of the database exist, i.e. how can the database state as of the time tpc of such a point be restored? Two main approaches are used for this: one based on the shadow mechanism, and one based on logging page-level changes to the database.

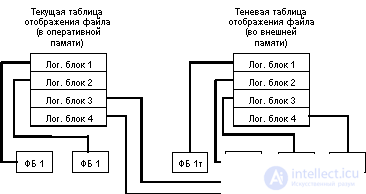

When a file is opened, the table that maps its logical block numbers to the addresses of physical blocks in external memory is read into RAM. When any block of the file is modified, a new block is allocated in external memory. The current mapping table (in RAM) is changed accordingly, while the shadow table remains unchanged. If a failure occurs while the file is open, external memory automatically retains the state the file had before it was opened. To restore the file explicitly, it is enough to reread the shadow mapping table into RAM.
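A minimal Python sketch of the file-level mechanism, assuming a dictionary stands in for external memory. The class `ShadowFile` and its methods are hypothetical illustrations, not an operating-system API: writes go to newly allocated blocks and touch only the in-RAM table, and `recover` models rereading the shadow table after a failure.

```python
class ShadowFile:
    """Hypothetical model of a file protected by a shadow mapping table."""

    def __init__(self, disk, shadow_table):
        self.disk = disk                    # external memory: physical address -> block contents
        self.shadow = shadow_table          # mapping table as stored in external memory
        self.current = dict(shadow_table)   # copy read into RAM when the file is opened
        self.next_free = max(disk, default=-1) + 1

    def read(self, logical):
        return self.disk[self.current[logical]]

    def write(self, logical, data):
        # The old physical block is never overwritten: a new one is allocated
        # and only the current (RAM) mapping table is updated.
        addr = self.next_free
        self.next_free += 1
        self.disk[addr] = data
        self.current[logical] = addr

    def recover(self):
        # Rereading the shadow table brings back the state before the file was opened.
        self.current = dict(self.shadow)


disk = {0: "a", 1: "b"}            # two logical blocks stored in physical blocks 0 and 1
f = ShadowFile(disk, {0: 0, 1: 1})
f.write(1, "B")
print(f.read(1))   # B
f.recover()        # simulated failure followed by recovery
print(f.read(1))   # b
```

Blocks written after the file was opened (physical block 2 in this run) are simply abandoned by recovery; reclaiming them is outside the scope of this sketch.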

The general idea of the shadow mechanism is shown in the figure above.

In the context of a database, the shadow mechanism is used as follows. Operations that establish a point of physical consistency of the database (checkpoints in System R) are performed periodically. To do this, all logical operations are completed, and all RAM buffers whose contents differ from the corresponding pages in external memory are flushed. Then the shadow mapping table of the database files is replaced with the current one (more precisely, the current mapping table is written in place of the shadow one).
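The checkpoint procedure can be sketched in the same style. In this hypothetical `ShadowDatabase` class (the names are illustrative, not taken from System R), dirty RAM buffers are flushed to newly allocated blocks and the current mapping table is then written over the shadow one; `restore` models recovery to the last point of physical consistency. Completing the logical operations before a checkpoint is assumed to have happened already and is not modeled.

```python
class ShadowDatabase:
    """Hypothetical model of the shadow mechanism applied to database pages."""

    def __init__(self):
        self.disk = {}        # external memory: physical address -> page contents
        self.shadow = {}      # mapping table as of the last checkpoint
        self.current = {}     # mapping table kept in RAM
        self.buffers = {}     # logical page -> contents held in RAM buffers
        self.dirty = set()    # buffered pages that differ from external memory
        self.next_free = 0

    def update(self, page, data):
        # Changes are made in a RAM buffer first.
        self.buffers[page] = data
        self.dirty.add(page)

    def flush(self, page):
        # A dirty page is written to a newly allocated block; only the
        # current mapping table is changed, never the shadow one.
        if page in self.dirty:
            self.disk[self.next_free] = self.buffers[page]
            self.current[page] = self.next_free
            self.next_free += 1
            self.dirty.discard(page)

    def checkpoint(self):
        # Assumes all logical operations have already been completed.
        for page in list(self.dirty):
            self.flush(page)
        # The current mapping table is written in place of the shadow one.
        self.shadow = dict(self.current)

    def restore(self):
        # RAM contents are lost in a failure; reading the shadow table is the whole recovery.
        self.current = dict(self.shadow)
        self.buffers.clear()
        self.dirty.clear()

    def read(self, page):
        if page in self.buffers:
            return self.buffers[page]
        return self.disk[self.current[page]]


db = ShadowDatabase()
db.update("p1", "v1")
db.checkpoint()        # point of physical consistency: p1 = "v1"
db.update("p1", "v2")
db.flush("p1")         # a dirty page may reach external memory between checkpoints
db.restore()           # simulated failure followed by recovery
print(db.read("p1"))   # v1
```

Even though the newer version of `p1` had already been written to external memory, recovery ignores it, because the shadow table still points at the block recorded at the checkpoint.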

Restoration to tlpc (the time of the last point of physical consistency) is instantaneous: the current mapping table is replaced with the shadow one (during recovery, the shadow mapping table is simply read). All recovery problems are solved, but at the cost of excessive external memory consumption: in the limit, the database may occupy twice as much external memory as is actually needed to store it. The shadow mechanism is reliable but too coarse. It provides a consistent state of external memory at a single point in time common to all objects. In fact, it is enough to have a collection of consistent sets of pages, each of which may correspond to its own point in time.
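The factor of two follows from simple counting: every physical block reachable from either mapping table must be kept until the next checkpoint. A small sketch, assuming a hypothetical four-page database in which every page has been modified since the last point of physical consistency (`blocks_in_use` is an illustrative helper):

```python
def blocks_in_use(shadow, current):
    """Number of physical blocks reachable from the shadow or the current table."""
    return len(set(shadow.values()) | set(current.values()))


# At the last checkpoint, pages 0..3 live in physical blocks 0..3.
shadow = {page: page for page in range(4)}
current = dict(shadow)
print(blocks_in_use(shadow, current))   # 4

# Every page modified since then has been written to a new block (4..7).
for page in range(4):
    current[page] = 4 + page
print(blocks_in_use(shadow, current))   # 8, twice what the data itself needs
```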

To meet this weaker requirement, page-level changes are logged alongside the logical logging of database modification operations. The first stage of recovery after a soft failure is the rollback of incomplete logical operations. Just as is done for logical records with respect to transactions, the last page-change record of a logical operation is an end-of-operation record. To determine whether a database page in external memory needs to be restored, the identifier of the last change record for that page is stored in the page itself whenever the page is ejected from the buffer. There are other technical nuances as well.
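A minimal sketch of the first recovery stage, under simplifying assumptions that the lecture does not state: logical operations run one at a time, each page-change record carries a before-image, and a record with no page number serves as the end-of-operation record. `Page`, `LogRecord`, and `rollback_incomplete` are hypothetical names. The identifier stored in a page on ejection (`lsn` here) tells recovery whether a given change ever reached external memory.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Page:
    data: str
    lsn: int = 0                    # id of the last change record written with this page


@dataclass
class LogRecord:
    lsn: int
    op_id: int
    page: Optional[int] = None      # None marks an end-of-operation record
    before: Optional[str] = None
    after: Optional[str] = None


def rollback_incomplete(disk: Dict[int, Page], log: List[LogRecord]) -> None:
    """Undo page changes of logical operations that have no end record."""
    finished = {r.op_id for r in log if r.page is None}
    for rec in sorted(log, key=lambda r: r.lsn, reverse=True):
        if rec.page is None or rec.op_id in finished:
            continue
        page = disk[rec.page]
        # Only changes that actually reached external memory need undoing.
        if page.lsn >= rec.lsn:
            page.data = rec.before


log = [
    LogRecord(1, op_id=1, page=7, before="v0", after="v1"),
    LogRecord(2, op_id=1),          # end of operation 1
    LogRecord(3, op_id=2, page=7, before="v1", after="v2"),
    # failure: operation 2 never wrote its end record
]
disk = {7: Page("v2", lsn=3)}       # page 7 was ejected after record 3
rollback_incomplete(disk, log)
print(disk[7].data)                 # v1
```

If page 7 had instead been ejected right after record 1 (`Page("v1", lsn=1)`), record 3 would be skipped, since its change was still only in the buffer when the failure occurred.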

There are two variants of this approach. The first maintains a single common log of logical and page-level operations. Naturally, having two kinds of records that are interpreted in completely different ways complicates the structure of the log. In addition, page-change records, which are only of local relevance, significantly (and not very meaningfully) enlarge the log.

Therefore, it is becoming increasingly popular to maintain a separate (short) log of page-level changes. This technique is used, for example, in the well-known product Informix Online.
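A sketch of the separate-log variant, assuming (in line with the remark that page-change records are only of local relevance) that such records are needed only until their logical operation ends. The `SplitLog` class and its methods are hypothetical; this is not a description of how Informix Online organizes its logs.

```python
class SplitLog:
    """Hypothetical log manager with a long logical log and a short page log."""

    def __init__(self):
        self.logical = []     # logical records: (lsn, op_id, description)
        self.page_log = []    # page-change records: (lsn, op_id, page, before, after)
        self.next_lsn = 1     # one identifier sequence shared by both logs

    def _next(self):
        lsn = self.next_lsn
        self.next_lsn += 1
        return lsn

    def log_logical(self, op_id, description):
        self.logical.append((self._next(), op_id, description))

    def log_page_change(self, op_id, page, before, after):
        lsn = self._next()
        self.page_log.append((lsn, op_id, page, before, after))
        return lsn            # to be stored in the page when it is ejected from the buffer

    def end_operation(self, op_id):
        # Assumption: the end record is kept in the logical log, and the
        # operation's page records are then dropped, keeping the page log short.
        self.log_logical(op_id, "end")
        self.page_log = [r for r in self.page_log if r[1] != op_id]


log = SplitLog()
log.log_logical(1, "insert tuple")
log.log_page_change(1, page=7, before="v0", after="v1")
log.log_page_change(1, page=8, before="w0", after="w1")
log.end_operation(1)
log.log_logical(2, "delete tuple")
log.log_page_change(2, page=7, before="v1", after="v2")
print(len(log.logical), len(log.page_log))   # 3 1
```

Keeping the two record kinds in separate structures avoids the mixed-format log of the first variant, and the page log holds only records of operations still in progress.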
