Lecture

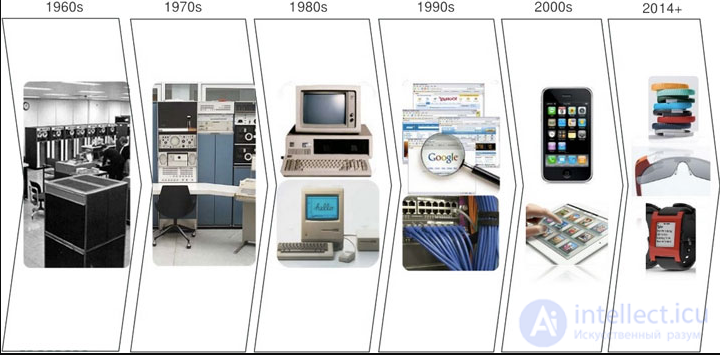

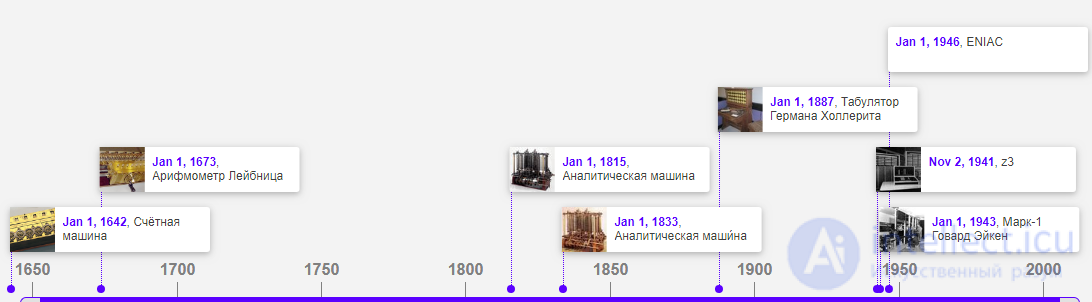

Today it is hard to imagine life without electronic computers. Anyone can now assemble a full-fledged computing center on their desktop to suit their needs. Of course, it was not always so: humanity's path to this achievement was long and thorny. Many centuries ago, people wanted devices that would help them solve various problems. Many of these problems were solved by performing a sequence of routine actions, or, as we say now, by executing an algorithm. It all began with attempts to invent a device capable of carrying out the simplest of these algorithms: the addition and subtraction of numbers...

Blaise Pascal

The beginning of the 17th century (1623) can be considered the starting point, when the scientist Wilhelm Schickard created a machine that could add and subtract numbers. But the first arithmometer capable of performing the four basic arithmetic operations was that of the famous French scientist and philosopher Blaise Pascal. Its main element was a gear wheel, whose invention in itself became a key event in the history of computing technology. Note that the evolution of computing technology is uneven and proceeds in leaps: periods of accumulating strength alternate with breakthroughs, after which a period of stabilization begins, during which the results achieved are put to practical use while knowledge and strength are accumulated for the next leap forward. After each such cycle, the evolutionary process reaches a new, higher stage.

Gottfried Wilhelm Leibniz

In 1671, the German philosopher and mathematician Gottfried Wilhelm Leibniz also created an adding machine based on a special gear wheel, the Leibniz wheel. Like the arithmometers of his predecessors, Leibniz's machine performed the four basic arithmetic operations. With this the period ended, and for almost one and a half centuries humanity accumulated strength and knowledge for the next round in the evolution of computing technology. The 18th and 19th centuries were a time of rapid development in many sciences, including mathematics and astronomy, which often faced problems requiring long and laborious calculations.

Charles Babbage

Another famous figure in the history of computing is the English mathematician Charles Babbage. In 1823, Babbage began working on a machine for calculating polynomials. More interestingly, besides performing the calculations, this machine was to output the results by printing them on a negative plate for photographic printing. The machine was to be driven by a steam engine. Because of technical difficulties, Babbage was unable to complete the project. Here, for the first time, the idea arose of using an external (peripheral) device to output the results of calculations. Note that in 1853 another inventor, Scheutz, did build the machine Babbage had conceived (it turned out even smaller than planned). Babbage probably enjoyed the creative search for new ideas more than embodying them in something material. In 1834, he outlined the operating principles of his next machine, which he called the "Analytical" engine. Technical difficulties again prevented him from fully realizing his ideas, and Babbage was able to bring the machine only to the experimental stage. But it is ideas that drive scientific and technological progress. Babbage's next machine embodied the following ideas:

Countess Ada Augusta Lovelace, who is considered the world's first programmer, took part in the development of this machine.

The ideas of Charles Babbage were developed and used by other scientists. Thus, in 1890, at the turn of the 20th century, the American Herman Hollerith developed a machine for processing data tables (the first Excel?). The machine was controlled by a program on punched cards and was used in the 1890 US census. In 1896, Hollerith founded a company that was the predecessor of IBM. After Babbage's death, the evolution of computing technology paused again until the 1930s. From then on, all of humanity's development became unthinkable without computers.

In 1938, the center of development briefly shifted from America to Germany, where Konrad Zuse created a machine that, unlike its predecessors, operated on binary rather than decimal numbers. This machine was still mechanical, but its undoubted advantage was that it implemented the idea of processing data in binary code. Continuing his work, in 1941 Zuse created an electromechanical machine whose arithmetic unit was built from relays. The machine could perform floating-point operations.

Across the ocean, in America, work on similar electromechanical machines was also under way during this period. In 1944, Howard Aiken designed a machine called the Mark I. Like Zuse's machine, it was built on relays. But because this machine was clearly created under the influence of Babbage's work, it operated on data in decimal form.

Naturally, with their large share of mechanical parts, these machines were doomed. A new, more technologically advanced component base had to be found. And then people remembered the invention of Lee de Forest, who in 1906 created a three-electrode vacuum tube called the triode. Because of its functional properties, it became the most natural replacement for the relay. In 1946, at the University of Pennsylvania in the USA, the first mainframe computer, ENIAC, was created. ENIAC contained 18,000 vacuum tubes, weighed 30 tons, occupied an area of about 200 square meters and consumed an enormous amount of power. It still used decimal arithmetic, and it was programmed by switching connectors and setting switches. Naturally, such "programming" caused many problems, primarily from incorrectly set switches. The name of another key figure in the history of computing, the mathematician John von Neumann, is connected with the ENIAC project. It was he who first proposed storing the program and its data in the machine's memory, so that the program could be modified during operation if necessary. This key principle was later used in building the fundamentally new EDVAC computer (1951). This machine already used binary arithmetic, and its RAM was built on ultrasonic mercury delay lines. The memory could store 1024 words, each consisting of 44 binary digits.

John von Neumann in front of the EDVAC computer
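
The stored-program principle attributed to von Neumann above can be illustrated with a toy machine in which instructions and data live in the same memory. This is a minimal sketch under stated assumptions: the instruction set (`LOAD`, `ADD`, `STORE`, `HALT`) and the `run` function are hypothetical and do not describe EDVAC's actual instruction format.

```python
# A toy stored-program machine: instructions and data share one memory list.
# The instruction set below is hypothetical, chosen only for illustration.
def run(memory, max_steps=1000):
    """Execute the program stored in `memory`, starting at address 0.

    Each instruction is a tuple (opcode, address):
      LOAD a  - accumulator = memory[a]
      ADD a   - accumulator += memory[a]
      STORE a - memory[a] = accumulator
      HALT    - stop and return the memory
    """
    acc = 0
    pc = 0  # program counter: address of the next instruction
    for _ in range(max_steps):
        op, addr = memory[pc]
        pc += 1
        if op == "LOAD":
            acc = memory[addr]
        elif op == "ADD":
            acc += memory[addr]
        elif op == "STORE":
            memory[addr] = acc
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {op!r} at address {pc - 1}")
    raise RuntimeError("step limit reached")

# Addresses 0-3 hold the program, addresses 4-6 hold the data.
memory = [
    ("LOAD", 4),
    ("ADD", 5),
    ("STORE", 6),
    ("HALT", 0),
    2, 3, 0,
]
run(memory)
print(memory[6])  # 5
```

Because the program is ordinary memory contents, changing it is just a memory write: setting `memory[1] = ("ADD", 4)` on a fresh copy before calling `run` makes the same machine compute 2 + 2 instead of 2 + 3, with no rewiring of switches.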

After EDVAC was created, humanity realized what heights of science and technology a human-computer partnership could reach. The industry began to develop very quickly and dynamically, although there was still a certain periodicity, associated with the need to accumulate knowledge for the next breakthrough. Up to the mid-1980s, the evolution of computing technology can be divided into generations. For completeness, we give a brief qualitative description of each:

The first generation of computers (1945-1954). During this period, the typical set of structural elements that make up a computer took shape. By this time, developers already shared roughly the same idea of what elements a typical computer should consist of: a central processing unit (CPU), main memory, or random access memory (RAM), and input/output (I/O) devices. The CPU, in turn, must consist of an arithmetic logic unit (ALU) and a control unit (CU). The machines of this generation were built on vacuum tubes, which is why they consumed a huge amount of energy and were not very reliable. They were used mainly to solve scientific problems. Programs for these machines could already be written not only in machine language but also in assembly language.

The second generation of computers (1955-1964). The change of generations was driven by a new component base: bulky vacuum tubes were replaced by miniature transistors, and delay-line memory was replaced by magnetic-core memory. This ultimately reduced the size and improved the reliability and performance of computers. Index registers and hardware for floating-point operations appeared in computer architectures, and instructions for calling subroutines were developed.

High-level programming languages appeared (Algol, FORTRAN, COBOL), creating the prerequisites for portable software that does not depend on the type of computer. With high-level languages came compilers for them, libraries of standard subroutines and other things familiar to us today.

An important innovation worth pointing out is the emergence of so-called I/O processors. These specialized processors freed the central processor from controlling input and output, allowing I/O to be performed by a dedicated device at the same time as computation. At this stage, the range of computer users expanded dramatically and the range of tasks grew. To manage resources effectively, machines began to use operating systems (OS).

The third generation of computers (1965-1970). The change of generations was again due to a new component base: integrated circuits of various degrees of integration replaced transistors in the computer's units. Integrated circuits made it possible to place dozens of elements on a chip a few centimeters in size. This not only increased computer performance but also reduced size and cost. Relatively inexpensive, small machines appeared: minicomputers. They were widely used to control technological production processes and in data collection and processing systems.

The growing power of computers made it possible to run several programs on one computer simultaneously. This required coordinating the concurrently executing actions, so the functions of the operating system were expanded.

Along with active development of hardware and architectural solutions, the share of work on programming technologies grew. During this period, the theoretical foundations of programming methods, compilation, databases, operating systems, etc., were actively developed, and application packages were created for various fields of human activity.

It became an unaffordable luxury to rewrite all programs whenever a new type of computer appeared. A trend emerged toward families of computers, that is, machines that are upward compatible at the hardware and software level. The first such family was the IBM System/360 series; its Soviet counterpart was the ES EVM (Unified System) series.

The fourth generation of computers (1970-1984). Once again, a change of component base led to a change of generations. In the 1970s, there was active work on large-scale and very-large-scale integrated circuits (LSI and VLSI), which made it possible to place tens of thousands of elements on a single chip. This led to a further significant reduction in the size and cost of computers. Software became more user-friendly, which increased the number of users.

In principle, with this degree of integration, it became possible to try to build a functionally complete computer on a single chip. Such attempts were made, although they were met mostly with incredulous smiles. Those smiles would probably have been fewer had anyone foreseen that this very idea would lead to the extinction of large computers within some fifteen years.

Nevertheless, in the early 1970s, Intel released the 4004 microprocessor (MP). Before that, the world of computing had only three areas (supercomputers, mainframes and minicomputers); now a fourth was added: microprocessors. In general, a processor is understood as a functional unit of a computer designed for logical and arithmetic processing of information based on the principle of microprogram control. By hardware implementation, processors can be divided into microprocessors (all processor functions fully integrated) and processors with small- and medium-scale integration. Structurally, microprocessors implement all the functions of the processor on a single chip, while other types of processors implement them by connecting a large number of chips.

Intel 4004

So, the first microprocessor, the 4004, was created by Intel at the turn of the 1970s. It was a 4-bit parallel computing device with severely limited capabilities. The 4004 could perform the four basic arithmetic operations and was initially used only in pocket calculators. Later its use was extended to various control systems (for example, traffic light control). Intel, correctly predicting the viability of microprocessors, continued intensive development, and one of its projects eventually led to a major success that determined the future path of computer technology.

Intel 8080

That project was the 8-bit 8080 processor (1974). This microprocessor had a fairly well-developed instruction set and was able to divide numbers. It was used to build the Altair personal computer, for which the young Bill Gates wrote one of his first BASIC interpreters. Probably, the fifth generation should be counted from this point.

The fifth generation of computers (1984 to the present) can be called the microprocessor generation. Note that the fourth generation ended only in the early 1980s; that is, the "parents", represented by large machines, and their rapidly growing "child" coexisted relatively peacefully for almost 10 years. This time benefited both: designers of large computers accumulated vast theoretical and practical experience, and microprocessor developers managed to find their own, albeit initially very narrow, niche in the market.

Intel 8086

In 1976, Intel completed development of the 16-bit 8086 processor. It had a fairly large register width (16 bits) and a 20-bit system address bus, which allowed it to address up to 1 MB of RAM.
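
The link between address bus width and maximum memory is simple arithmetic: an n-bit address bus can select 2^n distinct addresses. The sketch below reproduces the memory limits quoted in this lecture from the bus widths it gives. It assumes byte-addressable memory and binary units (1 KB = 1024 bytes); the function names are illustrative only.

```python
def addressable_bytes(address_bits: int) -> int:
    """Distinct byte addresses selectable by an address bus of the given width.

    Assumes byte-addressable memory: each address selects one byte.
    """
    return 2 ** address_bits

def human_size(n_bytes: int) -> str:
    """Format a power-of-two byte count in binary units (1 KB = 1024 bytes)."""
    for unit in ("B", "KB", "MB", "GB", "TB"):
        if n_bytes < 1024:
            return f"{n_bytes} {unit}"
        n_bytes //= 1024
    return f"{n_bytes} PB"

# Address bus widths as given in the lecture text.
for cpu, bits in [("8086", 20), ("80286", 24), ("80386", 32), ("Pentium Pro", 36)]:
    print(f"{cpu}: {bits}-bit address bus -> {human_size(addressable_bytes(bits))}")
```

This prints 1 MB, 16 MB, 4 GB and 64 GB respectively: each extra address line doubles the addressable memory.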

In 1982, the 80286 was created. This processor was an improved version of the 8086. It already supported several operating modes: real mode, in which addresses were formed according to the 8086 rules, and protected mode, which implemented multitasking and virtual memory management in hardware. The 80286 also had a wider address bus, 24 bits versus the 8086's 20, so it could address up to 16 MB of RAM. The first computers based on this processor appeared in 1984. In computing capability, such a computer became comparable to the IBM System/370. Therefore, we can consider this the point at which the fourth generation of computer development was completed.
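
The "8086 rules" for forming an address in real mode combine two 16-bit values: the physical address is segment × 16 + offset. The sketch below models that rule. The `wrap_at_1mb` flag is an illustrative assumption: on the 8086 the sum is cut to 20 bits and wraps past 1 MB, while a 286 with its 21st address line (A20) enabled can reach slightly above 1 MB.

```python
def real_mode_address(segment: int, offset: int, wrap_at_1mb: bool = True) -> int:
    """Physical address by the 8086 real-mode rule: segment * 16 + offset.

    Both parts are 16-bit values. On the 8086 the sum is truncated to
    20 bits, so addresses past 1 MB wrap around to 0; pass
    wrap_at_1mb=False to model a 286 whose A20 line is enabled.
    """
    if not (0 <= segment <= 0xFFFF and 0 <= offset <= 0xFFFF):
        raise ValueError("segment and offset must be 16-bit values")
    address = (segment << 4) + offset  # shifting left by 4 bits multiplies by 16
    if wrap_at_1mb:
        address &= 0xFFFFF  # keep only the low 20 bits, as the 8086 does
    return address

print(hex(real_mode_address(0x1234, 0x0010)))         # 0x12350
print(hex(real_mode_address(0xFFFF, 0x0010)))         # 0x0 (wrapped around)
print(hex(real_mode_address(0xFFFF, 0x0010, False)))  # 0x100000
```

One consequence of this scheme is that many segment:offset pairs name the same byte; for example, 0x1000:0x0000 and 0x0FFF:0x0010 both give 0x10000.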

Intel 80286

In 1985, Intel introduced its first 32-bit microprocessor, the 80386, which was backward compatible with all of the company's previous processors. It was much more powerful than its predecessors, had a 32-bit architecture and could directly address up to 4 GB of RAM. The 386 supported a new operating mode, virtual 8086 mode, which not only ran programs written for the 8086 more efficiently but also allowed several such programs to run in parallel. Another important innovation, support for paged organization of RAM, made it possible to have a virtual memory space of up to 4 TB.

Intel 80386

The 386 was the first microprocessor to use parallel processing. The following were carried out simultaneously: access to memory and I/O devices, queuing of instructions for execution, instruction decoding, translation of linear addresses into physical ones, and page address translation (information about the 32 most frequently used pages was kept in a special cache memory).
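
Page translation on the 386 splits a 32-bit linear address into a page directory index (bits 31..22), a page table index (bits 21..12) and a 12-bit offset within a 4 KB page, and keeps recent translations in a small cache so the tables need not be walked every time. The sketch below models this with nested dictionaries and a 32-entry cache. The dictionary layout, the `PagingUnit` class and the least-recently-used replacement are assumptions for illustration; the real hardware cache was organized differently.

```python
from collections import OrderedDict

PAGE_SHIFT = 12   # 4 KB pages: the low 12 bits are the offset within a page
TLB_ENTRIES = 32  # the lecture's figure for cached page translations

class PagingUnit:
    """Simplified 386-style two-level translation of 32-bit linear addresses."""

    def __init__(self, page_directory, tlb_entries=TLB_ENTRIES):
        # Hypothetical layout: {directory_index: {table_index: frame_number}}
        self.page_directory = page_directory
        self.tlb = OrderedDict()  # virtual page number -> physical frame number
        self.tlb_entries = tlb_entries
        self.hits = 0
        self.misses = 0

    def translate(self, linear: int) -> int:
        page = linear >> PAGE_SHIFT  # upper 20 bits: virtual page number
        offset = linear & 0xFFF      # lower 12 bits: offset within the page
        if page in self.tlb:
            self.hits += 1
            self.tlb.move_to_end(page)  # mark as most recently used
            frame = self.tlb[page]
        else:
            self.misses += 1
            dir_index = (linear >> 22) & 0x3FF    # bits 31..22
            table_index = (linear >> 12) & 0x3FF  # bits 21..12
            try:
                frame = self.page_directory[dir_index][table_index]
            except KeyError:
                raise LookupError(f"page fault at {linear:#010x}") from None
            self.tlb[page] = frame
            if len(self.tlb) > self.tlb_entries:
                self.tlb.popitem(last=False)  # evict the least recently used entry
        return (frame << PAGE_SHIFT) | offset

mmu = PagingUnit({0: {1: 0x200}})
print(hex(mmu.translate(0x00001234)))  # 0x200234 (miss: walks the tables)
print(hex(mmu.translate(0x00001FFF)))  # 0x200fff (hit: same page, cached)
print(mmu.hits, mmu.misses)            # 1 1
```

The second access touches the same 4 KB page, so its translation comes straight from the cache; this is why caching even a few dozen page translations pays off for programs with good locality.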

Intel 80486

Soon after the 386 came the 486. Its architecture developed the ideas of parallel processing further. The instruction decode and execution unit was organized as a five-stage pipeline in which up to 5 instructions could be at different stages of execution. A first-level cache holding frequently used code and data was placed on the chip. In addition, a second-level cache of up to 512 KB appeared. It became possible to build multiprocessor configurations, and new instructions were added to the instruction set. All these innovations, together with a significant increase in clock frequency (up to 133 MHz), considerably increased program execution speed.
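
Why a five-stage pipeline holds up to five instructions at once can be seen by tabulating which instruction occupies each stage on each clock. This is a minimal sketch of an ideal pipeline, assuming one instruction enters per clock and no stalls; the stage names (prefetch, two decode stages, execute, write-back) follow a common description of the 486 and are used only as labels.

```python
STAGES = ["PF", "D1", "D2", "EX", "WB"]  # prefetch, decode 1, decode 2, execute, write-back

def pipeline_schedule(n_instructions, stages=STAGES):
    """Map each clock cycle to {stage: instruction index} for an ideal pipeline.

    Assumes one instruction enters per clock and there are no stalls, so
    instruction i occupies stage s during cycle i + s.
    """
    if n_instructions == 0:
        return []
    k = len(stages)
    schedule = []
    for cycle in range(k + n_instructions - 1):
        row = {}
        for s, name in enumerate(stages):
            instr = cycle - s
            if 0 <= instr < n_instructions:
                row[name] = instr
        schedule.append(row)
    return schedule

for cycle, row in enumerate(pipeline_schedule(6)):
    print(cycle, row)
```

From cycle 4 onward every stage is busy with a different instruction. In this idealized model, n instructions finish in 5 + n - 1 cycles: 100 instructions take 104 cycles instead of the 500 needed if each instruction had to pass through all five stages before the next one started. Real pipelines lose some of this gain to stalls.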

Intel began producing Pentium microprocessors in 1993. Their debut was clouded by an error in the floating-point unit. The error was quickly fixed, but distrust of these microprocessors lingered for some time.

Intel Pentium

The Pentium continued to develop the ideas of parallel processing. A second pipeline was added to the instruction decode and execution unit, so the two pipelines (called u and v) together could execute two instructions per clock. The internal cache was doubled, to 8 KB for code and 8 KB for data. The processor also became more intelligent: branch prediction was added, which significantly improved the efficiency of executing nonlinear (branching) algorithms. Although the system architecture was still 32-bit, 128- and 256-bit data buses began to be used inside the microprocessor. The external data bus was widened to 64 bits. Technologies for multiprocessor information processing continued to develop.
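
The text does not describe how branch prediction works. A common textbook scheme, shown here only as an illustration and not as a description of the Pentium's exact design, keeps a 2-bit saturating counter per branch: states 0-1 predict "not taken", states 2-3 predict "taken", and a single surprise does not flip a well-established prediction. The class name, initial state and example branch address are assumptions.

```python
class TwoBitPredictor:
    """Textbook 2-bit saturating-counter branch predictor.

    Counter states 0-1 predict 'not taken', states 2-3 predict 'taken'.
    """

    def __init__(self, initial_state=2):
        self.counters = {}  # branch address -> counter in 0..3
        self.initial_state = initial_state

    def predict(self, address):
        return self.counters.get(address, self.initial_state) >= 2

    def update(self, address, taken):
        c = self.counters.get(address, self.initial_state)
        # Move toward 3 on a taken branch, toward 0 otherwise, saturating at the ends.
        self.counters[address] = min(c + 1, 3) if taken else max(c - 1, 0)

def accuracy(predictor, address, outcomes):
    """Fraction of outcomes predicted correctly, updating after each one."""
    correct = 0
    for taken in outcomes:
        if predictor.predict(address) == taken:
            correct += 1
        predictor.update(address, taken)
    return correct / len(outcomes)

# A loop-closing branch: taken 9 times, then not taken once, repeated 10 times.
outcomes = ([True] * 9 + [False]) * 10
print(accuracy(TwoBitPredictor(), 0x400, outcomes))  # 0.9
```

The predictor misses only the loop exit each time: after the exit the counter drops from 3 to 2, so it still predicts "taken" when the loop starts again.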

The arrival of the Pentium Pro microprocessor divided the market into two sectors: high-performance workstations and cheap home computers. The Pentium Pro implemented the most advanced technology of its time. In particular, a third pipeline was added to the Pentium's two, so the microprocessor could execute up to three instructions per clock cycle.

Intel Pentium II

Moreover, the Pentium Pro supported dynamic instruction execution (Dynamic Execution). Its essence is that three instruction decoders working in parallel break instructions into smaller parts called micro-operations. These micro-operations can then be executed in parallel by five units (two integer, two floating-point and one memory interface unit). At the output, the results are reassembled in the original form and order. The power of the Pentium Pro is complemented by an improved cache organization. Like the Pentium, it has 8 KB of first-level cache, and it also has 256 KB of second-level cache. Thanks to circuit design solutions (the Dual Independent Bus architecture), the second-level cache was placed in the same package as the microprocessor, which greatly improved performance. The Pentium Pro implemented a 36-bit address bus, which made it possible to address up to 64 GB of RAM.

The family of ordinary Pentium processors did not stand still either. Whereas the Pentium Pro achieved parallelism through architectural and circuit-level design, a different path was taken for the new Pentium models: new instructions were added, and the microprocessor's programming model was modified somewhat to support them. These instructions, called MMX instructions (MultiMedia eXtension), made it possible to process several data items of the same type simultaneously.
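
"Processing several data items of the same type simultaneously" means treating one 64-bit MMX register as, for example, eight independent 8-bit lanes. The sketch below models two real MMX byte additions in plain Python: PADDB, which wraps around on overflow, and PADDUSB, which saturates at 255 (useful for pixel brightness). It is a behavioral model only; the helper functions are hypothetical, and on the processor each operation is a single instruction.

```python
def unpack_bytes(reg: int) -> list:
    """Split a 64-bit value into eight unsigned 8-bit lanes (lane 0 = lowest byte)."""
    return [(reg >> (8 * i)) & 0xFF for i in range(8)]

def pack_bytes(lanes) -> int:
    """Combine eight 8-bit lanes back into one 64-bit value."""
    reg = 0
    for i, v in enumerate(lanes):
        reg |= (v & 0xFF) << (8 * i)
    return reg

def paddb(a: int, b: int) -> int:
    """Model of PADDB: add eight byte lanes independently, wrapping on overflow."""
    return pack_bytes([(x + y) & 0xFF for x, y in zip(unpack_bytes(a), unpack_bytes(b))])

def paddusb(a: int, b: int) -> int:
    """Model of PADDUSB: add eight unsigned byte lanes, saturating at 255."""
    return pack_bytes([min(x + y, 255) for x, y in zip(unpack_bytes(a), unpack_bytes(b))])

a = pack_bytes([250, 1, 2, 3, 4, 5, 6, 7])
b = pack_bytes([10] * 8)
print(unpack_bytes(paddb(a, b)))    # [4, 11, 12, 13, 14, 15, 16, 17]
print(unpack_bytes(paddusb(a, b)))  # [255, 11, 12, 13, 14, 15, 16, 17]
```

Only lane 0 overflows: with wraparound 250 + 10 becomes 4, while the saturating version clamps it to 255. The other seven lanes are unaffected, because no carry crosses lane boundaries.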

Intel Pentium III

The next processor to be released, the Pentium II, combined all the technological advances of both lines of development of the Pentium architecture. It also had new design features; in particular, its housing was made using a new packaging technology. The portable computer market was not forgotten either: the processor supports several power-saving modes.

The Pentium III. As usual, it retains all the advances of its predecessors; its main (and perhaps only?!) advantage is 70 new instructions. These instructions complement the MMX group, but for floating-point numbers. A special unit was added to the processor architecture to support them.
