Lecture

Previously, computer performance was increased mainly by raising the processor clock frequency.

This approach is no longer practical because of:

• limits on further miniaturization (downsizing) of components

• heat dissipation (cooling) problems

The way forward is parallel computing.

Computing systems

1. Computers with shared memory (multiprocessor systems)

2. Computers with distributed memory (multicomputer systems)

There are two classes of system architectures:

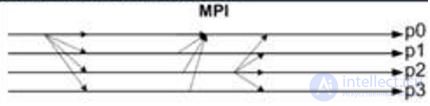

Distributed memory, or massively parallel processing (MPP) systems (programmed, for example, with MPI):

• They usually consist of a set of compute nodes.

• Each node contains one or more processors, local memory, a network adapter, hard drives, and I/O devices.

• There may also be specialized control nodes and input/output nodes.

• The nodes are connected through a communication medium.
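The message-passing model described above can be illustrated with a small simulation. This is a sketch under stated assumptions: Python threads stand in for compute nodes, each node keeps its data in a private local list, and `queue.Queue` objects stand in for the communication medium. The function name `distributed_sum` and the scatter/reduce pattern are illustrative choices, not part of any library; a real MPI program would use calls such as `MPI_Scatter` and `MPI_Reduce` instead.

```python
import queue
import threading


def distributed_sum(data, num_nodes):
    """Simulated scatter/reduce: nodes share no memory, only messages."""
    inboxes = [queue.Queue() for _ in range(num_nodes)]  # one inbox per node
    root_inbox = queue.Queue()  # messages addressed to node 0 (the root)
    result = {}

    def node(rank):
        # Copy the received chunk into this node's private "local memory".
        local_memory = list(inboxes[rank].get())
        partial = sum(local_memory)
        if rank == 0:
            # The root collects one partial sum from every other node.
            total = partial
            for _ in range(num_nodes - 1):
                total += root_inbox.get()
            result["total"] = total
        else:
            # Non-root nodes send their partial sum to the root as a message.
            root_inbox.put(partial)

    # Scatter: send each node a strided chunk of the input before starting.
    for rank in range(num_nodes):
        inboxes[rank].put(data[rank::num_nodes])

    threads = [threading.Thread(target=node, args=(r,)) for r in range(num_nodes)]
    for th in threads:
        th.start()
    for th in threads:
        th.join()
    return result["total"]
```

For example, `distributed_sum(list(range(1, 101)), 4)` returns 5050. The point of the design is that no node ever reads another node's list; every exchange goes through an explicit message.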

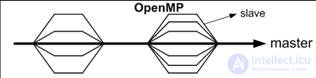

Shared memory, or symmetric multiprocessing (SMP) systems (programmed, for example, with OpenMP):

• They consist of several homogeneous processors and a shared memory array.

• Each processor has direct access to any memory cell.

• Memory access speed is the same for all processors.

• Multi-core processors are a typical example of SMP.
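By contrast with the message-passing case, in a shared-memory system every worker can read the input and write its result directly into common memory. The sketch below assumes Python threads as the "processors" and a shared list as the memory array; it mimics the idea of an OpenMP-style parallel reduction but is not OpenMP. The function name `shared_memory_sum` is hypothetical, and in standard CPython builds the GIL means the threads do not actually compute in parallel; the example only shows the shared-address-space programming model.

```python
import threading


def shared_memory_sum(data, num_threads):
    """All threads read `data` and write into `partial`: one shared memory."""
    partial = [0] * num_threads  # shared array, one slot per thread

    def worker(tid):
        # Each thread reads its strided share of the shared input directly
        # and writes to its own slot, so no lock is needed.
        partial[tid] = sum(data[tid::num_threads])

    threads = [threading.Thread(target=worker, args=(t,)) for t in range(num_threads)]
    for th in threads:
        th.start()
    for th in threads:
        th.join()
    return sum(partial)  # combine the per-thread results
```

For example, `shared_memory_sum(list(range(1, 101)), 4)` returns 5050. Giving each thread its own slot avoids data races without explicit locking.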

Multiprocessor systems

The first class is shared-memory computers. Systems built on this principle are sometimes called multiprocessor systems, or simply multiprocessors. They contain several peer processors with equal access to a single memory, which all of them share. All processors work in a single address space: if one processor writes the value 79 to the cell at address 1024, then another processor that reads the cell at address 1024 will get the value 79.
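The "79 at address 1024" example can be reproduced with two threads that index the same list. This is a minimal sketch, assuming a Python list models the shared memory; the memory size `MEMORY_SIZE` and the function name `shared_write_then_read` are hypothetical. A `threading.Event` makes the reader wait until the write has happened, so the result does not depend on thread scheduling.

```python
import threading

MEMORY_SIZE = 2048  # hypothetical memory size, large enough for address 1024


def shared_write_then_read(address=1024, value=79):
    memory = [0] * MEMORY_SIZE  # a single memory shared by both "processors"
    written = threading.Event()
    seen = {}

    def writer():
        memory[address] = value  # processor 1 writes to the shared cell
        written.set()  # signal that the write is complete

    def reader():
        written.wait()  # read only after the write, to avoid a race
        seen["value"] = memory[address]  # processor 2 reads the same cell

    threads = [threading.Thread(target=reader), threading.Thread(target=writer)]
    for th in threads:
        th.start()
    for th in threads:
        th.join()
    return seen["value"]
```

Calling `shared_write_then_read()` returns 79: both threads see one address space.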

Parallel computers with shared memory

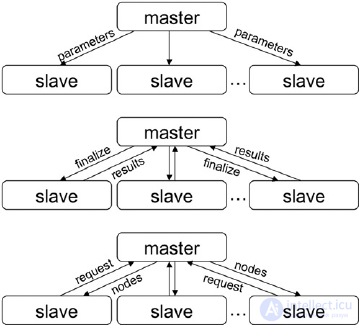

Multicomputer systems

The second class is computers with distributed memory, which, by analogy with the previous class, are sometimes called multicomputer systems. Each computing node is a full-fledged computer with its own processor, memory, I/O subsystem, and operating system. In this case, if one processor writes the value 79 to address 1024, this does not affect what another processor reads at the same address, because each of them works in its own address space.
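The same experiment on a distributed-memory machine gives a different result. In this sketch, a hypothetical `Node` class owns a private list as its memory, and a plain copy of the value stands in for a network message; both are illustrative assumptions rather than a real communication API.

```python
class Node:
    """Hypothetical model of a computing node with its own private memory."""

    def __init__(self, memory_size=2048):
        self.memory = [0] * memory_size


def demo(address=1024, value=79):
    a, b = Node(), Node()
    a.memory[address] = value  # node A writes to ITS address 1024
    before = b.memory[address]  # node B's own cell at 1024 is untouched
    message = a.memory[address]  # "send": copy the value out of A's memory
    b.memory[address] = message  # "receive": B stores it in its own memory
    return before, b.memory[address]
```

Calling `demo()` returns `(0, 79)`: node B sees the value only after it has been explicitly sent.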

Parallel computers with distributed memory

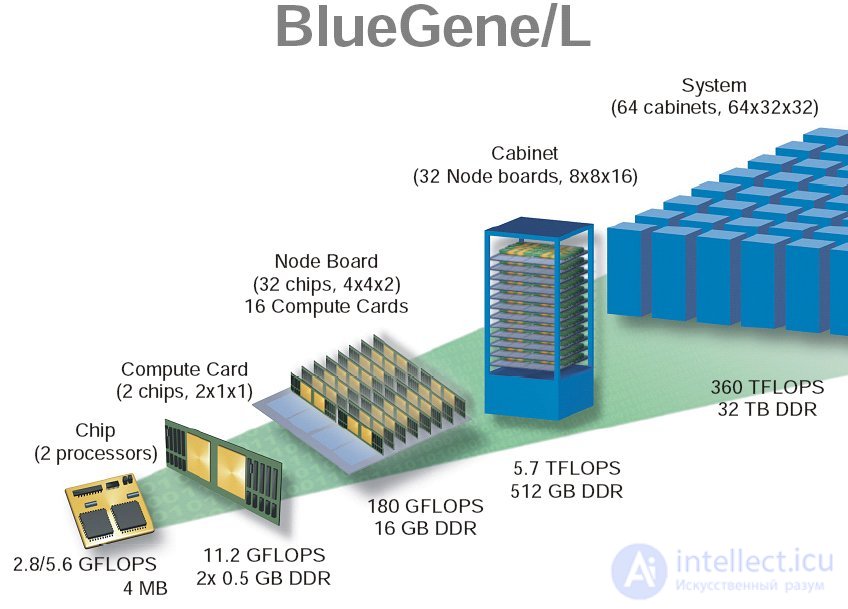

Blue Gene/L

Location: Lawrence Livermore National Laboratory. Total number of processors: 65,536. The system consists of 64 racks. Performance: 280.6 teraflops. The laboratory has about 8,000 employees, more than 3,500 of whom are scientists and engineers.

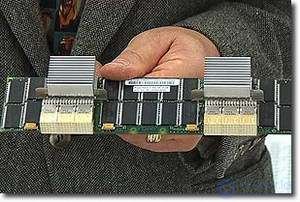

The machine has a cellular architecture, that is, it is assembled from identical blocks, which prevents bottlenecks from appearing as the system is expanded. The basic Blue Gene/L module, the "compute card", holds two nodes. Compute cards are grouped 16 to a node card, and 16 node cards are mounted on a midplane measuring 43.18 × 60.96 × 86.36 cm, so each midplane combines 512 nodes.
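The node count per midplane follows directly from the packaging hierarchy just described. The short calculation below only multiplies the figures given in the text; the constant names are illustrative.

```python
# Blue Gene/L packaging hierarchy, using the figures from the text.
NODES_PER_COMPUTE_CARD = 2
COMPUTE_CARDS_PER_NODE_CARD = 16
NODE_CARDS_PER_MIDPLANE = 16

nodes_per_node_card = NODES_PER_COMPUTE_CARD * COMPUTE_CARDS_PER_NODE_CARD  # 32
nodes_per_midplane = nodes_per_node_card * NODE_CARDS_PER_MIDPLANE  # 512
print(nodes_per_midplane)  # 512
```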

Two midplanes are mounted in each rack, which therefore holds 1,024 nodes. Each node contains two central processors and 4 MB of dedicated memory. The PowerPC 440 processor can perform four floating-point operations per clock cycle, which at the system's clock frequency corresponds to a peak performance of 1.4 teraflops per midplane, assuming one processor is counted per node.
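The rack and peak-performance figures can be checked with the same kind of arithmetic. The text does not state the clock frequency, so this sketch assumes 700 MHz, the value commonly cited for Blue Gene/L; all other inputs come from the text, and one compute processor is counted per node, as in the text.

```python
NODES_PER_MIDPLANE = 512  # from the packaging hierarchy
MIDPLANES_PER_RACK = 2
RACKS = 64
FLOPS_PER_CYCLE = 4  # PowerPC 440 floating-point operations per clock
CLOCK_HZ = 700e6  # ASSUMPTION: 700 MHz, not stated in the text

nodes_per_rack = NODES_PER_MIDPLANE * MIDPLANES_PER_RACK  # 1024
total_nodes = nodes_per_rack * RACKS  # 65536

# Peak per midplane, counting one compute processor per node.
peak_per_midplane = NODES_PER_MIDPLANE * FLOPS_PER_CYCLE * CLOCK_HZ
print(f"{peak_per_midplane / 1e12:.2f} TFLOPS")  # 1.43 TFLOPS
```

Under this assumption the result, about 1.43 teraflops, agrees with the 1.4 teraflops quoted above, and 64 racks of 1,024 nodes give the 65,536 figure stated for the whole machine.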

However, each node also carries a second processor, identical to the first, which is dedicated to communication functions.
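The idea of dedicating one processor to communication can be illustrated by analogy with two threads: one only computes and hands results off, the other only "transmits" them, so the compute side never waits on the network. This is a loose sketch, not how Blue Gene/L is programmed; the function name `compute_with_comm_offload`, the squaring workload, and the `None` end-of-stream marker are hypothetical choices.

```python
import queue
import threading


def compute_with_comm_offload(values):
    outbox = queue.Queue()  # hand-off point between the two "processors"
    sent = []  # stands in for the network

    def compute():
        for v in values:
            outbox.put(v * v)  # compute a result and hand it off immediately
        outbox.put(None)  # signal that no more results are coming

    def communicate():
        while True:
            item = outbox.get()
            if item is None:
                break
            sent.append(item)  # "transmit" the result

    workers = [threading.Thread(target=compute), threading.Thread(target=communicate)]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    return sent
```

For example, `compute_with_comm_offload([1, 2, 3])` returns `[1, 4, 9]`.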
