Lecture

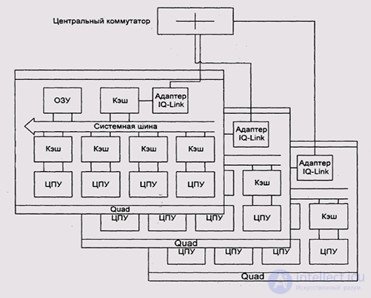

Massively parallel processing (MPP) is a class of parallel computing architectures whose distinguishing feature is physically separate memory. An MPP system is built from separate modules, each containing a processor, a local bank of main memory, communication processors or network adapters, and sometimes hard drives and/or other input/output devices. Only the processors in a module can access that module's memory bank. The modules are connected by dedicated communication channels.

Operating systems run on MPP machines in one of two ways. In the first, a full-fledged operating system runs only on the controlling machine (the front end), while each module runs a heavily stripped-down version of the OS that supports only the branch of the parallel application placed on it. In the second, a full-fledged UNIX-like OS, installed separately, runs on every module.
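The module structure described above can be illustrated with a small model. This is only a sketch under stated assumptions: the names `Module`, `Interconnect`, `send` and `receive` are hypothetical and chosen for illustration, and the whole thing runs in a single Python process, so it models the access rules of an MPP machine rather than its hardware.

```python
from collections import deque


class Module:
    """One MPP module: a processor with a private bank of local memory."""

    def __init__(self, rank):
        self.rank = rank
        self._local_memory = {}  # reachable only by this module's processor
        self.inbox = deque()     # messages delivered by the interconnect

    def store(self, key, value):
        self._local_memory[key] = value

    def load(self, key):
        return self._local_memory[key]


class Interconnect:
    """Hypothetical model of the communication channels between modules."""

    def __init__(self, modules):
        self._modules = {m.rank: m for m in modules}

    def send(self, src, dst, payload):
        # The payload is copied into the destination module's inbox;
        # the sender never touches the destination's local memory.
        self._modules[dst].inbox.append((src, payload))


def receive(module):
    """Return the oldest pending (sender, payload) pair, or None if empty."""
    return module.inbox.popleft() if module.inbox else None


modules = [Module(rank) for rank in range(4)]
net = Interconnect(modules)

modules[0].store("x", 42)
# Module 1 has no way to read module 0's memory, so module 0 sends the value.
net.send(src=0, dst=1, payload=modules[0].load("x"))
sender, value = receive(modules[1])
modules[1].store("x_copy", value)
```

The point of the model is that the only path from one module's data to another module is an explicit `send` followed by a `receive`.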

Architecture benefits

The main advantage of systems with separate memory is good scalability: unlike in SMP systems, each processor accesses only its own local memory, so the processors do not need to be synchronized clock cycle by clock cycle. Nearly all performance records at the time of writing were set on machines of this architecture with several thousand processors, such as ASCI Red and ASCI Blue Pacific.

Architecture flaws

The lack of shared memory markedly slows interprocessor exchange, since there is no common medium for storing data to be exchanged between processors. Special programming techniques are required to implement message passing between processors. Each processor can use only the limited capacity of its local memory bank. Because of these architectural drawbacks, considerable effort is needed to make full use of system resources, and this is what makes software for massively parallel systems with separate memory expensive.
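To give a feel for the message-passing style of programming mentioned above, here is a minimal sketch of a parallel sum in which every piece of data moves between workers as an explicit message. It is an emulation under assumptions: threads and `queue.Queue` objects stand in for modules and communication channels, and the names `worker` and `parallel_sum` are hypothetical. On a real MPP machine this role is usually played by a message-passing library such as MPI.

```python
import queue
import threading


def worker(rank, inbox, root_inbox):
    """Emulated module: receive a chunk, reduce it locally, send the result."""
    chunk = inbox.get()              # receive this worker's share of the data
    partial = sum(chunk)             # compute using local data only
    root_inbox.put((rank, partial))  # send the partial result to the root


def parallel_sum(data, n_workers=4):
    inboxes = [queue.Queue() for _ in range(n_workers)]
    root_inbox = queue.Queue()
    threads = [
        threading.Thread(target=worker, args=(rank, inboxes[rank], root_inbox))
        for rank in range(n_workers)
    ]
    for t in threads:
        t.start()

    # Scatter: each worker gets its own slice as a message.
    for rank in range(n_workers):
        inboxes[rank].put(data[rank::n_workers])

    # Gather: collect one partial sum per worker, in arrival order.
    total = 0
    for _ in range(n_workers):
        _rank, partial = root_inbox.get()
        total += partial

    for t in threads:
        t.join()
    return total


result = parallel_sum(list(range(1, 101)))
```

Even in this toy form, the scatter and gather steps have to be written out by hand, which hints at why programming for such systems takes more effort than programming for shared memory.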


Highly loaded projects. Theory of parallel computing. Supercomputers. Distributed systems
