Lecture

Test design is the stage of the software testing process at which test cases are designed and created in accordance with previously defined quality criteria and testing objectives.

Test design [ISTQB Glossary of terms]: the process of transforming general testing objectives into tangible test conditions and test cases.

Simply put, the task of test analysts and test designers is to use various testing strategies and test design techniques to create a set of test cases that provides the best possible test coverage of the application under test. On most projects, however, these roles are not assigned separately, and the work is entrusted to ordinary testers. This does not always have a positive effect on the quality of the tests, of the testing, and consequently of the software (the final product).

Test coverage is one of the metrics for assessing test quality; it expresses how densely the tests cover the requirements or the executable code.

If we consider testing as "checking the correspondence between the actual and expected behavior of the program, performed on a finite set of tests", then it is this finite test suite that determines the test coverage.

The higher the required level of test coverage, the more tests must be selected to verify the requirements or the executable code under test.

The complexity of modern software and infrastructure has made testing with 100% test coverage an impossible task. Therefore, to develop a test suite that provides a reasonably high level of coverage, you can use special tools or test design techniques.

There are three main approaches to assessing and measuring test coverage:

1. Requirements coverage
2. Code coverage
3. Control flow testing

Differences:

The requirements coverage method focuses on checking that the set of tests corresponds to the product requirements; code coverage analysis checks how completely the tests exercise the developed part of the product (the source code); and control flow analysis tracks the paths through the graph or model of execution of the functions under test (the Control Flow Graph).

Limitations:

The code coverage method will not reveal unimplemented requirements, because it works not with the final product but with the existing source code.

The requirements coverage method may leave some parts of the code untested, because it does not take the final implementation into account.

Test coverage with respect to requirements is calculated using the formula:

Tcov = (Lcov / Ltotal) * 100%

Where:

Tcov - test coverage

Lcov - the number of requirements checked by test cases

Ltotal - the total number of requirements

To measure requirements coverage, you need to analyze the product requirements and break them down into individual items. Each item is then linked to the test cases that check it. The set of these links forms the traceability matrix. By following the links, you can see exactly which requirements a given test case checks.

Tests that are not linked to any requirement are meaningless. Requirements that are not linked to any test are blank spots: after running all the created test cases, you cannot say whether such a requirement is implemented in the product or not.
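A minimal sketch of the traceability matrix and the formula Tcov = (Lcov / Ltotal) * 100%, assuming the matrix is stored as a mapping from requirement IDs to the IDs of the test cases that check them. The IDs (REQ-1, TC-1, and so on) are hypothetical; the helpers also list the blank spots (requirements without tests) and the test cases that are not linked to any requirement.

```python
from typing import Dict, List, Set

# Traceability matrix: requirement ID -> IDs of the test cases that check it.
TraceMatrix = Dict[str, Set[str]]

def requirements_coverage(requirements: List[str], trace: TraceMatrix) -> float:
    """Tcov = (Lcov / Ltotal) * 100%."""
    if not requirements:
        raise ValueError("no requirements to cover")
    covered = [r for r in requirements if trace.get(r)]  # Lcov: requirements with tests
    return len(covered) / len(requirements) * 100

def blank_spots(requirements: List[str], trace: TraceMatrix) -> List[str]:
    """Requirements that no test case checks."""
    return [r for r in requirements if not trace.get(r)]

def unlinked_tests(tests: List[str], trace: TraceMatrix) -> List[str]:
    """Test cases that are not linked to any requirement."""
    linked = set().union(*trace.values())
    return [t for t in tests if t not in linked]

# Hypothetical example data
REQUIREMENTS = ["REQ-1", "REQ-2", "REQ-3", "REQ-4"]
TESTS = ["TC-1", "TC-2", "TC-3", "TC-4"]
TRACE: TraceMatrix = {"REQ-1": {"TC-1", "TC-2"}, "REQ-2": {"TC-3"}, "REQ-3": set()}

print(requirements_coverage(REQUIREMENTS, TRACE))  # 50.0
print(blank_spots(REQUIREMENTS, TRACE))            # ['REQ-3', 'REQ-4']
print(unlinked_tests(TESTS, TRACE))                # ['TC-4']
```

Here two of the four requirements have at least one linked test, so Tcov is 50%; REQ-3 and REQ-4 are the blank spots, and TC-4 checks nothing that the matrix knows about.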

To optimize test coverage in requirements-based testing, the best approach is to use standard test design techniques. An example of developing test cases from existing requirements is discussed in the section "Practical application of test design techniques in the development of test cases".

Test coverage with respect to the executable code is calculated using the formula:

Tcov = (Ltc / Lcode) * 100%

Where:

Tcov - test coverage

Ltc - the number of lines of code covered by tests

Lcode - the total number of lines of code.

There are tools available today (for example, Clover) that let you analyze which lines were executed during testing. This makes it possible to increase coverage significantly by adding new tests for specific cases, and also to get rid of duplicate tests. Such code analysis and the subsequent coverage optimization are fairly easy to carry out as part of white-box testing at the unit, integration, and system levels. In black-box testing, however, the task becomes rather expensive, because installing and configuring the tools and analyzing their results takes a lot of time and resources from both testers and developers.
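Tools such as Clover record which lines run during testing. The sketch below imitates that idea on a tiny scale in Python: it uses the standard `sys.settrace` hook to record the executed lines of one function (Ltc) and `dis.findlinestarts` to approximate the lines that carry code (Lcode), then applies Tcov = (Ltc / Lcode) * 100%. It is only an illustration under those assumptions; `sign`, `LineRecorder`, and the other names are hypothetical, and real coverage tools handle many things (multiple files, nested functions, branches) that this ignores.

```python
import dis
import sys
from typing import Callable, Set

def executable_lines(func: Callable) -> Set[int]:
    """Approximate Lcode: body lines of `func` that carry bytecode."""
    code = func.__code__
    lines = {line for _, line in dis.findlinestarts(code) if line is not None}
    lines.discard(code.co_firstlineno)  # the `def` line itself is not body code
    return lines

class LineRecorder:
    """Records which lines of one target function run inside the `with` block."""

    def __init__(self, func: Callable) -> None:
        self.code = func.__code__
        self.executed: Set[int] = set()

    def _global_trace(self, frame, event, arg):
        # Attach the per-line tracer only to frames of the target function.
        if event == "call" and frame.f_code is self.code:
            return self._local_trace
        return None

    def _local_trace(self, frame, event, arg):
        if event == "line":
            self.executed.add(frame.f_lineno)
        return self._local_trace

    def __enter__(self) -> "LineRecorder":
        self._previous = sys.gettrace()
        sys.settrace(self._global_trace)
        return self

    def __exit__(self, *exc) -> bool:
        sys.settrace(self._previous)  # restore whatever tracer was active before
        return False

def line_coverage(func: Callable, executed: Set[int]) -> float:
    """Tcov = (Ltc / Lcode) * 100%."""
    total = executable_lines(func)
    return len(executed & total) / len(total) * 100

def sign(x: int) -> str:  # hypothetical code under test
    if x < 0:
        return "negative"
    return "non-negative"

with LineRecorder(sign) as rec:
    sign(5)
print(line_coverage(sign, rec.executed))  # below 100: the "negative" line never ran

with LineRecorder(sign) as rec:
    sign(5)
    sign(-5)
print(line_coverage(sign, rec.executed))  # 100.0
```

Adding the `sign(-5)` test is exactly the kind of targeted addition the paragraph describes: the recorder shows which line was missed, and one new test closes the gap.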

Control flow testing is a white-box testing technique based on identifying the execution paths through a program module's code and creating test cases that cover those paths [1].
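To make the idea concrete, here is a minimal Python sketch that stores a control flow graph as an adjacency list and enumerates its entry-to-exit paths, each of which would become one test case. The graph models a hypothetical fragment with one `if` and one `while` loop. Because the loop's back edge makes the number of paths infinite, the `max_visits` parameter (an assumption of this sketch) bounds how often a node may be revisited, which is the kind of reduction that the coverage levels table lists as Level 6.

```python
from typing import Dict, List

# Hypothetical control flow graph for the fragment
#     if x < 0: x = -x
#     while x > 10: x -= 10
#     return x
# Each key is a node; its list holds the nodes control can flow to next.
CFG: Dict[str, List[str]] = {
    "entry": ["x < 0?"],
    "x < 0?": ["x = -x", "x > 10?"],
    "x = -x": ["x > 10?"],
    "x > 10?": ["x -= 10", "return x"],
    "x -= 10": ["x > 10?"],  # back edge: the loop
    "return x": [],
}

def enumerate_paths(cfg: Dict[str, List[str]], start: str, end: str,
                    max_visits: int = 1) -> List[List[str]]:
    """All start-to-end paths in which no node appears more than max_visits times."""
    paths: List[List[str]] = []
    visits: Dict[str, int] = {}
    path: List[str] = []

    def walk(node: str) -> None:
        if visits.get(node, 0) >= max_visits:
            return  # bound the loop so the number of paths stays finite
        visits[node] = visits.get(node, 0) + 1
        path.append(node)
        if node == end:
            paths.append(list(path))
        else:
            for nxt in cfg[node]:
                walk(nxt)
        path.pop()  # backtrack
        visits[node] -= 1

    walk(start)
    return paths

for limit in (1, 2, 3):
    print(limit, len(enumerate_paths(CFG, "entry", "return x", limit)))  # 2, 4, 6 paths
```

With `max_visits=1` the loop body is never entered, so only the two branches of the `if` remain; each extra allowed visit adds one more loop iteration to both branches.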

Control flow testing is built on control flow graphs, whose main building blocks are:

For control flow testing, the following levels of test coverage are defined:

| Level | Name | Short description |
|---|---|---|
| Level 0 | - | “Test whatever you test, users will test the rest.” |
| Level 1 | Statement coverage | Each statement is executed at least once. |
| Level 2 | Decision coverage [2] / Branch coverage | Each branch of every decision (branching node) is taken at least once. |
| Level 3 | Condition coverage | Each condition is evaluated to both TRUE and FALSE at least once. |
| Level 4 | Decision/condition coverage | Test cases are created for each condition and each decision. |
| Level 5 | Multiple condition coverage | Decision, condition, and decision/condition coverage are all achieved (Levels 2, 3 and 4). |
| Level 6 | “Covering an infinite number of paths” | When loops make the number of paths infinite, the paths may be substantially reduced by limiting the number of loop iterations, which reduces the number of test cases. |
| Level 7 | Path coverage | All paths must be tested. |

Table 1. Test Coverage Levels

Using this table, you can plan the required level of test coverage and assess the current one.
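The difference between Levels 2 to 5 is easiest to see on a single decision with two conditions. The Python sketch below takes a set of test inputs for the hypothetical decision `a and b` and reports which of those levels the inputs reach. It assumes both condition values are recorded for every test (ignoring short-circuit evaluation) and reads Level 5 in its usual sense of exercising every combination of condition values, which implies Levels 2 to 4.

```python
from itertools import product
from typing import Dict, List, Tuple

Test = Tuple[bool, bool]  # values of conditions a and b for the decision `a and b`

def coverage_levels(tests: List[Test]) -> Dict[str, bool]:
    both = {True, False}
    decisions = {a and b for a, b in tests}       # outcomes of the whole decision
    a_values = {a for a, _ in tests}              # outcomes of condition a
    b_values = {b for _, b in tests}              # outcomes of condition b
    decision = decisions == both
    condition = a_values == both and b_values == both
    return {
        "Level 2 (decision)": decision,
        "Level 3 (condition)": condition,
        "Level 4 (decision/condition)": decision and condition,
        "Level 5 (multiple condition)": set(tests) == set(product([True, False], repeat=2)),
    }

examples = {
    "decision only": [(True, True), (False, True)],
    "condition only": [(True, False), (False, True)],
    "decision/condition": [(True, True), (False, False)],
    "all combinations": list(product([True, False], repeat=2)),
}
for name, tests in examples.items():
    print(name, coverage_levels(tests))
```

The "condition only" set shows why Level 3 does not replace Level 2: each condition takes both values, yet the decision itself is never TRUE.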

Literature

[1] Lee Copeland. A Practitioner's Guide to Software Test Design.

[2] Standard Glossary of Terms Used in Software Testing, Version 2.0 (December 4, 2008). Prepared by the Glossary Working Party, International Software Testing Qualifications Board.

Many people test and write test cases, but few use dedicated test design techniques. Gradually, as they gain experience, they realize that they keep doing the same work, and that this work follows specific rules. Then they discover that all these rules have already been described.

I suggest you read a brief description of the most common test design techniques:

Many people know what test design is, but not everyone knows how to apply it. To clarify things a little, we propose a step-by-step approach to developing test cases using the simplest test design techniques:

We propose the following plan for developing test cases:

Below, we walk through the proposed approach using an example.

Example:

Test the functionality of an application form whose requirements are given in the following table:

|

Element |

Item type |

Requirements |

|---|---|---|

|

Type of treatment |

combobox |

Data set:

* - does not affect the process of performing the application receipt operation. |

|

The contact person |

editbox |

1. Required 2. Maximum 25 characters 3. The use of numbers and special characters is not allowed |

|

contact number |

editbox |

|

|

Message |

text area |

1. Required 2. Maximum length 1024 characters |

|

To send |

button |

Condition: 1. Default - Inactive (Disabled) 2. After filling in the required fields becomes active (Enabled)

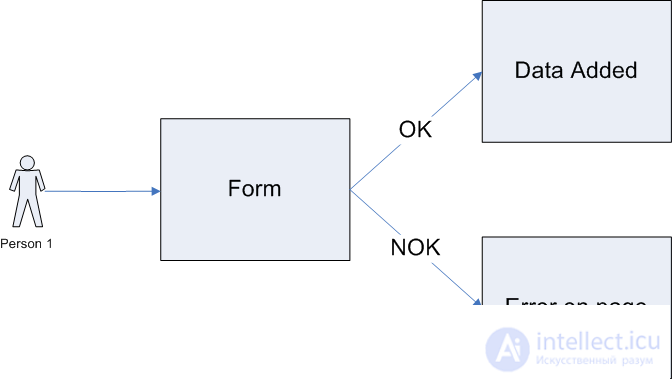

Actions after pressing 1. If the entered data is correct - sending a message 2. If the entered data is NOT correct - validation message

|
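Before designing test data, it can help to restate the requirements as checkable rules. The following Python sketch does that for the two fields with explicit rules and for the Send button state. Everything in it is an assumption: the function names are hypothetical, only Contact person and Message are treated as required (they are the only fields marked "Required"), Contact phone is left out because the table gives no rules for it, and "digits and special characters are not allowed" is read as "only letters and spaces".

```python
from typing import Dict, List

CONTACT_PERSON_MAX = 25  # "Maximum 25 characters"
MESSAGE_MAX = 1024       # "Maximum length 1024 characters"
REQUIRED_FIELDS = ("Contact person", "Message")  # the fields marked "Required"

def contact_person_errors(value: str) -> List[str]:
    """Requirement violations for Contact person (an empty list means OK)."""
    errors = []
    if not value:
        errors.append("required")
    if len(value) > CONTACT_PERSON_MAX:
        errors.append("too long")
    # Assumption: only letters (any alphabet) and spaces are allowed
    if any(not (ch.isalpha() or ch == " ") for ch in value):
        errors.append("digits or special characters")
    return errors

def message_errors(value: str) -> List[str]:
    """Requirement violations for Message (an empty list means OK)."""
    errors = []
    if not value:
        errors.append("required")
    if len(value) > MESSAGE_MAX:
        errors.append("too long")
    return errors

def send_enabled(form: Dict[str, str]) -> bool:
    """Send is disabled by default and enabled once every required field is filled in."""
    return all(form.get(field) for field in REQUIRED_FIELDS)

print(contact_person_errors(""))                           # ['required']
print(contact_person_errors("1234567890123456789012345"))  # ['digits or special characters']
print(send_enabled({"Contact person": "a", "Message": ""}))  # False
```

Note that `send_enabled` only checks that the required fields are non-empty; whether the values are correct is decided after the button is pressed, as the requirements for the Send button describe.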

Use case (there may not always be one):

We read and analyze the requirements and note the following nuances:

Based on the field requirements and using test design techniques, we start defining a set of test data:

Note:

The amount of test data after full generation will be quite large, even when special test design techniques are used. Therefore, we limit ourselves to only a few values for each field, since the purpose of this article is to show the process of creating test cases, not the process of obtaining specific test data.

The Contact phone field consists of several parts: a country code, an operator code, and a telephone number (which can be composite and separated by hyphens). To determine a correct set of test data, each component must be considered separately. Applying BVA and EP gives the values listed for this field in the data table below.
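The BVA part of this step can be sketched in Python as below. The phone length ranges are not stated in the requirements; they are inferred from the OK/NOK values in the data table (11 to 15 digits after a leading plus, 5 to 10 digits without it). The sketch also simplifies by treating the whole number as one digit string instead of splitting it into country code, operator code, and number, and it does not generate EP classes such as letters or special characters instead of digits. All function names are hypothetical.

```python
from typing import List, Tuple

DIGITS = "1234567890"

def digit_string(length: int) -> str:
    """The digits 1234567890 repeated and cut to the requested length."""
    return (DIGITS * (length // len(DIGITS) + 1))[:length]

def bva_lengths(min_len: int, max_len: int) -> Tuple[List[int], List[int]]:
    """Valid boundaries (min, max) and invalid neighbours (min - 1, max + 1)."""
    return [min_len, max_len], [min_len - 1, max_len + 1]

def phone_boundary_values(prefix: str, min_len: int, max_len: int) -> Tuple[List[str], List[str]]:
    """OK and NOK phone values at the length boundaries of one equivalence class."""
    valid, invalid = bva_lengths(min_len, max_len)
    ok = [prefix + digit_string(n) for n in valid]
    nok = [prefix + digit_string(n) for n in invalid if n >= 0]  # skip negative lengths
    return ok, nok

print(phone_boundary_values("+", 11, 15))
# (['+12345678901', '+123456789012345'], ['+1234567890', '+1234567890123456'])
print(phone_boundary_values("", 5, 10))
# (['12345', '1234567890'], ['1234', '12345678901'])
```

The two calls correspond to the two equivalence classes (with and without the plus), and the generated strings reproduce the boundary rows of the Contact phone section of the data table.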

The resulting data table, to be used later when preparing the test cases:

| Field | OK / NOK | Value | Comment |
|---|---|---|---|
| Request type | OK | Consultation | first on the list |
| | | Site error | last on the list |
| | NOK | | |
| Contact person | OK | ytsukengschschytsukengshshtsytsuke | 25 characters, lower case |
| | | a | 1 character |
| | | YTSUKENGSCHSCHFYVAPRODJYACHSMI | 25 characters, upper case |
| | | ITSUKENGSHSCHZfryproljYaSMI | 25 characters, mixed case |
| | NOK | | empty value |
| | | ytsukengshschytsukengshshtsytsukey | length greater than the maximum (26 characters) |
| | | `@#$%^&;.?,>\|\/№"!()_{}[<~` | special characters (ASCII) |
| | | 1234567890123456789012345 | digits only |
| | | adsadasdasdas dasdasd asasdsads (...) sas | very long string (~1 MB) |
| Contact phone | OK | +12345678901 | with plus, minimum length |
| | | +123456789012345 | with plus, maximum length |
| | | 12345 | without plus, minimum length |
| | | 1234567890 | without plus, maximum length |
| | NOK | | empty value |
| | | +1234567890 | with plus, shorter than the minimum length |
| | | +1234567890123456 | with plus, longer than the maximum length |
| | | 1234 | without plus, shorter than the minimum length |
| | | 12345678901 | without plus, longer than the maximum length |
| | | +YYYXXXyyyxxzz | with plus, letters instead of digits |
| | | yyyxxxxzz | without plus, letters instead of digits |
| | | `+###-$$$-%^-&^-&!` | special characters (ASCII) |
| | | 1232312323123213231232 (...) 99 | very long string (~1 MB) |
| Message | OK | ytsuuyuts (...) ytsu | maximum length (1024 characters) |
| | NOK | | empty value |
| | | ytsuysuits (...) yutsuts | length greater than the maximum (1025 characters) |
| | | adsadasdasdas dasdasd asasdsads (...) sas | very long string (~1 MB) |
| | | `@##$$$%^&^&` | special characters only (ASCII) |

Using the cause/effect (CE) technique and, where available, the existing use cases, we create a template for the planned test. The template contains the test steps and expected results but no specific data; the data is substituted at the next stage of test case development.
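For the Send button, the cause/effect reasoning can be written down as a small decision table. In the Python sketch below the causes are C1, "the required fields are filled in", and C2, "the entered data is correct"; the effects follow the button requirements (disabled by default, enabled once the required fields are filled in, then either sending the message or showing a validation message). The cause and effect names are hypothetical, and the sketch assumes that a disabled button cannot be pressed.

```python
from itertools import product
from typing import Dict, List

def send_effects(required_filled: bool, data_correct: bool) -> Dict[str, bool]:
    """Effects of causes C1 and C2, following the Send button requirements."""
    enabled = required_filled  # disabled by default, enabled once required fields are filled
    return {
        "Send enabled": enabled,
        # Assumption: a disabled button cannot be pressed, so nothing else happens
        "message sent": enabled and data_correct,
        "validation message": enabled and not data_correct,
    }

def decision_table() -> List[Dict[str, bool]]:
    """One row per combination of the causes C1 and C2."""
    rows = []
    for filled, correct in product([True, False], repeat=2):
        row = {"C1 required filled": filled, "C2 data correct": correct}
        row.update(send_effects(filled, correct))
        rows.append(row)
    return rows

for row in decision_table():
    print(row)
```

Each row supplies the expected result for one variant of the template: all data correct leads to sending, incorrect data leads to a validation message, and unfilled required fields leave the button disabled.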

Sample test case template

| Action | Expected result |
|---|---|
| 1. Open the form to send a message | |
| 2. Fill in the form fields: | |
| 3. Press the "Send" button | |

Once the test data and test steps are ready, we can proceed directly to developing the test cases. The following combination methods can help here:

Once the data combinations are prepared, we substitute them into the test case template. The result is a set of test cases covering the requirements for the application form under test.
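The substitution step can be sketched in Python as follows. The sketch uses one simple combination strategy, chosen here as an assumption: one positive case with every field OK, plus one negative case per NOK value in which only that field is NOK, which matches the shape of the two examples below. `TEST_DATA` is a small hypothetical subset of the data table; the expected results follow the Send button requirements, and treating Contact person and Message as the only required fields is also an assumption.

```python
from typing import Dict, List

# Hypothetical subset of the data table: field -> OK and NOK values
TEST_DATA: Dict[str, Dict[str, List[str]]] = {
    "Contact person": {"OK": ["a"], "NOK": ["", "1234567890123456789012345"]},
    "Contact phone": {"OK": ["+12345678901"], "NOK": ["1234"]},
    "Message": {"OK": ["m" * 1024], "NOK": ["", "m" * 1025]},
}
REQUIRED = {"Contact person", "Message"}

# Test case template: steps without concrete data
TEMPLATE = [
    "1. Open the form to send a message",
    "2. Fill in the form fields: {fields}",
    '3. Press the "Send" button',
]

def build_cases(data: Dict[str, Dict[str, List[str]]]) -> List[dict]:
    """One positive case (all fields OK) plus one negative case per NOK value."""
    base = {field: values["OK"][0] for field, values in data.items()}
    cases = [{"name": "positive", "data": dict(base), "expected": "message is sent"}]
    for field, values in data.items():
        for bad in values["NOK"]:
            case_data = dict(base)
            case_data[field] = bad  # only this field is NOK
            empty_required = field in REQUIRED and bad == ""
            cases.append({
                "name": f"negative: {field} NOK",
                "data": case_data,
                "expected": "Send button stays disabled" if empty_required else "validation message",
            })
    return cases

def render(case: dict) -> List[str]:
    """Substitute the case data into the template steps."""
    fields = "; ".join(f"{name} = {value[:15]!r}" for name, value in case["data"].items())
    return [step.format(fields=fields) for step in TEMPLATE] + ["Expected: " + case["expected"]]

for case in build_cases(TEST_DATA):
    print(case["name"])
    for line in render(case):
        print("   ", line)
```

Keeping all other fields OK in each negative case means that a failure can be traced to a single field; pairwise or other combination methods would produce fewer but less isolated cases.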

Note:

Recall that, depending on the expected result, test cases are divided into positive and negative ones.

An example of a positive test case (all fields are OK):

| Action | Expected result |
|---|---|
| 1. Open the form to send a message | |
| 2. Fill in the form fields: | |
| 3. Press the "Send" button | |

An example of a negative test case (Contact person field is NOK):

| Action | Expected result |
|---|---|
| 1. Open the form to send a message | |
