Lecture

A neural network that processes information can be compared to a black box. Of course, experts understand in general terms how a neural network processes data. The problem is that self-training is not a fully predetermined process, so the output can sometimes be completely unexpected. At the heart of it all lies deep learning, which has already made it possible to solve a number of important problems, including image processing, speech recognition, and translation. Neural networks may eventually be able to diagnose diseases at an early stage, make the right decisions when trading on the stock exchange, and take on hundreds of other important tasks that humans now perform.

But first, we need ways to better understand what happens inside a neural network as it processes data. Otherwise, it is difficult, if not impossible, to predict the errors of systems with a weak form of AI, and such errors will certainly occur. This is one of the reasons why Nvidia's self-driving car is still being tested.

1. Classification Errors

False Positives: When an AI system classifies an object or event as belonging to a certain category when it does not.

False Negatives: When an AI system does not recognize an object or event that should be classified into a certain category.
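To make the difference between the two kinds of classification error concrete, here is a minimal Python sketch that counts them for a binary classifier (labels 1 and 0). The function names `confusion_counts` and `precision_recall` are illustrative, not taken from any particular library. Precision drops as false positives accumulate, and recall drops as false negatives accumulate.

```python
# Minimal sketch: counting false positives and false negatives for a
# binary classifier. Labels are 1 (in the category) and 0 (not in it).

def confusion_counts(y_true, y_pred):
    """Return (tp, fp, fn, tn) for two equal-length lists of 0/1 labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    tp = fp = fn = tn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == 1 and actual == 1:
            tp += 1
        elif predicted == 1 and actual == 0:
            fp += 1  # false positive: assigned to the category, but does not belong
        elif predicted == 0 and actual == 1:
            fn += 1  # false negative: belongs to the category, but was missed
        else:
            tn += 1
    return tp, fp, fn, tn


def precision_recall(y_true, y_pred):
    """Precision falls as false positives grow; recall falls as false negatives grow."""
    tp, fp, fn, _ = confusion_counts(y_true, y_pred)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


if __name__ == "__main__":
    y_true = [1, 0, 1, 1, 0, 0, 1, 0]
    y_pred = [1, 1, 0, 1, 0, 0, 1, 0]
    print(confusion_counts(y_true, y_pred))  # (3, 1, 1, 3)
    print(precision_recall(y_true, y_pred))  # (0.75, 0.75)
```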

2. Prediction Errors

Low Prediction Accuracy: When an AI system incorrectly predicts a future event or behavior from data.

Systematic Bias: Bias in the training data that leads to systematic errors in predictions.
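One way to tell low accuracy from systematic bias is to look at both the size and the direction of prediction errors. The sketch below is illustrative only, and the sample numbers are made up. Mean absolute error grows with any kind of error. Mean signed error stays near zero unless predictions are consistently too high or too low.

```python
# Minimal sketch: separating scattered prediction error from systematic bias.

def mean_absolute_error(actual, predicted):
    """Average size of the error, regardless of direction."""
    if not actual or len(actual) != len(predicted):
        raise ValueError("need two non-empty lists of equal length")
    return sum(abs(p - a) for a, p in zip(actual, predicted)) / len(actual)


def mean_signed_error(actual, predicted):
    """Positive: systematic overestimation; negative: systematic underestimation."""
    if not actual or len(actual) != len(predicted):
        raise ValueError("need two non-empty lists of equal length")
    return sum(p - a for a, p in zip(actual, predicted)) / len(actual)


if __name__ == "__main__":
    actual = [10.0, 12.0, 9.0, 11.0]
    noisy = [11.0, 11.0, 10.0, 10.0]   # errors scatter in both directions
    biased = [12.0, 14.0, 11.0, 13.0]  # every prediction is 2 too high
    print(mean_absolute_error(actual, noisy), mean_signed_error(actual, noisy))    # 1.0 0.0
    print(mean_absolute_error(actual, biased), mean_signed_error(actual, biased))  # 2.0 2.0
```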

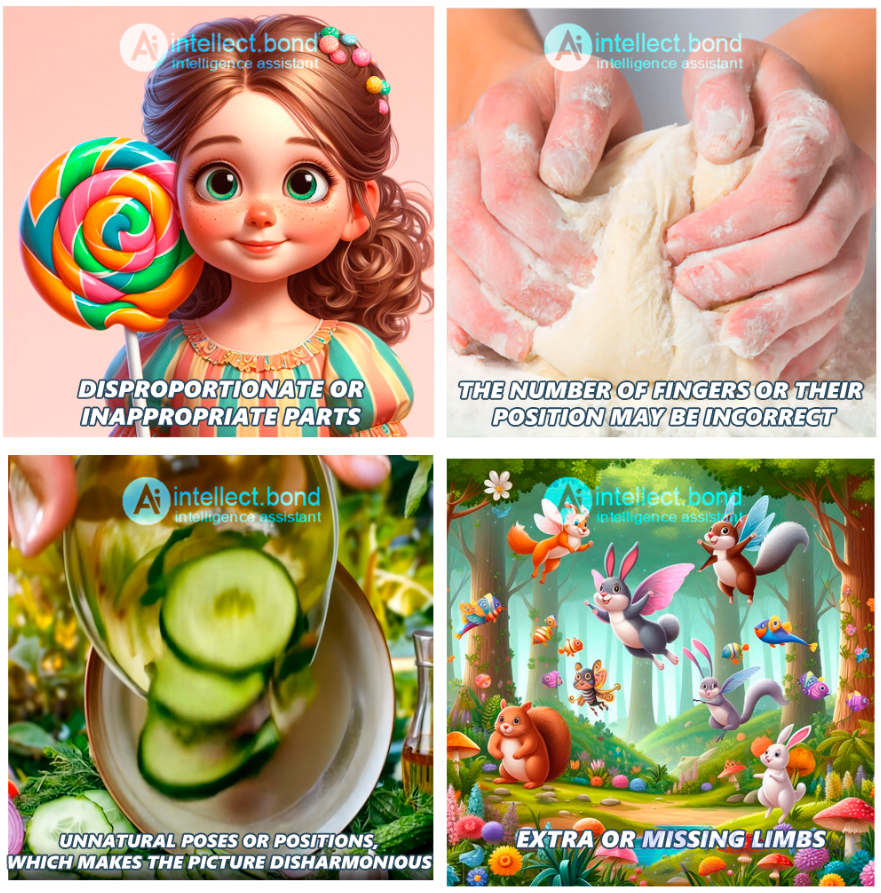

3. Image Processing Errors

Distortion of Anatomy: Errors in generating images of people, for example, hands with the wrong number of fingers.

Misrecognition of Objects: When an AI system incorrectly identifies objects in images.

4. Natural Language Processing Errors

Misunderstanding of Context: An AI system may misinterpret the meaning of a phrase or text.

Syntactic and Semantic Errors: Errors in the grammar or meaning of the generated text.

5. Decision Errors

Ethical Errors: Decisions that are contrary to moral and ethical standards.

Priority Error: When the AI makes a decision that does not match the user's priorities and goals.

6. Training Errors

Overfitting: The model fits the training data too closely, including its noise, and performs poorly on new data.

Underfitting: The model fails to capture the patterns in the training data, resulting in low accuracy even on that data.
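In practice, overfitting and underfitting are usually diagnosed by comparing a model's error on its training data with its error on held-out validation data. A rough heuristic might look like the sketch below. The threshold values `target_error` and `max_gap` are hypothetical and would need tuning for each task.

```python
# Rough heuristic for telling overfitting from underfitting, given a model's
# error rate on training data and on held-out validation data.
# The default thresholds are hypothetical, not standard values.

def diagnose_fit(train_error, val_error, target_error=0.10, max_gap=0.05):
    """Classify a model's fit from its training and validation error rates."""
    if train_error > target_error:
        # The model cannot even fit the data it was trained on.
        return "underfitting"
    if val_error - train_error > max_gap:
        # Good on training data, much worse on unseen data.
        return "overfitting"
    return "acceptable"


if __name__ == "__main__":
    print(diagnose_fit(train_error=0.30, val_error=0.32))  # underfitting
    print(diagnose_fit(train_error=0.01, val_error=0.25))  # overfitting
    print(diagnose_fit(train_error=0.05, val_error=0.07))  # acceptable
```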

7. Technical Errors

Input Data Error: Incorrect or incomplete data can cause an AI system to fail.

System Failures: Software errors or hardware issues can cause the AI system to fail.
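A common defense against input data errors is to validate each record before it reaches the model. In the minimal sketch below, the field names `age` and `temperature_c` and their valid ranges are hypothetical examples.

```python
# Minimal sketch: validating input records before they reach a model, so that
# incorrect or incomplete data is rejected instead of silently producing a
# wrong answer. Field names and valid ranges are hypothetical.

import math

EXPECTED_FIELDS = {"age": (0, 120), "temperature_c": (30.0, 45.0)}


def validate_record(record):
    """Return a list of problems found in one input record (empty if valid)."""
    problems = []
    for field, (low, high) in EXPECTED_FIELDS.items():
        value = record.get(field)
        if value is None:
            problems.append(f"missing field: {field}")
        elif not isinstance(value, (int, float)) or isinstance(value, bool):
            problems.append(f"non-numeric value for {field}: {value!r}")
        elif math.isnan(value) or not (low <= value <= high):
            problems.append(f"out-of-range value for {field}: {value}")
    return problems


if __name__ == "__main__":
    print(validate_record({"age": 42, "temperature_c": 36.6}))  # []
    print(validate_record({"age": -5}))
    # ['out-of-range value for age: -5', 'missing field: temperature_c']
```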

8. Hallucinations

Factual Hallucinations: The AI makes up facts that do not correspond to reality. For example, it might state that “London is the capital of France.”

Confabulation: The model generates detailed but entirely fictitious answers that sound plausible but have no factual basis.
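One partial safeguard against factual hallucinations is to check generated claims against a trusted source. The sketch below handles only sentences of the form “X is the capital of Y”, using the lecture's own example. The reference table and the sentence pattern are hypothetical simplifications; real fact-checking needs far broader knowledge sources.

```python
# Minimal sketch: checking a generated "X is the capital of Y" claim against
# a small trusted reference table. Table and pattern are hypothetical.

import re

TRUSTED_CAPITALS = {"France": "Paris", "United Kingdom": "London", "Japan": "Tokyo"}

CLAIM = re.compile(r"^(?P<city>[A-Z][\w ]*?) is the capital of (?P<country>[A-Z][\w ]*?)\.?$")


def check_capital_claim(sentence):
    """Return 'supported', 'contradicted', or 'unverifiable' for one claim."""
    match = CLAIM.match(sentence.strip())
    if not match:
        return "unverifiable"
    known = TRUSTED_CAPITALS.get(match["country"])
    if known is None:
        return "unverifiable"  # no reference data: do not guess
    return "supported" if known == match["city"] else "contradicted"


if __name__ == "__main__":
    print(check_capital_claim("London is the capital of France."))  # contradicted
    print(check_capital_claim("Paris is the capital of France."))   # supported
    print(check_capital_claim("Lima is the capital of Peru."))      # unverifiable
```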

9. Context Understanding Errors

Task Misunderstanding: The AI may misinterpret the user’s query and provide an answer that does not match the question.

Context Bias: The model may change the topic or context of the answer without a clear reason, making the information it provides inconsistent.

10. Data Bias

Training Bias: Errors caused by skewed training data that lead to incorrect or biased conclusions.

Algorithmic Bias: Limitations in the model's design or algorithm may lead to repeatedly biased conclusions.
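Skewed training data can sometimes be spotted before training by checking how evenly the categories are represented. The minimal sketch below only detects label imbalance, which is one source of training bias among many. The 10% threshold is an arbitrary example, not a standard value.

```python
# Minimal sketch: checking whether some labels are underrepresented in a
# training set. The 10% threshold is a hypothetical example.

from collections import Counter


def label_shares(labels):
    """Return each label's share of the dataset."""
    counts = Counter(labels)
    total = len(labels)
    return {label: count / total for label, count in counts.items()}


def underrepresented(labels, min_share=0.10):
    """Return the labels whose share falls below min_share, sorted."""
    return sorted(label for label, share in label_shares(labels).items() if share < min_share)


if __name__ == "__main__":
    labels = ["cat"] * 90 + ["dog"] * 5 + ["fox"] * 5
    print(label_shares(labels))      # {'cat': 0.9, 'dog': 0.05, 'fox': 0.05}
    print(underrepresented(labels))  # ['dog', 'fox']
```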

11. Structural Errors

Information Misconnection: The AI may incorrectly connect pieces of information, resulting in a distorted or illogical result.

Inconsistency: The model may provide inconsistent answers to the same or similar queries.
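Inconsistency can be measured by sending the same query several times and checking how often the answers agree. In this sketch, `ask_model` is a hypothetical stand-in for whatever function calls the model. Answers are compared after trimming whitespace and lowercasing, which is a deliberately crude notion of “the same answer”.

```python
# Minimal sketch: measuring how consistently a model answers the same query.
# `ask_model` is a hypothetical callable that wraps a model.

from collections import Counter
from typing import Callable


def consistency_rate(ask_model: Callable[[str], str], query: str, runs: int = 5) -> float:
    """Fraction of runs that returned the single most common answer."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    answers = [ask_model(query).strip().lower() for _ in range(runs)]
    most_common_count = Counter(answers).most_common(1)[0][1]
    return most_common_count / runs


if __name__ == "__main__":
    # A stand-in "model" that returns canned answers in order.
    canned = iter(["Paris", "Paris", "Lyon", "paris", "Paris"])
    print(consistency_rate(lambda q: next(canned), "What is the capital of France?"))  # 0.8
```

A rate of 1.0 means every run gave the same answer. Lower values point to the kind of inconsistency described above.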

12. Semantic Errors

Meaning Confusion: The AI may use words and phrases incorrectly, creating confusion about the meaning of the text.

Interpretation Error: Misinterpreting ambiguous words and phrases may result in an incorrect answer.

13. Generation Errors

Repetition: The model may repeat the same information or phrases, making the text redundant.

Incompleteness: The answer may be incomplete or fragmentary, not covering all aspects of the query.
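Repetition in generated text can be detected mechanically by counting word sequences (n-grams) that occur more than once. This is a minimal sketch, and the choice of three-word sequences is an illustrative assumption.

```python
# Minimal sketch: finding word n-grams that repeat in generated text.

from collections import Counter


def repeated_ngrams(text, n=3):
    """Return n-grams (as strings) that appear more than once, with their counts."""
    words = text.lower().split()
    grams = [" ".join(words[i:i + n]) for i in range(len(words) - n + 1)]
    return {gram: count for gram, count in Counter(grams).items() if count > 1}


if __name__ == "__main__":
    text = "the model is accurate and the model is accurate and fast"
    print(repeated_ngrams(text))
    # {'the model is': 2, 'model is accurate': 2, 'is accurate and': 2}
```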

14. Data Errors: The results of an AI system depend on the quality of the data used to train it. If the data contains errors, distortions, or inaccuracies, the results of the AI system may be inaccurate or incorrect.

15. Mistraining: If an AI system has been trained incorrectly or insufficiently, it may produce erroneous results. For example, if it was trained on incorrect data or on too little data, it may fail to recognize some patterns or misidentify certain objects.

16. Inability to adapt: AI systems may struggle with new situations that their algorithms were not designed for. If a system cannot adapt to new conditions, its output may be inaccurate.
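A simple way to notice that a system is facing conditions it was not trained for is to compare incoming data with the training data. The sketch below uses a rough heuristic, not a formal statistical test. It measures how far the mean of one feature has moved, in units of the training standard deviation. The threshold of 3 and the temperature data are assumptions for illustration.

```python
# Minimal sketch: flagging when new data has shifted away from the training
# data. The threshold of 3 standard deviations is a hypothetical choice.

from statistics import mean, stdev


def mean_shift_score(train_values, new_values):
    """How many training standard deviations the new mean has moved."""
    spread = stdev(train_values)  # needs at least two training values
    if spread == 0:
        raise ValueError("training values have zero spread")
    return abs(mean(new_values) - mean(train_values)) / spread


def has_drifted(train_values, new_values, threshold=3.0):
    return mean_shift_score(train_values, new_values) > threshold


if __name__ == "__main__":
    train = [20, 22, 21, 19, 23, 20, 21, 22]  # e.g. summer temperatures
    similar = [21, 20, 22, 21]
    shifted = [-5, -3, -4, -6]                # winter: far outside the training range
    print(has_drifted(train, similar))  # False
    print(has_drifted(train, shifted))  # True
```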

17. Interpretability: The output of an AI system may be difficult or impossible to interpret or explain. In some cases, AI systems make decisions that humans cannot explain or understand.
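Interpretability varies a great deal between model types. A linear scoring model, shown below as a contrast to a neural network, can be explained term by term, because each feature contributes its weight times its value. The weights, bias, and feature names here are hypothetical.

```python
# Minimal sketch: explaining a linear score as a sum of per-feature
# contributions. Weights, bias, and feature names are hypothetical.

WEIGHTS = {"income": 0.5, "debt": -0.8, "years_employed": 0.3}
BIAS = -1.0


def explain_score(features):
    """Return the total score and each feature's contribution, largest effect first."""
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


if __name__ == "__main__":
    score, ranked = explain_score({"income": 4.0, "debt": 2.0, "years_employed": 5.0})
    print(f"score: {score:.2f}")  # score: 0.90
    for name, value in ranked:
        print(f"{name}: {value:+.2f}")
    # income: +2.00
    # debt: -1.60
    # years_employed: +1.50
```

A deep neural network offers no such direct breakdown, which is why its decisions are much harder to explain.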

18. Susceptibility to attack: AI systems may be susceptible to attacks and fraud, such as introducing false data into training sets (data poisoning) or feeding the system deliberately deceptive inputs to change its output.
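The effect of introducing false data into a training set can be shown with a deliberately tiny model: a one-dimensional nearest-centroid classifier. The labels `safe` and `spam` and all values are hypothetical. The point is only that a few mislabeled points shift a class centroid enough to change the prediction.

```python
# Minimal sketch of data poisoning against a toy nearest-centroid classifier.
# Labels and values are hypothetical.

from statistics import mean


def train_centroids(samples):
    """samples: list of (value, label). Returns {label: mean value}."""
    by_label = {}
    for value, label in samples:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}


def predict(centroids, value):
    """Assign the label whose centroid is closest to the value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))


if __name__ == "__main__":
    clean = [(1, "safe"), (2, "safe"), (3, "safe"), (7, "spam"), (8, "spam"), (9, "spam")]
    poisoned = clean + [(0, "spam"), (0, "spam"), (0, "spam")]  # attacker's false data

    print(predict(train_centroids(clean), 4.5))     # safe
    print(predict(train_centroids(poisoned), 4.5))  # spam
```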

19. Lack of transparency: In some cases, AI systems are not transparent enough for their output to be verified. It may be impossible to determine how such a system makes its decisions, or how it operates in general.

20. Ethical issues: The output of an AI system may raise ethical issues, such as discrimination or privacy violations. Some AI systems may also be used to produce dangerous or malicious results.

21. Hallucinations in artificial intelligence (AI) systems can also be considered errors. A hallucination occurs when an AI system generates content (e.g., text, images, or sounds) based on its training and experience, but the content does not correspond to reality or has no real-world counterpart. This can happen, for example, if the system was trained on incomplete or incorrect data, or if its content-generation algorithms have limitations that lead to inaccurate or unrealistic results. Hallucinations are one of many types of errors that AI systems can make. They are problematic because they lead to inaccurate results and lower the overall quality of the system.

22. Classification errors: AI systems may misclassify data, such as failing to recognize objects or their features or classifying them incorrectly.

23. Prediction Errors: AI systems may make incorrect predictions based on incorrect or outdated data.

24. Content Generation Errors: AI systems may generate incorrect or inappropriate texts, images, sounds, and other types of content.

25. Training Errors: If an AI system has not been trained well enough or on the right data, it may make errors in its operation.

26. Decision Errors: AI systems may make incorrect decisions based on incorrect analysis of data.

27. Lack of Transparency: AI systems may lack transparency, making it difficult to understand how they work and how they make decisions.

28. Security Issues: AI systems may be vulnerable to hacking or abuse, which may lead to data leaks or other security issues.

29. Finger Generation Problem

Finger generation errors occur because of the complexity of the task and the limitations of current image generation models. The main contributing factors are:

1. Complexity of Anatomy

Hands and fingers are among the most difficult body parts to depict because they are highly mobile and appear in many different poses. They also have many joints and bones, so their structure is easy to get wrong.

2. Training Limitations

AI models are trained on huge image datasets, but even this amount of data may not be enough to perfectly reproduce complex details like fingers. Training datasets may not have enough diverse and accurate images of hands.

3. Generative Approaches

Generative models such as GANs (Generative Adversarial Networks) can create realistic images, but they do not understand anatomy the way the human brain does. Instead, they model images statistically, based on probabilities learned from training data, which can lead to distortions.

4. Noise and Interference

When generating images, models may encounter “noise” in the data, which leads to distortions and errors. This is especially noticeable in complex parts like fingers.

5. Model Evolution

AI models are constantly improving, and the quality of image generation is also improving over time. Current models already show significant progress compared to a few years ago, but there is still room for improvement.

These factors explain why distorted fingers appear in generated images. With each update of the models, however, the accuracy and realism of the images will continue to improve.

Conclusions

Errors that occur in AI systems highlight the importance of continuously improving and adapting these technologies. These errors take many forms, and understanding their nature helps developers build more reliable and accurate AI systems. Each category of errors reflects the features and limitations of current AI technologies and underlines the need for rigorous testing and optimization of models.

These errors show that, despite significant advances, AI models still have limitations. Such errors can occur in many kinds of AI applications. Continuous improvement of training data, algorithms, and model testing helps minimize them, making AI more reliable and accurate.

The development and deployment of AI require continuous improvement and attention to all possible errors. Making neural networks transparent and understandable remains an important task for increasing user trust in AI systems. Improving data quality, optimizing algorithms, and introducing ethical standards into AI processes will help reduce the number of errors and create more reliable and effective systems. In the future, AI development will bring new challenges that require innovative solutions and an interdisciplinary approach.
