Lecture

How does the brain actually function? How does it build connections within itself? How does the training of a neural network proceed?

The number of neurons is arbitrary, and initially they are connected at random. What is required is an algorithm for adjusting the connections, after which the model behaves purposefully.

A perceptron (from the word "perception") can be considered an embodiment of a neural network.

Neural network learning algorithm:

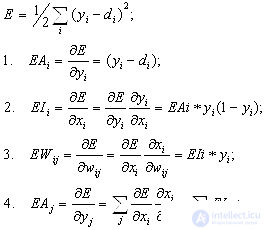

The structure of the network and its input and output signals cannot be changed. The error E can therefore be minimized only by changing the weights $w_{ij}$.

Formula (1) gives the rate of change of the error with respect to the output signal of the perceptron. Formula (2) gives the rate of change of the error with respect to the input signal of the perceptron. Formula (3) gives the rate of change of the error with respect to the weight of a connection.
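
As a hedged illustration of how these three quantities chain together, assume a squared error $E$, a weighted sum $s_j$ as the input signal of neuron $j$, and a differentiable activation $f$. The symbols $d_j$ (desired output), $x_i$ (signal arriving over the connection), $s_j$ and $f$ are assumptions introduced here; the numbered formulas in the figure may be written differently.

$$
E = \tfrac{1}{2}\sum_j (y_j - d_j)^2, \qquad s_j = \sum_i w_{ij}\,x_i, \qquad y_j = f(s_j)
$$

$$
\frac{\partial E}{\partial y_j} = y_j - d_j, \qquad
\frac{\partial E}{\partial s_j} = \frac{\partial E}{\partial y_j}\,f'(s_j), \qquad
\frac{\partial E}{\partial w_{ij}} = \frac{\partial E}{\partial s_j}\,x_i
$$

Under these assumptions each derivative is obtained from the previous one by a single multiplication, which is what makes the layer-by-layer computation cheap.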

Backpropagation Algorithm

We know what the output should be, and we work backward toward the input, layer by layer, following the chain of formulas 1 - 2 - 4 - 2 - 4 - 2 - 4 and so on. Once (2) has been determined in a layer, formula (3) gives dw for that layer, and the weight is then updated as w = w + dw. This is implemented as a gradient method.
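
The backward pass described above can be sketched in a few lines of Python. This is a minimal illustration, not the lecture's own implementation: the sigmoid activation, the squared error, the learning rate `rate`, the network size, and the function names are all assumptions. The mapping of code lines to formulas (1) to (4) in the comments is a guess, since those formulas appear only in the figure.

```python
import math
import random


def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))


def forward(layers, x):
    """Return all signals: the input, then the output of every layer."""
    signals = [x]
    for w in layers:  # w[j][i] is the weight from neuron i to neuron j
        prev = signals[-1]
        signals.append(
            [sigmoid(sum(wji * xi for wji, xi in zip(row, prev))) for row in w]
        )
    return signals


def error(layers, x, target):
    """Squared error E for one example (assumed form of E)."""
    y = forward(layers, x)[-1]
    return 0.5 * sum((yj - tj) ** 2 for yj, tj in zip(y, target))


def backward_step(layers, x, target, rate=0.5):
    """One backward pass that updates every weight in place: w = w + dw."""
    signals = forward(layers, x)
    # dE/dy at the output layer (assumed to play the role of formula 1)
    dE_dy = [yj - tj for yj, tj in zip(signals[-1], target)]
    for k in range(len(layers) - 1, -1, -1):
        w, inp, out = layers[k], signals[k], signals[k + 1]
        # dE/ds through the sigmoid derivative y * (1 - y) (role of formula 2)
        dE_ds = [d * y * (1.0 - y) for d, y in zip(dE_dy, out)]
        # dE/dy of the previous layer, computed before the weights change
        # (assumed role of formula 4)
        dE_dy = [
            sum(dE_ds[j] * w[j][i] for j in range(len(w)))
            for i in range(len(inp))
        ]
        # dw = -rate * dE/dw, where dE/dw = dE/ds * input (role of formula 3)
        for j, row in enumerate(w):
            for i in range(len(row)):
                row[i] += -rate * dE_ds[j] * inp[i]


# Hypothetical 3-4-1 network; the last input is a constant 1 acting as a bias.
random.seed(0)
layers = [
    [[random.uniform(-1, 1) for _ in range(3)] for _ in range(4)],
    [[random.uniform(-1, 1) for _ in range(4)] for _ in range(1)],
]
x, target = [1.0, 0.0, 1.0], [1.0]

before = error(layers, x, target)
for _ in range(200):
    backward_step(layers, x, target)
after = error(layers, x, target)
print("E before: %.4f, E after: %.4f" % (before, after))
```

Note that the error signal for the previous layer is computed from the old weights, before they are updated; updating first would mix two different versions of the network in one pass.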

The task is as follows: find all $w_{ij}$, that is, adjust the weights of all connections so that the perceptron produces the desired output signal for each corresponding input. Training a perceptron for a task requires many iterations. The goal is to reduce the error E to zero, at which point the best values of $w_{ij}$ have been found. Learning proceeds exponentially. If the error E does not approach zero, the perceptron is not complex enough to learn the given example or examples, and the number of layers or the number of neurons per layer should be increased.
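
To illustrate the last point, the sketch below trains a single sigmoid neuron by gradient descent on two small tasks. The tasks (logical AND and XOR), the learning rate, the iteration count, and the function name `train_single_neuron` are assumptions chosen for illustration and do not come from the lecture. A single neuron can represent AND but not XOR, so the error is expected to fall in the first case and to stay high in the second, which signals that more layers or neurons are needed.

```python
import math
import random


def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))


def train_single_neuron(examples, iterations=5000, rate=1.0, seed=0):
    """Gradient descent on one sigmoid neuron.

    Returns the total error E measured at the start of each iteration.
    """
    rng = random.Random(seed)
    n_inputs = len(examples[0][0])
    # One weight per input plus a final bias weight, small random start.
    w = [rng.uniform(-0.5, 0.5) for _ in range(n_inputs + 1)]
    history = []
    for _ in range(iterations):
        grad = [0.0] * len(w)
        E = 0.0
        for x, target in examples:
            xb = list(x) + [1.0]  # constant 1 feeds the bias weight
            y = sigmoid(sum(wi * xi for wi, xi in zip(w, xb)))
            E += 0.5 * (y - target) ** 2
            delta = (y - target) * y * (1.0 - y)  # dE/ds for this example
            for i, xi in enumerate(xb):
                grad[i] += delta * xi  # accumulate dE/dw
        w = [wi - rate * gi for wi, gi in zip(w, grad)]  # w = w + dw
        history.append(E)
    return history


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

and_history = train_single_neuron(AND)
xor_history = train_single_neuron(XOR)
print("AND: E = %.4f -> %.4f" % (and_history[0], and_history[-1]))
print("XOR: E = %.4f -> %.4f" % (xor_history[0], xor_history[-1]))
```

In practice the error rarely reaches exactly zero, so a training loop of this kind usually stops at a small tolerance or after a fixed number of iterations.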

The general features of the technology are as follows. The system begins to detect patterns in the input data. The system does not know what it is learning: it is indifferent to the subject matter. The system is easily retrained and easily trained further.

The input consisted of 64 images. During training, the system had to answer one question: does the picture have mirror symmetry? It was trained on the 64 images, and for each one it was told whether mirror symmetry was present. It was then given a test picture, an image it had not seen before, and it answered the question correctly. The experiment was thus carried out in two stages: training (a set of examples was presented to the perceptron) and examination (checking how well it had learned). Each perceptron learned exponentially. Perceptrons have been taught to solve arithmetic problems, read English text, recognize spoken letters, and so on.

A perceptron can be “underfed” with examples, but it can also be “overfed”.

Example: a first-order perceptron copes fairly well with recognizing images to which scaling and rotation have been applied, but it cannot “digest” a shift. Several perceptrons are therefore required. The number of perceptrons must be increased hierarchically until the complexity of the system matches the complexity of the task.
