Lecture

The development of massively parallel computing systems spans well over a decade. This is perhaps one of the few areas of science and technology in which domestic developments are on a par with world achievements and in some cases even exceed them. Over the years, the element base and the approaches to the architecture of modern supercomputers have changed, and new directions have appeared, neurocomputers among them.

What should be understood by the term "neurocomputer"? The question is a rather complicated one. Neural network research is interdisciplinary: it involves developers of computing systems and programmers as well as specialists in medicine, finance and economics, chemistry, physics, and other fields. What is obvious to a physicist may be entirely unacceptable to a physician, and vice versa. This has given rise to numerous disputes and outright terminological wars in every application area that carries the prefix "neuro". Some of the most well-established definitions of a neurocomputer [1,2,4,6], as adopted in specific scientific fields, are listed in the table below.

The general principles of neural network construction were laid down at the beginning of the second half of the 20th century in the works of such scientists as D. Hebb, M. Minsky, and F. Rosenblatt. The first neural networks consisted of a single layer of artificial neurons, perceptrons. M. Minsky rigorously proved a number of theorems defining the principles by which neural networks function. Despite the numerous advantages of perceptrons (linearity, ease of implementing parallel computation, an original learning algorithm, and so on), M. Minsky and his co-authors showed that single-layer neural networks built on them are unable to solve a large number of diverse problems. This somewhat slowed the development of neural network technologies in the 1960s. Later, many of the restrictions on the use of neural networks were lifted with the development of multilayer neural networks, the definition of which was first introduced by F. Rosenblatt: "a multilayer neural network means this property of the transformation structure, which is implemented by a standard open neural network with a topological description, not a symbolic description." The theory of neural networks was developed further in the 1970s and 1980s in the works of B. Widrow, Anderson, T. Kohonen, S. Grossberg, and others.

The theory of neural networks has not brought revolutionary innovations to the algorithms of adaptation and optimal control. Self-learning systems have long been known, and the theory of adaptive regulators is also well developed and widely used in engineering. The theory of neural networks adopts methods developed earlier and tries to adapt them to build increasingly efficient neural systems. The use of neural structures is of particular importance from the standpoint of computer performance. According to Minsky's hypothesis [2-4], the actual performance of a typical parallel computing system with n processors grows as log(n); that is, a 100-processor system is only twice as fast as a 10-processor system, because the processors spend more time waiting for their turn than computing. If a neural network is used to solve the problem, however, the parallelism can be exploited almost completely, and performance grows "almost proportionally" to n.
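The arithmetic behind the "only twice as fast" remark can be checked with a short sketch. The function names below are illustrative and do not come from the cited sources; the linear model merely stands in for the idealized "almost proportional" growth attributed to neural networks.

```python
import math

def log_model(n):
    """Performance under Minsky's hypothesis: grows as log(n)."""
    return math.log(n)

def linear_model(n):
    """Idealized performance that grows in proportion to n (an assumption)."""
    return float(n)

def relative_performance(n_big, n_small, model):
    """How many times faster the larger system is under the given model."""
    return model(n_big) / model(n_small)

# 100 processors versus 10 processors under both growth laws
ratio_log = relative_performance(100, 10, log_model)
ratio_lin = relative_performance(100, 10, linear_model)
print(ratio_log, ratio_lin)
```

The logarithmic ratio does not depend on the base of the logarithm, since log(100) / log(10) = 2 in any base.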

| No. | Scientific direction | Definition of the neural computing system |
| --- | --- | --- |
| 1 | Mathematical statistics | A neurocomputer is a computing system that automatically generates a description of the characteristics of random processes, or of combinations of them, that have complex and often a priori unknown distribution functions. |
| 2 | Mathematical logic | A neurocomputer is a computing system whose operating algorithm is represented by a logical network of elements of a particular type, neurons, with a complete rejection of Boolean elements such as AND, OR, NOT. |
| 3 | Threshold logic | A neurocomputer is a computing system in which the algorithm for solving problems is represented as a network of threshold elements with dynamically tunable coefficients and with tuning algorithms that are independent of the dimension of the network of threshold elements and of their input space. |
| 4 | Computer engineering | A neurocomputer is a computing system with MSIMD architecture in which the processor element of a homogeneous structure is simplified to the level of a neuron, the connections between elements are made far more complex, and programming is shifted to changing the weights of the connections between the computing elements. |
| 5 | Medicine (neurobiological approach) | A neurocomputer is a computing system that models the interaction of the cell nucleus, axons, and dendrites connected by synapses (that is, a model of the biochemical processes occurring in nerve tissue). |
| 6 | Economics and finance | There is no established definition, but a neurocomputer is most often understood as a system that provides parallel execution of "business transactions" with elements of "business logic". |

Consequently, the main advantages of neurocomputers stem from massively parallel processing, which provides high speed, low requirements for the stability and accuracy of the parameters of elementary nodes, and resistance to interference and damage when the spatial dimension of the system is large. Stable and reliable neural systems can therefore be built from low-reliability elements with a wide scatter of parameters.

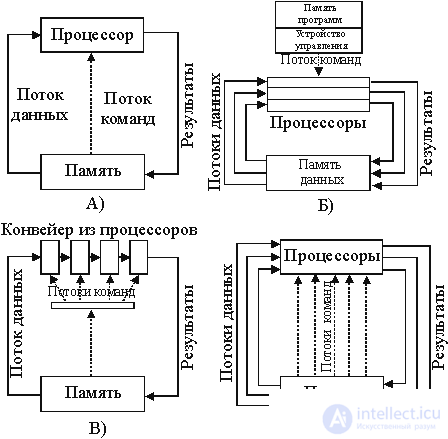

In the future, in this review, a neurocomputer will be understood as any computer system with MSIMD architecture (Definition No. 4). Before turning to the review of modern neurocalculators and their element base, let us dwell on the classification of computer systems architectures according to B.М. Koganu:

Fig.1. Computer architecture.

The elementary building block of a neural network (NN) is the neuron, which performs a weighted summation of the signals arriving at its inputs. The result of this summation is an intermediate signal that the activation function converts into the output signal of the neuron. By analogy with electronic systems, the activation function can be regarded as the nonlinear gain characteristic of an artificial neuron, with a large gain for weak signals and a falling gain for strong excitations. The gain is calculated as the ratio of the increment of the neuron's output signal to the small increment of the weighted sum of the input signals that caused it. In addition, for multilayer NNs to have greater computational power than single-layer ones, the activation function between layers must be nonlinear. Because matrix multiplication is associative, any multilayer neural network without nonlinear activation functions can be reduced to an equivalent single-layer network, and single-layer networks are very limited in their computational capabilities. At the same time, the presence of a nonlinearity at the output of a neuron cannot serve as a decisive criterion: neural networks that operate successfully without nonlinear output transformations are well known, and are called neural networks on delay lines.
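The points made in this paragraph can be illustrated with a minimal sketch. It assumes a logistic (sigmoid) activation function, which the lecture does not name, and uses arbitrary illustrative weight matrices `W1` and `W2`. It shows the weighted summation, the gain as a ratio of increments, and the collapse of two purely linear layers into one.

```python
import math

def sigmoid(s):
    """Logistic activation (an assumed choice; the lecture names no function)."""
    return 1.0 / (1.0 + math.exp(-s))

def neuron(inputs, weights, activation=sigmoid):
    """Weighted summation of the inputs followed by the activation function."""
    s = sum(w * x for w, x in zip(weights, inputs))
    return activation(s)

def gain(activation, s, ds=1e-6):
    """Ratio of the output increment to the small increment ds that caused it."""
    return (activation(s + ds) - activation(s)) / ds

def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

# Illustrative weights: a 2-input, 3-neuron layer followed by a 1-neuron layer
W1 = [[1.0, 2.0], [0.5, -1.0], [3.0, 0.0]]
W2 = [[1.0, -1.0, 2.0]]
x = [0.5, -2.0]

# Without nonlinear activations, two layers equal one layer with weights W2*W1
two_layer = matvec(W2, matvec(W1, x))
one_layer = matvec(matmul(W2, W1), x)

print(gain(sigmoid, 0.0), gain(sigmoid, 5.0))  # gain falls for strong excitation
print(two_layer, one_layer)
```

With the sigmoid in place between the layers, the product `W2*W1` no longer reproduces the network, which is exactly why the nonlinearity adds computational power.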

The algorithmic basis of neurocomputers is provided by the theory of neural networks (NN). A neural network is a network with a finite number of layers of elements of the same type, analogues of neurons, with various types of connections between the layers. The main advantages of NNs noted in [2] are: the invariance of NN synthesis methods to the dimension of the feature space and to the size of the NN; suitability for modern advanced technologies; and fault tolerance, in the sense that the quality of the solution degrades monotonically rather than catastrophically as the number of failed elements grows.

The solution of mathematical problems in the neural network logical basis is governed by the theoretical principles of neuromathematics. In [2], the following stages of solving virtually any problem in the neural network logical basis are identified: forming the input signal of the NN; forming the output signal of the NN; forming the desired output signal of the NN; forming the error signal and the optimization functional; forming a neural network structure adequate to the chosen problem; developing the NN tuning algorithm, which is equivalent to the process of solving the problem in the neural network logical basis; and studying the process of solving the problem. All of this makes the construction of modern control systems based on the neural network approach and the neural network logical basis one of the most promising directions for implementing multi-channel and multi-connected control systems.
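The stages listed above can be traced in a toy example. The sketch below is not the method of [2]: it assumes a single linear neuron, a mean squared error as the optimization functional, and the Widrow-Hoff (LMS) rule as the tuning algorithm. The data, learning rate, and function names are all illustrative.

```python
def output(weights, x):
    """Output signal of a single linear neuron (the chosen network structure)."""
    return sum(w * xi for w, xi in zip(weights, x))

def functional(weights, inputs, desired):
    """Optimization functional: mean squared error over all samples."""
    errors = [d - output(weights, x) for x, d in zip(inputs, desired)]
    return sum(e * e for e in errors) / len(errors)

def tune(inputs, desired, rate=0.1, epochs=200):
    """Tuning algorithm: Widrow-Hoff (LMS) updates, an assumed choice."""
    weights = [0.0] * len(inputs[0])
    for _ in range(epochs):
        for x, d in zip(inputs, desired):
            error = d - output(weights, x)  # error signal
            weights = [w + rate * error * xi for w, xi in zip(weights, x)]
    return weights

# Input signals and desired outputs generated by the rule d = 2*x1 - x2
inputs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 1.0]]
desired = [2.0, -1.0, 1.0, 3.0]

weights = tune(inputs, desired)
print(weights, functional(weights, inputs, desired))
```

Here tuning the weights is the process of solving the problem: the weights approach 2 and -1, the coefficients that generated the desired outputs.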

As noted above, a neurocomputer is a computing system with MSIMD architecture, i.e. with parallel streams of identical instructions and multiple data streams. Let us see how neurocomputers relate to parallel computers. Today, three main directions can be distinguished in the development of computing systems with massive parallelism (VSMP):

| No. | Name of direction | Description |
| --- | --- | --- |
| 1 | VSMP based on cascaded universal SISD, SIMD, and MISD microprocessors | Element base: universal RISC or CISC processors (Intel, AMD, Sparc, Alpha, Power PC, MIPS, etc.) |
| 2 | VSMP based on processors with hardware-level parallelization | Element base: DSP processors (TMS, ADSP, Motorola) |
| 3 | VSMP on a specialized element base | Element base ranging from specialized single-bit processors to neurochips |

For each of these directions there are solutions today that implement particular neural network paradigms. For clarity, we will use the following terms from here on. Neural network systems implemented on hardware platforms of the first direction (including multiprocessor ones) will be called neuro-emulators, i.e. systems that implement the typical neural operations (weighted summation and nonlinear transformation) in software. Neural network systems implemented on hardware platforms of the second and third directions as expansion cards for standard computing systems (first direction) will be called neuro-accelerators; here the weighted summation is usually implemented in hardware, for example on the basis of transversal filters, while the nonlinear transformations are implemented in software. Systems implemented on hardware platforms of the third direction as functionally complete computing devices will be called neurocomputers; in them, all operations are performed in the neural network logical basis. To sum up the terminological "war", neurocomputers can safely be classed as highly parallel computing systems (MSIMD architecture) that are built on a specialized element base and oriented toward performing operations in the neural network logical basis.
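The division of labor described for neuro-accelerators can be sketched in software. The example assumes the standard definition of a transversal (tapped delay line) filter, y[n] = sum over k of w[k] * x[n - k], which plays the role of the hardware weighted summation. The threshold function and the sample values are purely illustrative.

```python
def transversal_filter(signal, taps):
    """Weighted sum over a tapped delay line: y[n] = sum_k taps[k] * signal[n-k]."""
    out = []
    for n in range(len(signal)):
        acc = 0.0
        for k, w in enumerate(taps):
            if n - k >= 0:  # samples before the start of the signal count as zero
                acc += w * signal[n - k]
        out.append(acc)
    return out

def threshold(values, level):
    """Nonlinear transformation applied afterwards in software (illustrative)."""
    return [1 if v >= level else 0 for v in values]

signal = [1.0, 2.0, 3.0, 4.0]
taps = [0.5, 0.25]  # connection weights loaded into the filter

summed = transversal_filter(signal, taps)
fired = threshold(summed, 1.5)
print(summed, fired)
```

In a real accelerator the first function would run on the card and only the second on the host processor; in this sketch both run in software.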

The construction and application of neuro-emulators are beyond the scope of this review; the interested reader is referred to the literature [3,6,7]. We will focus on modern neuro-accelerators and neurocomputers. We first consider the elements of neural logic from the standpoint of hardware implementation, then move on to the element base of neurocomputers (signal processors and neurochips), and finally analyze the architectural solutions and performance specifications of the most common modifications.
