Lecture

A warning up front: this will be difficult. You will have to turn your brain inside out.

The first filter. A database of "hackneyed phrases" whose sense is already known or precomputed. It is used to speed up processing. Examples: "after a rain on Thursday", "when the crayfish whistles on the mountain", etc.

Such a phrase is simply replaced by NO, followed by the transition to the "birth of a new thought."
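A minimal sketch of the first filter as a lookup table, assuming phrases are matched after simple normalization. The names `STOCK_PHRASES` and `first_filter` are hypothetical and only illustrate the idea of a precomputed sense.

```python
from typing import Optional

# Hypothetical table of stock phrases with a precomputed sense.
# The idiom from the text is a way of saying "never", so it resolves to NO.
STOCK_PHRASES = {
    "after a rain on thursday": "NO",
}

def first_filter(phrase: str) -> Optional[str]:
    """Return the cached sense, or None if the phrase needs full processing."""
    key = phrase.strip().strip('".!?').lower()
    return STOCK_PHRASES.get(key)

print(first_filter("After a rain on Thursday."))   # cached sense
print(first_filter("Green wallpaper in my room"))  # not cached: full recognition
```

A phrase that misses the table simply falls through to the later filters and to recognition.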

The second filter. This is the "prediction" of the next phrase. A conversation has a certain topic, character, and style, and the expectations depend on what kind of conversation it is. A question implies an answer. A lecture implies memorization. A debate implies an exchange of views. A quarrel implies an exchange of emotions. For each situation, several variants of "behavioral models" are stored.

On the basis of these models, a "line of conduct" is built (this is where the person's character, mood, etc. come in). The interlocutor, too, has a (presumed) character, mood, etc.

During the conversation, the match between the actual and the predicted conversation is monitored; the subsequent assumptions are corrected, and so is the database of assumptions.

Accordingly, the filter makes adjustments. Example:

IR: Is the earth shaped like a ball? (the expected answer is YES or NO)

Q: What do you think yourself? (The reply does not match the expected one: an answer was expected, and a question came instead. The phrase is analyzed. The suggestion is to clarify the question independently: put the unresolved task in the buffer and try to build a "temporary" connection, an assumption.)

The third filter. Screening out filler words and profanity (used to glue words into sentences).

Replacing complex constructions with simpler ones from the correspondence database (synonyms).

Example: "Esteemed interlocutor, would you please tell me the following fact ..."

is replaced by: "say ..."

"I bow low and slavishly beg ..." is replaced by "listen ..."

EMO filter. Distortion depending on emotional intensity.

The meaning of the phrase "You goat, I am sick of your stupidity" is replaced by "you did not understand me"

(according to the correspondence database and the "line of conduct"). Outright lies, ridicule, humiliation, etc. each have a corresponding "line of conduct", for example: ignore the interlocutor.

Only after processing by the filters does "recognition of the phrase" begin, together with its "translation" into the internal format: into a thought.

So, let's start with understanding / recognizing phrases and turning them into links.

Suppose the following phrase arrives at the input:

"Green wallpaper in my room"

For now we will set aside all syntactic and linguistic processing.

What we need to understand now is the main point: any phrase contains several groups of links.

We need to pick them out and build a cluster.

(The totality of all clusters in a conversation is called the "thought ball". The closest existing word is "context", but it is not quite the same: the context is the "general meaning" of the conversation, whereas the "thought ball" is a selection of all possible connections in the conversation; their number is limited by the controller that guards against going too deep.)

"Green wallpaper in my room"

For this phrase, the layout will be as follows:

On careful consideration, the complex sentence splits into two simple ones.

(ROOM) -> YES -> [HAS] -> (WALLPAPERS)

The room has wallpaper.

Moreover, the object (WALLPAPERS) has a color parameter:

(ROOM) -> YES -> [HAS] -> (WALLPAPERS) - | GREEN |

In addition, there is another "binding" that turns this connection from abstract into concrete.

(I) -> YES -> [HAS] -> (ROOM)

That is, if you write in the form of two separate links, you get this:

(I) -> YES -> [HAS] -> (ROOM)

(ROOM) -> YES -> [HAS] -> (WALLPAPERS) - | GREEN |

But that would be wrong, since these are two separate connections!

(ROOM) on its own is an abstract concept, because it sits very high in the tree.

So you need to combine these two links into one:

(I) -> YES -> [HAS] -> (ROOM) -> YES -> [HAS] -> (WALLPAPERS) - | GREEN |
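One possible way to hold such a chain in code is sketched below. The `Link` class and the `combine` and `render` helpers are hypothetical names chosen for illustration; the text itself only defines the notation, not a data structure.

```python
from dataclasses import dataclass

@dataclass
class Link:
    subject: str
    sign: str            # "YES" or "NO"
    relation: str        # e.g. "HAS"
    obj: str
    params: tuple = ()   # parameters of the object, e.g. ("GREEN",)

def combine(first, second):
    """Join two links when the object of the first is the subject of the second."""
    if first.obj != second.subject:
        raise ValueError("links do not share a node")
    return [first, second]

def render(chain):
    """Render a chain of links in the notation used in the text."""
    parts = [f"({chain[0].subject})"]
    for link in chain:
        parts.append(f"-> {link.sign} -> [{link.relation}] -> ({link.obj})")
        for param in link.params:
            parts.append(f"- | {param} |")
    return " ".join(parts)

thought = combine(
    Link("I", "YES", "HAS", "ROOM"),
    Link("ROOM", "YES", "HAS", "WALLPAPERS", ("GREEN",)),
)
print(render(thought))
```

Keeping the two links in one chain is what ties the abstract (ROOM) to a specific owner.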

This is a little hard to grasp right away, and one immediately wants to object: why all the fuss if we ended up with the same thing?

I'll try to explain. At the input we had a phrase (text); at the output we have a thought.

Only words are beautiful. A thought is ugly from a linguistic point of view: it is too simple and primitive. The point lies precisely in this "primitiveness". A thought is rational and easy to handle (saving to / searching in the database, checking, replacing one piece of data with another).

With text, the same operations are much harder to perform.

Now recall the "rule of the observer." Everything would be fine with this thought if it had been born within the system.

But since it came to us "from the outside" (entered from the keyboard), we need to "invert" the notion of I.

After this transformation, our thought will look like this:

(HE) -> YES -> [HAS] -> (ROOM) -> YES -> [HAS] -> (WALLPAPERS) - | GREEN |

Since the thought is "alien", it has to be comprehended / processed / calculated / entered into the database:

1. Binding to I.

(I) -> YES -> [I KNOW]: (HE) -> YES -> [HAS] -> (ROOM) -> YES -> [HAS] -> (WALLPAPERS) - | GREEN |

I found out that he (the interlocutor) has his own room in which the wallpaper is green.
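The inversion and the binding to I can be sketched as two small functions. This assumes a thought is a list of `(subject, sign, relation, object)` tuples; that representation and the function names are illustrative assumptions, not something the text prescribes.

```python
def invert_self(thought):
    """Replace the speaker's I with HE: the thought came from outside."""
    def swap(node):
        return "HE" if node == "I" else node
    return [(swap(s), sign, rel, swap(o)) for s, sign, rel, o in thought]

def bind_to_self(thought):
    """Wrap the inverted foreign thought: (I) -> YES -> [I KNOW]: <thought>."""
    return ("I", "YES", "I KNOW", invert_self(thought))

# What the interlocutor said, in his own terms.
heard = [("I", "YES", "HAS", "ROOM"), ("ROOM", "YES", "HAS", "WALLPAPERS")]
bound = bind_to_self(heard)
print(bound)
```

The original list is left untouched, so the raw input stays available for the later checks.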

2. Thought check (flags on controllers / interrupts):

a) Correct / incorrect thought controller.

He has a room. (process check) Can he have his own room?

The room has wallpaper. (process check) Do rooms have wallpaper?

Green wallpaper. (color parameter check) Can wallpaper be green?

b) Priority controller. (Is it important to me? Do I even need to know this?)

b1) Do I already know this? No.

b2) The context of the conversation. (Did we talk about this at all? Is the phrase relevant to the conversation?)

b3) What did he mean? (Re-checking the meaning of the phrase within the context, separately against the previous thought and separately against the general topic of the conversation; checking for distortions: logic, lies.)

c) EMO attitude to the phrase (binding to the EMO zone). Is the phrase serious? Not a joke? Not a hoax?

d) Checking the buffer of unresolved tasks. (Did we need to know what color the wallpaper in his room is?)

e) ... / room is left for adding further check modules /

3. Final conclusion: the phrase is neutral. The data is of secondary importance. We save it in the database with the appropriate labels / coefficients for the priority controller.

4. Preparing the answer. (further in the article "Birth of a new thought")
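The checks in step 2 can be pictured as a small pipeline of independent controllers. The sketch below is only an illustration under strong assumptions: the tables `PLAUSIBLE_LINKS` and `ALLOWED_PARAMS`, the numeric priority values, and all function names are invented for the example, and the EMO check is left out.

```python
# Hypothetical knowledge used by the "correct / incorrect" controller.
PLAUSIBLE_LINKS = {("HE", "HAS", "ROOM"), ("ROOM", "HAS", "WALLPAPERS")}
ALLOWED_PARAMS = {"WALLPAPERS": {"GREEN", "WHITE", "BLUE"}}

def is_correct(links, params):
    """a) Can these links and parameter values exist at all?"""
    links_ok = all(link in PLAUSIBLE_LINKS for link in links)
    params_ok = all(value in ALLOWED_PARAMS.get(obj, set()) for obj, value in params)
    return links_ok and params_ok

def priority(links, known, topic):
    """b) 0.0 if already known, higher if it touches the conversation topic."""
    if all(link in known for link in links):
        return 0.0
    on_topic = any(topic in link for link in links)
    return 1.0 if on_topic else 0.3

def check_thought(links, params, known, topic, pending):
    return {
        "correct": is_correct(links, params),
        "priority": priority(links, known, topic),
        # d) does the phrase answer something waiting in the buffer?
        "resolves_pending": any(obj in pending for obj, _ in params),
    }

verdict = check_thought(
    links=[("HE", "HAS", "ROOM"), ("ROOM", "HAS", "WALLPAPERS")],
    params=[("WALLPAPERS", "GREEN")],
    known=set(), topic="WEATHER", pending=set(),
)
print(verdict)
```

With these inputs the phrase comes out as plausible but low priority, which corresponds to the "neutral, secondary data" conclusion in step 3.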

A few brief notes for now:

(A reference to the "instructions" for concepts is needed here.)

1. Any situation is processed using the situation database and the instruction database.

The simplest situations are instructions like "got a question: give an answer" or "when elders speak, do not interrupt." The database of such instructions is updated all the time.

2. Information obtained during a conversation carries a certain "likelihood" coefficient (accuracy, truth, falsehood), formed from the trust placed in the interlocutor (he may be mistaken, lying, joking, etc.). In addition, the phrase may have been "understood" (decoded) incorrectly ("you did not understand me", "I did not mean that"), or knowledge of the subject may be incomplete (some data / images / connections are missing). In this case, the corresponding "line of conduct" is used.

3. EMO filter. An equally important part of the conversation. Predicting behavior is very difficult: it requires a large database of "situations", which can be filled in "manually". We need a very clever algorithm for "remembering" situations and "comparing them with the current one" (by similarity).

Let's try some more examples.

"A large number of animals live in the forest, for example - wild boars, moose, wolves, foxes, hares, squirrels, mice."

Unlike the previous case, here all the links are general. Consequently, they sit higher up the tree and can be recorded as separate links. We write:

(BOAR) -> YES -> (ANIMAL)

(MOOSE) -> YES -> (ANIMAL)

(WOLF) -> YES -> (ANIMAL)

(FOX) -> YES -> (ANIMAL)

(HARE) -> YES -> (ANIMAL)

(SQUIRREL) -> YES -> (ANIMAL)

(MOUSE) -> YES -> (ANIMAL)

(FOREST) -> YES -> [HAS] -> (ANIMALS)

There are animals in the forest. Which ones? Can we answer this question? In general, no.

If a giraffe and a camel from another sentence happen to land in the list of animals, then a selection by that list would also place them among the animals living in the forest. Thus we have found the point up to which the connection is general and from which it becomes concrete. So we add:

(FOREST) -> YES -> [HAS] -> (BOAR)

(FOREST) -> YES -> [HAS] -> (MOOSE)

(FOREST) -> YES -> [HAS] -> (WOLF)

(FOREST) -> YES -> [HAS] -> (FOX)

(FOREST) -> YES -> [HAS] -> (HARE)

(FOREST) -> YES -> [HAS] -> (SQUIRREL)

(FOREST) -> YES -> [HAS] -> (MOUSE)

Or in abbreviated form:

(FOREST) -> YES -> [HAS] -> (BOAR) / (MOOSE) / (WOLF) / (FOX) / (HARE) / (SQUIRREL) / (MOUSE)

So the "understanding" of the phrase "A large number of animals live in the forest, for example - wild boars, moose, wolves, foxes, hares, squirrels, mice", in its final form, looks like this:

(BOAR) -> YES -> (ANIMAL)

(MOOSE) -> YES -> (ANIMAL)

(WOLF) -> YES -> (ANIMAL)

(FOX) -> YES -> (ANIMAL)

(HARE) -> YES -> (ANIMAL)

(SQUIRREL) -> YES -> (ANIMAL)

(MOUSE) -> YES -> (ANIMAL)

(FOREST) -> YES -> [HAS] -> (BOAR) / (MOOSE) / (WOLF) / (FOX) / (HARE) / (SQUIRREL) / (MOUSE)
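The giraffe problem is easy to reproduce in a few lines. This is a rough sketch assuming two plain dictionaries of sets; the names `is_a`, `has`, `animals_general`, and `animals_specific` are hypothetical, and only a subset of the animals is used.

```python
from collections import defaultdict

is_a = defaultdict(set)   # kind -> members, e.g. ANIMAL -> {BOAR, ...}
has = defaultdict(set)    # place -> inhabitants, e.g. FOREST -> {BOAR, ...}

# General links, including animals that came from some other sentence.
for animal in ("BOAR", "WOLF", "FOX", "MOUSE", "GIRAFFE", "CAMEL"):
    is_a["ANIMAL"].add(animal)

# Concrete links taken from the phrase about the forest.
for animal in ("BOAR", "WOLF", "FOX", "MOUSE"):
    has["FOREST"].add(animal)

def animals_general(place):
    """Naive answer: only 'the forest has animals' is known, so list them all."""
    return set(is_a["ANIMAL"])

def animals_specific(place):
    """Answer from the concrete links recorded for this place."""
    return has[place] & is_a["ANIMAL"]

print(sorted(animals_general("FOREST")))   # includes GIRAFFE and CAMEL
print(sorted(animals_specific("FOREST")))  # only what the phrase stated
```

The general link alone cannot answer "which animals?"; the concrete links can.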

Now, having read the text and built the corresponding scheme, we learn to work with the data and to answer questions.

A bit about the general / specific.

Take the previous phrase as an example, but modify it slightly:

"A large number of animals are found in the forests of Transbaikalia, for example - wild boars, moose, wolves, foxes, hares, squirrels, mice."

In contrast to the previous case, adding just one word immediately made the thought concrete. Everything else remained the same:

(BOAR) -> YES -> (ANIMAL)

(MOOSE) -> YES -> (ANIMAL)

(WOLF) -> YES -> (ANIMAL)

(FOX) -> YES -> (ANIMAL)

(HARE) -> YES -> (ANIMAL)

(SQUIRREL) -> YES -> (ANIMAL)

(MOUSE) -> YES -> (ANIMAL)

(TRANSBAIKALIA) -> YES -> [HAS] -> (FOREST)

(FOREST) -> YES -> [HAS] -> (ANIMALS)

But this structure:

(FOREST) -> YES -> [HAS] -> (BOAR) / (MOOSE) / (WOLF) / (FOX) / (HARE) / (SQUIRREL) / (MOUSE)

can no longer be used, because FOREST is a general concept and it is not known which specific forest is meant, whereas our statement names a specific one: the forests of Transbaikalia.

In addition, such a record could corrupt our ideas about the wildlife of forests in general.

Therefore, our record will be even longer:

(TRANSBAIKALIA) -> YES -> [HAS] -> (FOREST) -> YES -> [HAS] -> (BOAR) / (MOOSE) / (WOLF) / (FOX) / (HARE) / (SQUIRREL) / (MOUSE)

And taking into account the binding to I:

(I) -> YES -> [I KNOW]: (TRANSBAIKALIA) -> YES -> [HAS] -> (FOREST) -> YES -> [HAS] -> (BOAR) / (MOOSE) / (WOLF) / (FOX) / (HARE) / (SQUIRREL) / (MOUSE)
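One way to keep the specific fact from leaking into the general concept is to key each fact by its full path of owners. The sketch below assumes exactly that; `facts`, `add`, and `lookup` are hypothetical names, and only a subset of the animals is used.

```python
# Facts are keyed by the full path from the most specific owner down to the
# concept, so a statement about one forest says nothing about forests in general.
facts = {}

def add(path, members):
    facts.setdefault(tuple(path), set()).update(members)

def lookup(path):
    return facts.get(tuple(path), set())

add(["TRANSBAIKALIA", "FOREST"], {"BOAR", "WOLF", "FOX", "MOUSE"})

print(sorted(lookup(["TRANSBAIKALIA", "FOREST"])))
print(lookup(["FOREST"]))  # empty: nothing is claimed about forests in general
```

The longer key is the code counterpart of the longer record: it costs a little space but keeps the general notion of a forest clean.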

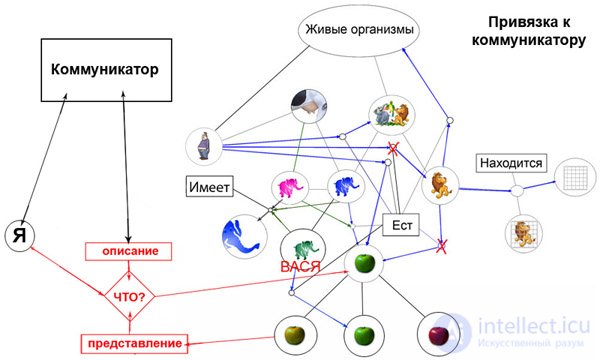

A small diagram (for our example with animals).

It shows the "binding to the communicator."

I think it is depicted clearly? If not, I am waiting for "clarifying questions."

In general, what we have been working through for so long is simply "figurative thinking."

I have repeatedly been asked: do we think in words? (I have already given the answer.)

Yes and no. Here is why:

Binding to the communicator is another branch of ONE equation.

Picture a huge formula with an "=" sign in the middle. Everything to the left of the sign is thinking "in words"; everything to the right is thinking in images. But that is far from all: there is also an abstract mode of thinking (God grant that in a year or so I can describe its working principle to you :) and that is not all either...

But let us come back down to earth. So do we think in words after all?

Yes. Thinking in words and thinking in images proceed in parallel.

Examples:

1.1 Is the earth shaped like a ball?

1.2 2x2 =?

and for comparison:

2.1 How many faces does a dodecahedron have?

2.2 213 x 2 + 174 = ? (without a calculator!)

As you can see, the first two questions did not require you to "think", since the answer was already "on the tip of the tongue". For the second two you had to work it out. That involves not only the subroutine that works with memory, but also logic and the thought process...

The most stable connections between images are placed into an image (top level) and labeled (indexed). This is called an image as a set of images.

The word "concept" has roughly the same meaning. The same applies to the most common phrases. There is a kind of "virtual buffer" (cache) holding the most frequently used phrases, expressions, jargon, etc.

Profession, education, cultural level, IQ, hobbies, and so on all leave their imprint on a person, and they determine which phrases / knowledge end up in this buffer.

What are they for? Simple: TO ACCELERATE the process of thinking. It is a CACHE.

This knowledge is already computed, tested, and very often in demand.

Accordingly, a complex question is one whose answer "does not lie on the surface." And if you need to "think", that is the thinking process.

Accordingly, "slang" is what we call the most frequently used words and sentences, and we "recognize" such phrases faster.

If a "non-standard phrase" gets into the buffer (unusual or unknown information, or something "from a different opera"), thinking follows a different chain of neurons, and other "mechanisms" of memory and thinking are engaged.

This means that in order to UNDERSTAND how the "binding to the communicator" is performed, you need to KNOW how "figurative thinking" works and HOW the communicator works.

It is a subroutine (function) that takes a "phrase in the external language" as input and produces a "link of images" as output.

But that is not all. A "simple translation" (binding) is not enough, since the "external" and "internal" languages differ and the "translation" is approximate. On top of that, additional "noise and interference" are superimposed. WHAT MATTERS IS THE MEANING: the true value of the resulting connection.

Think about it for a moment and it becomes clear why there is still no perfect translator (for example, from English to Russian). The problem is not maintaining an English / Russian word base (that is easy to implement), nor grammar (that has existed for a long time), nor even neologisms and the like, which can be matched. The problem is that the meaning is still lost. That is, after a "literal" (word-for-word) translation, the text needs additional editing to make it syntactically correct and to prevent distortion of the semantic layer.

How so? We did not change ANYTHING in the original text, yet the meaning was lost? Exactly! The same phrases, jargon, and so on have different meanings in different languages. This is especially true of advertising slogans: short, sonorous phrases. Example: the Chupa Chups advertising slogan, "We taught the whole world to suck." In Russia, that does not fly... The meaning is what matters. Meaning is tied not to the word but to the thought (i.e., a little deeper). This means that a "perfect translator" must be able to "think" in both languages: to translate a phrase, you need to understand not what is said, but what is meant.

"Competence" of the interlocutor.

The interlocutor may be mistaken or uninformed, may lie, fantasize, mock, or joke, or may simply be a "moron" or a troll...

Therefore context is very important: it can flip the meaning to its opposite.

So is the general emotional tone of the conversation (a joke or a serious talk).

And finally, the most important thing is IMPORTANCE (priority). If this is NECESSARY information, then "listen and delve into everything said." If it is just chatter, then "do not think deeply", joke, and "have fun."

Given all this, I cannot "authoritatively state" that the communicator (translator) is a separate subprogram. Most likely it is part of the overall scheme. But drawing it as a separate block on the diagram is necessary to convey its importance.

> 1. How many types of communicator bindings are planned?

Actually 2.

1. in / out.

2. recheck

(and will he understand me?)

That is, after the answer is "generated", it is sent back to the input, recognition is performed, and the result is linked by context (the current conversation) to the "virtual" thought ball.

If the result is the same (or approximately the same) as planned, the data is sent on to the speech organs. But is this necessary for a chat bot?

I do not know this...
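For what it is worth, the recheck is cheap to sketch: generate the answer, run it back through recognition, and compare with what was planned. Everything below is a toy under stated assumptions: `generate` and `recognize` are trivial stand-ins for the real communicator, and the overlap measure and the `threshold` value are invented for the example.

```python
def generate(links):
    """Toy generator: one short sentence per link."""
    return ". ".join(f"{s} {rel.lower()} {o}" for s, rel, o in sorted(links))

def recognize(text):
    """Toy recognizer: the inverse of generate()."""
    links = set()
    for sentence in text.split(". "):
        s, rel, o = sentence.split()
        links.add((s, rel.upper(), o))
    return links

def recheck(planned, gen, rec, threshold=0.8):
    """Return the answer only if recognizing it gives back (roughly) the plan."""
    answer = gen(planned)
    understood = rec(answer)
    union = planned | understood
    overlap = len(planned & understood) / len(union) if union else 1.0
    return answer if overlap >= threshold else None

planned = {("ROOM", "HAS", "WALLPAPERS"), ("HE", "HAS", "ROOM")}
print(recheck(planned, generate, recognize))
```

If recognition of the generated answer loses the planned links, `recheck` returns `None` and the answer is not sent on.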

> 2. Object and Process Characteristics.

The characteristics of an object depend on the object. We go down to the "leaves" and see what the object is and what characteristics it has.

The characteristics of a process depend on the object with which the process is "filled".

The process's "own" characteristics are secondary; as I have already written, they are assigned via the "wind rose" (coordinate system + time + importance).

> 2.2 How is the process determined, and where is the object?

Another question from the category of "go there, I do not know where; bring that, I do not know what."

Without a database, it is impossible to "identify" in new information where the object is and where the process is (although linguistics is clever and can help in 70-80% of cases).

With a "big enough" database, this is no longer a problem, since we have the opportunity to "compare". In natural intelligence, this is achieved by several different modes of thinking (associative, abstract thinking, intuition, and of course "experience": a database of "successful" identifications).

The question is, HOW to implement it for a chat bot?

Therefore I suggested, for a start, giving up recognition altogether until a "minimum necessary database" has been accumulated, and limiting ourselves at first to a "dialogue in the internal language": the language of images.

In addition, there are 2-3 ideas for expanding this database significantly without the hard labor of "manual database stuffing" (some very simple algorithms would have to be written to replenish the database from an explanatory dictionary, Wikipedia, encyclopedias, etc.), so perhaps the problem will not arise at all.

But if it does, no chat bot will be able to "resolve" it on its own ;)

Therefore, the instruction "if you do not know, ask" must be built in.
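The "do not know, ask" instruction can be sketched as a lookup with a fallback question. The table `KNOWN_KINDS` and the function `identify` are hypothetical; a real database of identifications would be far larger.

```python
# Hypothetical database of already identified words.
KNOWN_KINDS = {"ROOM": "object", "WALLPAPERS": "object", "HAS": "process"}

def identify(word):
    """Return (kind, None) for a known word, or (None, clarifying question)."""
    kind = KNOWN_KINDS.get(word.upper())
    if kind is None:
        return None, f'I do not know "{word}". Is it an object or a process?'
    return kind, None

print(identify("room"))
print(identify("samovar"))
```

The answer to the clarifying question would then be written back into the database, so the same question is not asked twice.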

> 3. How is goal setting for a given intelligence?

For a specific implementation, a chat bot, the goal is a "full-fledged dialogue with a person." As for the priority of "secondary tasks" such as replenishing the database of objects / connections, clarifying meaning, etc., let the bot "handle" that itself ;)

This should be understood as follows: the ordering of priorities is set on the basis of a whole heap of parameters (including ECO), and I will not describe them yet...

Although at first it is possible simply to "mark" the entered information with importance labels.

What do you think?

SEARCHING FOR THE MEANING OF PHRASES

A phrase may have several layers of meaning. God willing, we can teach the bot to find at least one...

The first filter.

A database of "hackneyed phrases" whose meaning is already known / computed. It is used to speed up computation.

The scientific term for them is phraseological units (idioms).

Besides these, however, there is also SLANG and the "Padonkaff" language (Russian internet slang). There are also abbreviations of words / phrases specific to each profession and, in long-running conversations with one person, abbreviations that recall the meaning of past conversations.

First:

It works as a label (reference, shortcut) to additional text. That is, the full text is pulled out for "computation" only when specifically needed; in everyday conversation only the MEANING of the phrase (the understanding) is used.

How to do it? With a correspondence table. For example:

TO LOSE HEART (падать духом) means to despair, to be deeply upset, to become despondent

TO HAVE LOST HEART (упасть духом) means TO LOSE HEART

TO FALL IN SPIRIT (пасть духом) means TO LOSE HEART

That is, translated into the internal language, it looks like this:

(LOSE HEART)<-[IS]<-YES->(DESPAIR)

Or in abbreviated form:

(LOSE HEART)<-YES->(DESPAIR)

which literally means "equals", =

Right-hand side (of the expression) = left-hand side (of the expression): they are identical in meaning.

The opposite example:

Do not lose heart.

TO LOSE HEART means to despair, to be deeply upset, to become despondent

(LOSE HEART)<-[IS]<-YES->(DESPAIR)

Not losing heart means the opposite in meaning.

(NOT LOSE HEART)<-[IS]<-NO->(DESPAIR)

Or in abbreviated form:

(NOT LOSE HEART)<-NO->(DESPAIR)

Second:

Store inverse constructions (antonyms) for links in the database. (The same thing, but reversed.)

I.e.:

(NOT LOSE HEART)<-[IS]<-NO->(LOSE HEART)

(LOSE HEART)<-[IS]<-YES->(DESPAIR)

Third: store them in the database as SYNONYMS.

(LOSE HEART)<-[IS]<-YES->(DESPAIR)

(LOSE HEART)<-[IS]<-YES->(BE UPSET)

(LOSE HEART)<-[IS]<-YES->(BECOME DESPONDENT)

Or in abbreviated form:

(LOSE HEART)<-[IS]<-YES->(DESPAIR)/(BE UPSET)/(BECOME DESPONDENT)
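The three ideas (variants mapped to one canonical form, negation flipping YES to NO, and a list of synonyms as the meaning) fit into one small lookup. This is a sketch only, with the English idiom "lose heart" standing in for the example; the tables `VARIANTS`, `MEANINGS`, `NEGATIONS` and the function `understand` are hypothetical.

```python
# Variant forms point at one canonical idiom; the idiom points at its synonyms.
VARIANTS = {"losing heart": "lose heart", "lost heart": "lose heart"}
MEANINGS = {"lose heart": ["despair", "be deeply upset", "become despondent"]}
NEGATIONS = ("do not ", "don't ")

def understand(phrase):
    """Return (sign, meanings) for a known idiom, or None if it is not in the table."""
    text = phrase.lower().strip(" .!?")
    sign = "YES"
    for prefix in NEGATIONS:
        if text.startswith(prefix):
            sign, text = "NO", text[len(prefix):]  # negation flips the link sign
            break
    canonical = VARIANTS.get(text, text)
    meanings = MEANINGS.get(canonical)
    return None if meanings is None else (sign, meanings)

print(understand("Losing heart"))
print(understand("Don't lose heart."))
print(understand("Green wallpaper"))
```

Storing the negated form as a sign on the same entry avoids keeping a second copy of the synonym list.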

For "concepts" (a dictionary), it is necessary to store not only a reference to the understanding but also a reference to the "original dictionary text", which may be needed as a quotation.

The same applies to conversations when something already said is brought up again.

Do you remember me telling you how we went to the May 1 demonstration?

The bot's reaction: Do I remember / NOT remember? Did he tell me / NOT tell me? Are there mentions in the database? Yes / No.

No deeper dive happens until it is needed. Accordingly, the "behavioral reaction" and the binding to I:

Yes, I remember. No, please remind me.

Data structure.

Store as a list:

1. identifier

2. understanding (semantic meaning)

3. dictionary definition

4. clarification (when it is used)

5. EMO component

6. instruction (behavioral reaction)
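The six fields map naturally onto a record type. The sketch below assumes a dataclass; the field types, the numeric EMO value, and the sample instruction are illustrative guesses, since the text lists only the field names.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class PhraseEntry:
    identifier: str                 # 1. identifier
    understanding: List[str]        # 2. understanding (semantic meaning)
    dictionary_definition: str      # 3. original dictionary text, usable as a quotation
    usage_note: str = ""            # 4. clarification (when it is used)
    emo: float = 0.0                # 5. EMO component (assumed: negative = unpleasant)
    instruction: str = ""           # 6. behavioral reaction

entry = PhraseEntry(
    identifier="LOSE HEART",
    understanding=["DESPAIR", "BE UPSET", "BECOME DESPONDENT"],
    dictionary_definition="to despair, to be deeply upset, to become despondent",
    usage_note="said of a person after a setback",   # hypothetical
    emo=-0.5,                                        # hypothetical value
    instruction="encourage the interlocutor",        # hypothetical
)
print(entry.identifier, entry.understanding)
```

Keeping the dictionary text next to the understanding means the bot can quote the definition without recomputing anything.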

The third filter works in a similar way.

Screening out filler words and profanity (used to glue words into sentences).

Replacing complex constructions with simpler ones from the correspondence database (synonyms).

Example: "Esteemed interlocutor, would you be so kind as to tell me the following fact..." is replaced by: "say..."

"I bow low and slavishly beg..." is replaced by "listen..."

There is just one small nuance here that usually goes unnoticed.

You cannot simply throw profanity out of a sentence, replacing it with silence or a "beep".

Profanity has a perfectly normal semantic meaning (from the point of view of connections, not morality), sometimes even a more capacious one than ordinary words. Dictionaries of Russian profanity exist.

There are also countless popular jokes that play the same role in communication as idioms. (Only the semantic content is used: a reference to the cluster.)

None of this can be thrown away, because the meaning would be lost or changed.
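A rough sketch of the third filter that follows this rule: filler words are dropped, verbose constructions are shortened, and strong words are replaced by their meaning rather than deleted. The three tables are tiny hypothetical stand-ins (with a mild word in place of real profanity), and `third_filter` is an invented name.

```python
FILLERS = {"well", "like", "um"}                        # carry no meaning: dropped
SIMPLER = {"i bow low and slavishly beg": "listen"}     # verbose -> simple
MEANING = {"damn": "(strong annoyance)"}                # strong word -> its meaning

def third_filter(phrase):
    text = phrase.lower()
    for verbose, simple in SIMPLER.items():
        text = text.replace(verbose, simple)
    words = []
    for word in text.split():
        bare = word.strip(",.!?")
        if bare in FILLERS:
            continue                                    # filler: safe to drop
        words.append(MEANING.get(bare, word))           # replace, never delete
    return " ".join(words)

print(third_filter("Well, I bow low and slavishly beg, damn, help"))
```

The strong word survives as a marker of meaning, so the emotional content of the phrase is still available to the EMO filter.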

(UNFINISHED)

