Lecture

An image as an object may be a concept, but not every image of an object is a concept.

However, a "concept" is always an "image_to_set_of_images".

To repeat: the most stable connection between images itself becomes an image. It is marked with a label (given a proper name) and used on equal terms with other images.

Images can intersect (within connections) at any level.

"Image_object" is treated as a "variable" in the connection of images (object) -> [process / action] -> (object).
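A minimal Python sketch of such a connection, assuming it can be stored as a plain triple. The `Link` class and the `fill` helper are hypothetical names for illustration, not part of the original theory; the two object slots play the role of the "variables" into which concrete images are substituted.

```python
from __future__ import annotations

from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Link:
    """One connection of images: (object) -> [process / action] -> (object)."""

    subject: str  # first image_object, a "variable" slot
    action: str   # the process / action
    obj: str      # second image_object, also a "variable" slot


def fill(template: Link, subject: str | None = None, obj: str | None = None) -> Link:
    """Substitute concrete images into the variable slots of a link."""
    return replace(
        template,
        subject=subject if subject is not None else template.subject,
        obj=obj if obj is not None else template.obj,
    )


eats = Link("ELEPHANT", "EAT", "APPLES")
print(fill(eats, subject="PETE"))  # Link(subject='PETE', action='EAT', obj='APPLES')
```

The action stays fixed while either object slot can be rebound, which is all "variable" means in this sketch.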

A concept (in the given interpretation) is a pointer to a link (or is this link itself).

Interrogative pronouns (in Russian) are vectors for choosing the direction of the path.

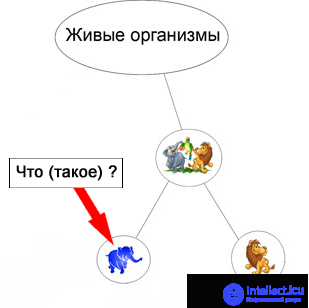

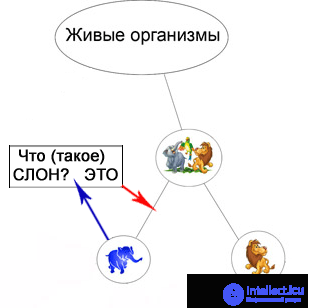

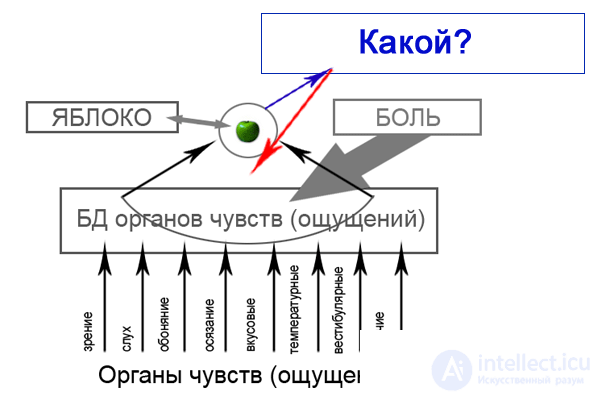

To begin, I will try to sketch the simplest concept: WHAT?

WHAT? is a pointer to an object.

However, this is not enough for understanding. WHAT? is a "general" question; to make it "specific", it needs a "clarification".

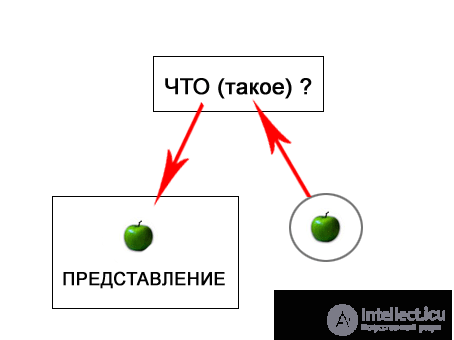

WHAT (such) IS IT? or WHAT DOES IT REPRESENT (in itself)?

1. This is a view from the outside (observer rule): Tell me, what is this ELEPHANT?

2. This is an inside view: What is an ELEPHANT?

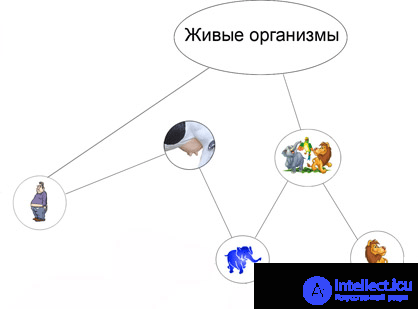

Let's redraw the picture a little differently.

(WHAT? is a question, so to understand how to work with it, the arrow should point to the answer.)

It got worse, didn't it? ;))

The whole problem is that we cannot imagine HOW IT IS ARRANGED in our head...

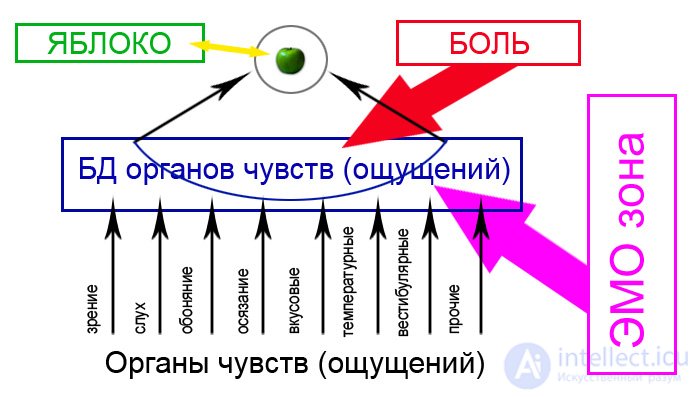

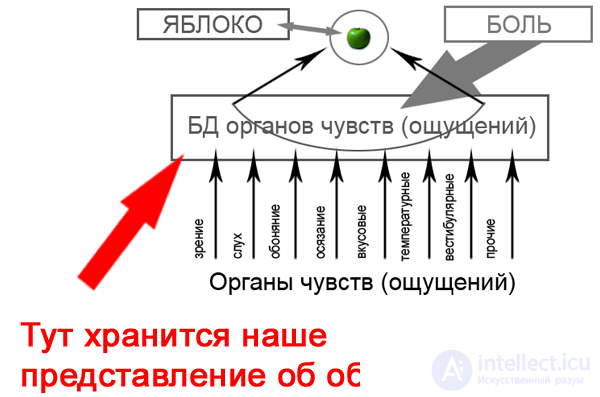

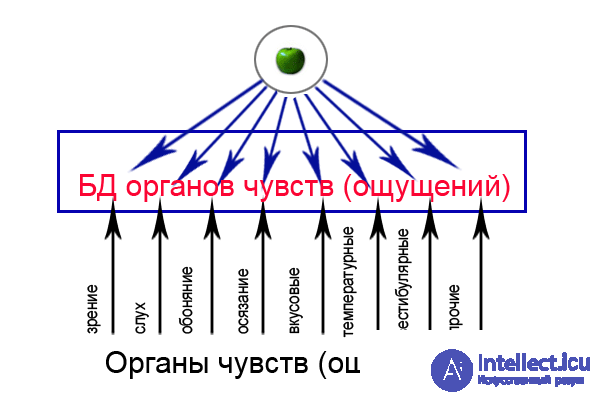

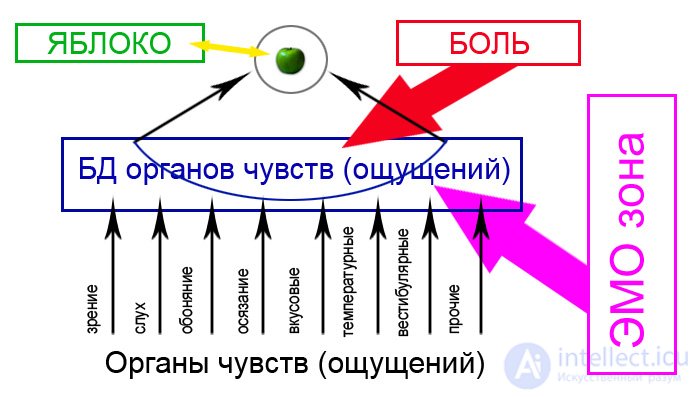

Let's return to the beginning, to the formation of images:

Once again, read how an image is formed:

Pain is the determining factor for "remembering" the most dangerous characteristics of an object. But "personal" emotional impressions (link_to_Self, the EMO-zone) are decisive for memorizing the "interesting" (important) aspects of the image. Speech, as the second signal system, is simply a link to the image, and vice versa.

Trite as it may sound:

From here on, we work only with it.

And here:

It consists of:

1. Sight.

2. Hearing.

3. Smell.

4. Touch.

5. Taste.

Secondary:

6. Pain.

7. Temperature.

(although it rather belongs to touch; let's say temperature sensations are included in the "touch" class)

And the "very" unimportant ones:

8. Orientation in space (deviation from the vertical).

9. Orientation in time (poorly developed, but present).

10. Sexual desire (and other physiological needs of the body, e.g. hunger, thirst).

Data is stored in the form in which it is received.

The collection of data about any object / process is the REPRESENTATION of the image.

Mentally representing an object means "pulling out" information about it from memory (from the database).

Difficult? But that is how it is...
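A minimal sketch of this storage model, assuming each raw sensation is kept unprocessed (here, as a string) under its sensory channel. `memory`, `perceive` and `represent` are hypothetical names; the channel list covers only the primary channels plus pain, with temperature folded into touch as the text suggests.

```python
from __future__ import annotations

from collections import defaultdict

# Sensory channels named in the text; temperature is folded into "touch".
CHANNELS = ("sight", "hearing", "smell", "touch", "taste", "pain")

# memory[object][channel] -> raw data, kept in the form it was received
memory: defaultdict[str, defaultdict[str, list[str]]] = defaultdict(
    lambda: defaultdict(list)
)


def perceive(obj: str, channel: str, datum: str) -> None:
    """Store one raw sensation about an object."""
    if channel not in CHANNELS:
        raise ValueError(f"unknown channel: {channel}")
    memory[obj][channel].append(datum)


def represent(obj: str) -> dict[str, list[str]]:
    """Mental representation: pull everything about obj out of memory."""
    if obj not in memory:
        return {}  # nothing stored; avoid creating an empty entry
    return {channel: list(data) for channel, data in memory[obj].items()}


perceive("APPLE", "sight", "red, round")
perceive("APPLE", "taste", "sweet")
print(represent("APPLE"))  # {'sight': ['red, round'], 'taste': ['sweet']}
```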

And another quote from older material:

And now, actually, the question: how to work with it?

I see no other way out than to copy the entire structure.

For this, you will have to go down to the level of abstractions.

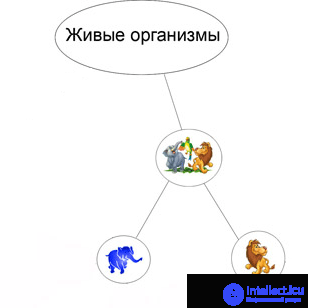

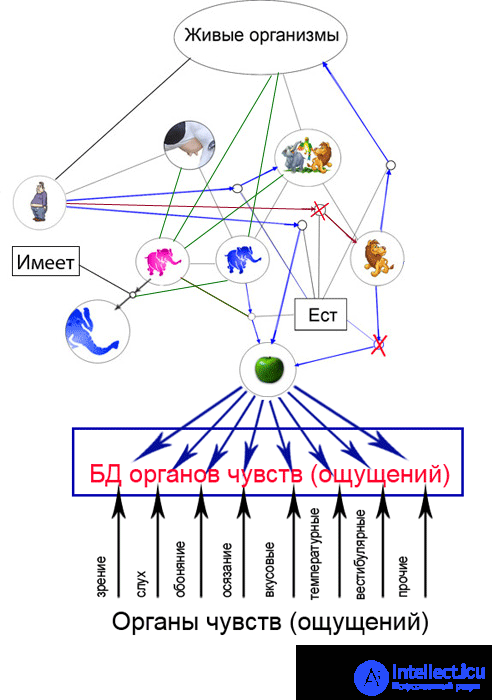

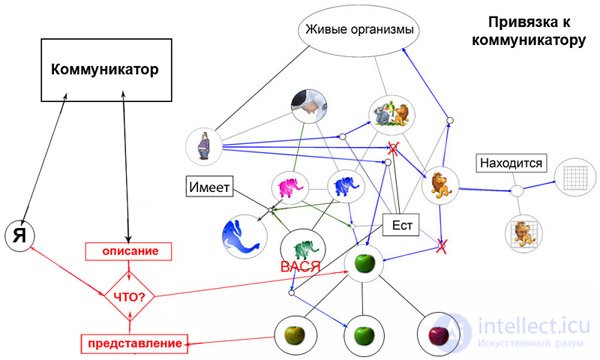

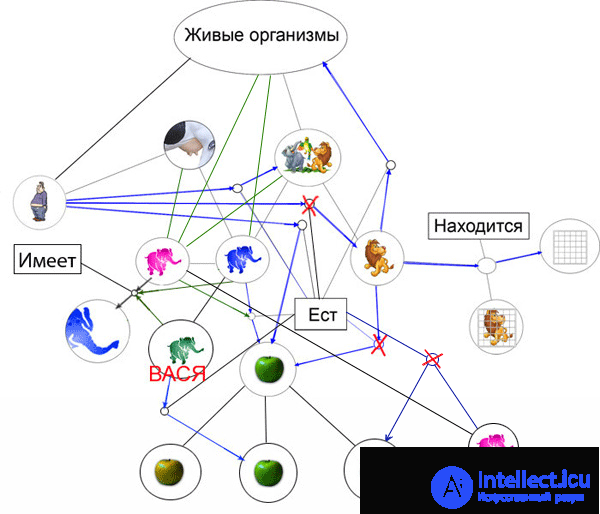

Let's return to one of the first schemes:

Add the concept WHAT?

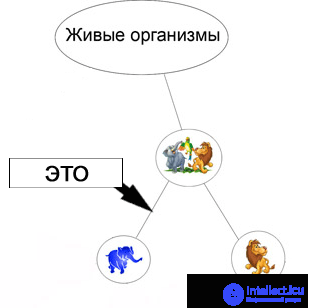

The answer to the question WHAT (such) IS an ELEPHANT? is the concept -> IT.

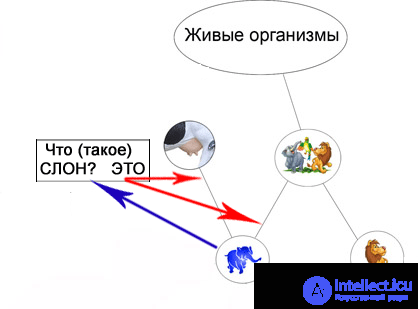

However, let's go back to the beginning of the article...

Let's redraw the picture a little differently.

(WHAT? is a question, so to understand how to work with it, the arrow should point to the answer.)

So let's combine these two "concepts" into one:

Now we can get the "answer to the question."

Finding the answer to the question WHAT (such) IS an ELEPHANT? comes down to finding the ancestor of ELEPHANT.

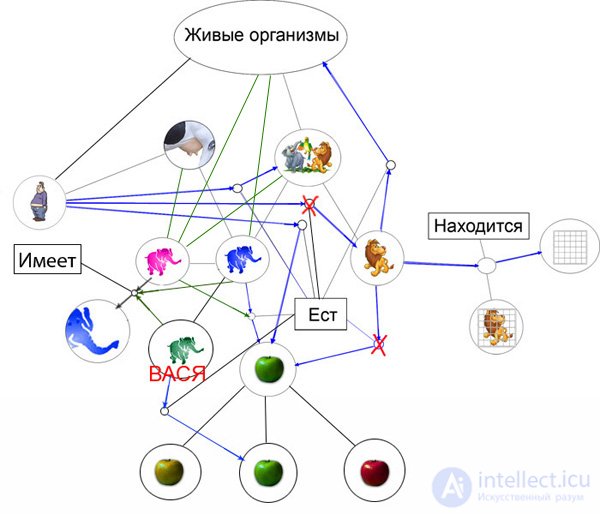

Consider the situation where there are several such connections, and both connections are equivalent:

In this case, when choosing the "path", we run into a fork.

An elephant is an animal.

An elephant is a mammal.

Both links are the "answer to the question" WHAT?
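The ancestor search with a fork can be sketched as follows, assuming IS-A links are stored as a mapping from an image to its direct ancestors. `IS_A` and `what_is` are hypothetical names; when the lookup yields more than one ancestor, the sketch simply returns every branch of the fork rather than choosing one.

```python
from __future__ import annotations

# Hypothetical IS-A links: image -> its direct ancestors.
IS_A: dict[str, list[str]] = {
    "ELEPHANT": ["ANIMAL", "MAMMAL"],  # two equivalent links: a fork
}


def what_is(image: str) -> list[str]:
    """WHAT (such) IS <image>?  The answer is IT: the direct ancestor(s).

    A single ancestor gives one answer; several equivalent ancestors
    form a fork, and every branch is returned as a valid answer.
    """
    return list(IS_A.get(image, []))


answers = what_is("ELEPHANT")
if len(answers) > 1:
    print("fork:", answers)  # fork: ['ANIMAL', 'MAMMAL']
```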

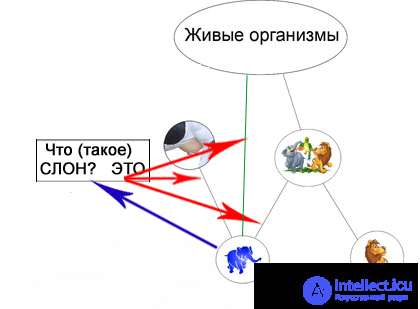

Let's return to the article Theory of ZonoID. Thought data structure. When a connection is "self-built" (Elephant - a living organism), the newly built link is also an answer.
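A sketch of "self-building" under the assumption that only direct IS-A links are stored: a breadth-first walk collects every reachable ancestor, and any ancestor that is not a direct link becomes a newly built link. All names (`IS_A`, `all_ancestors`, `self_build`) and the toy graph are hypothetical.

```python
from __future__ import annotations

from collections import deque

# Hypothetical stored IS-A links (direct ancestors only).
IS_A: dict[str, list[str]] = {
    "ELEPHANT": ["ANIMAL", "MAMMAL"],
    "ANIMAL": ["LIVING ORGANISM"],
    "MAMMAL": ["LIVING ORGANISM"],
}


def all_ancestors(image: str) -> list[str]:
    """Every ancestor reachable through IS-A links, nearest first (BFS)."""
    seen: list[str] = []
    queue = deque(IS_A.get(image, []))
    while queue:
        parent = queue.popleft()
        if parent in seen:
            continue
        seen.append(parent)
        queue.extend(IS_A.get(parent, []))
    return seen


def self_build(image: str) -> set[tuple[str, str]]:
    """Links "image IS ancestor" implied by the graph but not stored directly."""
    direct = set(IS_A.get(image, []))
    return {(image, a) for a in all_ancestors(image) if a not in direct}


print(self_build("ELEPHANT"))  # {('ELEPHANT', 'LIVING ORGANISM')}
```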

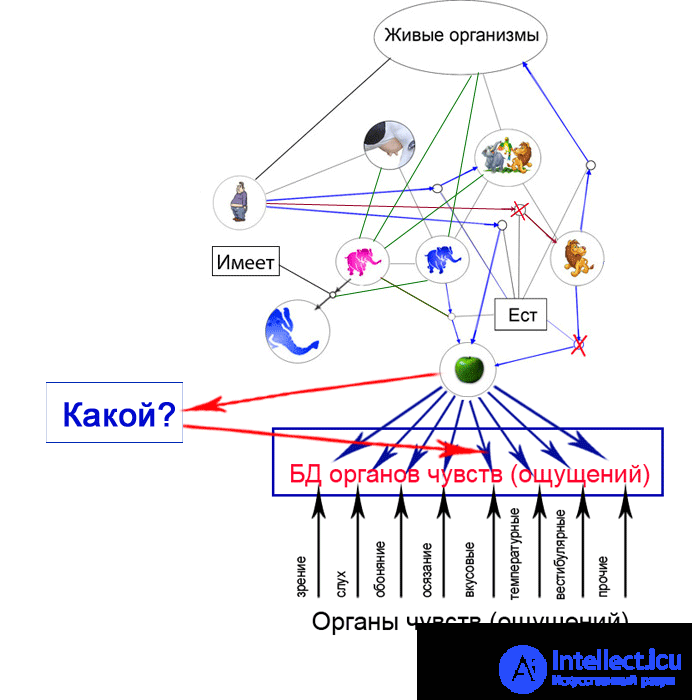

Consider the concept WHAT KIND?

The answer to the question WHAT KIND? is a set of connections defining the characteristics of the object.

Since WHAT KIND? on its own is a general question, the whole selection of links is the answer, as in the previous case. But in the previous case we had only a fork, while here there are many options: which one to choose? Or give them all?

Let's redraw the scheme (the previous one, let me remind you, was the "image formation scheme"; now we need a "data storage scheme").

And connect it to the scheme from Theory of ZonoID. Thought data structure.

We specify the question by adding a clarification.

What is an apple like in taste?
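A minimal sketch of WHAT KIND? with and without a clarification, assuming characteristic links are grouped by attribute. `TRAITS` and `what_kind` are hypothetical names and the taste values are toy data; the three apple colors reuse those from the ELEPHANT PETE example.

```python
from __future__ import annotations

# Hypothetical characteristic links: image -> attribute -> values (toy data).
TRAITS: dict[str, dict[str, list[str]]] = {
    "APPLE": {
        "color": ["yellow", "red", "green"],
        "taste": ["sweet", "sour"],
    },
}


def what_kind(image: str, clarification: str | None = None) -> dict[str, list[str]]:
    """WHAT KIND? returns the whole selection of characteristic links.

    A clarification (e.g. "taste") narrows the general question down to
    the links of a single attribute.
    """
    traits = TRAITS.get(image, {})
    if clarification is None:
        return {attr: list(values) for attr, values in traits.items()}
    return {clarification: list(traits.get(clarification, []))}


print(what_kind("APPLE", "taste"))  # {'taste': ['sweet', 'sour']}
```

Without a clarification the caller gets every attribute, which matches the "give them all?" option in the text.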

Refer to this article: Theory of ZonoID. Binding to the communicator. (We turn speech into thought, and thought into speech)

Consider the scheme from the article.

And back to the scheme.

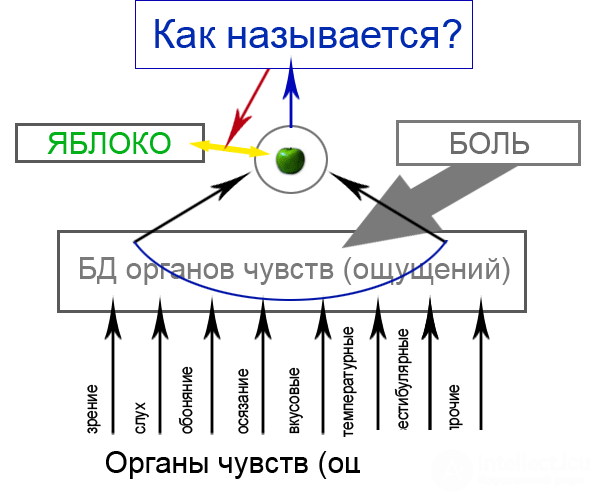

The concept HOW IS IT CALLED? (binding to the communicator) looks like this:

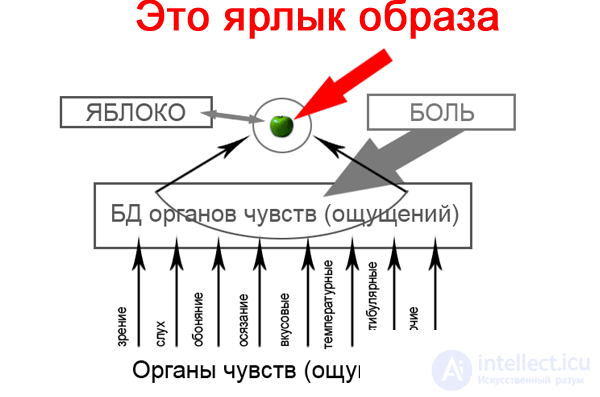

In this context, the word, i.e. the NAME, is the LABEL of the image.
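A sketch of the binding in both directions, assuming each image has exactly one label. The identifiers such as `img:elephant` and the function names are hypothetical; this sketch ignores synonyms and images that have no name.

```python
from __future__ import annotations

# Hypothetical binding to the communicator: image identifier <-> label (word).
LABELS: dict[str, str] = {"img:elephant": "ELEPHANT", "img:apple": "APPLE"}
# Reverse index, so speech can be turned back into thought.
IMAGES: dict[str, str] = {word: image for image, word in LABELS.items()}


def how_is_it_called(image_id: str) -> str | None:
    """Thought -> speech: return the label attached to an image, if any."""
    return LABELS.get(image_id)


def image_for(word: str) -> str | None:
    """Speech -> thought: return the image a word is a label of, if any."""
    return IMAGES.get(word)


print(how_is_it_called("img:elephant"))  # ELEPHANT
print(image_for("APPLE"))                # img:apple
```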

Let's return to the scheme:

Add to our scheme:

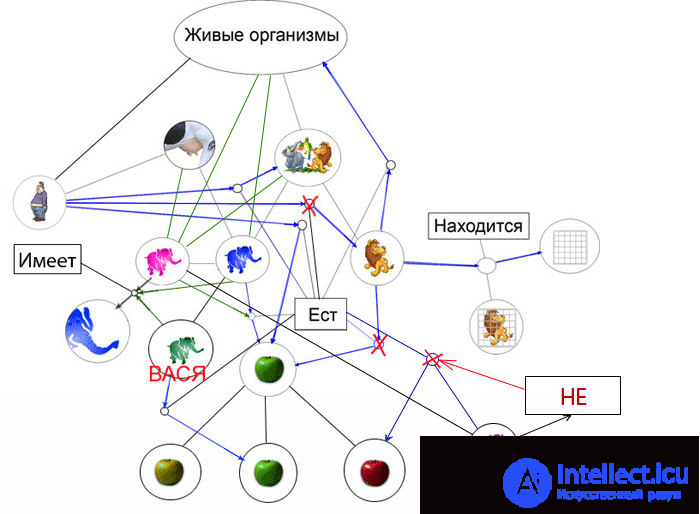

ELEPHANT PETE does not eat [only] RED apples.

(ELEPHANT PETE) -> [EAT] -> NOT -> (APPLES) [but only] -> (RED)

And now the question: Which apples does ELEPHANT PETE NOT eat?

The concept NOT: a pointer to a negative relationship.

This link is returned as the answer.
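A minimal sketch of the NOT query, assuming a negated link is stored as an ordinary link with a `negated` flag and an optional qualifier for the "[but only] red" part. The `Link` class, `LINKS` and `what_not` are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    subject: str
    action: str
    obj: str
    qualifier: str | None = None  # e.g. "red" in "[but only] red"
    negated: bool = False         # True for a link carrying NOT


# ELEPHANT PETE does not eat [only] RED apples.
LINKS = [
    Link("ELEPHANT", "EAT", "APPLES"),
    Link("PETE", "EAT", "APPLES", qualifier="red", negated=True),
]


def what_not(subject: str, action: str, obj: str) -> list[Link]:
    """NOT: return the negative links that match the question."""
    return [
        link
        for link in LINKS
        if link.negated and (link.subject, link.action, link.obj) == (subject, action, obj)
    ]


print([link.qualifier for link in what_not("PETE", "EAT", "APPLES")])  # ['red']
```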

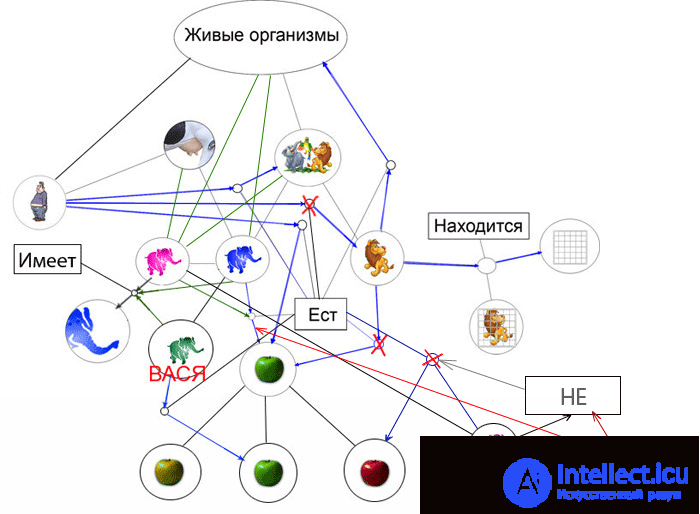

And now a more complicated question: Which apples does ELEPHANT PETE eat?

The logic of "building the path" is this: since (searching for an ancestor) ELEPHANT PETE is an ELEPHANT, and elephants eat apples, ELEPHANT PETE eats ALL apples except red ones. What apples are there? (Select all options; here, the color option.)

Yellow, red, green.

Remove the NOT link from the selection. Answer: yellow, green.

The concept EXCEPT: a pointer to a link contrary to the condition. The answer is all connections except this one.
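The three steps above (find the ancestor's link, select all options, remove those under NOT) can be sketched as follows. The dictionaries and function names are hypothetical, and the sketch assumes each image has at most one direct ancestor and that the IS-A chain contains no cycles.

```python
from __future__ import annotations

# Hypothetical toy graph for the PETE example.
IS_A = {"PETE": "ELEPHANT"}                       # PETE is an ELEPHANT
EATS = {"ELEPHANT": {"APPLES"}}                   # elephants eat apples
EATS_NOT = {("PETE", "APPLES"): {"red"}}          # NOT link, [but only] red
OPTIONS = {"APPLES": ["yellow", "red", "green"]}  # all color options


def lineage(image: str) -> list[str]:
    """The image followed by its ancestors, nearest first."""
    chain = [image]
    while chain[-1] in IS_A:
        chain.append(IS_A[chain[-1]])
    return chain


def which_eaten(subject: str, food: str) -> list[str]:
    chain = lineage(subject)
    # 1. Search the ancestors for a positive "eats <food>" link.
    if not any(food in EATS.get(image, set()) for image in chain):
        return []
    # 2. Collect the options covered by NOT links anywhere on the chain.
    excluded: set[str] = set()
    for image in chain:
        excluded |= EATS_NOT.get((image, food), set())
    # 3. EXCEPT: every option except the excluded ones.
    return [option for option in OPTIONS.get(food, []) if option not in excluded]


print(which_eaten("PETE", "APPLES"))      # ['yellow', 'green']
print(which_eaten("ELEPHANT", "APPLES"))  # ['yellow', 'red', 'green']
```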

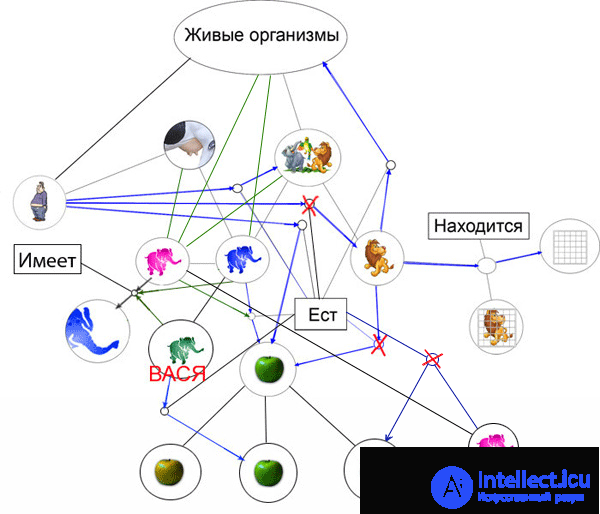

Let's return to the scheme:

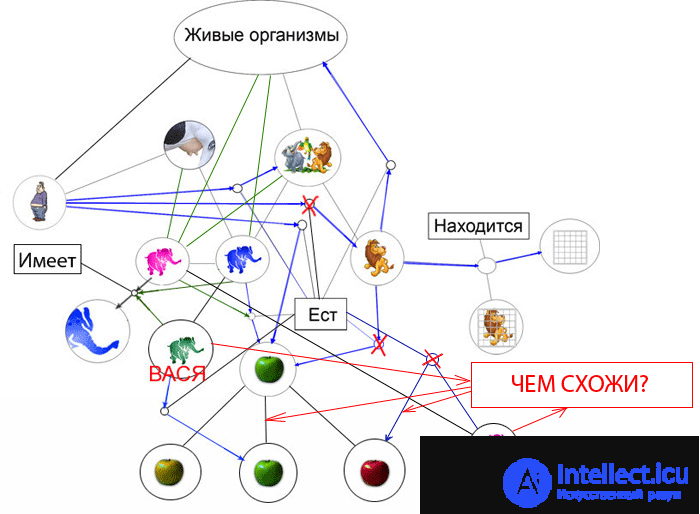

The concept WHAT IS SIMILAR?

Which links do ELEPHANT VASYA and ELEPHANT PETE have in common?

Search for a common ancestor; if none is found, exit with the flag set (different).

If one is found, compare the links and search for identical ones.

For this example: neither eats red apples, and both eat green ones.

(Besides, both are animals / mammals / living organisms, both have a trunk, and both can be eaten by a lion ;)
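A sketch of WHAT IS SIMILAR? on a toy graph, assuming links are stored per image as signed triples (action, object, yes/no) and each image has at most one direct ancestor. All names and data are hypothetical; the "different" flag is returned as the first element of a tuple.

```python
from __future__ import annotations

# Hypothetical toy graph: IS-A links and signed links (action, object, yes/no).
IS_A = {"PETE": "ELEPHANT", "VASYA": "ELEPHANT", "ELEPHANT": "MAMMAL"}
LINKS: dict[str, set[tuple[str, str, bool]]] = {
    "PETE": {("EAT", "red apples", False), ("EAT", "green apples", True),
             ("EAT", "yellow apples", True)},
    "VASYA": {("EAT", "red apples", False), ("EAT", "green apples", True),
              ("EAT", "yellow apples", False)},
}


def ancestors(image: str) -> list[str]:
    result = []
    while image in IS_A:
        image = IS_A[image]
        result.append(image)
    return result


def common_ancestor(a: str, b: str) -> str | None:
    of_b = set(ancestors(b))
    return next((x for x in ancestors(a) if x in of_b), None)


def what_is_similar(a: str, b: str) -> tuple[bool, set[tuple[str, str, bool]]]:
    """Return (different_flag, identical_links)."""
    if common_ancestor(a, b) is None:
        return True, set()  # exit with the flag (different) set
    return False, LINKS.get(a, set()) & LINKS.get(b, set())


flag, same = what_is_similar("PETE", "VASYA")
print(flag, sorted(same))
# False [('EAT', 'green apples', True), ('EAT', 'red apples', False)]
```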

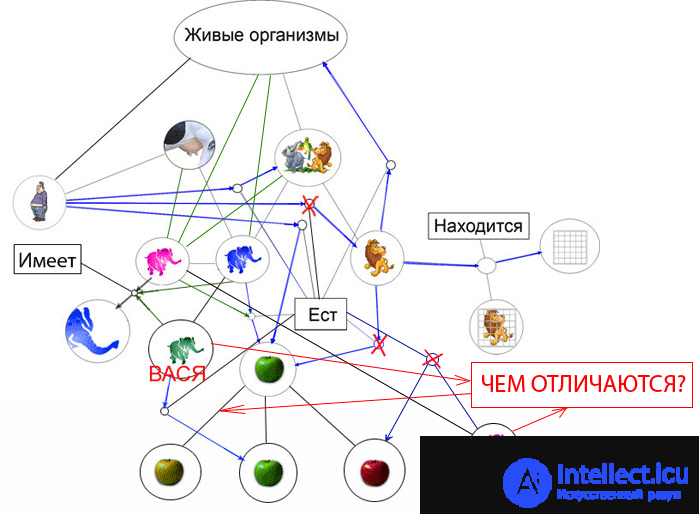

The concept WHAT IS DIFFERENT?

What is the difference between ELEPHANT VASYA and ELEPHANT PETE?

A pointer to the differing links.

Search for a common ancestor; if none is found, exit with the flag set (different).

If one is found, compare the links and search for differing ones.

For this example: ELEPHANT PETE eats yellow apples and ELEPHANT VASYA does not.
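The same kind of toy graph can illustrate WHAT IS DIFFERENT?: after the common-ancestor check, the differing links are the set differences in each direction. The data and names are hypothetical and assume each image has at most one direct ancestor.

```python
from __future__ import annotations

# Hypothetical toy graph: IS-A links and signed links (action, object, yes/no).
IS_A = {"PETE": "ELEPHANT", "VASYA": "ELEPHANT", "ELEPHANT": "MAMMAL"}
LINKS: dict[str, set[tuple[str, str, bool]]] = {
    "PETE": {("EAT", "red apples", False), ("EAT", "green apples", True),
             ("EAT", "yellow apples", True)},
    "VASYA": {("EAT", "red apples", False), ("EAT", "green apples", True),
              ("EAT", "yellow apples", False)},
}


def ancestors(image: str) -> list[str]:
    result = []
    while image in IS_A:
        image = IS_A[image]
        result.append(image)
    return result


def what_is_different(a: str, b: str):
    """Return (different_flag, links only in a, links only in b)."""
    if not set(ancestors(a)) & set(ancestors(b)):
        return True, set(), set()  # no common ancestor: flag (different)
    links_a, links_b = LINKS.get(a, set()), LINKS.get(b, set())
    return False, links_a - links_b, links_b - links_a


flag, only_pete, only_vasya = what_is_different("PETE", "VASYA")
print(only_pete)   # {('EAT', 'yellow apples', True)}
print(only_vasya)  # {('EAT', 'yellow apples', False)}
```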

So far it makes no sense to describe every concept: first, I still do not have a complete list.

Second, they can be added gradually. If the principle is clear, I will give a list of several concepts with brief descriptions. If something is unclear, write to me and I will describe it in more detail and draw a diagram.

Concepts are closely related to instructions; more on this in the next article.

List of concepts.

1. WHAT? is a pointer to an object.

1a. WHAT (such) IS IT? The answer to the question WHAT (such)? is the concept -> IT.

1b. WHAT DOES IT REPRESENT (in itself)? The answer is a pointer to the representation of the object.

2. IT is a pointer to an ancestor.

3. WHAT KIND? A set of relations defining the characteristics of the object.

4. HOW IS IT CALLED? (binding to the communicator): a pointer to the label (the name of the image).

5. NOT. A pointer to a negative relationship.

6. EXCEPT. A pointer to a link contrary to the condition. The answer is all connections except this one.

7. WHAT IS SIMILAR? Search for a common ancestor; if none is found, exit with the flag set (different).

If one is found, compare the links and search for identical ones. The identical links found are returned as the answer.

8. WHAT IS DIFFERENT? Search for a common ancestor; if none is found, exit with the flag set (different).

If one is found, compare the links and search for differing ones. The differing links are returned as the answer.

9. WHY? (BECAUSE): a pointer to two links. For example: [WHY?] A lion sits in a cage [BECAUSE] a lion eats humans.

Self-building the link: [WHY?] The elephant does NOT sit in a cage [BECAUSE]?

Logic: the lion sits in a cage because it eats humans. The elephant does not sit in a cage because... we substitute the "variable".

Does an elephant eat humans? No.

lion - eats humans - yes - sits in a cage

elephant - eats humans - no - does NOT sit in a cage.

And if it did eat humans, it would sit in a cage.

10. WHAT? (colloquial): search for the "uppermost" ancestor; a common ancestor or a list of ancestors is returned as the answer.
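Concepts 9 and 10 can be sketched together on toy data. For WHY?, the sketch assumes the known [WHY?] ... [BECAUSE] pair is stored as a mapping from effect to cause, and "substituting the variable" means looking up the same cause for the new subject; for WHAT? (colloquial), it walks the IS-A links upward and returns the ancestors that have no parent. All names and facts are hypothetical.

```python
from __future__ import annotations

# Hypothetical facts: (subject, predicate) -> True (yes) / False (no).
FACTS = {
    ("LION", "eats humans"): True,
    ("LION", "sits in a cage"): True,
    ("ELEPHANT", "eats humans"): False,
    ("ELEPHANT", "sits in a cage"): False,
}
# Known [WHY?] ... [BECAUSE] pair: effect predicate -> cause predicate.
BECAUSE = {"sits in a cage": "eats humans"}


def why(subject: str, effect: str) -> tuple[str, str, bool] | None:
    """WHY? Substitute the subject into the known BECAUSE link.

    The cause explains the effect (or its negation) when both have the
    same yes/no value for this subject.
    """
    cause = BECAUSE.get(effect)
    effect_value = FACTS.get((subject, effect))
    if cause is None or effect_value is None:
        return None
    if FACTS.get((subject, cause)) != effect_value:
        return None
    return subject, cause, effect_value


# Hypothetical IS-A links for concept 10.
IS_A = {
    "PETE": ["ELEPHANT"],
    "ELEPHANT": ["ANIMAL", "MAMMAL"],
    "ANIMAL": ["LIVING ORGANISM"],
    "MAMMAL": ["LIVING ORGANISM"],
}


def topmost_ancestors(image: str) -> list[str]:
    """WHAT? (colloquial): the "uppermost" ancestors, i.e. those with no parent."""
    roots: set[str] = set()
    seen: set[str] = set()
    stack = list(IS_A.get(image, []))
    while stack:
        node = stack.pop()
        if node in seen:
            continue
        seen.add(node)
        parents = IS_A.get(node, [])
        if parents:
            stack.extend(parents)
        else:
            roots.add(node)
    return sorted(roots)


print(why("ELEPHANT", "sits in a cage"))  # ('ELEPHANT', 'eats humans', False)
print(topmost_ancestors("PETE"))          # ['LIVING ORGANISM']
```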


Natural Language Modeling of Thought Processes and Character Modeling
