Lecture

Let's start from scratch. The database holds no information yet, none at all. Our brain is as blank as a baby's.

All we have is a database handler program (also known as the chat bot shell, also known as the IR kernel).

(A small digression: as I warned, nothing here is complicated yet. Information is entered manually in the "internal language", which the shell processes in order to store it in the database, retrieve it from the database, and display it on the screen.)

We enter the first sentence:

"The lion is in a cage."

Since it is entered in the "internal language", it will look like this:

(OBJECT) -> [PROCESS] -> YES -> (OBJECT)

Let me remind you:

This is not speech! This is a thought. So these are not words, but images.

An object goes in parentheses.

A process goes in square brackets.

YES means the connection is positive (the lion really is there).

That is all for now. In other words, we enter the information into the bot in the form in which it will be stored.

(and it may be stored even more simply than we enter it)

All the "bindings", translators and so on come LATER. Right now we need to understand how the information is stored and HOW to work with it.

(LION) -> [IS LOCATED] -> YES -> (CAGE)

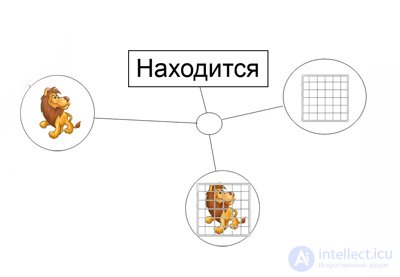

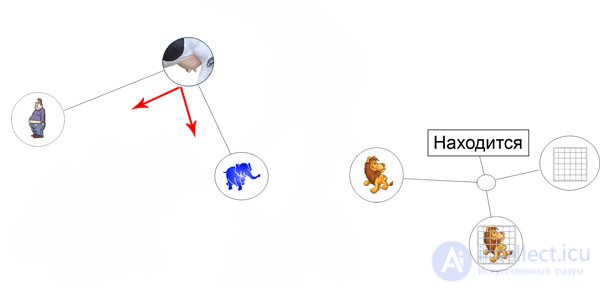

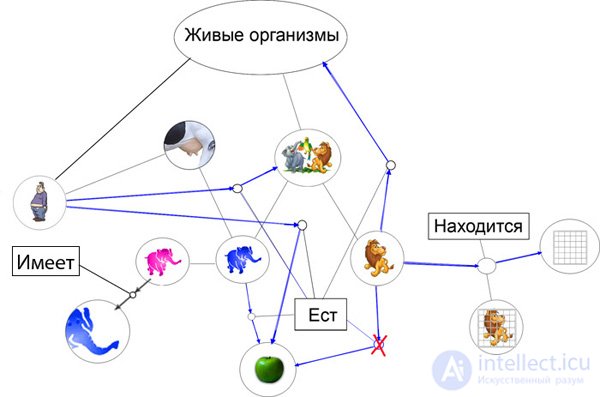

Now the pictures.

The picture shows:

1. The lion

2. The cage

3. The connection through the "node" (that is, an object-process connection)

4. The "mental picture" as a person imagines it. The chat bot does not need this picture yet; it is drawn so that people can follow.

So, we look in the database:

1. (LION)

Do we have the image LION in the database? (The answer is NO, because the database is empty.)

So we put this image into the database.

2. (CAGE)

Do we have the image CAGE in the database? (The answer is NO; so far there is nothing there but LION.)

3. [IS LOCATED]

Do we have the process IS LOCATED? (The answer is NO; we have no processes in the database at all.)

Now we can fill the database.

What structure the database will have is not important right now (I have a few thoughts about it, but more on that later). For now, the simpler the better.

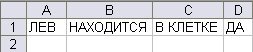

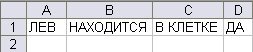

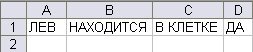

Imagine an Excel-style table with several cells.

In the first we write "LION" (just a word made of letters).

In the second, "IS LOCATED" (just a word made of letters).

In the third, "CAGE".

In the fourth, the sign of the link: yes / no, or 1 / 0.

THAT'S ALL! The connection has been entered into the database.
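The four-cell row described above can be sketched in Python roughly as follows. This is only an illustration: the names `Link`, `database` and `store` are invented here, and the text deliberately leaves the real storage format open.

```python
from typing import NamedTuple

class Link(NamedTuple):
    """One row of the hypothetical table (columns A-D)."""
    subject: str    # column A, e.g. "LION"
    process: str    # column B, e.g. "IS LOCATED"
    obj: str        # column C, e.g. "CAGE"
    positive: bool  # column D: True for YES, False for NO

database: list[Link] = []

def store(subject, process, obj, positive=True):
    """Append the link unless an identical row is already stored."""
    link = Link(subject, process, obj, positive)
    if link not in database:
        database.append(link)
    return link

store("LION", "IS LOCATED", "CAGE")
print(database)
```

A flat list of rows is the simplest possible choice, in line with "the simpler the better".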

Now, how do we work with it?

Enter: Is the lion in a cage?

We recall / learn the "internal language":

(LION) -> [IS LOCATED] ->? -> (CAGE)

In the table we have:

We begin checking:

1. (LION)

Do we have the image LION in the database? (The answer is YES.) So far we do not know what or who a lion is, what it looks like, and so on.

It does not matter. There is an IMAGE, the CONCEPT "LION". So we can already work with it.

2. (CAGE)

Do we have the image CAGE in the database? (The answer is YES.)

3. [IS LOCATED]

Do we have the process IS LOCATED? (The answer is YES.)

4. ?

Do we have a connection between (LION), (CAGE) and [IS LOCATED]? (The answer is YES.)

Is the connection positive? We look at the table:

The answer to the question (LION) -> [IS LOCATED] ->? -> (CAGE)

will be the contents of cell D.

What kind of questions can we answer?

Here is a list:

1. Who is in the cage?

2. Where is the lion?

3. What does the lion do in the cage?

4. Is the lion in the cage or not?

1. (?) -> [IS LOCATED] -> YES -> (CAGE)

2. (LION) -> [IS LOCATED] -> YES -> (?)

3. (LION) -> [?] -> YES -> (CAGE)

4. (LION) -> [IS LOCATED] ->? -> (CAGE)

We look at the database:

Answers:

1. The contents of cell A

2. The contents of cell C

3. The contents of cell B

4. The contents of cell D
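The four questions above all have the same shape: three columns are known and the fourth is the "?". A minimal sketch of such a lookup, assuming the table is a list of 4-tuples (columns A to D) and that `None` stands for "?"; the function name `ask` is made up for the example:

```python
TABLE = [("LION", "IS LOCATED", "CAGE", "YES")]  # columns A, B, C, D

def ask(a=None, b=None, c=None, d=None):
    """Return the values of the unknown (None) columns for every matching row."""
    pattern = (a, b, c, d)
    answers = []
    for row in TABLE:
        # A row matches when every known column equals the stored cell.
        if all(p is None or p == cell for p, cell in zip(pattern, row)):
            answers.append(tuple(cell for p, cell in zip(pattern, row) if p is None))
    return answers

print(ask(None, "IS LOCATED", "CAGE", "YES"))   # 1. cell A -> [('LION',)]
print(ask("LION", "IS LOCATED", None, "YES"))   # 2. cell C -> [('CAGE',)]
print(ask("LION", None, "CAGE", "YES"))         # 3. cell B -> [('IS LOCATED',)]
print(ask("LION", "IS LOCATED", "CAGE", None))  # 4. cell D -> [('YES',)]
```

An empty result list corresponds to "I DO NOT KNOW".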

Let's try to add data to our "knowledge base":

We enter: "Man is a mammal."

This is the ordinary "concept is a concept" connection, a "top-level" connection. Its meaning is conveyed by the words "is", "is a", "equals". (A = B)

It is extremely easy to work with, since these "general concepts" are not tied to anything specific. However, unlike in the IR (chat bot), in humans a "concept" is formed "bottom-up": after a large number of "specific objects" have been analysed, their "common characteristics" are found and the objects are combined into a group, the "concept". We, on receiving an unknown object, immediately create a group (concept) in order to simplify the system. That is, "top-down".

(MAN) <- YES -> (MAMMAL)

We look at the schema:

Not much has changed, right?

Let's try to "work" with this data anyway. What questions can we answer?

Is man a mammal?

(MAN) <-? -> (MAMMAL)

What is man?

(MAN) <- YES -> (?)

What mammals do you know?

(?) <- YES -> (MAMMAL)

Do you know any other kinds of mammals besides MAN? (Answer: NO)

(?) / (MAN) <-? -> (MAMMAL)

What other kinds of mammals besides MAN do you know? (Answer: NONE)

(?) / (MAN) <- YES -> (MAMMAL)

We enter the line: "The elephant is a mammal."

(ELEPHANT) <- YES -> (MAMMAL)

Everything is analogous to the example with man, except:

Do you know any other kinds of mammals besides MAN? (Answer: YES)

(?) / (MAN) <-? -> (MAMMAL)

What other kinds of mammals besides MAN do you know? (Answer: ELEPHANT)

(?) / (MAN) <- YES -> (MAMMAL)

What mammals do you know?

(?) <- YES -> (MAMMAL)

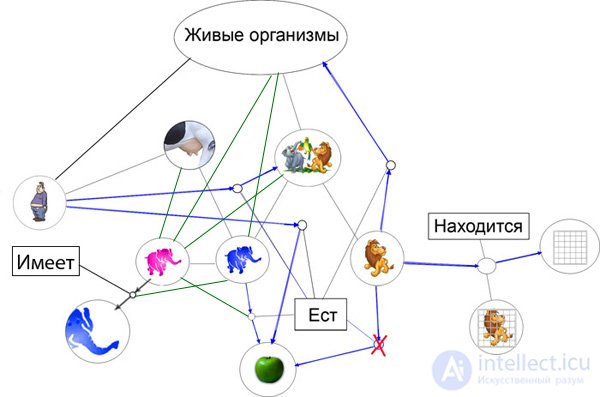

We look at the diagram:

We cannot give a single answer to this question, because we have a fork!

That is, there is more than one answer. So we give the list as the answer.

(?) <- YES -> (MAMMAL)

(ELEPHANT) / (MAN) <- YES -> (MAMMAL)

(For an IR, of course, it would be more logical to answer "I know SEVERAL mammals" and to give the list only after a follow-up request ("List them"), but I think this is a trifle that can be ignored for now.)
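The "(?) <- YES -> (MAMMAL)" and "(?) / (MAN)" questions can be sketched as a filter over the stored "is a" links. This is an illustrative sketch only; the pair list `IS_A` and the function `members` are assumptions, not part of the original shell.

```python
# Each pair reads "(left) <- YES -> (right)", i.e. "left is a right".
IS_A = [("MAN", "MAMMAL"), ("ELEPHANT", "MAMMAL")]

def members(concept, excluding=()):
    """List every image linked to `concept`, skipping the excluded ones."""
    return [left for left, right in IS_A
            if right == concept and left not in excluding]

print(members("MAMMAL"))                      # the fork: more than one answer
print(members("MAMMAL", excluding=("MAN",)))  # mammals other than MAN
```

Returning a list even for a single answer keeps the fork case and the simple case uniform.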

Now for the most important thing, the one I have been talking about from the very beginning: the connection and the path.

In the previous diagram we first ran into an ambiguous answer. That is, we have a "fork in the road". So STOP. Where do we go? How do we choose the direction of the path? Which answer is correct?

In the example above both answers are correct. But this is not yet a path... A path is a long chain of thought in search of an answer. The longer the chain, the harder the path, and the more forks we will meet along it.

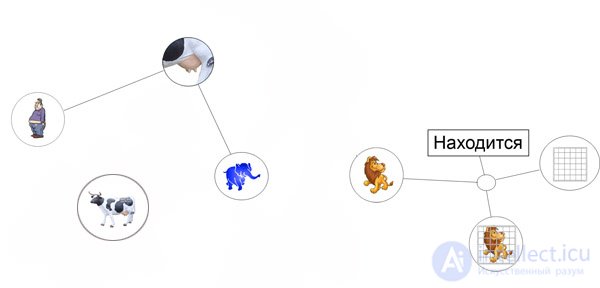

Let's add, say, a cow to our diagram. Just a cow object:

(COW)

This is the same situation as with the lion. We have no idea what a cow is, what it does, what it looks like, and so on.

The most important question for us: can we work with it?

We look at the diagram:

We see no "connecting lines" either to the cow or from the cow. What use is a gas station on an uninhabited island? How would you get there by car? The answer is NO.

Without a "path", getting to the object COW is simply impossible. The object exists, but we can NOT use it: it has no connection to the "outside world" (that is, to the other data) and is simply isolated inside our database.

Let's forget about the cow and move on.

We enter the question: "Is man a living organism?"

(MAN) <-? -> (LIVING ORGANISM)

This is a question, not a statement!

We look at the database. Do we have the concept "LIVING ORGANISM"? The answer is NO.

Accordingly, we cannot answer this question. (Answer: I DO NOT KNOW)

And... we act in the same way as with COW: we create an "isolated cell", put our new concept there, and place task No. 1 in the "buffer of unsolved problems": find the answer to the question (MAN) <-? -> (LIVING ORGANISM). (More on this a bit later.)
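A rough sketch of this step, under the assumption that concepts are kept in a set, direct "is a" links in a set of pairs, and the "buffer of unsolved problems" in a plain list. All names (`concepts`, `is_a`, `unsolved`, `ask_is_a`, `tell_is_a`) are hypothetical, and only direct links are checked here.

```python
concepts = {"LION", "CAGE", "MAN", "MAMMAL", "ELEPHANT", "COW"}  # COW is isolated
is_a = {("MAN", "MAMMAL"), ("ELEPHANT", "MAMMAL")}
unsolved = []  # the "buffer of unsolved problems"

def ask_is_a(a, b):
    """Answer a direct question; unknown concepts become isolated cells."""
    concepts.update((a, b))
    if (a, b) in is_a:
        return "YES"
    if (a, b) not in unsolved:
        unsolved.append((a, b))  # remember the open question
    return "I DO NOT KNOW"

def tell_is_a(a, b):
    """Store a statement and close the matching open question, if any."""
    concepts.update((a, b))
    is_a.add((a, b))
    if (a, b) in unsolved:
        unsolved.remove((a, b))

print(ask_is_a("MAN", "LIVING ORGANISM"))  # no such link yet
print(unsolved)                            # task No. 1 is waiting
tell_is_a("MAN", "LIVING ORGANISM")
print(ask_is_a("MAN", "LIVING ORGANISM"), unsolved)
```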

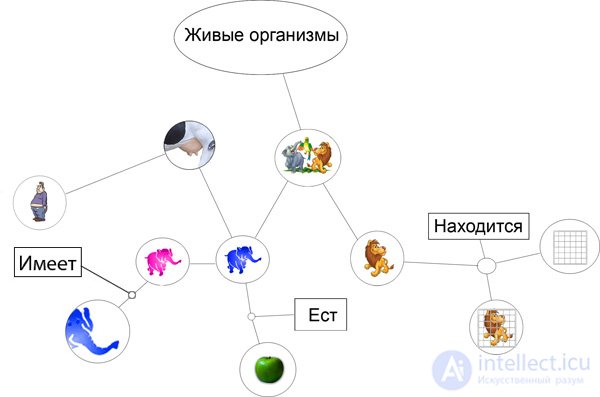

We will not torment the bot :))) We give it the answer and at the same time fill up the database by entering several phrases at once:

"The elephant is an animal."

"Animals are living organisms."

"Man is a living organism."

"The lion is an animal."

(ELEPHANT) <- YES -> (ANIMAL)

(LION) <- YES -> (ANIMAL)

(ANIMAL) <- YES -> (LIVING ORGANISM)

(MAN) <- YES -> (LIVING ORGANISM)

We look at the diagram. What did we get?

This is called a bundle: a set of links related to a common theme.

We try to work with the scheme:

(MAN) <-? -> (LIVING ORGANISM) Is man a living organism?

(MAN) <-? -> (MAMMAL) Is man a mammal?

No explanation is needed here. We just check whether the connection is positive.

(Since we have no negative connections in the database yet, the answer is positive.)

(MAN) <-? -> (ANIMAL) Is man an animal?

There is no "man-animal" connection. There is no negative connection "man is not an animal" either, so the answer is "I DO NOT KNOW". Similarly for the lion:

(LION) <-? -> (MAMMAL) Is a lion a mammal? I DO NOT KNOW

And this is already familiar to us:

(ELEPHANT) <-? -> (MAMMAL) Is an elephant a mammal? YES

(LION) <-? -> (ANIMAL) Is a lion an animal? YES

(ELEPHANT) <-? -> (ANIMAL) Is an elephant an animal? YES

Now a little harder:

(ELEPHANT) <-? -> (LIVING ORGANISM) Is an elephant a living organism?

(LION) <-? -> (LIVING ORGANISM) Is a lion a living organism?

We have no "elephant - living organism" or "lion - living organism" links.

So a direct check finds no connection. But we do have a connection through another connection.

That is, the solution goes in two stages:

The elephant is an animal; animals are living organisms.

(A = B, B = C, therefore A = C)

The elephant is a living organism, because the elephant is an animal, and animals are living organisms. (see "Why?")
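The two-stage solution (A = B, B = C, therefore A = C) amounts to searching for a path along "is a" links. A minimal sketch, assuming the links are kept as a dictionary from an image to its more general concepts; `IS_A`, `find_path` and `is_a` are illustrative names, and breadth-first search is simply one convenient way to walk the links.

```python
from collections import deque

# "X is a Y" links entered so far (child -> list of more general concepts).
IS_A = {
    "ELEPHANT": ["MAMMAL", "ANIMAL"],
    "LION": ["ANIMAL"],
    "ANIMAL": ["LIVING ORGANISM"],
    "MAN": ["MAMMAL", "LIVING ORGANISM"],
}

def find_path(start, goal):
    """Breadth-first search along "is a" links; returns the chain or None."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for parent in IS_A.get(path[-1], []):
            if parent not in seen:
                seen.add(parent)
                queue.append(path + [parent])
    return None

def is_a(a, b):
    """YES with the chain of reasoning, or I DO NOT KNOW if no path exists."""
    path = find_path(a, b)
    if path is None:
        return "I DO NOT KNOW"
    return "YES, because " + " -> ".join(path)

print(is_a("ELEPHANT", "LIVING ORGANISM"))
print(is_a("LION", "MAMMAL"))
```

The returned chain doubles as the answer to a follow-up "Why?".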

(LION) <- YES -> (?) What is a lion? AN ANIMAL

This is a request to clarify the object. The answer is the object that the link points to.

(MAN) <- YES -> (?) What is man?

We have a fork: there are several connections in the database, so there are several options.

For example, we can give the entire list of links. But there can be VERY many such connections.

The criterion for such a response may be the number of links (for example, if there are no more than three links, give the list in reply; otherwise ask back, "In what sense?").

Or we can return the first link and, if the question is repeated ("And what else is man?"), return the second one, and so on. Or we can give no answer at all and ask back: "I know several answers to this question, specify what exactly interests you?"
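One of the fork policies listed above, the "no more than three links" rule, might look like this. The function name `answer_fork` and the `max_listed` parameter are assumptions made for the sketch; the threshold of three comes from the example in the text.

```python
def answer_fork(candidates, max_listed=3):
    """Pick a reply for a question that may have several valid answers."""
    if not candidates:
        return "I DO NOT KNOW"
    if len(candidates) <= max_listed:
        return ", ".join(candidates)  # short fork: just list everything
    # Long fork: ask the interlocutor to narrow the question down.
    return ("I know several answers to this question, "
            "specify what exactly interests you?")

print(answer_fork(["MAMMAL", "LIVING ORGANISM"]))
print(answer_fork(["A", "B", "C", "D"]))
```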

(CAGE) <-? -> (MAMMAL) I DO NOT KNOW

(CAGE) <-? -> (LIVING ORGANISM) I DO NOT KNOW

(CAGE) <-? -> (ANIMAL) I DO NOT KNOW

(CAGE) <-? -> (MAN) I DO NOT KNOW

(CAGE) <-? -> (ELEPHANT) I DO NOT KNOW

A lack of connections always gives the answer I DO NOT KNOW. The answer YES or NO is given only if such a connection exists (positive or negative).

(CAGE) <-? -> (LION) I DO NOT KNOW

Let me remind you once again that the connection "lion-animal" is a simplified notation.

The full notation is: a lion IS an animal. A direct object-object relationship does not exist in nature at all. So the lion and the animal are connected through the concept "is a", whereas the lion and the cage are connected through the concept "is located in". These are different concepts and different paths.

Is a lion a cage? I DO NOT KNOW

Is a cage a lion? I DO NOT KNOW

Since we have no such connections, we have nothing to draw conclusions from yet (too few connections).

We will return to these questions later.

In the meantime, back to the very beginning:

(LION) -> [IS LOCATED] ->? -> (CAGE) Is the lion in the cage? YES

(CAGE) -> [IS LOCATED] ->? -> (LION) Is the cage in the lion? I DO NOT KNOW

This connection is unidirectional. We know that the lion is in the cage, but we know nothing about the reverse.

(ELEPHANT) -> [IS LOCATED] ->? -> (CAGE) I DO NOT KNOW

(MAN) -> [IS LOCATED] ->? -> (CAGE) I DO NOT KNOW

This is already familiar to us.

Now the questions are more complicated:

(?) <- YES -> (ANIMAL)

Do you know any animals?

We know several animals (see the previous example). We give the list if there are only a few of them (up to three, as above); otherwise we say "I know a LOT of animals" and list them only if asked.

(?) <- YES -> (LIVING ORGANISM)

Do you know any living organisms?

This is the already familiar fork; the answer is: man, animals.

The question can also be written right away like this:

(?) / (?) <- YES -> (LIVING ORGANISM)

It will read as: "List all the living organisms that you know."

For "debugging" and for reflection:

1. All connections supplied at the input are "horizontal".

2. The connection LIVING ORGANISM - ANIMAL - ELEPHANT is "vertical".

The "higher" we go, the more general the concepts become; the "lower", the more specific.

3. Links of the "upper" concepts also hold for the "lower" ones. But "lower" links do NOT apply to the upper ones, because they are special cases. (What do you call it? Child processes, ancestors / descendants: inheritance.)

Add another process:

The elephant eats apples.

(ELEPHANT) -> [EAT] -> YES -> (APPLES)

As in the previous examples, adding a new process that has no other connections does not let us answer questions that require those connections.

For example:

Does a man eat apples?

Does a lion eat apples?

SYNONYM IMAGES (not to be confused with synonymous words)

Now we just need an abstract example of a synonym for an image. Let the synonym of the image ELEPHANT be the same word spelled in a different alphabet, SLON, so that the two images are easy to tell apart.

(This has nothing to do with language; it is just easier to follow this way.)

Enter the data:

ELEPHANT is SLON.

(ELEPHANT) <- YES -> (SLON)

This is a bidirectional connection. Put simply: if A = B, then B = A.

SLON has a trunk.

(SLON) -> [HAS] -> YES -> (TRUNK)

What do we have now? We create TRUNK and draw the connection. We create SLON and draw the connection.

The ELEPHANT - SLON connection is horizontal! It is equivalent to the statement ELEPHANT = SLON.

So all the links of ELEPHANT also hold for SLON.

SELF-BUILDING CONNECTIONS

Recall our data about the elephant:

(ELEPHANT) <- YES -> (ANIMAL)

The elephant is an animal.

(ELEPHANT) <- YES -> (LIVING ORGANISM)

The elephant is a living organism.

(ELEPHANT) <- YES -> (MAMMAL)

The elephant is a mammal.

(ELEPHANT) -> [EAT] -> YES -> (APPLES)

The elephant eats apples.

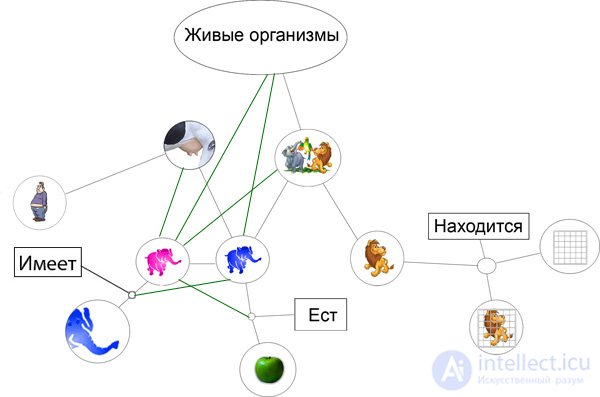

But since ELEPHANT = SLON

(ELEPHANT) <- YES -> (SLON)

we can immediately substitute the synonym image SLON for ELEPHANT and get the connections we need:

(SLON) <- YES -> (ANIMAL)

SLON is an animal.

(SLON) <- YES -> (LIVING ORGANISM)

SLON is a living organism.

(SLON) <- YES -> (MAMMAL)

SLON is a mammal.

(SLON) -> [EAT] -> YES -> (APPLES)

And besides, to the question:

Does an ELEPHANT have a trunk?

(ELEPHANT) -> [HAS] ->? -> (TRUNK)

we can answer YES (since ELEPHANT = SLON, and SLON has a trunk).

(ELEPHANT) -> [HAS] -> YES -> (TRUNK)

All these links are highlighted in green in the figure.
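The substitution of synonym images can be sketched as follows. To keep the two spellings of the elephant image distinct, the second one is written here as `SLON`; that label, like the names `SYNONYMS`, `FACTS`, `same_image` and `holds`, is an assumption of this sketch. Instead of copying every link to the synonym, the lookup simply tries every name of the same image.

```python
SYNONYMS = [("ELEPHANT", "SLON")]  # bidirectional: A = B, so B = A
FACTS = {
    ("ELEPHANT", "IS A", "ANIMAL"),
    ("ELEPHANT", "EAT", "APPLES"),
    ("SLON", "HAS", "TRUNK"),
}

def same_image(name):
    """Collect every name of the same image, following synonym links both ways."""
    group = {name}
    changed = True
    while changed:
        changed = False
        for a, b in SYNONYMS:
            if (a in group) != (b in group):  # exactly one side is known so far
                group |= {a, b}
                changed = True
    return group

def holds(subject, process, obj):
    """A link holds if it is stored for any synonym of the subject and object."""
    return any((s, process, o) in FACTS
               for s in same_image(subject)
               for o in same_image(obj))

print(holds("ELEPHANT", "HAS", "TRUNK"))  # True, via ELEPHANT = SLON
print(holds("LION", "HAS", "TRUNK"))      # False: no connection at all
```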

One more question:

Does a lion have a trunk?

Again we look at the tree: LION and ELEPHANT (together with its synonym image SLON) are in different branches. So we cannot answer this question.

The answer is either NO or I DO NOT KNOW (there is no connection).

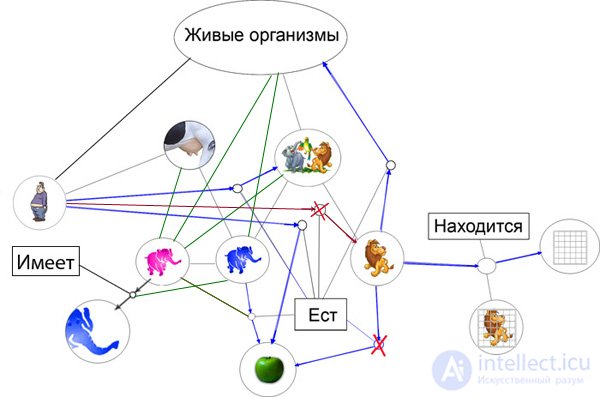

NEGATIVE CONNECTIONS

We enter: The lion does not eat apples.

(LION) -> [EAT] -> NO -> (APPLES)

The negative branch of knowledge itself is not stored (but a "negative zone" label is stored).

This means that it is a dead end. The end of the road.

That is, for the question "Does the lion eat red or green apples?" no answer will be searched for once "The lion does not eat apples" is encountered on the way. The whole path search stops there.

No matter what the question was, the answer will be the negative connection: "The lion does NOT eat apples."
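The "dead end" behaviour can be sketched like this, assuming each link is stored with a boolean sign. The names `LINKS` and `ask` and the `detail` parameter (standing for a refinement such as "red or green") are invented for the example.

```python
LINKS = {
    ("LION", "EAT", "APPLES"): False,     # negative link: a dead end
    ("ELEPHANT", "EAT", "APPLES"): True,
}

def ask(subject, process, obj, detail=None):
    """Answer a question; a negative link stops the search immediately."""
    sign = LINKS.get((subject, process, obj))
    if sign is None:
        return "I DO NOT KNOW"
    if sign is False:
        # Dead end: the detail of the question is never even examined.
        return f"{subject} does NOT {process} {obj}"
    if detail is None:
        return "YES"
    # Positive link, but nothing is stored about the requested detail.
    return "I DO NOT KNOW"

print(ask("LION", "EAT", "APPLES", detail="RED OR GREEN"))
print(ask("ELEPHANT", "EAT", "APPLES"))
```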

So let's continue.

We will add new information, but we will not add new objects / processes. We are mainly interested in the principle of the formation of new connections. Add:

Man eats apples.

(MAN) -> [EAT] -> YES -> (APPLES)

Man eats animals.

(MAN) -> [EAT] -> YES -> (ANIMALS)

The lion eats all living organisms.

(LION) -> [EAT] -> YES -> (LIVING ORGANISMS)

We establish the necessary links. (In the picture these connections are highlighted in blue so that the connection types are not confused; the arrows show the direction of the connections.) The picture takes this form:

And taking into account the "self-built connections", this:

And now we carefully look at the picture and try to answer these questions:

1. Does a lion eat apples? (the switch is in the NO position)

2. Does an apple eat a man?

3. Does the lion eat a man? Does the lion eat an elephant?

4. Does the lion eat a lion?

5. Does the lion eat mammals?

6. Does a man eat an elephant?

We take into account the presence or absence of connections and their direction (the arrows).

If everything is clear, we proceed to the next part, which is more complicated.

This whole scheme does not go beyond traditional AI (recall the Chinese room). It is not enough to create a Mind.

So let's consider slightly more complex examples:

LOGIC

7. Do animals eat apples?

Here the chain will be a little longer.

1. We know a few animals: ELEPHANT (which also has the synonym image SLON) and LION.

2. The elephant eats apples.

3. The lion does not eat apples.

We end up in a delicate situation: both YES and NO, SIMULTANEOUSLY. A fork.

Mutually exclusive links. Which answer do we give?

Therefore, the answer must be double:

YES, SOME animals eat apples. And some animals do NOT eat apples.

And after a clarifying question ("Which animals exactly eat apples?"), we give the list of apple-eating animals.
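The "double answer" can be sketched by checking every known member of the group separately and then summarising. The data layout and the name `group_eats` are assumptions; members with no stored link are treated as unknown, which is why a partial result is reported as SOME.

```python
IS_A = [("ELEPHANT", "ANIMAL"), ("LION", "ANIMAL")]
EATS = {("ELEPHANT", "APPLES"): True, ("LION", "APPLES"): False}

def group_eats(group, food):
    """Summarise what the known members of a group do with a given food."""
    members = [m for m, g in IS_A if g == group]
    yes = [m for m in members if EATS.get((m, food)) is True]
    no = [m for m in members if EATS.get((m, food)) is False]
    if yes and no:
        verdict = "YES, SOME do. And some do NOT."
    elif yes:
        verdict = "YES" if len(yes) == len(members) else "YES, SOME do."
    elif no:
        verdict = "NO" if len(no) == len(members) else "SOME do NOT."
    else:
        verdict = "I DO NOT KNOW"
    # The two lists are kept for the clarifying question "Which ones exactly?"
    return verdict, yes, no

print(group_eats("ANIMAL", "APPLES"))
```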

8. Do animals eat man?

The principle is the same:

Does the elephant eat man? There is no such connection. UNKNOWN.

Does the lion eat man? There is no such connection. UNKNOWN.

We need to build a path:

What do we know?

The elephant eats apples.

But ELEPHANT also has the synonym image SLON!

Does SLON eat man?

There is no such connection. UNKNOWN.

The lion eats all living organisms.

Is man an apple? NO. This branch is dropped.

Is man a living organism? YES.

So:

The lion eats man.

Summing up:

We know several animals:

LION, ELEPHANT (aka SLON)

Does ELEPHANT (aka SLON) eat man? There is no such connection. UNKNOWN.

The lion eats man.

Conclusion:

YES, SOME animals eat man.

To the clarifying question "WHICH ones?" the answer is LION.

CONTROLLER of correct / wrong thoughts

Any data received at the input of the system is checked (compared with the data ALREADY AVAILABLE).

Whatever is inside the database carries a reliability coefficient for its data source (for example, an encyclopedia is a completely reliable source, a physics textbook is likewise trustworthy, but a troll interlocutor does not inspire such trust).

So, new information arrives at the input:

A lion sits in a cage and eats an apple.

We break it into two separate thoughts:

The lion sits in a cage.

The lion is eating an apple.

We look at the diagram: the lion sits in a cage (information already known to us) and eats an apple.

STOP! The lion does not eat apples. CONCLUSION:

WRONG INFORMATION: The lion does not eat apples.
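A minimal sketch of such a controller, assuming the sentence has already been split into separate thoughts and that each stored link carries a boolean sign. The names `KNOWN`, `check` and `check_sentence` are hypothetical, and the reliability coefficient of the source is left out for brevity.

```python
KNOWN = {
    ("LION", "IS LOCATED", "CAGE"): True,
    ("LION", "EAT", "APPLES"): False,
}

def check(subject, process, obj, positive=True):
    """Compare one incoming thought with what is already stored."""
    stored = KNOWN.get((subject, process, obj))
    if stored is None:
        return "NEW"
    return "ALREADY KNOWN" if stored == positive else "WRONG INFORMATION"

def check_sentence(thoughts):
    """Check every thought of a sentence independently."""
    return [check(*thought) for thought in thoughts]

# "A lion sits in a cage and eats an apple", split into two thoughts.
report = check_sentence([
    ("LION", "IS LOCATED", "CAGE"),
    ("LION", "EAT", "APPLES"),
])
print(report)
```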

Paradoxes

We enter the phrase:

Man does not eat lions. (we build the necessary connections)

We look carefully at the diagram:

We have a paradox:

Man eats animals.

The lion is an animal.

Man does not eat lions.

In fact, there is nothing terrible here. This is just an EXCEPTION.

Man eats animals. (but) Man does not eat lions.

An EXCEPTION is an edit of the database:

changing erroneous / ambiguous / unclear knowledge when new information is received.

Fuzzy logic allows any information to be corrected. It is assumed from the start that the data is incomplete / inaccurate / subject to change. The main point:

> Man eats animals.

> Man does not eat lions.

remains, but when new information is added:

> The lion is edible.

a new fork appears in the database (a clarification, read: an EXCEPTION):

> Man (usually) does not eat lions (but)

> The lion is edible.

Therefore, in exceptional cases, the lion can still be eaten.
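One possible way to let an exception coexist with a general rule is to look for the most specific stored link first and climb to more general concepts only if nothing is found. The sketch below is an assumption about how this could work, not the author's mechanism; `IS_A`, `EATS` and `eats` are illustrative names, and on a tie within one level the negative link wins.

```python
IS_A = {
    "LION": ["ANIMAL"],
    "ELEPHANT": ["ANIMAL"],
    "ANIMAL": ["LIVING ORGANISM"],
}
EATS = {
    ("MAN", "ANIMAL"): True,   # general rule: man eats animals
    ("MAN", "LION"): False,    # exception: man does not eat lions
}

def eats(eater, food):
    """Check the most specific link first, then climb to more general concepts."""
    level, seen = [food], {food}
    while level:
        signs = [EATS[(eater, f)] for f in level if (eater, f) in EATS]
        if signs:
            # Assumption: a negative link on the same level wins (dead end).
            return "YES" if all(signs) else "NO"
        parents = []
        for f in level:
            for p in IS_A.get(f, []):
                if p not in seen:
                    seen.add(p)
                    parents.append(p)
        level = parents
    return "I DO NOT KNOW"

print(eats("MAN", "LION"))      # the exception is found first
print(eats("MAN", "ELEPHANT"))  # falls back to the general rule
```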

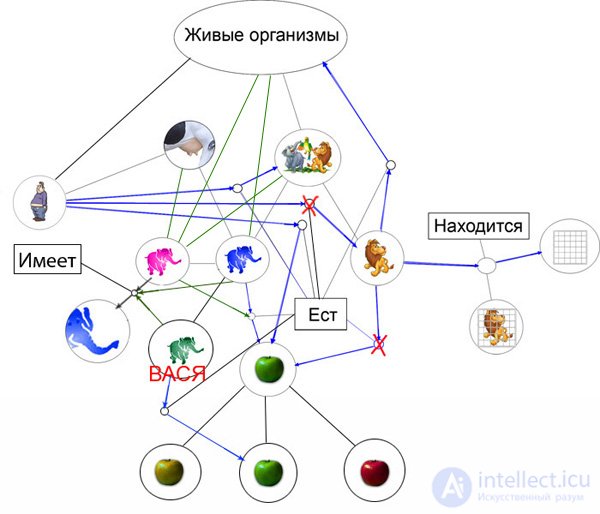

General and specific

Add to our model:

Elephant Vasya eats [only] GREEN apples.

(ELEPHANT) -> [EAT] -> YES -> (APPLES)

| | |

(Vasya) [but only] -> | green |

First of all, let's go back here: http://www.gamedev.ru/community/ir/articles/?id=4809

and look once more at the diagram of "image formation".

So, the "general" becomes "specific" only after "clarification".

APPLE is just a class of objects. A concept. A general notion.

"Green apple" is a subclass (of the class APPLE) whose color parameter is green. This is already a more precise definition, but still "too" general a notion.

But "that very green, sour apple that I picked at my neighbor's dacha..." is already a specific object.

Therefore, additional bindings (clarifications) are used to indicate the specificity (precision) of an object.

In this example:

The elephant has the name VASYA (this makes the object specific: "just" an ELEPHANT becomes the "specific" ELEPHANT-VASYA).

(To all Vasyas: please don't take offense, I simply don't know what names elephants are given, and Vasya Pupkin is a generic placeholder name.)

So, all the (general) properties of the class ELEPHANT also belong to the (specific) ELEPHANT-VASYA.

However, some of them (clarifications / exceptions) may differ. In this case ELEPHANT-VASYA eats ONLY green apples (which is what distinguishes him from other ELEPHANTs), but (just like other ELEPHANTs, under either of their synonym images) ELEPHANT-VASYA has a trunk, is an animal / mammal, can be eaten by a lion, and so on.

I want to draw attention to two points.

1. If we additionally enter characteristics of an apple (sour / sweet / tasteless, etc.), this will not affect ELEPHANT-VASYA in any way, since what exactly he eats is rigidly specified. But if we enter data "higher up" (elephants eat only sweet apples), then ELEPHANT-VASYA will accordingly "refuse" apples that are green but sour. And likewise, if we enter the data "Elephant VASYA eats only green and sweet apples", this will not affect the choice of the other elephants in any way.

2. A lack of information is the normal "state" of the system.

What would happen if we had not entered "Apples can be yellow, green and red" but had immediately entered "Elephant VASYA eats only green apples"? Nothing terrible would happen. We would simply get a "fork" with "undefined parameters": "Elephant VASYA eats only green apples", but WHAT kinds of apples there are we do not know.

So, to answer the question "Will Vasya eat a red apple?" we need to find out what RED is.

Without the "concept" COLOR we cannot answer this question.
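The relationship between the general ELEPHANT and the specific ELEPHANT-VASYA can be sketched as a lookup that starts at the specific object and climbs to its class. This is a simplified illustration: the dictionaries `CLASS_OF` and `PROPERTIES` and the function `lookup` are assumptions, and each property holds a single value.

```python
CLASS_OF = {"ELEPHANT-VASYA": "ELEPHANT"}  # specific object -> its class
PROPERTIES = {
    "ELEPHANT": {"has": "TRUNK", "eats": "APPLES"},
    "ELEPHANT-VASYA": {"eats": "GREEN APPLES"},  # the clarification
}

def lookup(name, prop):
    """Return the most specific value of a property, or None if unknown."""
    while name is not None:
        if prop in PROPERTIES.get(name, {}):
            return PROPERTIES[name][prop]
        name = CLASS_OF.get(name)  # climb from the specific to the general
    return None

print(lookup("ELEPHANT-VASYA", "eats"))  # his own clarification wins
print(lookup("ELEPHANT-VASYA", "has"))   # inherited from the class ELEPHANT
print(lookup("ELEPHANT", "eats"))        # other elephants are unaffected
```

A `None` result corresponds to the normal "lack of information" state described in point 2.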

