Lecture

This is the final part of the material on the means of checking the diagnostic qualities of psychological tests.

...

relation to the given construct, but also to any others. Thus, if the results of an intelligence test are entirely unaffected by measures of emotional states, the test being validated examines "pure" properties of intelligence, relatively free from the influence of emotional and volitional factors. The reference test and the test being validated can be compared by means of convergent validation (checking the degree of direct or inverse relationship between the test results) and discriminant validation (establishing the absence of a relationship). Because this approach confirms or rejects hypotheses about the nature of the relationship between the test being validated and the reference test, construct validity is sometimes referred to in psychodiagnostics as "assumed validity".
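As a rough illustration of the convergent/discriminant logic, the sketch below correlates a test being validated with a reference intelligence test and with an emotional-state measure that should be unrelated to it. The data and the cut-offs `strong=0.5` and `weak=0.2` are invented for illustration; the lecture does not prescribe numeric thresholds.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length score lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def classify_link(r, strong=0.5, weak=0.2):
    """Label a correlation using hypothetical cut-offs (not from the lecture)."""
    if abs(r) >= strong:
        return "convergent"    # clear direct or inverse relationship
    if abs(r) <= weak:
        return "discriminant"  # practically no relationship
    return "unclear"


# Invented scores for five subjects
test_scores = [10, 12, 14, 16, 18]    # test being validated
reference_iq = [11, 13, 13, 17, 19]   # reference intelligence test
emotional_state = [5, 3, 4, 3, 5]     # measure that should be unrelated

r_conv = pearson_r(test_scores, reference_iq)
r_disc = pearson_r(test_scores, emotional_state)
print(classify_link(r_conv), classify_link(r_disc))  # prints: convergent discriminant
```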

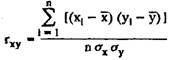

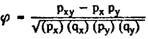

To express the degree of validity quantitatively, practitioners most often use various forms of correlational analysis of the relationship between individual test scores and the validation criterion (or of the relationship between the results of the test being validated and another method used as a reference). As a rule, the distribution of test scores in a representative validation sample approaches the normal distribution. If the test and criterion scores are continuous, the Pearson product-moment correlation coefficient can be used:

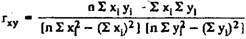

where $x_i$, $y_i$ are the compared quantitative traits; $n$ is the number of compared observations; $\sigma_x$, $\sigma_y$ are the standard deviations of the compared series. A more convenient formula for calculating $r_{xy}$ is:

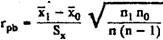
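The two Pearson formulas appear only as images, so the sketch below assumes the usual pair: the definitional form $r_{xy} = \sum (x_i - \bar{x})(y_i - \bar{y}) / (n\,\sigma_x \sigma_y)$, with $\sigma$ computed by dividing by $n$, and the raw-score computational form. Both should give the same value; the data are invented.

```python
import math


def pearson_definitional(x, y):
    """r_xy = sum((x_i - mean_x)(y_i - mean_y)) / (n * sigma_x * sigma_y)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sigma_x = math.sqrt(sum((a - mx) ** 2 for a in x) / n)  # divisor n
    sigma_y = math.sqrt(sum((b - my) ** 2 for b in y) / n)
    cov_sum = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov_sum / (n * sigma_x * sigma_y)


def pearson_computational(x, y):
    """Raw-score form: works from sums and sums of squares, no deviations needed."""
    n = len(x)
    sx, sy = sum(x), sum(y)
    sxx = sum(a * a for a in x)
    syy = sum(b * b for b in y)
    sxy = sum(a * b for a, b in zip(x, y))
    return (n * sxy - sx * sy) / math.sqrt((n * sxx - sx ** 2) * (n * syy - sy ** 2))


test_scores = [2, 4, 5, 7, 9]
criterion_scores = [1, 3, 4, 8, 9]
print(round(pearson_definitional(test_scores, criterion_scores), 4),
      round(pearson_computational(test_scores, criterion_scores), 4))
```

The computational form was convenient for hand calculation because it needs only running sums; numerically both give the same coefficient.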

Depending on the measurement scale in which the test and criterion indicators are expressed, one or another correlational technique is used. If one of the series is expressed on a dichotomous scale and the other on an interval or ordinal scale, the point-biserial correlation coefficient is used:

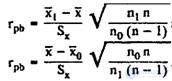

where $\bar{x}_1$ is the mean of X for objects with a value of one on Y; $\bar{x}_0$ is the mean of X for objects with a value of zero on Y; $S_x$ is the standard deviation of all values of X; $n_1$ is the number of objects with a value of one on Y; $n_0$ is the number of objects with a value of zero on Y, so that $n = n_1 + n_0$. The equations for calculating $r_{pb}$ are an algebraic simplification of the formula for $r_{xy}$ for the case where Y is a dichotomous variable. A number of other equivalent expressions suitable for practical use can be given, for example:

$$
r_{pb} = \frac{\bar{x}_1 - \bar{x}}{S_x}\sqrt{\frac{n_1}{n_0}},
$$

where $\bar{x}$ is the overall mean of X (this form assumes $S_x$ is computed with divisor $n$).
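The main point-biserial formula is shown only as an image. The sketch below assumes two standard equivalent forms, $r_{pb} = \frac{\bar{x}_1 - \bar{x}_0}{S_x}\cdot\frac{\sqrt{n_1 n_0}}{n}$ and $r_{pb} = \frac{\bar{x}_1 - \bar{x}}{S_x}\sqrt{n_1/n_0}$, with $S_x$ computed by dividing by $n$, and cross-checks them against an ordinary Pearson correlation with Y coded 1/0. The data are invented.

```python
import math


def point_biserial(x, y):
    """r_pb for a continuous x and a dichotomous y coded 1/0.

    Returns the coefficient computed in two algebraically equivalent ways.
    """
    n = len(x)
    ones = [a for a, b in zip(x, y) if b == 1]
    zeros = [a for a, b in zip(x, y) if b == 0]
    n1, n0 = len(ones), len(zeros)
    mean_all = sum(x) / n
    s_x = math.sqrt(sum((a - mean_all) ** 2 for a in x) / n)  # divisor n
    m1, m0 = sum(ones) / n1, sum(zeros) / n0
    via_groups = (m1 - m0) / s_x * math.sqrt(n1 * n0) / n
    via_grand_mean = (m1 - mean_all) / s_x * math.sqrt(n1 / n0)
    return via_groups, via_grand_mean


def pearson_r(x, y):
    """Plain Pearson correlation, used here only as a cross-check."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return sxy / math.sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))


scores = [3, 5, 6, 8, 9, 10]   # continuous test scores (X)
group = [0, 0, 1, 0, 1, 1]     # dichotomous criterion (Y)
r_a, r_b = point_biserial(scores, group)
print(round(r_a, 4), round(r_b, 4), round(pearson_r(scores, group), 4))
```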

The test scores and the criterion indicators may both be represented by dichotomous alternatives (for example, normal development vs. developmental delay, or agreement vs. disagreement of answers with the key). In that case Pearson's association coefficient (the φ coefficient) is used, which is essentially a simplification of the equation for $r_{xy}$:

$$
r_\varphi = \frac{p_{xy} - p_x p_y}{\sqrt{p_x q_x p_y q_y}},
$$

where each variable takes the values one and zero; $p_x$, $p_y$ are the proportions of cases with a value of one on X and on Y; $q_x$, $q_y$ are the proportions of cases with zero on X and on Y ($q = 1 - p$); $p_{xy}$ is the proportion of cases with a value of one on both X and Y.
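A sketch of the φ coefficient built from the proportions just defined, assuming the standard form $\varphi = (p_{xy} - p_x p_y)/\sqrt{p_x q_x p_y q_y}$ with $q = 1 - p$. The 0/1 data are invented (for example, 1 = normal development, 0 = delay, on both the test and the criterion).

```python
import math


def phi_coefficient(x, y):
    """Pearson's association (phi) coefficient for two 0/1 variables."""
    n = len(x)
    p_x = sum(x) / n   # proportion of ones on X
    p_y = sum(y) / n   # proportion of ones on Y
    p_xy = sum(1 for a, b in zip(x, y) if a == 1 and b == 1) / n  # ones on both
    q_x, q_y = 1 - p_x, 1 - p_y
    return (p_xy - p_x * p_y) / math.sqrt(p_x * q_x * p_y * q_y)


test_result = [1, 1, 1, 0, 0, 0]
criterion = [1, 1, 0, 0, 0, 1]
print(round(phi_coefficient(test_result, criterion), 4))  # prints 0.3333
```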

Another case arises when both series being compared are expressed on a qualitative (nominal) scale of categories. Then Pearson's contingency coefficient is used:

$$
C = \sqrt{\frac{\chi^2}{\chi^2 + n}},
$$

where $n$ is the number of observations. In this equation, the chi-square statistic ($\chi^2$) serves as an intermediate value.
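As a sketch, assuming the standard Pearson contingency coefficient $C = \sqrt{\chi^2/(\chi^2 + n)}$, the code below computes $\chi^2$ from a table of observed frequencies and then $C$. The 2×2 table is invented.

```python
import math


def chi_square(table):
    """Pearson chi-square statistic for a contingency table given as a list of rows."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    return chi2, n


def contingency_coefficient(table):
    """Pearson's contingency coefficient C = sqrt(chi2 / (chi2 + n))."""
    chi2, n = chi_square(table)
    return math.sqrt(chi2 / (chi2 + n))


# rows: test category A / B; columns: criterion category 1 / 2
observed = [[20, 10],
            [10, 20]]
print(round(contingency_coefficient(observed), 4))  # prints 0.3162
```

Note that $C$ cannot reach 1, and its maximum depends on the table size, so values from tables of different dimensions are not directly comparable.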

Alongside validity coefficients determined in the traditional way, there are other measures for quantifying a test's validity. One of them is the J-coefficient (proposed by E. Primoff, 1975), which is one of the indicators of synthetic validity. Determining it requires a list of the elements of a complex activity or ability, expressed in terms of professional or other specific actions, together with expert ratings of the relative importance of these elements. The final analysis correlates test scores with the individual elements of real activity, taking their specific weights into account. Statistical processing is based on multiple regression. The correlation of each element of activity with the criterion activity as a whole is multiplied by the partial weight of that element in the test, and the resulting products are summed.
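A deliberately simplified sketch of the final summation step described above: each activity element's correlation with the criterion activity is multiplied by that element's weight in the test, and the products are summed. The function name and the numbers are hypothetical; the expert-rating and multiple-regression stages of the full procedure are not modeled.

```python
def j_coefficient(element_criterion_r, element_weights):
    """Sum of products r_i * w_i over the elements of the activity.

    element_criterion_r: correlation of each activity element with the
        criterion activity as a whole (hypothetical inputs).
    element_weights: partial weight of each element in the test.
    """
    if len(element_criterion_r) != len(element_weights):
        raise ValueError("one weight is needed per activity element")
    return sum(r * w for r, w in zip(element_criterion_r, element_weights))


r_elements = [0.6, 0.4, 0.3]   # invented correlations for three job elements
weights = [0.5, 0.3, 0.2]      # invented weights of those elements in the test
print(round(j_coefficient(r_elements, weights), 2))  # prints 0.48
```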

Validity coefficients are important but far from exhaustive characteristics of a test's validity. Note that validity is not measured; it is only judged. The methodological materials accompanying a test may report validity coefficients, but the actual validity of the test for a specific use is judged from a combination of very diverse information obtained in various ways. Validity is described not quantitatively but with terms such as "adequate", "satisfactory", "insufficient", and so on. Validity coefficients are thus only one element of the complex process of characterizing a test's validity.

A single calculated validity coefficient can be questionable for many reasons. First, the conditions under which a test's validity is established can never be fully taken into account: there are always many unaccounted-for facts, situations, and conditions. Second, the logic of criterion validation itself requires that the criterion be valid, and verifying that is a very difficult problem. Moreover, tests are often validated not against an essential criterion but against the most readily available one. Thus, tests of general abilities are compared not with criteria of the quality of thinking or with neurophysiological and psychological correlates of aptitudes and abilities, but with indicators of academic achievement or performance in a particular activity. These indicators are themselves complex and, besides intelligence, are affected by many other factors. Third, criterion validation assumes that the validation sample fully represents the population to which the conclusions about the test are extended. In practice this requirement is extremely difficult to meet, especially in predictive validation.

The greatest difficulty in interpreting validity coefficients is associated with the following circumstance. Initial validation is, as a rule, based on a set of external social and pragmatic criteria, because the main goal of validation is to determine the practical value of the technique being developed. Here the criteria are indicators of direct value to particular branches of practice, for example "academic success", "labor productivity", "criminality", "health status", and so on. When targeting these categories, validation solves two problems at once: measuring validity proper and evaluating the pragmatic effectiveness of the psychodiagnostic method. If a correlation is found, both problems can be assumed, with a certain degree of probability, to have been solved positively. If no correlation is found, however, uncertainty remains: either the procedure itself is invalid (the test score does not reflect, for example, the operator's stress resistance), or the hypothesis of a causal relationship between the mental property and the socio-pragmatic indicator is false (stress resistance does not affect the number of critical situations).

Besides these theoretical and methodological difficulties, the statistical reliability of the calculated coefficients must be taken into account and ensured. When drawing conclusions about validity from a coefficient, one must be sure that it did not arise from random deviations in the sample. It is also necessary to estimate the standard errors of test scores. The standard error of measurement indicates the permissible error limits of individual scores caused by the limited reliability of the test. Similarly, the standard error of estimate indicates the limits of possible error in the predicted value of an individual's criterion score caused by the limited validity of the test.

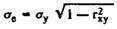

The standard error of estimate can be determined by the following equation:

$$
\sigma_{est} = \sigma_y \sqrt{1 - r_{xy}^2},
$$

where $\sigma_y$ is the standard deviation of the criterion scores and $r_{xy}$ is the validity coefficient. The factor $\sqrt{1 - r_{xy}^2}$ expresses the size of the error relative to the error of simple guessing, that is, at zero validity. If $\sqrt{1 - r_{xy}^2} = 1$, the error is as large as with guessing. If the validity coefficient is 0.80, then $\sqrt{1 - r_{xy}^2} = \sqrt{1 - 0.64} = 0.60$, i.e., the error is 60% of what it would be under random assignment.
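A short numerical check of the standard error of estimate, assuming $\sigma_{est} = \sigma_y\sqrt{1 - r_{xy}^2}$ as described above. The companion standard error of measurement uses the classical test theory formula $\sigma_x\sqrt{1 - r_{tt}}$, where $r_{tt}$ is the reliability coefficient; that formula is standard but is not written out in this lecture. The numbers are illustrative.

```python
import math


def standard_error_of_estimate(sd_criterion, validity_r):
    """Error of predicted criterion scores caused by limited validity."""
    return sd_criterion * math.sqrt(1 - validity_r ** 2)


def standard_error_of_measurement(sd_test, reliability_r):
    """Classical test theory SEM (assumed here; not given in the lecture)."""
    return sd_test * math.sqrt(1 - reliability_r)


# With validity 0.80 the error is 60% of the criterion SD.
print(round(standard_error_of_estimate(1.0, 0.80), 2))
# With zero validity the error equals the full criterion SD (pure guessing).
print(round(standard_error_of_estimate(10.0, 0.0), 2))
print(round(standard_error_of_measurement(15.0, 0.91), 2))
```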

Apparent validity is the impression of a test, of its scope, effectiveness, and predictive value, that arises in the test taker or in any other person who has no special information about how the method is used and what it is aimed at. Strictly speaking, apparent validity is not a component of objectively established validity. At the same time, high apparent validity is highly desirable: it is an essential determinant of the subjects' motivation in the testing situation and encourages a more serious and responsible attitude toward the work and toward the psychologist's conclusions.

A sufficient level of apparent validity is especially important for methods intended for examining adults. If the test tasks seem frivolous or excessively easy, out of keeping with the essence of what is being studied, subjects may adopt an ironic, negatively critical, or even hostile attitude toward the examination. Conversely, an exaggerated notion of the technique's capabilities and an inadequate understanding of its orientation and predictive value can cause excessive motivation, undesirable emotional tension, and a tendency to simulate or dissimulate answers. In both cases, inadequate apparent validity causes the actual validity of the test to drop sharply.

The idea that test takers and users of psychodiagnostic information form about apparent validity is largely tied to the name of the method, since this is the part of the information about a test most accessible to non-specialists. Vague, overly general names on questionnaire forms and test booklets, which may be misinterpreted, should be avoided (for example, "Personality Test", "Mental Ability Test", "Comprehensive Achievement Assessment Battery"). An adequate idea of the method's validity is supported by including brief information about the purpose of the study in the instructions for the test taker.

Apparent validity is enhanced by using task texts that are as natural as possible with respect to the subjects' age, gender, and occupation. To increase apparent validity, obscure wording and special terms should be avoided. An inadequate overestimation of apparent validity gives rise to the phenomenon of criterion contamination.

Note that in English-language psychodiagnostics apparent validity is called "face validity" and also "faith validity".

In the study of the validity of projective techniques, the most thoroughly investigated aspect is concurrent criterion validity. It is most often studied by comparing results in contrasting groups. Since most projective tests are used in clinical psychodiagnostics, the criterion most often taken is the objective one of a medical diagnosis, which records the fact of "health" or "illness". Validation identifies indicators (symptoms) that reliably differentiate subjects with respect to a specific criterion. This model was used to validate the TAT.

Another common way of checking concurrent validity is to compare test data with a "personality portrait". Personality information can be gathered from interviews, observations, expert opinions of teachers, and the like.

Predictive validity is studied less often than concurrent validity. It is mainly analyzed through long-term and retrospective observations. The difficulties of long-term validation stem from the problem of establishing a relationship between a prognostic criterion and current personality characteristics.

The problem of criterion validation of projective tests is shaped by the specific features that distinguish projective techniques from objective tests and personality questionnaires. Projective techniques aim at broad, survey-like information about diverse personality traits, whereas objective tests and questionnaires measure a limited range of features and properties, for which it is much easier to choose a validation criterion.

In a projective test, a given personality property manifests itself differently in different subjects. For example, one subject may show many signs of aggression and only slight signs of creative ability, while in another subject's responses creative signs dominate and aggressiveness may be absent. Because subjects do not share a single hierarchy of symptoms, criterion validity indices are low if the test is analyzed symptom by symptom across the entire validation sample. Higher and more adequate indices are obtained by analyzing "constellations", i.e., complexes of symptoms associated with a specific diagnosis.

A little-developed but extremely important issue in analyzing the criterion and construct validity of projective techniques is the evident difference between real (criterion) external behavior and its projection into the subject's fantasy. For example, sexual impulses, against a background of social anxiety and a particular upbringing, are naturally more pronounced in fantasy than in real behavior. In the presence of psychopathic abnormalities, however, aggressive sexuality can manifest itself equally strongly both in projection and in criterion behavior.

Internal consistency is a characteristic of a test indicating how homogeneous its tasks are with respect to the quality, ability, or behavior the test measures. The criterion of internal consistency is an essential element of a test's construct validity, because it characterizes tasks by their focus on a particular construct and measures the contribution of each item (task or question) to capturing the psychological property under study. The maximum validity of a test is achieved by selecting items that correlate positively with the overall test result while correlating minimally with each other. If individual tasks are highly intercorrelated (positively or negatively), the test will be overloaded with redundant items that nearly duplicate one another. This unjustifiably increases the number of tasks, which lengthens testing, increases the workload of both subject and researcher, and can impair the reliability of the technique. Selecting tasks by the criterion of internal consistency therefore ensures the greatest pragmatic effectiveness of the test: as a rule, the method ends up composed mainly of tasks that are as closely connected as possible with the indicator under study.

In practice, internal consistency is determined by examining the correlation between the test result as a whole and each individual task. Most often this is done with an index of correlation between the outcome on each task ("completed" vs. "not completed", agreement or disagreement with a questionnaire statement, the answer chosen for a questionnaire item, etc.) and the total score on all test items. The most consistent question or task (or combination of them) is then the one that "works" in agreement with the whole set of the method's items. It does not take a very observant reader to notice a certain contradiction between the two parts of the definition of internal consistency: tasks should belong to the same sphere of behavior as much as possible, yet such tasks should not correlate with each other. A simple biserial correlation computed in this way cannot resolve the contradiction. That can be achieved with a more complex procedure that analyzes partial correlations between the overall test result and individual tasks and involves constructing a multiple regression equation. In this equation each item has its own "weight" coefficient that quantitatively reflects the contribution of that task to the overall result, separated from the contributions of the other tasks. The advantage of this method is that the weight (the share of a point in the overall diagnosis) can be used as the "key" for that task, which considerably increases the trustworthiness of personality questionnaire results. With such coefficients for every question, the final score can be calculated differentially, according to the importance of each symptom.

Traditionally, questionnaire results are scored by crediting "1" or "0" points to the final result when an answer matches or does not match the "key". The importance of individual answers for the trait under study is ignored, which reduces the accuracy of the study.
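The sketch below illustrates the two scoring ideas just described: item-total correlations (against the uncorrected total, which includes the item itself) as a simple internal-consistency check, and differential (weighted) scoring compared with traditional 1/0 scoring. The responses and the weights are invented; in practice the weights would come from the multiple-regression analysis mentioned above, which is not implemented here.

```python
import math


def pearson_r(x, y):
    """Pearson correlation of two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return sxy / math.sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))


def item_total_correlations(responses):
    """Correlation of each item (1 = matches key, 0 = does not) with the raw total."""
    totals = [sum(row) for row in responses]
    n_items = len(responses[0])
    return [pearson_r([row[j] for row in responses], totals) for j in range(n_items)]


def weighted_score(row, weights):
    """Differential scoring: each keyed answer contributes its own weight."""
    return sum(w * a for w, a in zip(weights, row))


# rows = subjects, columns = items (invented data)
responses = [
    [1, 1, 1, 0],
    [1, 1, 0, 1],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
    [0, 0, 0, 1],
]
r_items = item_total_correlations(responses)
print([round(r, 2) for r in r_items])

weights = [0.5, 0.3, 0.2, 0.0]  # hypothetical regression-based weights
subject = responses[1]
print(sum(subject), round(weighted_score(subject, weights), 2))  # 1/0 vs weighted
```

Items whose correlation with the total is close to zero (here the third and fourth) would be candidates for revision.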

Internal consistency is sometimes analyzed by comparing contrasting groups formed from the subjects with the highest and the lowest total scores. Performance on each item in the high-scoring group is compared with performance in the low-scoring group. Tasks on which subjects in the first group do not score significantly better than subjects in the second are considered invalid. Imperfect tasks detected in this way are either rejected or revised.

So far we have considered the internal consistency of individual tasks within a single psychological test. Internal consistency is, however, also an important characteristic of the diagnostic value of the subtests of a complex test battery. In that case the criterion of internal consistency concerns the correlations between the scores on individual subtests and the integrative result. Many intelligence tests, for example, consist of subtests that can be used separately (vocabulary, arithmetic, practical, general knowledge subtests, etc.). When such batteries are constructed, the degree of connection between each subtest's score and the overall IQ is determined and, just as in the previous case, a subtest that correlates poorly with the battery as a whole is rejected or reworked. A correlation matrix showing the quantitative relationships among the individual subtests, and between them and the overall score, is evidence of the internal consistency of the battery and of the contribution ("value") of each subtest to studying a complex construct. Recall that the correlations between individual subtests should be minimal, while the correlation of each with the final result should be maximal.

Analyzing the internal consistency of a method's components serves not only the practical goal of increasing the homogeneity of tasks. This criterion also deepens the understanding of the nature of the construct under study and of its content and theoretical status. By analyzing the content of the items already selected by the criterion of internal consistency, one can determine the specific boundaries of the construct, for example the set of particular qualities of intelligence that the test measures.

Procedurally, determining internal consistency is close to determining the discriminative power of test items, which is considered in the next, fourth section. Note that despite the related technique, these criteria are completely different. Internal consistency evaluates the test as a whole, a kind of "internal" validity inherent in the totality of tasks, whereas discrimination indices reflect the diagnostic power of individual items only. Determining the discriminative power of individual tasks is therefore a preparatory step for analyzing the internal consistency of the test as a whole.

The discriminative power of test items is the ability of individual items to distinguish subjects with high test results from those with low results, and subjects with high performance in real (criterion) activity from those whose performance is low.

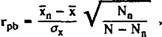

Any answer of a test taker can be represented on a dichotomous scale: "right" or "yes" scores one point, "wrong" or "no" scores zero. The sum of these points over all test tasks is the primary ("raw") score. The degree to which success on an individual task coincides with success on the whole test in the sample of subjects is a direct indicator of the task's discriminative power. It is calculated as a point-biserial correlation coefficient and is called the discrimination coefficient (discrimination index):

$$
r_{pb} = \frac{\bar{x}_n - \bar{x}}{\sigma_x}\sqrt{\frac{N_n}{N - N_n}},
$$

where $\bar{x}$ is the arithmetic mean of all individual test scores; $\bar{x}_n$ is the arithmetic mean of the scores of subjects who completed this item correctly (for a personality questionnaire, whose answer matches the "key"); $\sigma_x$ is the standard deviation of the individual test scores in the sample (computed with divisor $N$); $N_n$ is the number of subjects who solved the item correctly (or whose answer to this questionnaire item matches the "key"); $N$ is the total number of subjects.

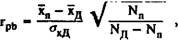

The equation above is suitable for calculating the discrimination coefficient only when all subjects have answered every test item without exception. When some answers are missing, it makes sense to use a different formula:

$$
r_{pb} = \frac{\bar{x}_n - \bar{x}_d}{\sigma_{x_d}}\sqrt{\frac{N_n}{N_d - N_n}},
$$

where $\bar{x}_d$ is the arithmetic mean of the individual scores of subjects who answered the item; $\sigma_{x_d}$ is the standard deviation of the individual test scores of those who answered the item; $N_d$ is the total number of subjects who answered the item.
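A sketch of a discrimination coefficient that tolerates omitted answers, assuming the point-biserial form $r = \frac{\bar{x}_n - \bar{x}_d}{\sigma_{x_d}}\sqrt{N_n/(N_d - N_n)}$, with the mean and standard deviation (divisor $N_d$) taken only over subjects who answered the item. When nobody skips the item, $N_d = N$ and it reduces to the full-response formula. The item coding (1, 0, or `None` for no answer) and the data are invented.

```python
import math


def discrimination_index(item, totals):
    """Point-biserial discrimination coefficient for one item.

    item:   1 = correct (or matches the key), 0 = wrong, None = not answered
    totals: raw total test scores of the same subjects
    """
    answered = [(a, t) for a, t in zip(item, totals) if a is not None]
    n_d = len(answered)                      # subjects who answered the item
    scores = [t for _, t in answered]
    mean_d = sum(scores) / n_d
    sd_d = math.sqrt(sum((t - mean_d) ** 2 for t in scores) / n_d)
    correct = [t for a, t in answered if a == 1]
    n_n = len(correct)                       # subjects who solved the item
    mean_n = sum(correct) / n_n
    return (mean_n - mean_d) / sd_d * math.sqrt(n_n / (n_d - n_n))


item = [1, 1, None, 0, 1, 0, 0]
totals = [9, 8, 7, 5, 7, 3, 4]
print(round(discrimination_index(item, totals), 3))
```

An item that everybody or nobody among the respondents solved has no defined coefficient; this sketch would raise `ZeroDivisionError` in that case.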

The discrimination coefficient ranges from -1 to +1. A high positive $r_{pb}$ means that the item reliably separates subjects with high and low scores. A high negative $r_{pb}$ indicates that the item is unsuitable: its partial result is poorly connected with the final conclusion.

The discrimination coefficient of a test item is essentially an indicator of the item's criterion validity, since it is determined relative to an external criterion: the final test result or the subjects' productivity in the criterion activity. The discrimination index can also be determined by the method of contrasting groups. A prerequisite for this method is a near-normal distribution of scores on the validation criterion. The proportion of subjects in the contrasting groups may vary widely with sample size: the larger the sample, the smaller the proportion of subjects to which the high- and low-scoring groups can be limited. The lower limit for each group is 10% of the sample and the upper limit is 33%. Decile (10%) groups are used rather rarely, since a small number of cases reduces the statistical reliability of discrimination indices. Most often, 25 to 27% of the sample are assigned to each group.

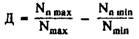

The discrimination index is calculated as the difference between the proportions of subjects who solved the item, taken separately for the high- and low-performing groups. This index is denoted D. Thus:

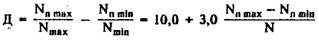

Since $N_{max} = N_{min}$ (each group containing between 0.10 and 0.33 of the sample), the equation takes the form

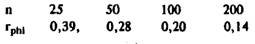
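The D index can be sketched as follows, assuming equal-sized contrasting groups of 27% taken from the top and bottom of the distribution of total scores (the share is a parameter, since the text allows 10% to 33%). Ties at the group boundary are broken arbitrarily by sort order, which is a simplification. The data are invented.

```python
def contrast_groups(totals, fraction=0.27):
    """Indices of the lowest and highest `fraction` of subjects by total score."""
    k = max(1, round(len(totals) * fraction))
    order = sorted(range(len(totals)), key=lambda i: totals[i])
    return order[:k], order[-k:]


def discrimination_d(item, totals, fraction=0.27):
    """D = proportion solving in the high group minus proportion solving in the low group."""
    low, high = contrast_groups(totals, fraction)
    p_high = sum(item[i] for i in high) / len(high)
    p_low = sum(item[i] for i in low) / len(low)
    return p_high - p_low


totals = [12, 3, 8, 15, 6, 10, 2, 14, 7, 9]   # raw test scores of 10 subjects
item = [1, 0, 0, 1, 1, 1, 0, 1, 0, 1]          # 1 = item solved
print(round(discrimination_d(item, totals), 3))
```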

Finally, the four-field (φ) correlation coefficient can be used:

$$
r_\varphi = \frac{f_g - f_d}{2\sqrt{pq}},
$$

where $f_g$ is the proportion of subjects in the maximum-result group who solved the item correctly; $f_d$ is the proportion of subjects in the minimum-result group who solved it correctly; $p = (f_g + f_d)/2$ is the overall proportion of subjects in both groups who solved the item correctly; $q = 1 - p$ is the proportion who gave a wrong answer.
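A sketch of the four-field coefficient for equal-sized contrasting groups, assuming $r_\varphi = (f_g - f_d)/(2\sqrt{pq})$ with $p = (f_g + f_d)/2$ and $q = 1 - p$. It is cross-checked against the general φ formula applied to the same data written out subject by subject (1 = high group, 0 = low group). The proportions are invented.

```python
import math


def phi_contrast(f_g, f_d):
    """Four-field coefficient from the proportions solving in the high and low groups."""
    p = (f_g + f_d) / 2   # overall proportion solving (equal group sizes assumed)
    q = 1 - p
    return (f_g - f_d) / (2 * math.sqrt(p * q))


def phi_general(x, y):
    """General phi coefficient for two 0/1 variables, used for cross-checking."""
    n = len(x)
    p_x, p_y = sum(x) / n, sum(y) / n
    p_xy = sum(1 for a, b in zip(x, y) if a == b == 1) / n
    return (p_xy - p_x * p_y) / math.sqrt(p_x * (1 - p_x) * p_y * (1 - p_y))


# Three subjects per group: all solve in the high group, one of three in the low group
group = [1, 1, 1, 0, 0, 0]
solved = [1, 1, 1, 1, 0, 0]
print(round(phi_contrast(1.0, 1 / 3), 4), round(phi_general(solved, group), 4))
```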

The critical values of the coefficient that indicate the diagnostic value of an item at the significance level p < 0.05 depend on the number of subjects examined (n):

Maximum accuracy in determining $r_\varphi$ is achieved when each contrasting group contains about 27% of the sample.

When analyzing discriminative power, special attention should be paid to the statistical significance and reliability of the correlation coefficients. When the discrimination coefficient approaches zero and its significance is low, the test item should be checked and revised. Discriminative power is one of the main indicators of the diagnostic value of personality questionnaires, because many factor-based and other questionnaires aim at a dichotomous classification of subjects according to polar personality characteristics. The value of the discrimination index for questionnaires is further increased by the possibility of calculating the final result differentially (see the previous section). Analysis of item discrimination is widely used in developing and checking objective tests, tests of general and special abilities, and the like. It is also very important for achievement tests, and selecting the most discriminating items is the decisive stage in creating and checking screening techniques. By contrast, in projective tests the analysis of discriminative power, although fundamentally important, recedes into the background because of the difficulty of quantifying projective indicators, the occasional impossibility of correlation analysis, and the qualitative nature of the discrimination characteristic.

Calculating discrimination indices is quite laborious, especially when a test contains many tasks, but special software for processing empirical data on a PC makes these procedures much easier.

Item difficulty is a characteristic of a test item that reflects the statistical likelihood of its being solved. Together with discriminative power and internal consistency, this set of indicators plays a leading role in assembling a test from tasks that fit its measurement objectives.

Psychology distinguishes subjective (psychological) and statistical (objective) difficulty. The subjective difficulty of a task is associated with an individual psychological barrier whose size depends on the conditions of solution (time limit, clarity of instructions, degree of non-triviality of the solution, etc.), on the level of the knowledge and skills needed to solve the problem, and on some other factors. The individual influence of these factors on test results reduces the reliability and trustworthiness of the data. Measures are therefore taken to neutralize the factors of subjective difficulty, which is achieved through special sampling and standardization of the testing procedure.

One measure of statistical difficulty is the percentage of subjects in the sample who solved or did not solve the item. For example, if only 20% of the subjects found the correct solution, the task can be characterized as too hard for that sample, and if 80% coped with it successfully, the task is considered easy. Item difficulty is a relative characteristic, since it depends on the characteristics of the sample (age, occupation, sociocultural background). Difficulty is primarily a characteristic of intellectual tasks, for which a criterion of "right" or "wrong" outcome can exist. For questions or situational tasks (tasks that model a situation) in personality research methods, the statistical concept of difficulty is not applicable.

For a psychodiagnostic method to work well, the stage of selecting tasks by difficulty is of great importance. If a test contains overly difficult tasks, its reliability and validity drop sharply. This happens because the statistical characteristics of the scores deteriorate owing to the small number of solutions obtained, and because the number of random scores grows as subjects try to guess the correct answer. Repeated failure while working on a test affects the test taker negatively, which in turn reduces the apparent validity not only of that test but also of subsequent research. Failures can also provoke an emotional reaction such as refusing to cooperate further. Overly easy tasks lead to similar results: most subjects quickly cope with the entire set, and from that point on the test loses its ability to rank the results. In addition, the scores vary little, and the subjective attitude toward the test suffers.

The main purpose of analyzing item difficulty is to select items of optimal difficulty and to arrange them in a specific order. Naturally, items of low statistical difficulty are placed at the beginning of the test and difficult items at the end. One or two simple tasks placed before the main set are used as examples. Arranging tasks in order of increasing difficulty, expressed by the proportion of subjects in the sample who perform them successfully, makes it possible to determine approximately each individual subject's difficulty threshold. This threshold can already indicate the subject's rank in the group on the test. A similar principle underlies measurement in the first tests of mental abilities (for example, the Binet-Simon scale), where the indicator of "mental age" was success on a task of moderate difficulty for a given age group. The principle of arranging tasks in order of increasing difficulty has been retained in most modern psychometric tests of general abilities and professional success, in achievement tests, and in some others.

In speed tests, as opposed to power tests, the difficulty of individual tasks is naturally low and practically constant. The number of such tasks is chosen so that no subject in the sample can complete all of them within the allotted time.

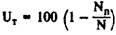

The main indicator of task difficulty is the difficulty index:

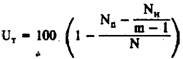

where $U_t$ is the difficulty index (reflecting the subjects who did not solve the task); $N_0$ is the number of subjects who solved the task correctly; $N$ is the total number of subjects.

When random success (guessing) has to be taken into account for tasks with an “imposed” (multiple-choice) answer, the index is corrected for guessing,

where $N_n$ is the number of subjects who did not solve the task; $m$ is the number of answer choices.
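Both formulas survive only as images, so the following sketch rests on stated assumptions: $U_t$ is taken as the percentage of subjects who did not solve the task, $U_t = 100\,(N - N_0)/N$, and the guessing correction is the classical one that subtracts $N_n/(m-1)$ expected lucky guesses from $N_0$. The function names are hypothetical.

```python
def difficulty_index(n_correct: int, n_total: int) -> float:
    """Assumed form of U_t: percentage of subjects who did not solve the task."""
    return 100.0 * (n_total - n_correct) / n_total


def corrected_difficulty_index(n_correct: int, n_wrong: int,
                               n_total: int, m: int) -> float:
    """Assumed guessing correction for a task with m answer choices.

    The classical correction removes n_wrong / (m - 1) expected lucky
    guesses from the number of correct solutions before computing U_t.
    """
    if m < 2:
        raise ValueError("a forced-choice task needs at least two options")
    corrected_correct = n_correct - n_wrong / (m - 1)
    return 100.0 * (n_total - corrected_correct) / n_total


# Invented example: 100 subjects, 60 solved, 40 did not, 5 answer options.
print(difficulty_index(60, 100), corrected_difficulty_index(60, 40, 100, 5))
```

The correction makes a multiple-choice task look harder than its raw success rate suggests, because part of that success is attributed to chance.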

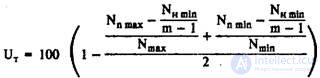

When the sample of subjects is large, the difficulty index can be determined using contrasting subgroups. Each subgroup contains 27% of the total sample: the people with the best and the worst results on the test as a whole. The difficulty index is then the arithmetic mean of the indices for the groups with the highest and the lowest results:
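A sketch of the contrasting-groups estimate, assuming each subject has a total test score and a 0/1 result on the item under study. The 27% share comes from the text; the per-group index again assumes that difficulty is the percentage of the group that failed the item, and ties in total score are broken by input order. The data are invented.

```python
from typing import Sequence


def contrast_group_difficulty(total_scores: Sequence[float],
                              item_solved: Sequence[int],
                              share: float = 0.27) -> float:
    """Mean item difficulty in the top and bottom `share` of subjects."""
    n = len(total_scores)
    k = max(1, round(share * n))  # size of each extreme group
    ranked = sorted(range(n), key=lambda i: total_scores[i])  # low to high
    low, high = ranked[:k], ranked[-k:]

    def fail_pct(group: Sequence[int]) -> float:
        # Percentage of the group that did not solve the item (assumed index form).
        return 100.0 * sum(1 - item_solved[i] for i in group) / len(group)

    return (fail_pct(high) + fail_pct(low)) / 2


# Invented data for ten subjects: total test scores and results on one item.
scores = [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]
solved = [0, 0, 1, 0, 1, 1, 0, 1, 1, 1]
print(contrast_group_difficulty(scores, solved))
```

With ten subjects each extreme group has three people; the middle 46% of the sample is ignored, which is what makes this shortcut cheap on large samples.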

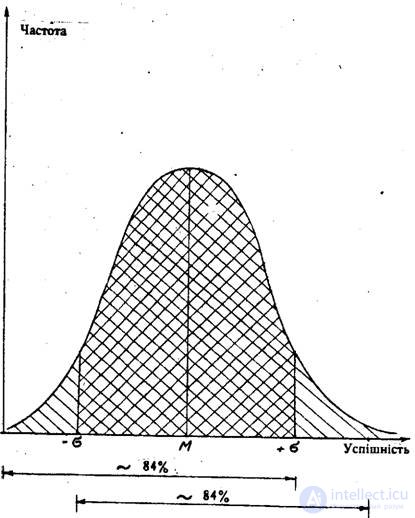

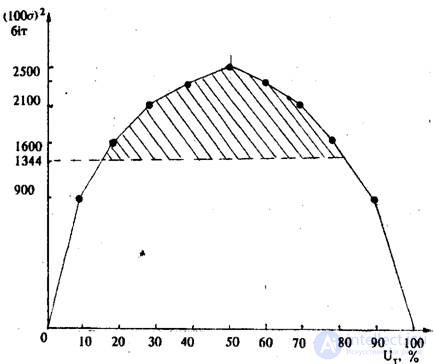

If we assume that the frequencies of the subjects' scores on the tasks follow the normal law, then about 68% of all subjects fall within M ± σ, and about 16% fall beyond each of these limits. These frequencies are taken as thresholds when selecting tasks for the initial version of the test. Thus, once the difficulty indices are determined, the first tasks to be discarded are those solved by more than 84% of the subjects (beyond M − σ) and those that 84% of the sample failed to solve (beyond M + σ) (Fig. 2).
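Under the normal-curve assumption stated above, the 68% and 16% figures and the resulting selection rule can be checked numerically. This is a sketch: `keep_item` is a hypothetical helper that keeps items solved by 16% to 84% of subjects, and the item proportions in the example are invented.

```python
import math


def normal_cdf(z: float) -> float:
    """Standard normal cumulative distribution function via math.erf."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


# Share of a normal distribution within M ± σ, and above M + σ.
within_one_sd = normal_cdf(1.0) - normal_cdf(-1.0)  # about 0.683
above_one_sd = 1.0 - normal_cdf(1.0)                # about 0.159


def keep_item(p_solved: float, low: float = 0.16, high: float = 0.84) -> bool:
    """Hypothetical rule: keep an item solved by 16% to 84% of subjects."""
    return low <= p_solved <= high


# Invented proportions of subjects solving four items.
p_items = [0.95, 0.70, 0.50, 0.10]
kept = [j for j, p in enumerate(p_items) if keep_item(p)]
print(round(within_one_sd, 3), round(above_one_sd, 3), kept)
```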

Fig. 2. Threshold frequencies of task solving used when selecting tasks by difficulty index

A more differentiated analysis of task difficulty divides the subjects into groups according to an external validity criterion, for example, according to performance in real activity. All subjects are distributed into subgroups according to their success in the criterion activity, and for each subgroup the success in solving each specific task is analyzed. Fig. 3 shows, as broken lines, the relationship between success in solving tasks and the scores on the validation criterion for four hypothetical test items.

Fig. 3. Changes in the success of solving problems in groups with different performance

Task No. 1 practically does not differentiate the subjects. The proportions of subjects who solved it differ very little across subgroups with different performance (Fig. 3 shows data from 50 subgroups). No consistent pattern of change in the number of subjects who solved the task is observed as performance increases.

For task No. 2, this dependence is observed in the range of 0–5 points. Task No. 2 is too easy and can be used in the test only if it falls within the 84% threshold, that is, if it is solved by no more than 84% of the subjects.

Task No. 3 is quite difficult. It differentiates subjects with high scores well, and if it is solved by more than 16% of the subjects, it can be included in the test.

Task No. 4 is the best of all. Its difficulty is close to the average for this sample, and it differentiates the subjects well according to the external validation criterion.
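The subgroup analysis behind Fig. 3 can be sketched as follows, assuming each subject has an integer score on the external criterion and a 0/1 result on one item. The function computes the share of subjects solving the item in each criterion subgroup, i.e. the points of one broken line in the figure. The data are invented to mimic a flat item (like task No. 1) and a well-differentiating item (like task No. 4).

```python
from collections import defaultdict
from typing import Dict, Sequence


def success_by_criterion(criterion: Sequence[int],
                         solved: Sequence[int]) -> Dict[int, float]:
    """Share of subjects solving one item within each criterion-score subgroup."""
    totals: Dict[int, int] = defaultdict(int)
    hits: Dict[int, int] = defaultdict(int)
    for score, s in zip(criterion, solved):
        totals[score] += 1
        hits[score] += s
    return {score: hits[score] / totals[score] for score in sorted(totals)}


# Invented data: criterion scores (e.g. performance ratings) for six subjects.
criterion = [1, 1, 2, 2, 3, 3]
item_flat = [1, 0, 1, 0, 1, 0]    # no trend across subgroups
item_rising = [0, 0, 0, 1, 1, 1]  # success rises with performance

print(success_by_criterion(criterion, item_flat))
print(success_by_criterion(criterion, item_rising))
```

Plotting each returned dictionary against the criterion scores gives curves of the kind shown in Fig. 3; a flat curve signals an item that does not differentiate the subjects.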

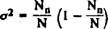

When selecting test tasks, one can use not only the difficulty index but also indicators derived from it. One such indicator is the standard deviation for qualitative (dichotomous) traits. It is calculated from the proportions of subjects who solved and who did not solve the task, and it can be used when the results of solving a task in the sample follow a binomial distribution. For larger samples

A more convenient indicator is calculated in bits:

The relationship between $(100\sigma)^2$ and $U_t$ is parabolic (Fig. 4). The easier or the more difficult a task, the worse it differentiates the subjects. The best in this respect are tasks solved by about 50% of the sample members. Tasks that fall into the shaded area, i.e. those with $(100\sigma)^2 \ge 16 \cdot 84 = 1344$, can be included in the test as far as difficulty is concerned. They should be distributed evenly across the test material in accordance with their difficulty indices.
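The σ formula itself is not preserved, but for a dichotomous (solved / not solved) item under the binomial assumption the standard deviation is $\sigma = \sqrt{pq}$ with $q = 1 - p$. Then $(100\sigma)^2 = P \cdot Q$ with $P$ and $Q$ in percent, a parabola in $P$ that peaks at 2500 for $P = 50$ and equals $16 \cdot 84 = 1344$ at the 16% / 84% limits. The function names and test proportions below are illustrative assumptions.

```python
import math


def dichotomous_sd(p_solved: float) -> float:
    """Standard deviation of a 0/1 item: sqrt(p * q), with q = 1 - p."""
    return math.sqrt(p_solved * (1.0 - p_solved))


def spread_index(p_solved: float) -> float:
    """(100σ)^2, which equals P% * Q% for a dichotomous item."""
    return (100.0 * dichotomous_sd(p_solved)) ** 2


THRESHOLD = 16 * 84  # 1344, the value at the 16% / 84% limits


def passes_threshold(p_solved: float) -> bool:
    """Hypothetical selection rule: item lies in the shaded area of Fig. 4."""
    return spread_index(p_solved) >= THRESHOLD


for p in (0.05, 0.30, 0.50, 0.95):
    print(p, round(spread_index(p)), passes_threshold(p))
```

The symmetry of $P \cdot Q$ is why a very easy item and a very hard item are rejected for the same reason: both produce almost no variation among subjects.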

Tasks can be selected by difficulty index at the same time as other correlation coefficients are calculated.

Fig. 4. Relationship between the difficulty index and the ability to differentiate subjects
