Lecture

The restricted Boltzmann machine (RBM) is a generative stochastic artificial neural network that can learn a probability distribution over its set of inputs.

RBMs were first invented under the name Harmonium by Paul Smolensky in 1986, and they became popular after Geoffrey Hinton and co-authors developed fast learning algorithms for them in the mid-2000s. RBMs have found applications in dimensionality reduction, classification, collaborative filtering, feature learning and topic modeling. Depending on the task, they can be trained in either a supervised or an unsupervised manner.

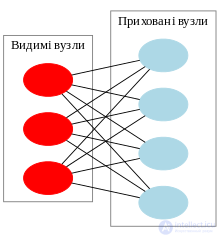

As their name implies, RBMs are a variant of Boltzmann machines, with the restriction that their neurons must form a bipartite graph: a pair of nodes taken one from each of the two groups of units (usually called the "visible" and "hidden" units, respectively) may have a symmetric connection between them, but there are no connections between nodes within the same group. By contrast, "unrestricted" Boltzmann machines may have connections between hidden units. This restriction allows for more efficient training algorithms than are available for the general class of Boltzmann machines, in particular the gradient-based contrastive divergence algorithm.

Restricted Boltzmann machines can also be used in deep learning networks. In particular, deep belief networks can be formed by "stacking" RBMs and, optionally, fine-tuning the resulting deep network with gradient descent and backpropagation.

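The standard type of RBM has binary-valued (Boolean/Bernoulli) hidden and visible units and consists of a matrix of weights $W = (w_{i,j})$ of size $m \times n$. Each weight element $w_{i,j}$ is associated with the connection between the visible (input) unit $v_i$ and the hidden unit $h_j$. In addition, there are bias weights (offsets) $a_i$ for the visible units $v_i$ and $b_j$ for the hidden units $h_j$. Given the weights and biases, the energy of a configuration (a pair of Boolean vectors) $(v, h)$ is defined as

$$E(v,h) = -\sum_{i} a_i v_i - \sum_{j} b_j h_j - \sum_{i}\sum_{j} v_i w_{i,j} h_j$$

or, in matrix notation,

$$E(v,h) = -a^{\mathsf T} v - b^{\mathsf T} h - v^{\mathsf T} W h.$$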
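To make the energy function $E(v,h) = -a^{\mathsf T} v - b^{\mathsf T} h - v^{\mathsf T} W h$ concrete, here is a minimal NumPy sketch that evaluates it both in matrix form and with the explicit double sum, so the two notations can be checked against each other. The function names `energy` and `energy_sum` are hypothetical, chosen only for illustration; it assumes `W` has shape $(m, n)$, `a` has length $m$ and `b` has length $n$.

```python
import numpy as np

def energy(v, h, W, a, b):
    """Matrix form: E(v, h) = -a^T v - b^T h - v^T W h.

    v: (m,) binary visible vector, h: (n,) binary hidden vector,
    W: (m, n) weights, a: (m,) visible biases, b: (n,) hidden biases.
    """
    return -a @ v - b @ h - v @ W @ h

def energy_sum(v, h, W, a, b):
    # The same energy written with explicit sums, term by term
    m, n = W.shape
    e = -sum(a[i] * v[i] for i in range(m))
    e -= sum(b[j] * h[j] for j in range(n))
    e -= sum(v[i] * W[i, j] * h[j] for i in range(m) for j in range(n))
    return e
```

Lower energy means a more probable configuration, which is why every term enters with a minus sign: a positive weight $w_{i,j}$ rewards $v_i$ and $h_j$ being on together.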

This energy function is analogous to the energy function of a Hopfield network. As in general Boltzmann machines, the probability distributions over hidden and/or visible vectors are defined in terms of the energy function: [9]

$$P(v,h) = \frac{1}{Z} e^{-E(v,h)}$$

where $Z$ is the partition function (statistical sum), defined as the sum of $e^{-E(v,h)}$ over all possible configurations; in other words, it is simply a normalizing constant that ensures the probabilities sum to 1. Similarly, the marginal probability of a visible (input) vector of Boolean values is the sum over all possible hidden-layer configurations: [9]

$$P(v) = \frac{1}{Z} \sum_{h} e^{-E(v,h)}$$
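For a very small RBM, the partition function $Z$ can be computed exactly by enumerating all $2^{m+n}$ configurations $(v,h)$. The sketch below does that to build the full joint table $P(v,h) = e^{-E(v,h)}/Z$ and the marginal $P(v) = \sum_h P(v,h)$. It is only an illustration under the assumption of tiny $m$ and $n$: the exponential number of terms is exactly why $Z$ is intractable for RBMs of realistic size. The helper names (`all_binary`, `joint_table`, `marginal_visible`) are hypothetical.

```python
import itertools
import numpy as np

def all_binary(k):
    # All 2**k binary vectors of length k, as rows of a float array
    return np.array(list(itertools.product([0, 1], repeat=k)), dtype=float)

def joint_table(W, a, b):
    """Exact P(v, h) for every configuration of a tiny RBM (exponential cost)."""
    m, n = W.shape
    V, H = all_binary(m), all_binary(n)
    # E[r, c] = energy of (V[r], H[c]) = -a.v - b.h - v^T W h
    E = -(V @ a)[:, None] - (H @ b)[None, :] - V @ W @ H.T
    unnorm = np.exp(-E)
    Z = unnorm.sum()  # partition function: sum over all (v, h)
    return V, H, unnorm / Z, Z

def marginal_visible(W, a, b):
    V, H, P, Z = joint_table(W, a, b)
    return V, P.sum(axis=1)  # P(v) = sum over h of P(v, h)
```

Because the hidden units do not interact, the sum over $h$ also factorizes into $P(v) = \frac{1}{Z} e^{a^{\mathsf T} v} \prod_j \left(1 + e^{b_j + (v^{\mathsf T} W)_j}\right)$, which gives a convenient cross-check for the brute-force marginal.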

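Since an RBM has the form of a bipartite graph, with no connections inside a layer, the units of one layer are mutually independent given the states of the other layer. [7] That is, for $m$ visible units and $n$ hidden units, the conditional probability of a configuration of the visible units $v$, given a configuration of the hidden units $h$, is

$$P(v \mid h) = \prod_{i=1}^{m} P(v_i \mid h).$$

Conversely, the conditional probability of $h$ given $v$ is

$$P(h \mid v) = \prod_{j=1}^{n} P(h_j \mid v).$$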

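The individual activation probabilities are given by

$$P(h_j = 1 \mid v) = \sigma\left(b_j + \sum_{i=1}^{m} w_{i,j} v_i\right)$$

and

$$P(v_i = 1 \mid h) = \sigma\left(a_i + \sum_{j=1}^{n} w_{i,j} h_j\right),$$

where $\sigma$ denotes the logistic sigmoid.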
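The factorized conditionals $P(h_j = 1 \mid v) = \sigma(b_j + \sum_i w_{i,j} v_i)$ and $P(v_i = 1 \mid h) = \sigma(a_i + \sum_j w_{i,j} h_j)$ can be computed for a whole layer with one matrix product, and each unit can then be sampled independently. A minimal NumPy sketch, assuming the same shapes as before (`W` is $m \times n$); the function names are illustrative, not from any particular library.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def p_h_given_v(v, W, b):
    # P(h_j = 1 | v) = sigma(b_j + sum_i w_ij v_i), for all j at once
    return sigmoid(b + v @ W)

def p_v_given_h(h, W, a):
    # P(v_i = 1 | h) = sigma(a_i + sum_j w_ij h_j), for all i at once
    return sigmoid(a + W @ h)

def sample_bernoulli(p, rng):
    # Units are conditionally independent, so each one is sampled separately:
    # unit k is on with probability p[k]
    return (rng.random(p.shape) < p).astype(float)
```

Alternating `p_h_given_v` and `p_v_given_h` with `sample_bernoulli` is block Gibbs sampling: all hidden units are updated in parallel, then all visible units. This parallel update is what the bipartite restriction buys.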

Although the hidden units are Bernoulli, the visible units of an RBM can be multinomial (each taking one of several discrete values). In this case, the logistic function for the visible units is replaced by the softmax function

$$P(v_i^k = 1 \mid h) = \frac{\exp\left(a_i^k + \sum_j W_{ij}^k h_j\right)}{\sum_{k'=1}^{K} \exp\left(a_i^{k'} + \sum_j W_{ij}^{k'} h_j\right)}$$

where $K$ is the number of discrete values that the visible units can take. Such RBMs are used in topic modeling [6] and recommender systems. [4]
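The softmax visible layer $P(v_i^k = 1 \mid h) \propto \exp(a_i^k + \sum_j W_{ij}^k h_j)$ can be sketched in NumPy as follows. The storage layout is an assumption made for illustration: weights `W` of shape $(m, K, n)$ holding $W_{ij}^k$ at `W[i, k, j]`, biases `a` of shape $(m, K)$, and each visible unit represented as a one-hot row of length $K$. The function names are hypothetical.

```python
import numpy as np

def p_v_given_h_softmax(h, W, a):
    """P(v_i^k = 1 | h) for multinomial visible units.

    W: (m, K, n) weights W_ij^k; a: (m, K) biases a_i^k; h: (n,) binary hidden vector.
    Returns an (m, K) array whose rows each sum to 1.
    """
    logits = a + W @ h                           # (m, K): a_i^k + sum_j W_ij^k h_j
    logits -= logits.max(axis=1, keepdims=True)  # for numerical stability; softmax unchanged
    e = np.exp(logits)
    return e / e.sum(axis=1, keepdims=True)

def sample_one_hot(P, rng):
    # Draw one of the K values for each visible unit, returned as one-hot rows
    m, K = P.shape
    idx = np.array([rng.choice(K, p=P[i]) for i in range(m)])
    return np.eye(K)[idx]
```

With $K = 2$ and the parameters of one of the two values fixed at zero, the softmax reduces to the logistic sigmoid of the binary case, which is a useful sanity check on the implementation.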

Restricted Boltzmann machines are a special case of Boltzmann machines and of Markov random fields. [10] [11] Their graphical model corresponds to that of factor analysis. [12]

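Restricted Boltzmann machines are trained to maximize the product of the probabilities assigned to some training set $V$ (a matrix, each row of which is treated as a visible vector $v$),

$$\arg\max_{W} \prod_{v \in V} P(v)$$

or, equivalently, to maximize the expected log probability of a training sample $v$ drawn at random from $V$: [10] [11]

$$\arg\max_{W} \mathbb{E}\left[\log P(v)\right]$$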
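The training objective, the average of $\log P(v)$ over the rows of $V$, can be evaluated exactly for a tiny RBM. The sketch below uses the standard free energy $F(v) = -a^{\mathsf T} v - \sum_j \log\left(1 + e^{b_j + (v^{\mathsf T} W)_j}\right)$, which satisfies $P(v) = e^{-F(v)}/Z$, and computes $\log Z$ by enumerating all $2^m$ visible vectors. It assumes small $m$ and is meant as a monitoring aid for toy examples, not as a practical training method; the function names are hypothetical.

```python
import itertools
import numpy as np

def free_energy(V, W, a, b):
    # F(v) = -a^T v - sum_j log(1 + exp(b_j + (v^T W)_j)), one value per row of V
    return -V @ a - np.logaddexp(0.0, b + V @ W).sum(axis=1)

def log_partition(W, a, b):
    # log Z = log sum_v exp(-F(v)); exact enumeration, feasible only for small m
    m = W.shape[0]
    V_all = np.array(list(itertools.product([0, 1], repeat=m)), dtype=float)
    return np.logaddexp.reduce(-free_energy(V_all, W, a, b))

def mean_log_likelihood(V, W, a, b):
    # Average of log P(v) = -F(v) - log Z over the rows of the training matrix V
    return np.mean(-free_energy(V, W, a, b) - log_partition(W, a, b))
```

`np.logaddexp` keeps the computation stable when the exponents are large. For realistic sizes $\log Z$ cannot be enumerated, so the exact gradient of this objective is intractable, which motivates approximate methods such as contrastive divergence.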

The algorithm most often used to train RBMs, that is, to optimize the weight matrix $W$, is the contrastive divergence (CD) algorithm proposed by Hinton, originally developed for training product-of-experts (PoE) models. [13] [14]

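The basic, single-step contrastive divergence (CD-1) procedure for a single sample can be summarized as follows:

1. Take a training sample $v$, compute the probabilities of the hidden units, and sample a hidden activation vector $h$ from this distribution.
2. Compute the outer product of $v$ and $h$ and call this the positive gradient.
3. From $h$, sample a reconstruction $v'$ of the visible units, then resample the hidden activations $h'$ from it (one Gibbs sampling step).
4. Compute the outer product of $v'$ and $h'$ and call this the negative gradient.
5. Update the weight matrix $W$ by the positive gradient minus the negative gradient, times a learning rate $\epsilon$: $\Delta W = \epsilon\left(v h^{\mathsf T} - v' h'^{\mathsf T}\right)$.

The update rules for the biases $a$ and $b$ are defined analogously: $\Delta a = \epsilon\,(v - v')$ and $\Delta b = \epsilon\,(h - h')$.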
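A minimal NumPy sketch of one CD-1 step for a single binary training vector, followed by a simple training loop. It assumes Bernoulli units, the shapes used earlier (`W` is $m \times n$), and the update rules $\Delta W = \epsilon(v h^{\mathsf T} - v' h'^{\mathsf T})$, $\Delta a = \epsilon(v - v')$, $\Delta b = \epsilon(h - h')$ with sampled binary states. The names `cd1_update` and `train`, the initialization scale and the default hyperparameters are illustrative choices, not part of the original description.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def cd1_update(v, W, a, b, lr, rng):
    """One CD-1 step for a single binary training vector v; returns updated (W, a, b)."""
    # Hidden probabilities given the data, then a binary sample h
    ph = sigmoid(b + v @ W)
    h = (rng.random(ph.shape) < ph).astype(float)
    # Positive gradient: outer product of v and h
    positive = np.outer(v, h)
    # One Gibbs step: reconstruct v' from h, then resample h' from v'
    pv1 = sigmoid(a + W @ h)
    v1 = (rng.random(pv1.shape) < pv1).astype(float)
    ph1 = sigmoid(b + v1 @ W)
    h1 = (rng.random(ph1.shape) < ph1).astype(float)
    # Negative gradient: outer product of v' and h'
    negative = np.outer(v1, h1)
    # Weight update, plus the analogous bias updates
    W = W + lr * (positive - negative)
    a = a + lr * (v - v1)
    b = b + lr * (h - h1)
    return W, a, b

def train(data, n_hidden, lr=0.1, epochs=200, seed=0):
    # Hypothetical helper: small random weights, zero biases, shuffled single-sample updates
    rng = np.random.default_rng(seed)
    m = data.shape[1]
    W = 0.01 * rng.standard_normal((m, n_hidden))
    a, b = np.zeros(m), np.zeros(n_hidden)
    for _ in range(epochs):
        for v in data[rng.permutation(len(data))]:
            W, a, b = cd1_update(v, W, a, b, lr, rng)
    return W, a, b
```

For example, `train(np.array([[1, 1, 0, 0], [0, 0, 1, 1]], dtype=float), n_hidden=2)` fits a tiny RBM to two binary patterns. A common variant replaces some sampled states (for instance `h1`, or `h` in the positive term) with the corresponding probabilities `ph1` and `ph` to reduce sampling noise in the updates.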

A practical guide to training RBMs, written by Hinton, is available on his homepage. [9]
