Lecture

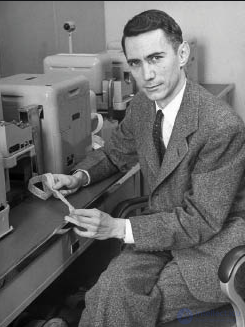

In the late 1930s, Shannon was the first to connect Boolean algebra with switching circuits, the building blocks of modern computers. Thanks to this discovery, Boolean algebra became a way of organizing a computer's internal operations and its logical structure. The computer industry thus owes much to Shannon, even though his interests sometimes lay far from computers.

Claude Elwood Shannon was born on April 30, 1916, in Petoskey, Michigan, and grew up in the small town of Gaylord, Michigan. His father was a businessman and for some time a probate judge. His mother taught foreign languages and became principal of Gaylord High School. Young Claude loved to build automatic devices. He assembled model airplanes and radio circuits, built a radio-controlled boat, and set up a telegraph line between his house and a friend's. He also repaired radios for a local department store. Thomas Edison was both his childhood hero and a distant cousin, although the two never met. Later, Shannon added Isaac Newton, Charles Darwin, Albert Einstein, and John von Neumann to his list of heroes. In 1932, Shannon entered the University of Michigan.

At the university, Shannon specialized in electrical engineering, but mathematics also fascinated him, and he took as many mathematics courses as he could. One of them, a course in symbolic logic, played a large role in his career. He graduated with bachelor's degrees in both electrical engineering and mathematics. “That's the story of my life,” Shannon said, “the interplay between mathematics and electrical engineering.”

In 1936, Claude Shannon became a graduate student at the Massachusetts Institute of Technology (MIT). His supervisor, Vannevar Bush, creator of the differential analyzer (an analog computer), suggested that he take the logical organization of the analyzer as the topic of his thesis.

While working on his thesis, Shannon concluded that Boolean algebra could be used successfully to analyze and synthesize the switches and relays in electrical circuits. Shannon wrote: “It is possible to perform complex mathematical operations by means of relay circuits. Numbers can be represented by the positions of relays and stepping switches. By interconnecting sets of relays in a certain way, various mathematical operations can be carried out.” Thus, Shannon explained, one can build a relay circuit that performs the logical operations AND, OR, and NOT. Comparisons can also be implemented, and with such circuits it is easy to realize the “if ... then ...” construction.
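To make the correspondence concrete, here is a minimal Python sketch, assuming each relay contact is modeled as a Boolean (True = closed, current can pass). Under that assumption, series wiring behaves like AND, parallel wiring like OR, and a normally closed contact like NOT; the comparison and the “if ... then ...” construction are then built from those three. The function names are hypothetical, chosen for illustration. Note that Shannon's 1937 thesis itself used the dual “hindrance” convention, in which a closed contact is 0 and series wiring corresponds to addition; the convention below is the one more common in textbooks today.

```python
# Model: each relay contact is a bool, True = closed (current flows).
# Function names are hypothetical, chosen for this illustration.

def series(*contacts: bool) -> bool:
    """Contacts wired in series conduct only if all are closed: AND."""
    return all(contacts)


def parallel(*contacts: bool) -> bool:
    """Contacts wired in parallel conduct if any one is closed: OR."""
    return any(contacts)


def normally_closed(energized: bool) -> bool:
    """A normally closed contact opens when its relay is energized: NOT."""
    return not energized


def same(a: bool, b: bool) -> bool:
    """Comparison: conducts when a and b agree, (a AND b) OR (NOT a AND NOT b)."""
    return parallel(series(a, b), series(normally_closed(a), normally_closed(b)))


def if_then(a: bool, b: bool) -> bool:
    """'If a then b' is violated only when a holds and b does not: (NOT a) OR b."""
    return parallel(normally_closed(a), b)


if __name__ == "__main__":
    # Print the truth table for every combination of two relays.
    for a in (False, True):
        for b in (False, True):
            print(int(a), int(b), "AND", int(series(a, b)), "OR", int(parallel(a, b)),
                  "SAME", int(same(a, b)), "IF-THEN", int(if_then(a, b)))
```

Every function is built only from `series`, `parallel`, and `normally_closed`, mirroring the idea that any switching function can be wired from those three elementary arrangements.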

In 1937, Shannon completed his master's thesis, “A Symbolic Analysis of Relay and Switching Circuits.” It was an unusual thesis, later regarded as one of the most significant in the science of its time: Shannon's work paved the way for the development of digital computers.

Shannon's work proved to be of great importance: today engineers use Boolean algebra constantly in their daily work, designing hardware and software for computers, telephone networks, and other systems. Shannon downplayed his own merit in this discovery. “It just happened that no one else was familiar with both of these fields (mathematics and electrical engineering; note by A. Ch.) at the same time,” he said. Later he added: “I've always loved that word, Boolean.”

To be fair, it should be noted that the connection between Boolean algebra and switching circuits had been pointed out before Shannon: in America by C. S. Peirce, and in Russia by P. S. Ehrenfest, V. I. Shestakov, and others.

On Bush's advice, Shannon decided to pursue a doctorate in mathematics at MIT. The idea for his dissertation came to him in the summer of 1939, while he was working at Cold Spring Harbor, New York. Bush, who had been appointed president of the Carnegie Institution of Washington, suggested that Shannon spend some time there: Barbara Burks's work on genetics could serve as a subject to which Shannon might apply his algebraic methods. If Shannon could systematize switching circuits, why not genetics? His doctoral dissertation, “An Algebra for Theoretical Genetics,” was completed in the spring of 1940. Shannon thus earned a doctorate in mathematics and a master's degree in electrical engineering.

Thornton C. Fry, head of the mathematics department at Bell Laboratories, was impressed by Shannon's work on symbolic logic and by his mathematical thinking. In the summer of 1940 he invited Shannon to work at Bell. There, while studying switching circuits, Shannon discovered a new method of designing them that reduced the number of relay contacts needed to implement a complex logical function. He described the method in “The Synthesis of Two-Terminal Switching Circuits.” At the end of 1940, Shannon received a National Research Fellowship. In the spring of 1941 he returned to Bell Laboratories. From the beginning of the war, Fry led work on fire-control systems for air defense. Shannon joined this group and worked on devices that tracked enemy aircraft and aimed anti-aircraft guns.

AT&T, the owner of Bell Laboratories, was the world's leading telecommunications company, so naturally Bell Laboratories also worked on communication systems. This time Shannon became interested in the transmission of messages. Little in this field was clear to him, but he believed that mathematics held the answers to most of its questions.

At first, Shannon set himself a simple goal: to improve the transmission of information over a telegraph or telephone channel subject to electrical interference, or noise. He concluded that the best solution lay not in technical improvements to the communication lines but in packaging the information more efficiently.

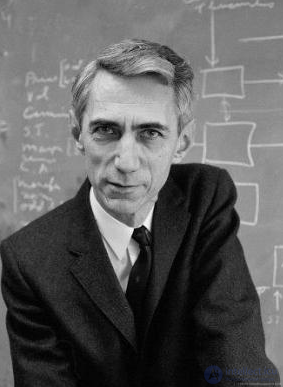

What is information? Setting aside the question of its meaning, Shannon showed that information is a measurable quantity: the amount of information in a message is a function of the probability that this particular message is selected from the set of all possible messages. He called the average information content of a message source its “entropy.” In thermodynamics, this term denotes the degree of randomness (or, if you like, “mixing”) of a system. (Shannon once said that the mathematician John von Neumann had advised him to use the word entropy because, since no one really knows what entropy is, Shannon would always have the advantage in any dispute about his theory.)

Shannon defined the basic unit of information, later called the bit, as the information in a message that selects one of two equally likely alternatives, for example “heads” or “tails,” or “yes” or “no.” A bit can be represented as 1 or 0, or as the presence or absence of current in a circuit.
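The measure described above can be written as I = -log2(p) bits for a single message of probability p, and H = -Σ p_i log2(p_i) for the average over all messages of a source, which is its entropy. A minimal Python sketch, assuming a source is described simply by a list of message probabilities (the function names are illustrative): a fair coin toss carries exactly one bit, while a biased coin carries less, because its outcome is more predictable.

```python
import math
from typing import Sequence


def self_information(p: float) -> float:
    """Information, in bits, carried by a message of probability p: -log2(p)."""
    return -math.log2(p)


def entropy(probs: Sequence[float]) -> float:
    """Average information of a source, H = -sum(p * log2(p)), in bits.
    Outcomes with probability 0 contribute nothing and are skipped."""
    return sum(p * self_information(p) for p in probs if p > 0)


if __name__ == "__main__":
    print(entropy([0.5, 0.5]))       # fair coin: 1.0
    print(entropy([0.9, 0.1]))       # biased coin: about 0.469
    print(self_information(1 / 8))   # one of 8 equally likely messages: 3.0
```

The base-2 logarithm is what makes the unit the bit: choosing among 2^n equally likely messages takes exactly n yes/no answers.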

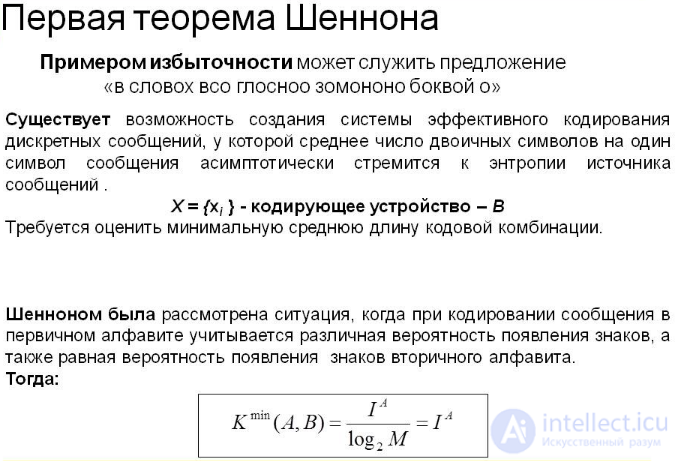

On this mathematical foundation, Shannon went on to show that every communication channel has a maximum rate at which information can be transmitted reliably. He proved that clever coding can bring the transmission rate arbitrarily close to this maximum, but that no coding scheme can exceed it.
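For two standard channel models this maximum can be computed directly. The Python sketch below uses C = B log2(1 + S/N) for a band-limited channel with Gaussian noise (a formula from Shannon's work, now known as the Shannon–Hartley theorem) and C = 1 - H2(p) for a binary channel that flips each bit with probability p. The 3 kHz bandwidth and 30 dB signal-to-noise ratio are illustrative assumptions, not figures from the text.

```python
import math


def shannon_hartley_capacity(bandwidth_hz: float, snr: float) -> float:
    """C = B * log2(1 + S/N) in bits per second for a band-limited channel
    with additive white Gaussian noise. snr is a power ratio, not decibels."""
    return bandwidth_hz * math.log2(1 + snr)


def binary_entropy(p: float) -> float:
    """H2(p) = -p*log2(p) - (1-p)*log2(1-p), with H2(0) = H2(1) = 0."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def bsc_capacity(p: float) -> float:
    """Capacity, in bits per channel use, of a binary symmetric channel
    that flips each transmitted bit with probability p."""
    return 1 - binary_entropy(p)


if __name__ == "__main__":
    # Illustrative: a 3 kHz line at 30 dB SNR, i.e. a power ratio of 1000.
    print(round(shannon_hartley_capacity(3000, 1000)))  # about 29902 bit/s
    print(round(bsc_capacity(0.11), 3))                  # about 0.5 bit per use
```

The binary case shows the limit plainly: if one bit in nine is flipped, no code can carry more than about half a bit of information per transmitted bit, however clever it is.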

This maximum became known as the Shannon limit. How can we approach it? The first step is to exploit redundancy. Just as a lover can write a love note in clipped, abbreviated form and still be understood, efficient encoding can compress information into its most compact form. Then, with special error-correcting codes that add redundancy back in a controlled way, the probability that noise will distort the message can be made as small as desired.
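Error-correcting codes add a few carefully chosen check bits so that the receiver can detect and repair damage. As an illustration (not one of Shannon's own constructions), here is a Python sketch of the Hamming(7,4) code, devised by Shannon's Bell Labs colleague Richard Hamming: four data bits are sent as seven, and any single flipped bit can be located and corrected. The function names are hypothetical.

```python
from typing import List


def hamming74_encode(data: List[int]) -> List[int]:
    """Encode 4 data bits as a 7-bit codeword (positions 1..7).
    Parity bits sit at positions 1, 2 and 4; data bits fill 3, 5, 6, 7."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # even parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # even parity over positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # even parity over positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def hamming74_decode(code: List[int]) -> List[int]:
    """Correct at most one flipped bit, then return the 4 data bits."""
    c = list(code)  # copy so the caller's list is not modified
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]  # recheck positions 1, 3, 5, 7
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]  # recheck positions 2, 3, 6, 7
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]  # recheck positions 4, 5, 6, 7
    error_pos = s1 + 2 * s2 + 4 * s4  # 1-based position of the bad bit; 0 = no error
    if error_pos:
        c[error_pos - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]


if __name__ == "__main__":
    sent = hamming74_encode([1, 0, 1, 1])
    received = sent.copy()
    received[4] ^= 1  # noise flips the bit at position 5
    print(sent, received, hamming74_decode(received))  # decodes back to [1, 0, 1, 1]
```

The three recomputed parity checks spell out, in binary, the position of the damaged bit, which is why a single error can be corrected rather than merely detected. The price is redundancy: seven bits are sent for every four bits of data.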

Shannon's ideas were too far ahead of their time to have an immediate practical effect. Vacuum-tube circuits simply could not perform the complex coding computations needed to approach the Shannon limit. In fact, it was not until the early 1970s, with the advent of high-speed integrated circuits, that engineers began to make full use of information theory.

Claude Shannon set out his ideas on the new science of information theory in “A Mathematical Theory of Communication,” published in 1948.

Information theory has spread beyond communications into other fields, including linguistics, psychology, economics, biology, and even art. As one example, in the early 1970s the journal “IEEE Transactions on Information Theory” published an editorial titled “Information Theory, Photosynthesis, and Religion.” Shannon himself did not consider the application of information theory to biological systems far-fetched, since in his view the same general principles underlie both mechanical and living systems. Asked whether a machine can think, he replied: “Of course. I'm a machine and you're a machine, and we both think, don't we?”

Indeed, Shannon was one of the first engineers to suggest that machines could be programmed to play cards and to solve other complex problems.

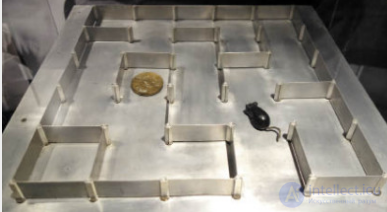

In 1950 he published “Programming a Computer for Playing Chess.” No comparable publication on the subject had appeared before, and the approach to computer chess that Shannon described became the basis for later work and one of the first achievements in artificial intelligence. In 1950 he also built Theseus, a mechanical mouse that, guided by a magnet and a complex electrical circuit hidden beneath the floor of a maze, could find its way out of the maze.

He also built a “mind-reading” machine that played the coin-matching game, in which one player tries to guess whether the other has chosen heads or tails. Shannon's Bell Laboratories colleague David W. Hagelbarger built the prototype; the machine remembered and analyzed the sequence of its opponent's past choices, looked for a pattern in them, and used that pattern to predict the next choice.
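The idea of predicting an opponent from patterns in their past choices can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the circuit Hagelbarger or Shannon actually built: the hypothetical `PatternPredictor` remembers the opponent's last two choices and, for each such two-move context, counts whether heads (“H”) or tails (“T”) came next. It predicts the more frequent follow-up and guesses at random when it has no evidence.

```python
import random
from collections import defaultdict, deque
from typing import Deque, Dict, List, Tuple


class PatternPredictor:
    """Hypothetical sketch: predicts 'H' or 'T' from what the opponent chose
    after the same recent history in earlier rounds."""

    def __init__(self, memory: int = 2, seed: int = 0) -> None:
        self.history: Deque[str] = deque(maxlen=memory)  # last `memory` choices
        # counts[context] records how often 'H' and 'T' followed that context
        self.counts: Dict[Tuple[str, ...], Dict[str, int]] = defaultdict(
            lambda: {"H": 0, "T": 0}
        )
        self.rng = random.Random(seed)

    def predict(self) -> str:
        seen = self.counts[tuple(self.history)]
        if seen["H"] == seen["T"]:
            return self.rng.choice("HT")  # no pattern yet: guess at random
        return "H" if seen["H"] > seen["T"] else "T"

    def observe(self, choice: str) -> None:
        self.counts[tuple(self.history)][choice] += 1
        self.history.append(choice)


def play(moves: str, memory: int = 2) -> List[bool]:
    """Return, round by round, whether the machine guessed the move correctly."""
    machine = PatternPredictor(memory)
    results: List[bool] = []
    for move in moves:
        results.append(machine.predict() == move)
        machine.observe(move)
    return results


if __name__ == "__main__":
    hits = play("HT" * 50)  # an opponent who always alternates
    print(sum(hits), "correct guesses out of", len(hits))
```

Against a player who simply alternates, the predictor stops making mistakes after the first few rounds; a player who chooses truly at random would, on average, hold it to about 50 percent.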

Claude Shannon was one of the organizers of the first conference on artificial intelligence, held at Dartmouth in 1956. In 1965 he visited the Soviet Union by invitation and gave a series of lectures on artificial intelligence there.

In 1958, Shannon left Bell Laboratories to become a professor at the Massachusetts Institute of Technology. After he officially retired in 1978, juggling became his greatest hobby. He built several juggling machines and developed what could be called a unified field theory of juggling.

After the late 1950s, Shannon published very few papers on information theory. Some of his former colleagues said that he had “burned out” and grown tired of the theory he had created, but Shannon denied it. “Most great mathematicians did their best work when they were young,” he said.

In 1985, Shannon and his wife unexpectedly decided to attend the International Symposium on Information Theory in Brighton, England. He had not attended conferences for many years, and at first no one recognized him. Then the participants began to whisper: the modest gray-haired gentleman drifting in and out of the lecture halls was Claude Shannon. At the banquet, Shannon said a few words, juggled three balls for a moment, and signed autographs for a long line of engineers. As one participant recalled, “It was as if Newton had shown up at a physics conference.”

Claude Shannon died on February 24, 2001, at the age of 84, after a long illness. As journalists wrote at the time, the man who came up with the bit had died.
