Lecture

The early years of artificial intelligence were full of successes, albeit modest ones. Given how primitive computers and programming tools were at the time, and that only a few years earlier computers had been regarded as devices capable of arithmetic and nothing more, it is remarkable that researchers managed to get a computer to do anything that looked even remotely intelligent.

The intellectual establishment, for the most part, preferred to believe that "a machine can never do X" (Turing compiled a long list of such X's). Naturally, AI researchers responded by demonstrating one X after another.

John McCarthy referred to this period as the “Look, Ma, no hands!” era. Newell and Simon followed their early success with the General Problem Solver (GPS). Unlike the Logic Theorist, this program was designed from the start to imitate the way humans solve problems. Within the limited class of puzzles it could handle, the order in which the program considered subgoals and possible actions turned out to be similar to the order in which people approach the same problems. GPS was therefore probably the first program to embody the “thinking humanly” approach.

The success of GPS and subsequent programs as models of cognition led Newell and Simon to formulate the famous physical symbol system hypothesis, which states that "a physical symbol system has the necessary and sufficient means for general intelligent action". What they meant is that any system (human or machine) exhibiting intelligence must operate by manipulating data structures composed of symbols. As will be discussed later, this hypothesis has been challenged from many directions.

At IBM, Nathaniel Rochester and his colleagues produced some of the first AI programs. Herbert Gelernter built the Geometry Theorem Prover, which was able to prove theorems that many students of mathematics would find quite difficult.

Starting in 1952, Arthur Samuel wrote a series of programs for checkers (draughts) that eventually learned to play at a strong amateur level. Along the way, he disproved the idea that computers can do only what they are told to: one of his programs quickly learned to play better than its creator. The program was demonstrated on television in February 1956 and made a very strong impression on viewers.

Like Turing, Samuel had trouble finding computer time. Working at night, he used machines that were still on the testing floor at IBM's manufacturing plant.

John McCarthy moved from Dartmouth College to the Massachusetts Institute of Technology (MIT), and there, in the single historic year of 1958, he made three crucial contributions to the development of artificial intelligence.

In MIT AI Lab Memo No. 1, McCarthy defined the high-level language Lisp, which was to become the dominant programming language for artificial intelligence. Lisp remains one of the major high-level languages in use today and is the second-oldest of them, having been created just one year after Fortran.

With Lisp, McCarthy had the tool he needed, but access to scarce and expensive computing resources remained a serious problem. In response, he and others at MIT invented time sharing. Also in 1958, McCarthy published a paper entitled Programs with Common Sense, in which he described the Advice Taker, a hypothetical program that can be seen as the first complete AI system. Like the Logic Theorist and the Geometry Theorem Prover, McCarthy's program was designed to use knowledge to search for solutions to problems. Unlike the others, however, it was meant to embody general knowledge of the world. For example, McCarthy showed how some simple axioms would enable the program to generate a plan for driving to the airport to catch a plane.

The program was also designed to accept new axioms in the normal course of operation, which allowed it to achieve competence in new areas without being reprogrammed. The Advice Taker thus embodied the central principles of knowledge representation and reasoning: it is useful to have a formal, explicit representation of the world and of the way an agent's actions affect it, and to be able to manipulate such representations with deductive processes. It is remarkable how much of this 1958 paper remains relevant today.
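The idea of gaining competence by accepting new axioms, rather than by reprogramming, can be illustrated with a small sketch. This is not McCarthy's formalism (the Advice Taker was described in terms of sentences of formal logic); it is a simplified propositional forward-chaining loop, and all fact and rule names (`at_home`, `can_drive`, and so on) are hypothetical names chosen for the airport example.

```python
def forward_chain(facts, rules):
    """Return every fact derivable from `facts` using `rules`.

    Each rule is a pair (premises, conclusion): when all premises
    are known, the conclusion becomes known as well.
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and all(p in known for p in premises):
                known.add(conclusion)
                changed = True
    return known


# Hypothetical starting knowledge for the airport example.
facts = {"at_home", "car_at_home"}
rules = [
    (("at_home", "car_at_home"), "can_drive"),
]

# With the initial axioms, the goal cannot be derived.
before = "at_airport" in forward_chain(facts, rules)

# "Advice": one new axiom is added at run time; the code is unchanged.
rules.append((("can_drive",), "at_airport"))
after = "at_airport" in forward_chain(facts, rules)

print(before, after)
```

The inference procedure stays fixed; only the set of axioms grows, which is the point the paragraph above makes about acquiring competence in new areas.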

The year 1958 was also the year in which Marvin Minsky moved to MIT. His initial collaboration with McCarthy did not last long, however.

McCarthy stressed representation and reasoning in formal logic, whereas Minsky was more interested in getting programs to work, and he eventually developed a negative attitude toward logic.

In 1963, McCarthy started an artificial intelligence lab at Stanford University. His plan to use logic to build the ultimate Advice Taker was advanced by J. A. Robinson's discovery of the resolution method (a complete theorem-proving algorithm for first-order logic). Work at Stanford emphasized general-purpose methods for logical reasoning. Applications of logic included Cordell Green's question-answering and planning systems, as well as the Shakey robotics project at the new Stanford Research Institute (SRI).

The latter project was the first to demonstrate the complete integration of logical reasoning and physical activity.

Minsky supervised a series of students who chose limited problems that appeared to require intelligence to solve. These limited problem domains became known as microworlds.

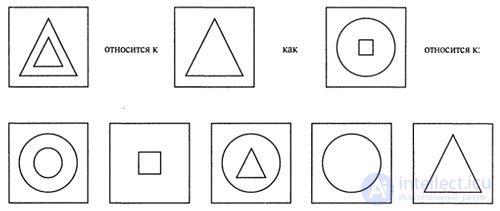

James Slagle's SAINT program was able to solve closed-form calculus integration problems typical of first-year college courses. Tom Evans's ANALOGY program solved geometric analogy problems of the kind that appear in IQ tests, such as the one shown in the figure above.

Daniel Bobrow's STUDENT program solved algebra problems stated in the form of a story. The most famous microworld was the blocks world, which consists of a set of solid blocks placed on a tabletop (or, more often, on a simulation of a tabletop).

A typical task in this world is to rearrange the blocks in a certain way using a robot hand that can pick up one block at a time. The blocks world was the basis for David Huffman's vision project, David Waltz's work on vision and constraint propagation, Patrick Winston's learning theory, Terry Winograd's natural-language-understanding program, and Scott Fahlman's planner.
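To make the typical task concrete, here is a minimal sketch of a blocks-world state and the single "move one block at a time" action. It does not reproduce any of the historical systems named above; the representation (a dictionary mapping each block to whatever it rests on) and the names `TABLE`, `is_clear`, and `move` are assumptions made for illustration.

```python
TABLE = "table"


def is_clear(state, block):
    """A block is clear when no other block rests on it."""
    return all(support != block for support in state.values())


def move(state, block, destination):
    """Return a new state with `block` placed on `destination`.

    The hand holds one block at a time, so only a clear block can be
    moved, and it can only be put on the table or on a clear block.
    """
    if block not in state:
        raise ValueError(f"unknown block: {block}")
    if not is_clear(state, block):
        raise ValueError(f"{block} has something on top of it")
    if destination == block:
        raise ValueError("a block cannot be placed on itself")
    if destination != TABLE:
        if destination not in state or not is_clear(state, destination):
            raise ValueError(f"{destination} is not a clear block")
    new_state = dict(state)
    new_state[block] = destination
    return new_state


# Start: B sits on A; A and C sit on the table.
start = {"A": TABLE, "B": "A", "C": TABLE}

# Rearrange into a single tower: C on A on B.
step1 = move(start, "B", TABLE)
step2 = move(step1, "A", "B")
tower = move(step2, "C", "A")
print(tower)
```

A planner for this world would search over sequences of such `move` calls; here the three-step plan is written out by hand.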

Work building on the early neural networks of McCulloch and Pitts also advanced rapidly. Winograd and Cowan showed how a large number of elements could collectively represent an individual concept, with a corresponding increase in robustness and parallelism.

Hebb's learning methods were refined by Bernie Widrow, who called his networks adalines, and by Frank Rosenblatt, the creator of perceptrons. Rosenblatt proved the perceptron convergence theorem, which states that his learning algorithm can adjust the connection strengths (weights) of a perceptron to match any input data, provided that such a match exists.
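The learning rule behind the theorem can be sketched in a few lines. This is a minimal illustration of the standard error-driven perceptron update for a single threshold unit, not Rosenblatt's original formulation; the function names, the 0/1 label encoding, and the epoch limit are assumptions made for the example. The logical AND function is used as training data because a set of weights that matches it does exist.

```python
def predict(weights, bias, inputs):
    """Threshold unit: output 1 when the weighted sum is positive."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, max_epochs=100):
    """Adjust weights until every sample is classified correctly.

    `samples` is a list of (inputs, label) pairs with labels 0 or 1.
    Training stops early once a full pass makes no mistakes.
    """
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for inputs, label in samples:
            error = label - predict(weights, bias, inputs)
            if error != 0:
                # Move the weights toward (or away from) this input.
                weights = [w + error * x for w, x in zip(weights, inputs)]
                bias += error
                mistakes += 1
        if mistakes == 0:
            break
    return weights, bias


# Logical AND: linearly separable, so a matching set of weights exists.
AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(AND_DATA)
print([predict(weights, bias, x) for x, _ in AND_DATA])
```

For data that no single threshold unit can match (XOR is the classic case), the loop simply runs out of epochs without reaching zero mistakes, which is why the theorem carries the proviso that a match must exist.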
