Lecture

"Information genesis is the process of generating information in nature" (A.N. Kochergin (A. Kochergin, "Information and its manifestations", 2008; A. Kochergin, Z. Zayer, "Information genesis and its optimization questions", 1977.)). This is not about any, but about productive information, leading to useful qualitative changes and different from destructive disinformation and unproductive information noise. Informational genesis in the above sense is an alternative to information dissipation. The generation of productive information begins morphologically (as an increase in the diversity of the open system) and occurs according to synergetic laws due to the diversity of the environment. So the system receives data. Increased morphological diversity, i.e. the elemental composition of the system, through the selection of valuable data goes into the syntactic binding of elements with the help of an internal system self-learning program (if there is one). Thus, valuable data for the system is transformed into information — primary knowledge — the system “recognizes” the acquired diversity, and a thesaurus appears in the system’s memory if it was not created at an early stage of self-organization in a similar situation. In the presence of consciousness, the primary knowledge stored in the system thesaurus acquires meaning, reaching a higher semantic level for knowledge.

Our use of the linguistic terms morphology, syntax and semantics is not accidental. First, the "technology" of information genesis in nature described above fits the grammatical rules for constructing phrases and texts from words. Even the physicists of Galileo's time understood this, speaking of the "texts of nature" (Galileo Galilei). Second, information genesis is characteristic both of natural phenomena and processes and of the secondary, man-made artifacts of human activity, be it science (in particular, linguistics), art or technology. Humanities scholars and natural scientists, "lyricists" and "physicists", should therefore understand that from the standpoint of information as such and of information genesis, their opposition, if it exists at all, looks unnatural.

Researchers of intelligent systems agree that the thesauri and knowledge bases of such systems are built up during development on a combinatorial-hierarchical principle, through the gradual integration of information blocks: first the simplest, then increasingly complex ones. These blocks use system codes, inherited and/or incorporated from the environment, and in effect constitute cell assemblies of neural networks (natural or artificial). The enlargement of blocks, as well as their internal morphological and functional modification, which comes down to establishing connections between the cells of an assembly (biological neurons or artificial formal neurons), is stimulated by the environment (in acts of cognition and organization) or by the system itself (in acts of self-knowledge and self-organization). The same patterns are characteristic of social systems (populations, societies) and of information and communication systems (networks) with a distributed knowledge base, a collective thesaurus. (From here on we merge the related terms "knowledge base" and "thesaurus" into one: thesaurus.)

Example 1. Let 0 and 1 be the syntactic codes of the simplest elements, the building blocks of information blocks. A block consists of two elements. During information genesis, links are first established within the blocks between their elements, according to the principles of identity (00, 11) and complementarity (01, 10). When all combinations of links that form the knowledge needed to counter the disturbances of the environment are exhausted, the law of requisite variety comes into effect: the thesaurus can no longer help the system counter the environment, so information blocks have to be integrated, and this is done. Triads appear (000, 001, 010, 011, 100, 101, 110, 111), then tetrads (0000, 0001, 0010, 0011, 0100, 0101, 0110, 0111, 1000, 1001, 1010, 1011, 1100, 1101, 1110, 1111), and so on. At the same time, the potential range of intra-block links expands. Any integration, as we see, increases diversity in the informational sense, i.e. generates new information: a qualitative leap in knowledge occurs. Note that the informational tetrads (quadruplets, in biological terms) of zeros and ones given here are built on triads (triplets), which in turn are built on dyads (doublets), and these, finally, on the monads (monoplets) 0 and 1.
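The enumeration in Example 1 can be reproduced with a short sketch. The function names `blocks` and `split_dyads` are introduced here purely for illustration; the code only lists the blocks of each size and sorts the dyads into identity and complementarity links, and says nothing about how a real thesaurus chooses among them.

```python
from itertools import product

ALPHABET = "01"  # the two monads of Example 1


def blocks(length, alphabet=ALPHABET):
    """Return every block of the given length over the alphabet, in lexicographic order."""
    return ["".join(symbols) for symbols in product(alphabet, repeat=length)]


def split_dyads(dyads):
    """Split two-element blocks into identity links (equal elements) and complementarity links."""
    identity = [d for d in dyads if d[0] == d[1]]
    complementarity = [d for d in dyads if d[0] != d[1]]
    return identity, complementarity


if __name__ == "__main__":
    for length, name in [(1, "monads"), (2, "dyads"), (3, "triads"), (4, "tetrads")]:
        found = blocks(length)
        print(f"{name}: {len(found)} -> {' '.join(found)}")
    print(split_dyads(blocks(2)))
```

Each enlargement of the block by one element doubles the number of possible blocks (2, 4, 8, 16), which is the growth of diversity the example describes.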

Real thesaurus codes (genetic, protein, metabolic, machine) have a significant impact on the rate of thesaurus self-organization. The more complex the code (in terms of the alphabet), the greater the diversity of each information block of the thesaurus compared to the same block with a simple code.

Example 2. A monad ("letter") of a computer code can take 2 values, a monad of the genetic code 4, a monad of the protein code 20, and a monad of the Russian alphabet 33; a doublet gives 4, 16, 400 and 1089 combinations respectively (the number of combinations in a doublet is $N = n^2$, where $n$ is the variety of the monad code). Consequently, at each successive qualitative leap (enlargement of blocks) of a thesaurus encoded with a fixed complex code, the intra-block links take longer to combine than with simple coding, simply because there are potentially more of them. These mechanisms are explained by the combinatorial measure of information diversity. As the thesaurus develops, the growing duration of the intra-block combinatorial processes of functional and morphological binding of its information elements means that qualitative leaps in knowledge become less frequent at the late stages of a self-organizing system than at the early stages of thesaurus self-organization, and the adaptation of the thesaurus to the growth of knowledge takes longer. Thus, the rate at which a person absorbs new knowledge increases until about 4-5 years of age, then stabilizes and, from 6-7 years on, decreases, especially quickly after 20-25 years of age and catastrophically quickly toward old age (V. V. Druzhinin, D. S. Kontorov, "Problems of Systemology", 1976).
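The counts in Example 2 follow from the rule that a block of k monads over an alphabet of n symbols has n**k variants. The sketch below recomputes them; the dictionary and function names are hypothetical, and the use of the base-2 logarithm (Hartley's measure) for the "combinatorial measure" is an assumption made here for illustration, not something the lecture specifies.

```python
import math

# Variety n of one monad ("letter") for the four codes named in Example 2.
MONAD_VARIETY = {
    "computer code": 2,
    "genetic code": 4,
    "protein code": 20,
    "Russian alphabet": 33,
}


def block_combinations(n, k):
    """Number of distinct blocks of k monads over an alphabet of n symbols: N = n**k."""
    return n ** k


def block_bits(n, k):
    """Logarithmic (Hartley) measure of the same diversity in bits (an assumed choice of measure)."""
    return math.log2(block_combinations(n, k))


if __name__ == "__main__":
    for code, n in MONAD_VARIETY.items():
        counts = [block_combinations(n, k) for k in (1, 2, 3)]
        print(f"{code}: monad/doublet/triplet = {counts}, doublet = {block_bits(n, 2):.2f} bits")
```

The richer the alphabet, the faster the number of potential intra-block combinations grows with block size, which is why the text expects longer combinatorial processing for complex codes.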

Of course, the influence of a person's age on the speed of knowledge acquisition is harder to explain than the example of combining information blocks and the links within them suggests. There are apparently other, hidden informational factors, in particular a limit on thesaurus complexity that depends on the useful information resource of the environment and on the material and energy constraints of the thesaurus carrier (for example, the brain). Another possible factor is a change (desensitization) in the sensitivity of the sensory mechanisms (algorithms) of information metabolism as the thesaurus develops.

In addition, the intellect does not use all potential combinations of intra-block and inter-block informational links indiscriminately (that is, without selection) to enrich the thesaurus. The mechanism of intellectual selection picks the most valuable of these links in accordance with a certain goal. The first steps in forming and developing a thesaurus begin with the initial accumulation of a certain minimum diversity of links (states) on the principle "better anything at all than nothing"; after that, the purposeful selection of valuable links and rejection of useless (harmful) ones follows the principle "better nothing than just anything".

Accumulation, binding, combination, consolidation, selection and, finally, generation of information are operations (actions) that form a goal-directed sequence, an algorithm implemented through a program. It is logical to call it the information generation program. Where does it come from, and where is it stored? As long as a student gets by on rote memory, he does not need such a program. But once, after several failures, he senses that simply accumulating data does not help and is even dangerous, a need arises for another method, based not on memorizing data but on understanding the data and the relationships between them. Such a break with the old method is painful but necessary. If it succeeds, the system survives and develops; if it fails, the system dies or degrades until it decomposes completely. This is how an information generation program arises as a measure of the organization of a knowledge system. Without such a program, even bifurcational (probabilistic) self-organization of the system, and indeed the system itself, is impossible.

The logic built into the information generation program should be probabilistic and fuzzy, leaving the system free to choose its path of self-organization. From here on, self-organization of the system is understood as the growth of its information diversity due to the diversity of the environment and the work of the internal information generation program. In this sense, self-organization is equivalent to development. We do not, however, exclude self-organization in the opposite direction, toward degradation. (The "mass consciousness" of a crowd self-organizes not for the benefit of the crowd's development through growth of its information diversity, but to the detriment of the surrounding society through reduction of that diversity; see topic 4, section 4.5. Self-organization for the sake of destruction rather than creation is not such a rarity.) In what follows we consider self-organization as development.

Thus, an information generation program may arise in parallel with the growth of diversity. Its natural storage place is the system thesaurus. Obviously, the program was not required before the need for it arose. But once formed as a storage device (memory), the thesaurus, like computer memory, can store programs alongside data and allows them to be edited (added, modified, erased), by analogy with the stored-program principle of the von Neumann computer. The diversity of the system's elemental composition grows through material and energy metabolism under the control of the genetic program, while the diversity of relations between the elements grows through information metabolism under the control of the information generation program, which in turn arises and improves in the course of that same metabolism. The two programs are interconnected.

We assume that information is generated discretely, at the moments when the system comes to know its own increased diversity. By the law of finite information, the generated information is finite. In accordance with the stored-program principle, the information generation program is stored in thesaurus codes. Thanks to this program, the growth of diversity becomes more valuable for the system, since the set of system states now includes not only the properties of individual elements but also the properties of the interrelations and the group properties of elements formed by the program. Chance has a major influence on the early morphological development of the system; as the thesaurus fills and improves, the information generation program and information exchange with the environment have an ever greater influence on self-organization. A system that at first stored anything at all for future use becomes "discriminating" as it develops: it sweeps away random data and fills its memory not with them but with knowledge, in which all elements of the accumulated diversity are linked into a single system that serves the goals of development. This leveling of developmental randomness as the thesaurus grows more complex leads, in biosystems (and in systems of other kinds), to fewer natural mutations. The probability of perceptible genetic mutations in highly developed systems is extremely low. This can, admittedly, be dangerous for such systems, since they lose the ability to adapt quickly to rapidly changing habitats. An overdose of information generated by an overcomplicated thesaurus, with the resulting resistance to mutations, resembles in its consequences the fate of conservative systems that have lost the ability to develop.

Note that any system with a stable thesaurus may have a different degree of organization under different conditions. For instance, a person's fairly well-organized, integral system of everyday knowledge may contribute too little to the system of his professional and, all the more so, scientific knowledge. There are two reasons for this: a lack of data at each of the higher levels and a lack of links between the data. As a result, the integral knowledge system narrows and (or) crumbles into smaller integral systems, up to the complete disappearance of systems as such.

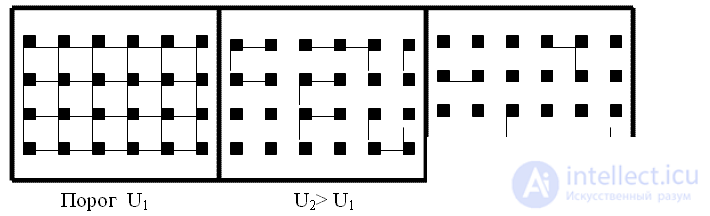

Example 3. Fig. 5.1 shows an example of the destruction of an integral system through the rupture of links between elements as a certain threshold U rises (a detection threshold, an understanding threshold, a patience threshold, etc.). Links strong enough to exceed the threshold remain stable (the elements continue to influence one another); weak links are destroyed as the threshold rises, and the elements cease to react to each other's states. The concept of a threshold thus turns out to be significant both epistemologically and ontologically.
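The effect described in Example 3 can be sketched as a graph whose links carry strengths: only links stronger than the threshold U survive, and the system falls apart into the connected groups that remain. The elements, link strengths and function name below are invented for illustration and are not taken from Fig. 5.1.

```python
def components(elements, links, threshold):
    """Group elements joined by links whose strength exceeds the threshold."""
    neighbours = {element: set() for element in elements}
    for (a, b), strength in links.items():
        if strength > threshold:  # weaker links are treated as broken
            neighbours[a].add(b)
            neighbours[b].add(a)

    seen, groups = set(), []
    for start in elements:
        if start in seen:
            continue
        group, stack = set(), [start]
        while stack:  # depth-first walk over surviving links
            node = stack.pop()
            if node in group:
                continue
            group.add(node)
            stack.extend(neighbours[node] - group)
        seen |= group
        groups.append(sorted(group))
    return groups


if __name__ == "__main__":
    # Hypothetical system of four elements with three links of decreasing strength.
    elements = ["A", "B", "C", "D"]
    links = {("A", "B"): 0.9, ("B", "C"): 0.5, ("C", "D"): 0.2}
    for u in (0.1, 0.3, 0.6, 0.95):
        print(f"U = {u}: {components(elements, links, u)}")
```

As U rises, the single integral system splits first into two parts, then three, and finally into four isolated elements.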

On the other hand, a system, once organized in a certain environment, can maintain its organization in other environments without being damaged (the "concrete principle"). The integrity of such a system can be broken only by extreme influences. So, if a person understands something and the knowledge gained is fixed, the integrity of the knowledge system can be broken only with pathological changes in the brain.

In short, the result of the self-organization of an open system depends on the information generation program, which is stored in the system thesaurus and manages self-organization in compliance with the law of conservation of information. The epistemological version of this law states that the total mutual information of the developing system and its environment is always limited by the information content the thesaurus has reached.

The way the information generation program works seems to us similar to the evolutionary mechanism of developing systems and to the program control principle of the von Neumann computer. The essence of the principle is that the program plans the behavior of the system (computer) not for the whole life cycle (session) but only for the next step (one instruction). For a developing thesaurus, this principle reduces to a slight expansion of the knowledge base at each step of self-organization relative to the previous step. Everything in the world develops in this way: from the simple to the complex, step by step, gradually. Nothing happens all at once. The presence of a transitional (evolutionary) process is a law. Its outcome cannot be predicted accurately, since it is a probabilistic process in which the correlations between random events are not rigidly determined. For biosystems and thesauri, this rule can be formulated as follows: "Each individual system resulting from mutations and selection is unpredictable with regard to its structure; nevertheless, the inevitable result is always the process of evolution. This is the law" (M. Eigen, "Self-organization of matter and evolution of biological macromolecules", 1980).

A small dose of complexity, like a small dose of an unfamiliar medicine, seems to be the only reasonable way for a system to behave when the consequences are uncertain a priori. An overdose may be fatal if the "medicine" is harmful to the system, in particular if it does not optimize the system's objective function of development. A small dose cannot kill the system. But if the system does not recognize the harm in time, it may go on increasing its complexity in the wrong direction, and eventually, at the next bifurcation, the cumulative negative effect of the harmful complexity takes hold: the system degrades and dies. Where the development (information generation) program leads the system, to a correct solution or to failure, depends only on the program and the data it uses. The program must therefore be equipped with protection against runtime errors and poor-quality data, which in the world of the "von Neumann computer" is known as defensive programming.

From "Information genesis and self-organization" it follows that information genesis and self-organization are inextricably linked with each other.

Before the emergence of synergetics, self-organization was understood as the spontaneous emergence of organization in an autonomous closed system. But almost all systems in the universe are open: they are influenced by the environment and by one another. Synergetics, as an interdisciplinary field, therefore deals with the self-organization of open systems (H. Haken, I. Prigogine, M. Eigen and others; see I. Prigogine, I. Stengers, "Order out of Chaos", 1986; D. S. Chernavsky, "Synergetics and information (dynamic information theory)", 2004). Ideas about self-organization first appeared in antiquity (Democritus, Aristotle). But self-organization was linked with information (information genesis) only in the twentieth century, within synergetics.

Self-organization can occur under the action of a) the genetic program; b) an internal information generation program; c) the environment; d) the information generation program and the environment simultaneously. The genetic program can give the initial impulse to self-organization by endowing the "newborn" system with the minimum information diversity it needs to function and survive in the environment. The information generation program, which arises under the influence of the environment, interacts with the genetic program and is stored in the system thesaurus, controls the self-organization of the system at all stages of its life cycle by means of a self-organization algorithm. The environment helps to refine information and to fill the system thesaurus with knowledge.

Information genesis during self-organization becomes unstable, just as the state between the chaos of disorganization (the entropic tendency) and the order of organization (the informational tendency) is unstable. A state of fluctuational instability and non-equilibrium is characteristic of every stage of self-organization that involves a change in the information diversity the system has accumulated up to that stage. Diversity increases → the knowledge content of the thesaurus increases → the informativeness of the system grows, and vice versa. The struggle between the entropic and informational tendencies during self-organization resembles the Hegelian dialectical triad of thesis, antithesis and synthesis. The amount of a priori information needed for self-organization is minimal.

Self-organization is a probabilistic process, and alongside hopeless systems that use harmful data and commands from the environment there will be others that, thanks to defensive programming, rejected the harmful data and refused to execute the faulty commands in time. The growth in complexity of such systems is directed at optimizing their objective function. At the next quantitative-qualitative bifurcation such systems will progress. But even these systems build up their complexity cautiously, in small doses, step by step, without "overeating". The merit of reaching a given complexity and efficiency step by step is that the system can do it by itself, without outside help. A novice athlete can become an Olympic champion at once only with God's help. But by training persistently he will reach the Olympic podium step by step, by himself (and nobody will do it for him!). And if his training program was a dead end, well, someone else will become the Olympic champion.

The question of why progressive development (self-organization) goes from the simple to the complex, and not the other way round, is answered by Ashby's law of requisite variety: the more (the more diversely, the more intricately) the environment disturbs (stimulates) a developing system, the more responses the system must have in order to exist effectively in that environment, i.e. the system must become more complex. Conversely, the less the system is stimulated, the simpler it can be (regressive "development").
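In one common formulation of Ashby's law of requisite variety, with variety measured logarithmically, the relation can be written as follows (the symbols are introduced here for illustration and do not appear in the lecture):

$$
V_O \ge V_D - V_R
$$

Here $V_D$ is the variety of disturbances coming from the environment, $V_R$ is the variety of responses available to the system, and $V_O$ is the variety of outcomes. For a fixed admissible $V_O$, a larger $V_D$ can be offset only by a larger $V_R$, which is the sense in which a more varied environment demands a more complex system.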

The price of complexity is time. The self-organization of systems takes considerably more time than their creator would need. There are phenomenal people who can "create" the answer to a computational problem almost instantly. But if an iterative algorithm of numerical mathematics is used to solve it, then the more accurate the result we want (and hence the more complex, in terms of the number of correct digits after the decimal point), the more iterations the program must perform and, accordingly, the longer it occupies the computer.
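The link between accuracy and the number of iterations can be seen in a minimal sketch. Bisection for a square root is chosen here only as a convenient example of an iterative numerical algorithm; the lecture does not name a particular method, and the function name is hypothetical.

```python
def sqrt_bisection(value, digits):
    """Approximate sqrt(value) for value >= 1 by bisection.

    Stops when the bracketing interval is no wider than 10**(-digits) and
    returns the estimate together with the number of iterations performed.
    """
    tolerance = 10.0 ** (-digits)
    low, high = 1.0, float(value)
    iterations = 0
    while high - low > tolerance:
        middle = (low + high) / 2.0
        if middle * middle > value:
            high = middle  # the root lies in the lower half
        else:
            low = middle   # the root lies in the upper half
        iterations += 1
    return (low + high) / 2.0, iterations


if __name__ == "__main__":
    for digits in (3, 6, 9):
        estimate, steps = sqrt_bisection(2, digits)
        print(f"{digits} digits: sqrt(2) ~ {estimate:.10f} after {steps} iterations")
```

Each halving of the interval gains about one binary digit, so every three extra decimal digits cost roughly ten more iterations.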

The concepts of self-organization and synergetics mentioned in "Physics of information" have made it possible to explain many processes of development (including evolution) without invoking supernatural forces or the mysticism of miracles, even though self-organization appears to happen by itself, without visible causes or external intervention.

Example 4. According to scientific data, the evolution of cosmic bodies takes several billion years, the evolution of living nature on Earth hundreds of millions of years, and the evolution of mind tens of millions of years. Yet on the third day God created "greenery, grass yielding seed after its kind and likeness, and the fruit tree bearing fruit after its kind, whose seed is in itself, upon the earth", and on the fourth day "lights in the firmament of the heaven" (Bible, Genesis 1). Over the next two days the Lord created all the fauna and man. We admire the Creator's swiftness (if, of course, "day" and "Sabbath" are understood on the human scale). Evolution does not work that fast. God or evolution? It is an eternal dilemma, an unsolved riddle of being, which in our opinion should be left alone, like the mystery of Creation. The latter, together with the attributive information of nature, should be accepted as a given. And as for mysteries, well, without them life is dull and incomplete.

Multi-step processes that optimize some objective function, such as evolution and the self-organization of a thesaurus, are common in problems of dynamic planning, resource allocation, transport optimization and so on. Such problems can be solved in two ways: 1) search for all elements of the solution at all steps at once; 2) build the optimal control step by step, optimizing the objective function at each step. The first way can be implemented in finite dynamic programming algorithms with a known goal and result of optimization (expert systems, intellectual games, etc.). The second way is simpler and less risky than the first, especially when the number of steps is indefinitely large and the final result of optimization is uncertain. Note that resource allocation and transport optimization are, in essence, the main problems in biological and intellectual metabolic processes. Accordingly, there is little reason to believe that nature chooses complicated paths (creation) over simple ones (evolution) for solving the problems of self-organization. The observed complexity of nature is simple in origin. The task of rational science is to understand this simplicity.
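The difference in search effort between the two ways can be illustrated on a toy problem. Everything below is hypothetical: the gain table, the three states and the function names are invented, the first way is represented by plain brute-force enumeration of all paths (a stand-in, not a dynamic programming implementation), and the second by choosing the best next step only.

```python
from itertools import product

STATES = (0, 1, 2)

# Hypothetical gain for moving from the previous state to the next one.
GAIN = {
    (0, 0): 1, (0, 1): 2, (0, 2): 0,
    (1, 0): 0, (1, 1): 1, (1, 2): 3,
    (2, 0): 2, (2, 1): 0, (2, 2): 1,
}


def all_at_once(start, steps):
    """Way 1: examine every complete path and keep the best one."""
    best_total, best_path, examined = None, None, 0
    for path in product(STATES, repeat=steps):
        examined += 1
        total, previous = 0, start
        for state in path:
            total += GAIN[(previous, state)]
            previous = state
        if best_total is None or total > best_total:
            best_total, best_path = total, path
    return best_total, best_path, examined


def step_by_step(start, steps):
    """Way 2: at each step pick the move with the highest immediate gain."""
    total, previous, path, examined = 0, start, [], 0
    for _ in range(steps):
        examined += len(STATES)
        choice = max(STATES, key=lambda state: GAIN[(previous, state)])
        total += GAIN[(previous, choice)]
        path.append(choice)
        previous = choice
    return total, tuple(path), examined


if __name__ == "__main__":
    print("all at once: ", all_at_once(0, 4))
    print("step by step:", step_by_step(0, 4))
```

In this particular table both ways reach the same total, but the first examines 3**4 complete paths and the second only 3 options per step. In general a purely stepwise choice is not guaranteed to find the global optimum; its appeal is that the effort grows linearly with the number of steps and no final goal needs to be known in advance.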

It would be naive to assume that the simplicity of the self-organization mechanism in the above sense is identical to simplicity as nature "understands" it. The search for simplicity in the complexity of nature, as one aspect of the principle of simplicity ("Information and control"), should as far as possible not be too anthropic. Few are granted such an understanding of nature, one not limited by human experience and rational science. In twentieth-century physics these are, perhaps, Bohr, Einstein, H. Lorentz, de Broglie, Pauli, Heisenberg and Landau. N. Bohr's well-known aphorism about theories that are not crazy enough to be correct vividly illustrates the style professed by non-classical and post-non-classical science.

Example 5. Let us analyze some empirical examples of biological self-organization (I. Prigogine). Under the threat of starvation amoebae gather into a single multicellular mass; termite larvae concentrate in a limited area of the termite mound. Such cooperative self-organization is characteristic of any population, since a "common pot" is always more advantageous and economical than eating separately. In these examples (regardless of the specific self-organization mechanism of each population) it is important to understand that the stimulus for self-organization was that the diversity of the population reached some monitored threshold, which calls the subroutine for making an "organizational decision". A similar self-organization algorithm is characteristic of coacervate droplets, developing and competing firms, states, electoral blocs, students before an exam, families and clans, and so on. The underlying motive of cooperative self-organization is, in general, the instinct of self-preservation (survival), which manifests itself not only under the threat of hunger but in the face of any danger from a hostile environment. Thus, entrepreneurs who are "well fed" individually unite when faced with the threat of nationalization of private property; the weak unite into packs, tribes, states, (con)federations and alliances when faced with the threat of force. It is quite likely that multicellular organisms also appeared as a result of the cooperative self-organization of unicellular ones, which made joint survival, the assimilation of food and resistance to an aggressive environment easier. At the basis (at the input) of any organizational decision lies information: until the system has become aware of the input (external) information, there can be no question of any decision. This is, if you like, an axiom of control theory. Consequently, self-organization can indeed be reached only through knowledge of one's own information diversity. And self-knowledge is impossible without a thesaurus that stores information and the program for generating it.

Thus, information is generated as the system assimilates (comes to know) the diversity it has acquired, and this process consists in the mediated (through diversity) recognition and recoding of the external information of the environment into more valuable internal information codes of order, meaning and knowledge (depending on the level of development the system has reached). Such recognition and recoding is equivalent to generating ever more valuable kinds of information and is possible only if there is a development program and, in particular, an information generation program (information dissipation occurs "outside any program") whose operating principles resemble those of the "von Neumann computer". This is no coincidence: von Neumann worked closely with Wiener and other pioneers of cybernetics in developing computer and cybernetic concepts.

Example 6. In inanimate nature, examples of self-organization include the onset of turbulence in laminar fluid flow and the formation of Bénard convection cells (hydrodynamics), self-oscillating reactions (chemistry), and the materialization of virtual microparticles of the physical vacuum (physics).

Example 7. In society, examples of self-organization include the market with its "invisible hand" (A. Smith), true democracy, the formation of the information society, the Internet, social networks and network communities. Modern social institutions, as products of human activity, are for the most part not the result of conscious planning by those in power; they self-organized almost spontaneously out of the non-linear, non-equilibrium and sometimes catastrophic social processes of the 20th century.

The inextricable link between self-organization and information genesis means that information accompanies any development. And since all known open systems change and develop in one way or another, interacting with each other and with their habitat, there is a deep, indissoluble unity between information genesis and system genesis (A.N. Kochergin), and we can speak of informational expansion into all conceivable systems. In other words, informational expansion means that information is the main resource for the development of all systems without exception. Before the concept of information appeared there could be no talk of informational expansion; now its existence should not be in doubt.

The entire history of life, moving from the primitive sensory material and energy perceptions of the first single-celled organisms to the ideal apperceptions of the reflecting human consciousness, testifies to informational expansion in nature. In inorganic nature, it is much more difficult to trace the history of information expansion, because the history of nature is much longer than the history of life. But, based on the available ideas about attributive information and information physics (see topics 1, 2), we note that the concept of attributive information as internal information of all objects of nature without exception and the theory of the information field supplying physical fields with information is in harmony with the concept of information expansion . Therefore, we believe that informational expansion is peculiar to all natural systems — inorganic and organic.

Informational expansion is also observed in the development of artifacts of material culture, in particular computing and telecommunication equipment: from the original "big calculator" (the computer) to the modern portable information machine (informachine), and from the complex, expensive wired and wireless telephone and radio equipment of the nineteenth and twentieth centuries to simpler and more affordable modern devices, which largely rely on the communication infrastructure built earlier.

The formation of the information society is evidence of information expansion in the field of public relations. In the information society, information becomes a national resource and productive force, at least half of labor resources work in the information sphere, the cult of knowledge and universal literacy prevails in the mentality of people, the morality and ethics of relationships are replenished with cyber ethics and infoethics, states have to reckon with the existence of an independent global cyberspace, saturated with information and virtual personalities (by analogy with the physical space, saturated with matter and bodies). Powerful information flows invaded the life of mankind, threatening its psychological and physiological capabilities no less than tsunamis and typhoons. And if we consider the latter as manifestations of ocean expansion, then equally the “information flood” is a sign of information expansion.

The problem of man in the world of informational expansion acquires specific features ("Information and man"). There is a great deal of information in this world, even too much, but how to isolate vital knowledge in it is a big question: "Everyone wants to be informed honestly, impartially, truthfully, and in full accordance with his views" (G. Chesterton). Yet one can only dream of information that is honest, impartial and truthful, let alone in accordance with everyone's views. The muddy data stream that rushes at a person from the Internet and biased media carries, along with objective information, plenty of misinformation and information noise. Each of us has to separate the wheat from the chaff.

Recall that data is transformed into knowledge through self-learning, in which the system comes to know mostly (but not only) its own diversity (including the acquired information blocks) and the environment it reflects, and searches for, selects and remembers valuable information in the data stream, establishing additional links between the items. In this context the term "teaching" seems to us an archaism: teaching gives knowledge, but this is clearly not enough; in the end, knowledge has to be taken. The hands of the giver and the taker must meet; the whole educational process should be strategically redirected toward self-learning, for "a person fully understands only what he himself thinks of, just as a plant absorbs only the moisture that its roots absorb" (A. Rényi).

Strictly speaking, the stages of self-organization and self-learning of a system cannot be separated in time; the relationship between them is diffuse. Indeed, organization (ordering) as a restriction of diversity is impossible without coming to know that diversity, without searching for and selecting valuable data, and without using the primary memory mechanisms. Nor does self-organization stop at the stage of self-learning, just as exchange between the system and the environment does not stop while acquired metabolites are being assimilated. For this reason there is hardly any sense in separating self-learning from self-organization when considering it. The stages of self-learning (memorization, perception, assimilation, etc.) can be interpreted as self-organization of the thesaurus. At the information level, it is only important to bear in mind that self-learning consists of memorizing, storing and reproducing knowledge. If even one of these stages is disrupted, self-learning may not take place.

The self-learning of a system begins after it acquires from the environment a portion of information diversity in the form of data whose value is unknown. The self-learning algorithm consists in selecting data according to certain criteria of value and in linking the selected data, valuable for the system, into information: primary knowledge of the acquired diversity. This knowledge is stored in the thesaurus (knowledge base), which the system created in its memory at the very earliest stage of self-organization. However, self-learning does not end at the stage of binding valuable data. The subroutine that selects by criteria of value continues to work, “comprehending” the primary knowledge, because grasping the meaning of knowledge is the ultimate goal of self-learning. As a result, the system “learns” the acquired informational diversity and thereby increases its internal information (self-knowledge).
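The two steps of the self-learning algorithm described above (selection by a value criterion, then linking of the selected data in the thesaurus) can be sketched in a few lines of Python. This is only an illustrative toy, not the lecture's own formalism: the `Thesaurus` class, the `is_valuable` and `related` callbacks, and the numeric data are all assumptions introduced for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Thesaurus:
    """Hypothetical knowledge base: stored items plus links between them."""
    items: set = field(default_factory=set)
    links: set = field(default_factory=set)

    def learn(self, data, is_valuable, related):
        """Select valuable data, then bind each selected item to related stored items."""
        for datum in data:
            if not is_valuable(datum):
                continue  # unvalued data is discarded as noise
            for known in self.items:
                if known != datum and related(known, datum):
                    self.links.add(frozenset((known, datum)))
            self.items.add(datum)
        return self


# Toy criteria (assumptions): even numbers are "valuable",
# and two items are "related" if they differ by exactly 2.
thesaurus = Thesaurus().learn(
    [1, 2, 3, 4, 6, 9],
    is_valuable=lambda x: x % 2 == 0,
    related=lambda a, b: abs(a - b) == 2,
)
```

In this toy run only the items 2, 4 and 6 are retained, and they are tied together by the links 2-4 and 4-6; the odd numbers never enter the thesaurus.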

Information genesis does not stop at any stage of self-learning. After all, any act of selection (choice) or comprehension leads to disequilibrium of the system and to non-stationarity of the latent processes of information metabolism, and therefore to the generation of information. The environment participates in self-learning only indirectly, through its coded mapping in the receptor subsystem (V. V. Druzhinin, D. S. Kontorov, "Problems of Systemology", 1976).

Quantitative and qualitative transformations of the thesaurus occur constantly and in parallel (the constancy of changes does not imply their continuity; the nature of the changes will be clarified later). At the same time, simply increasing the number of “knowledge elements” (information blocks) in the thesaurus does not yet give the right to consider it a “treasure” of the system, just as separate, random, unrelated data (files) in a person's (or a computer's) long-term memory cannot be considered a knowledge base. Only if these elements are jointly understood in the form of ordered, meaningfully complete clusters, sets, lists, arrays, semantic and/or neural networks filled with structural elements such as terms, frames, records, objects, variables, graphs and formal neurons can we speak of a system (base) of knowledge, the thesaurus of an organism or a machine. In other words, the informational links between the elements of knowledge included in the thesaurus should be more reliable than their links to data outside the thesaurus. Incidentally, this is how any system differs from a “non-system”: the internal connections of a system are necessarily stronger than its external connections.

The organization of intrasystem relations is provided by addressing, under the control of the information generation program and the system's axiological (value) attitude toward knowledge ("Knowledge as the highest form of information"). In “Information and Consciousness” we noted the principal importance of addressing the elements of knowledge in the thesaurus (access speed, ease of editing). It is not for nothing that the well-known RAM principle (from the English "random access memory") implements address binding of memory cells in a von Neumann computer.

Let us picture the evolution of a thesaurus as the growing complexity of an open information system (see examples 1 and 2 in section 5.1) that is fed by its environment and has a limit of cognitive efficiency; this limit depends on how much of the environment's information resource the system usefully assimilates. (Recall that a self-organizing thesaurus has no information limit.)

Evolving from entropic chaos to informational order, such an open system simultaneously shows signs of movement from order to chaos, and the smaller the share of information valuable to the system in the data entering the thesaurus, the stronger these signs. This second effect progresses as the system approaches the end of its life cycle. As a result, “old” systems often arrive at the agnostic syndrome of ignorance: “I know that I do not know anything.”

But the entropic tendency of development should not be regarded only as a “senile” negative. “Young” systems do not avoid chaos either, because chaos is no less (and often more) informationally creative than order; which tendency prevails is determined by the goal of development. Order leans toward stability (preservation, continuity, restriction of diversity), chaos toward instability (novelty, variability, the creation of diversity). Order, adhering to the healthy conservatism of homeostatic systems, aims at "preserving what has been achieved" rather than seeking something better, since "the better is the enemy of the good." “Freedom-loving” chaos, on the contrary, craves creativity. The two tendencies are complementary and interpenetrate wherever they coexist, be it in an organism or a machine, a population or a state, an education system or sport, art or linguistics.

System studies have shown that the development of systems has a (quasi-)stepwise, emergent character (from the Latin emergere, "to appear"): the transition to each next step corresponds to a leap in the quality of the system, which acquires new, previously absent properties. We believe that the stepwise nature of development is explained by the discreteness of the process by which information is generated by the system's thesaurus, which stores the corresponding program.

Let us first clarify the concept of a qualitative leap. Nature does not provide for material and energy processes with infinite gradients, described by discontinuous functions with infinite derivatives with respect to time. Consequently, it is, generally speaking, incorrect to speak of ideal leaps in relation to real processes, even those studied by catastrophe theory. Viewed on a sufficiently fast time sweep, even the “steepest” jump looks like a transient process, a decaying or growing oscillation, rather than a perfectly vertical step.

Example 8. The Big Bang, if it took place, unfolded in time: "The first hundredth of a second", "The first three minutes"; these chapter titles from the book on the Big Bang theory by Nobel laureate S. Weinberg speak for themselves. Even the most powerful signal has a finite rise and fall (leading and trailing edges) because of the real time constants (temporal inertia) of the transmitting and receiving devices or organs. In a word, “no change happens in a jump” (G. W. Leibniz). This maxim also holds for the laws of thesaurus self-learning and of social development, which act within (not outside) nature and are not subject to the will and logos of people. Perhaps nature's rejection of ideal jumps is one of the most vivid pieces of indirect evidence of its evolutionary character.
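As a hedged illustration of the finite rise time mentioned in Example 8 (the first-order model and the symbols $A$ and $\tau$ are assumptions chosen for this example, not taken from the lecture), a “leap” of height $A$ in a system with a finite time constant $\tau > 0$ can be written as

$$x(t) = A\left(1 - e^{-t/\tau}\right), \qquad \frac{dx}{dt} = \frac{A}{\tau}\,e^{-t/\tau} \le \frac{A}{\tau}, \quad t \ge 0.$$

The slope never exceeds $A/\tau$ and becomes infinite only in the limit $\tau \to 0$, so the perfectly vertical step is an idealization of a fast but finite transition.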

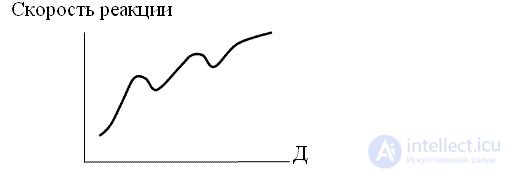

Example 9. The experimental dependence of operators' trainability (reaction speed) on the duration of training is shown in fig. 5.2 (after V. F. Venda). As can be seen, the dependence is essentially discrete.

In principle, each of us has encountered this discrete (threshold, relay-like) self-learning effect, when insight or skill (as indicators of self-learning performance) did not come immediately after the first training impulse. More than one such impulse, striking the same point from different sides, was needed before the heuristics of understanding or skill kicked in. This is how people learn to walk, dance, swim, ride a bike, master lathe work, programming, music, surgery, tensor analysis, or enter the complex worlds of Blavatsky, Husserl, Heidegger, Bergson, etc. In short, each act of self-learning requires the subthreshold accumulation of a certain minimum diversity of thesaurus states before that diversity reaches the detection and recognition thresholds of the thesaurus's information generator, which then triggers the generator and increases the system's efficiency.
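The threshold effect just described can be mimicked with a toy accumulator. The sketch below is an assumption-laden illustration rather than a model from the lecture: the function name `train`, the unit-sized impulses and the threshold of 4 are all invented for the example. Each training impulse adds to a subthreshold store, and efficiency rises by one step only when the store reaches the threshold.

```python
def train(impulses, threshold):
    """Return the efficiency level after each impulse.

    Efficiency steps up only when the accumulated (subthreshold)
    diversity reaches the threshold; the store is then reduced by it.
    """
    accumulated = 0
    efficiency = 0
    history = []
    for impulse in impulses:
        accumulated += impulse
        while accumulated >= threshold:
            accumulated -= threshold
            efficiency += 1  # the "generator" fires: a discrete step
        history.append(efficiency)
    return history


# Ten equal training impulses, threshold of four impulses (toy values).
history = train([1] * 10, threshold=4)
print(history)  # [0, 0, 0, 1, 1, 1, 1, 2, 2, 2]
```

Although the input arrives in equal portions, the output is a staircase: nothing visible happens during the first three impulses, and the fourth produces the step.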

In connection with the above, if we have used the concept of a leap and continue to do so, it is only in a philosophical sense. In the physical sense, a leap is a useful idealization that helps in the knowledge of nature, nothing more.

In order for a developing system to generate information, it must constantly study itself, coming to know the diversity of its states, which grows as a result of self-organization. Otherwise, no new information will arise. But any cognition, as we have repeatedly seen, is impossible without errors, including errors caused by the finite sensitivity and finite selectivity of the algorithms that recognize and classify diversity.

Example 10. At the input of any recognition and classification algorithm (artificial or natural) there is a communication channel supplying raw (unprocessed) data. The receiver can be, for example, the sensory (receptor) unit of some device, a sense organ, or a neural network. The limited sensitivity of the receiver is due to objective factors of two kinds: the receiver's internal noise and the external interference (noise) of the environment. Sensitivity usually fluctuates around a certain average value, which in turn drifts, as a rule toward deterioration, i.e. toward a higher threshold for detecting diversity. If this were not so, i.e. if sensitivity improved as the receiver aged, the receiver would not have to be periodically adjusted, repaired and, ultimately, written off as useless. A rise in the detection threshold lowers the frequency with which changes in diversity are detected and the frequency of qualitative changes in the thesaurus. If a change in diversity is detected, the system must adapt to it (reconfigure the system's matrix of reactions, control the diversity, change the detection threshold for diversity). Adaptation has a cyclical, spiral character; on each turn of the spiral, the system returns anew to the increased diversity.
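The link between an upward-drifting threshold and a falling detection frequency in Example 10 can be illustrated with a small deterministic sketch. Everything in it is an assumption made for the illustration: the function name `detect`, the linear drift law, and the repeating pattern of change magnitudes. Noise is deliberately left out so that the result is reproducible.

```python
def detect(changes, start_threshold, drift):
    """Flag each change in diversity that reaches the current threshold.

    The threshold is assumed to rise linearly by `drift` per step,
    imitating the ageing of a receiver.
    """
    return [
        change >= start_threshold + drift * step
        for step, change in enumerate(changes)
    ]


# The same pattern of change magnitudes repeats over 20 steps (toy values).
changes = [5, 10, 15, 20] * 5
flags = detect(changes, start_threshold=4, drift=1)

early = sum(flags[:10])  # detections in the first half of the life cycle
late = sum(flags[10:])   # detections in the second half
print(early, late)  # 7 3
```

The input statistics are identical in both halves, yet the "old" receiver registers fewer than half as many changes as the "young" one, purely because its threshold has drifted upward.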

Of course, detecting an increase in diversity and detecting a communication signal are not the same thing. In particular, it would be careless, to say the least, to state unequivocally that the threshold for detecting an increase in diversity in developing systems changes only upward. If the system is capable of bifurcational development, then bifurcations that drastically change the regular development or stagnation of the system can happen at any time and can bring about either an increase or a decrease in the system's sensitivity to changes in diversity. Identifying the mechanisms of threshold detection and recognition of changes is not so simple. But they exist, as shown by the results of biologists' fine thermochemical studies of living cells. These mechanisms share with signal detection common objective limitations: the presence of interference and a finite detection threshold. Internal and external noise, along with degrading the quality of detection of an increase in diversity, worsens the selectivity of the algorithms that recognize and classify new elements of the system, since noise "blurs" the boundaries between the characteristic features of these elements; this is true of any algorithms and their circuit implementations, whether they are algorithms of observation, thinking, cognition or play. A finite detection threshold makes it impossible to recognize too small a (subthreshold) increase in diversity, one below the threshold level; the system can recognize new diversity and respond to it adequately with a portion of information only if it differs from the previous diversity by at least the finite value of the detection threshold.

The two factors considered confirm the fundamental significance of discreteness, and of the errors it causes, for the self-learning of the thesaurus, as well as the objective character of the discreteness of cognition and development. Thus, the generation of information is an objectively discrete process whose nature lies in the quantization of diversity. Discrete generation of information is the cause of the stepwise changes (leaps) in the efficiency of a developing system and in the informativeness of its thesaurus.
