As in any historical essay, we have to limit ourselves to a small circle of people, events and discoveries, leaving aside many other facts that were no less important. This historical excursion therefore focuses on a limited set of questions.

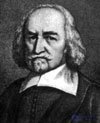

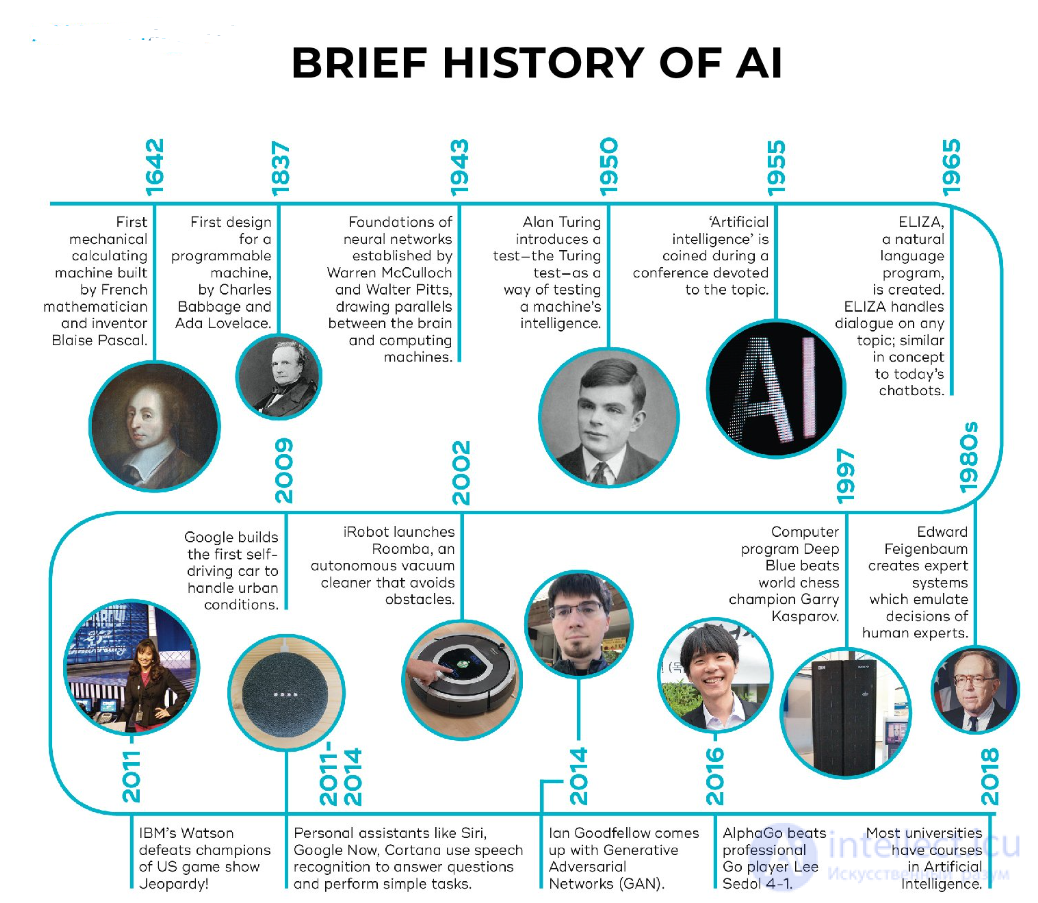

The history of artificial intelligence (AI) as a field spans more than half a century, from early concepts and theoretical developments to modern machine learning and neural network technologies, while its philosophical roots go back much further.

1. Early ideas and theoretical foundations (428 BC to the 1950s)

- Can formal rules be used to draw valid conclusions?

- How does something as intangible as thought arise from a physical object such as the brain?

- What is the origin of knowledge?

- How does knowledge lead to action?

- Ancient Ideas of Intelligent Machines: The idea of creating an artificial mind or mechanical being has deep roots in myths and legends, such as the Golem of Jewish folklore and Talos, the bronze giant of Greek mythology.

- Mechanical Automata: The 17th and 18th centuries saw the creation of mechanical devices such as Jacques de Vaucanson's automata and Wolfgang von Kempelen's "Turk", a chess-playing machine secretly operated by a hidden human player. Although not truly intelligent, such devices demonstrated a growing interest in imitating intelligence and in automation.

- The Beginnings of Computer Science: The work of Alan Turing and John von Neumann in the mid-20th century laid the foundations of computing. In 1936, Turing described a theoretical model of a computing machine (the Turing machine), which became a theoretical basis for modern computers.

A precise set of laws governing the rational part of thinking was first formulated by Aristotle (384–322 BCE). He developed an informal system of syllogisms for correct reasoning, which in principle allowed anyone to derive conclusions mechanically from given premises.
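To make the idea of deriving conclusions "mechanically" concrete, here is a minimal Python sketch. It is an illustration, not a historical reconstruction: it handles only one syllogistic form, Barbara (all A are B; all B are C; therefore all A are C), and applies it repeatedly until no new conclusions appear. The function name `barbara_closure` and the example terms are hypothetical.

```python
# Premises of the form "All X are Y" are stored as (X, Y) pairs.
Premise = tuple[str, str]


def barbara_closure(premises: set[Premise]) -> set[Premise]:
    """Apply Barbara (All A are B, All B are C => All A are C)
    until no new conclusions can be derived."""
    known = set(premises)
    changed = True
    while changed:
        changed = False
        for a, b in list(known):
            for b2, c in list(known):
                # The middle term must match for the syllogism to apply.
                if b == b2 and (a, c) not in known:
                    known.add((a, c))
                    changed = True
    return known


premises = {("Greeks", "humans"), ("humans", "mortals")}
for subject, predicate in sorted(barbara_closure(premises) - premises):
    print(f"All {subject} are {predicate}")
# prints: All Greeks are mortals
```

The procedure needs no understanding of what "Greeks" or "mortals" mean; it only matches the middle term, which is exactly the sense in which syllogistic reasoning can be carried out mechanically.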

Much later, Raymond Lull (d. 1315) put forward the idea that useful reasoning could actually be carried out using a mechanical artifact.

Thomas Hobbes (1588–1679) suggested that reasoning is analogous to numerical calculation, and that “in our silent thoughts we are forced to add and subtract.” By then, the automation of calculation itself was already well under way: around 1500, Leonardo da Vinci (1452–1519) designed, but did not build, a mechanical calculator, and a recent reconstruction has shown that his design works.

The first known calculating machine was built around 1623 by the German scientist Wilhelm Schickard (1592–1635), although the Pascaline, built in 1642 by Blaise Pascal (1623–1662), is better known. Pascal wrote that "the arithmetical machine produces an effect which seems closer to thought than any action of animals."

Gottfried Wilhelm Leibniz (1646–1716) built a mechanical device intended to operate on concepts rather than numbers, but its scope was quite limited.

Once it was accepted that a set of rules could describe the formal, rational part of thinking, the next step was to regard the mind itself as a physical system.

René Descartes (1596–1650) gave the first clear discussion of the distinction between mind and matter and of the problems it raises. One problem with a purely physical conception of the mind is that it seems to leave little room for free will: if the mind is governed entirely by physical laws, a person has no more free will than a boulder that “decides” to fall toward the center of the earth.

Descartes was a strong advocate of the power of reason (a position now called rationalism), but he was also a dualist: he held that part of the human mind (the soul or spirit) lies outside of nature and is not subject to physical laws.

Animals, on the other hand, lack this dual nature, so they can be treated as a kind of machine. An alternative to dualism is materialism, according to which the mind is constituted by the operation of the brain in accordance with the laws of physics. On this view, free will is simply the way the perception of available choices appears to the one doing the choosing.

If the mind is a physical system that manipulates knowledge, the next problem is to establish where that knowledge comes from. Empiricism, founded by Francis Bacon (1561–1626), author of the New Organon, can be summed up by a dictum of John Locke (1632–1704): "Nothing is in the understanding which was not first in the senses."

David Hume (1711–76), in A Treatise of Human Nature, proposed what is now known as the principle of induction: general rules are acquired through exposure to repeated associations between their elements. Building on the work of Ludwig Wittgenstein (1889–1951) and Bertrand Russell (1872–1970), the famous Vienna Circle, in which Rudolf Carnap (1891–1970) was a leading figure, developed the doctrine of logical positivism. According to this doctrine, all knowledge can be characterized by logical theories connected, ultimately, to observation sentences that correspond to sensory inputs.

The confirmation theory of Rudolf Carnap and Carl Hempel (1905–97) attempted to explain how knowledge can be acquired from experience. Carnap's The Logical Structure of the World defined an explicit computational procedure for extracting knowledge from elementary experiences. This was probably the first theory of mind as a computational process.

The final element in this picture of philosophical research into the problem of mind is the connection between knowledge and action. This question is vital for artificial intelligence, since intelligence requires not only thinking but also action. Moreover, only by understanding the ways in which actions are justified can we understand how to create an agent whose actions are justified (or rational).

Aristotle argued that actions are justified by a logical connection between goals and knowledge of an action's outcome. Aristotle's algorithm was implemented 2,300 years later by Newell and Simon in their GPS (General Problem Solver) program. Today it would be called a regression planning system, a form of goal-based analysis.
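The backward style of reasoning from goals to actions can be illustrated with a toy Python sketch. It is not a reconstruction of GPS: it assumes hypothetical actions that have only preconditions and positive effects (nothing is ever made false) and performs a simple depth-limited backward search. Starting from the goals, it picks an action that achieves one of them and replaces the goals with what must hold before that action, until everything still needed already holds in the current state. The names `Action`, `regress` and the example facts are invented for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Action:
    name: str
    preconditions: frozenset  # facts that must hold before the action
    effects: frozenset        # facts the action makes true (no deletions, by assumption)


def regress(goals: frozenset, state: frozenset, actions: list, depth: int = 10) -> Optional[list]:
    """Search backwards from `goals`; return action names in execution order,
    or None if no plan is found within `depth` steps."""
    unmet = goals - state
    if not unmet:
        return []  # every goal already holds: nothing to do
    if depth == 0:
        return None
    for action in actions:
        if action.effects & unmet:  # the action is relevant to some unmet goal
            # Regress the goals through the action: what must hold beforehand is
            # its preconditions plus the goals it does not achieve itself.
            subgoals = (goals - action.effects) | action.preconditions
            plan = regress(subgoals, state, actions, depth - 1)
            if plan is not None:
                return plan + [action.name]
    return None


# Hypothetical example actions.
actions = [
    Action("walk_to_market", frozenset({"wearing_shoes"}), frozenset({"at_market"})),
    Action("put_on_shoes", frozenset(), frozenset({"wearing_shoes"})),
]
print(regress(frozenset({"at_market"}), frozenset(), actions))
# prints ['put_on_shoes', 'walk_to_market']
```

The sketch returns the first plan it finds. It says nothing about which plan to prefer when several reach the goal, which is precisely the gap discussed next.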

Goal-based analysis is useful, but it does not say what to do when several courses of action achieve the goal, or when no course of action achieves it completely. Antoine Arnauld (1612–1694), analyzing rational decisions in gambling, correctly described a quantitative formula for such cases: choose the action that maximizes the expected value of the outcome.
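Arnauld's rule can be stated as a short calculation: for each action, multiply the value of every possible outcome by its probability, add up the products, and choose the action with the largest sum. A minimal Python sketch with two hypothetical bets (the names and numbers are invented for illustration):

```python
def expected_value(outcomes):
    """Sum of probability * value over an action's possible outcomes."""
    return sum(p * v for p, v in outcomes)


def best_action(actions):
    """Return the name of the action with the highest expected value."""
    return max(actions, key=lambda name: expected_value(actions[name]))


# Hypothetical bets: each maps to a list of (probability, payoff) pairs.
actions = {
    "safe_bet": [(1.0, 10)],
    "risky_bet": [(0.5, 30), (0.5, -5)],
}
for name, outcomes in actions.items():
    print(name, expected_value(outcomes))
# safe_bet: 1.0 * 10 = 10.0; risky_bet: 0.5 * 30 + 0.5 * (-5) = 12.5
print("choose:", best_action(actions))
# prints: choose: risky_bet
```

Unlike pure goal-based analysis, this comparison still gives an answer when both actions can "succeed" or when neither is guaranteed to.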

John Stuart Mill's (1806–1873) Utilitarianism argued that rational decision criteria should be applied in all spheres of human activity.

2. The birth of AI as a science (1950s)

- Turing Test (1950): In his paper "Computing Machinery and Intelligence", Alan Turing proposed a test to determine whether a machine possesses intelligence. The Turing Test became one of the first criteria for assessing artificial intelligence.

- Dartmouth Conference (1956): The term "artificial intelligence" is tied to this meeting; John McCarthy coined it in the 1955 proposal for the workshop. Researchers including John McCarthy, Marvin Minsky, Nathan Rochester and Claude Shannon organized the Dartmouth Conference to discuss the prospects of creating machines that could think. This meeting is considered the birth of AI as a science.

- First AI programs: In 1951, Christopher Strachey wrote one of the first programs that could play checkers, and in 1955–1956 Allen Newell and Herbert Simon created the "Logic Theorist", a program that could prove mathematical theorems.

3. Optimism and early successes (1950s–1960s)

- Early Programs and Models: This period saw the creation of programs for problem solving and theorem proving. The first programming languages for AI also emerged, such as LISP, developed by John McCarthy in 1958.

- ELIZA System: In 1966, Joseph Weizenbaum created ELIZA, a program that simulated conversation (most famously in the role of a psychotherapist) using simple pattern matching. Although its capabilities were limited, ELIZA showed the potential of human-machine interaction.

- Expert Systems: In the 1960s, work began on expert systems, which simulated the decision-making of professionals in narrow fields such as medicine and law.

4. The "AI winter" period (1970s–1980s)

- Disappointment and Lack of Funding: The 1970s saw a decline in interest in AI. It turned out that many tasks required far more computing power and data than the technology of the time could provide. Progress was slower than expected, and funding agencies and investors lost interest.

- Criticism and skepticism: The evident limitations of AI led to cuts in funding and research. The period from the mid-1970s to around 1980 is known as the first "AI winter."

- New approaches to learning: Despite the difficulties, some areas of research continued, such as work on machine learning and neural networks, although practical applications of these ideas were limited.

5. The Renaissance of AI and Expert Systems (1980s)

- Expert Systems: The early 1980s saw a new wave of interest in AI thanks to the development of expert systems that could assist with complex tasks such as disease diagnosis and prognosis.

- Commercial Applications: Expert systems found application in business and industry, attracting investment. However, they were highly specialized and lacked the ability to learn.

- Renewed Interest in Neural Networks: The popularization of new learning algorithms, above all backpropagation in the mid-1980s, breathed new life into neural network research.

6. The New "AI Winter" and Revival (1990s)

- Reassessment of possibilities: After expert systems failed to meet commercial expectations in the late 1980s, a new "AI winter" began. However, research in machine learning and data analysis continued.

- Breakthroughs in gaming and new fields: In 1997, IBM's chess computer Deep Blue defeated reigning world chess champion Garry Kasparov in a match. This success showed that AI could handle problems requiring deep analysis.

7. The era of big data and neural networks (2000s - present)

- Machine learning and big data: With the spread of the internet and the growth of computing power, a new era of AI began, driven by big data and improved machine learning algorithms.

- Deep Learning: In the early 2010s, deep learning made a breakthrough, allowing for more powerful and efficient neural networks. Modern deep learning-based AI systems have made significant advances in image recognition, speech recognition, and text analysis.

- Successes in various applications: Examples of successful AI applications include voice assistants (e.g. Siri, Alexa), self-driving cars, recommendation systems (e.g. Netflix, YouTube), medical diagnostics, and robotics.

- AlphaGo and Complex Problems: In 2016, DeepMind's AlphaGo program defeated Lee Sedol, one of the world's strongest Go players. Go had long been considered one of the toughest challenges for AI because of the huge number of possible moves, and the win became a symbol of what is possible in AI.

8. Current issues and prospects (2020s)

- Ethics and Security: As AI advances, new questions of ethics, transparency, and security arise. AI systems process personal data, make decisions in morally difficult situations, and can even be used for military purposes.

- General AI and superintelligence: Some developers aim to create strong (general) AI, a system that can solve problems at the level of human intelligence. Such AI does not yet exist, and the prospect of creating it raises questions of control and safety.

- Autonomous systems and regulation: Modern AI systems are used in self-driving cars, medicine and other critical areas, which calls for regulation and a careful approach to safety.

- The emergence of generative language models (GPT): In recent years, generative AI technologies such as OpenAI’s GPT (Generative Pre-trained Transformer) have revolutionized natural language processing. Trained on massive amounts of text, these models can interpret and generate text, hold conversations with users, translate between languages, produce creative writing, and even write code. They are used in fields such as education, customer service, creative writing, and information retrieval and analysis.

- Ethical challenges and liability: Models like GPT have broad capabilities, which raises questions about liability, especially if they are used to create disinformation or for other malicious purposes. Because they generate text in response to user prompts, they can, for example, unintentionally spread bias or reinforce undesirable narratives. This calls for regulation, greater transparency, and efforts to improve AI safety.

- Control and interaction issues: One important challenge is keeping control over how language models are used while making interaction between humans and AI more effective. Such models can already perform many intellectual tasks, and some see them as a partial step toward strong AI.

- Opportunities for personalization and automation: GPT models and similar technologies find applications in personalized recommendation systems, creating conversational AIs that support various types of services, and automating creative processes such as writing articles, scripts, poetry, and other texts.

Thus, the emergence of language models like GPT has been one of the most important advances in AI in the last decade. These models, along with the development of other AI technologies, continue to change the understanding of what is possible in artificial intelligence and create new opportunities and challenges.

The history of AI is a journey from theoretical ideas to real achievements. Modern AI systems are capable of much, but there are still many stages to go before creating a full-fledged artificial intelligence.
