Lecture

The “Corpus of Texts” request is redirected here. This topic needs a separate article.

Corpus linguistics - a section of linguistics, engaged in the development, creation and use of text corpuses (Text corpus). The term was introduced in the 1960s in connection with the development of the practice of building casings, which since the 1980s has been promoted by the development of computer technology.

A linguistic corpus is a collection of texts collected in accordance with certain principles, marked up according to a certain standard and provided with a specialized search engine [ source not specified 239 days ] . Sometimes a corpus (“corpus of the first order”) is simply called any collection of texts united by some common feature (language, genre, author, period of creation of texts).

The expediency of creating text boxes is explained by:

The first large computer case is considered the Brown Corpus (BC, English Brown Corpus , BC), which was created in the 1960s at Brown University and contained 500 fragments of texts with 2 thousand words each, which were published in English in the USA in 1961 As a result, he set the standard of 1 million word usage to create representative buildings in other languages. In the 1970s, according to a model close to the BC, the frequency dictionary of the Russian language by Zasorina was created, based on a corpus of texts of 1 million words and including socio-political texts, fiction, scientific and popular texts from different areas and drama. A similar model was built and the Russian case, created in the 1980s at the University of Uppsala, Sweden.

The size of one million words is sufficient for a lexicographic description of only the most frequent words, since the words and grammatical structures of medium frequency occur several times per million words (from a statistical point of view, language is a large set of rare events). So, each of such mundane words as eng. polite (polite) or eng. sunshine (sunlight) is found in BC only 7 times, the expression English. polite letter only once, and such stable expressions as English. polite conversation, smile, request never.

For these reasons, as well as in connection with the growth of computer capacity capable of working with large volumes of texts, in the 1980s, several attempts were made in the world to create larger corpuses. In the UK, such projects were the Bank of English (Bank of English) at the University of Birmingham and the British National Corpus (BNC). In the USSR, such a project was the Machine Fund of the Russian language, created on the initiative of A. P. Ershov.

The presence of a large number of texts in electronic form significantly eased the task of creating large representative corpuses of tens and hundreds of millions of words, but did not eliminate the problems: collecting thousands of texts, removing copyright problems, bringing all texts into a single form, balancing the corpus by themes and genres take a lot of time. Representative corps exist (or are developed) for German, Polish, Czech, Slovene, Finnish, Modern Greek, Armenian, Chinese, Japanese, Bulgarian, and other languages.

The National Corpus of the Russian language, created at the Russian Academy of Sciences, today contains more than 300 million word usage [2] .

Along with representative bodies that cover a wide range of genres and functional styles, opportunistic collections of texts are often used in linguistic studies, for example, newspapers (often the Wall Street Journal and New York Times), news feeds (Reuters), collections of fiction (Moshkova Library or Project Gutenberg).

The corpus consists of a finite number of texts, but it is intended to adequately reflect the lexicogram phenomena typical of the entire volume of texts in the corresponding language (or sublanguage). For representativeness is important as the size and structure of the body. The representative size depends on the problem, since it is determined by how many examples can be found for the studied phenomena. Due to the fact that, from a statistical point of view, a language contains a large number of relatively rare words (Zipf's Law), for the study of the first five thousand most frequent words (for example, loss, to apologize ), a corpus size of about 10-20 million word usage is required, while as for the description of the first twenty thousand words ( simple, heartbeat, swarm ), a corpus of over one hundred million word usage is already required.

The primary markup of texts includes the steps required for each body:

In large buildings, a problem arises that was previously irrelevant: a search on demand can produce hundreds or even thousands of results (usage contexts) that are simply physically impossible to see in a limited time. To solve this problem, systems are developed that allow grouping search results and automatically splitting them into subsets (clustering search results), or issuing the most stable combinations (collocations) with a statistical evaluation of their significance.

As a corpus, many texts can be used that are available on the Internet (i.e., billions of words for the main world languages). For linguists, the most common way to work with the Internet is to write queries to the search engine and interpret the results either by the number of pages found, or by the first returned links. In English, this methodology is called English. Googleology [3] , Yandexology can be a more appropriate name for Russian. It should be noted that such an approach is suitable for solving a limited class of problems, since the text markup tools used in the web do not describe a number of linguistic features of the text (specifying accents, grammatical classes, boundaries of word combinations, etc.). In addition, the case is complicated by the low prevalence of semantic layout.

In practice, the limitations of this approach leads to the fact that, for example, it is easiest to check the compatibility of two words through a query like "word1 word2". According to the results obtained, it is possible to judge how widespread such a combination is and in which texts it is more common. See also query statistics.

The second method consists in automatic extraction of a large number of pages from the Internet and their further use as a regular corpus, which makes it possible to mark it up and use linguistic parameters in queries. This method allows you to quickly create a representative corpus for any language sufficiently represented on the Internet, but its genre and thematic diversity will reflect the interests of Internet users [4] .

The use of Wikipedia as a corpus of texts [5] is becoming increasingly popular in the scientific community.

In 2006, the Tatoeba website appeared, allowing to freely add new and modify existing sentences in different languages, linked by meaning. It was based only on the Anglo-Japanese Corps, and now the number of languages exceeds 80, and the number of sentences is 600,000 [6] . Anyone can add new sentences and their translations, and if necessary, download all or part of the language corpus for free or in part.

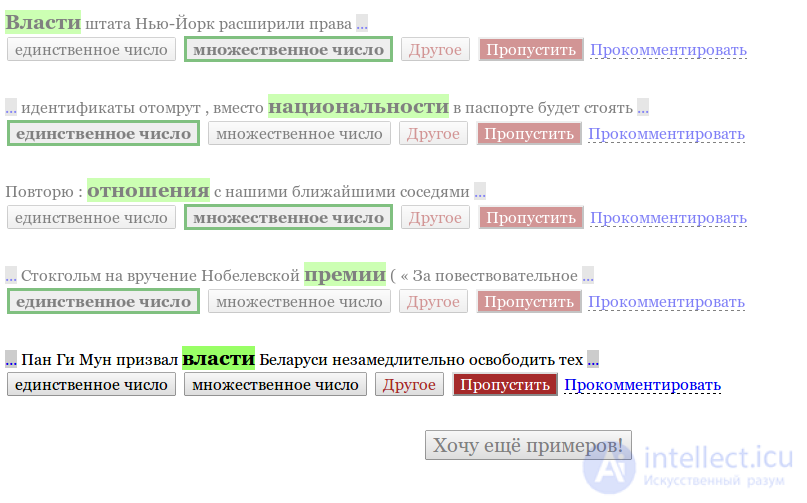

Interface markup system of the Russian Open Corps

Of interest is the draft of the open corpus of the Russian language, which not only uses the texts published under free licenses, but also allows anyone who wishes to take part in the linguistic markings of the corpus. This form of crowdsourcing was made possible by splitting the task of marking into small tasks, most of whom can be managed by a person without special linguistic training [7] . The corpus is constantly updated, all texts and software related to it are available under the licenses of GNU GPL v2 and CC-BY-SA.

Comments

To leave a comment

Natural language processing

Terms: Natural language processing