Lecture

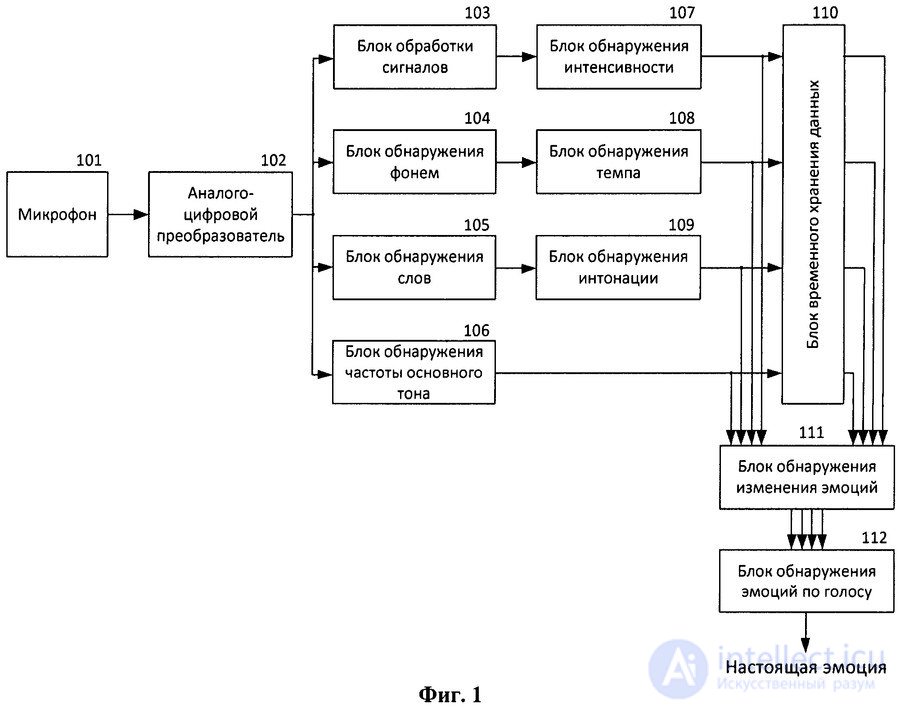

The invention relates to recognizing human emotions from the voice. The technical result is improved accuracy in determining the emotional state of a Russian-speaking subscriber. The subscriber's voice signal is input. From it, the voice intensity and the tempo (the speed at which the voice is produced) are detected, together with the intonation, which reflects the pattern of intensity change within each spoken word. Three change amounts are then obtained along the time axis: a first change amount for the detected voice intensity, a second for the voice tempo and a third for the voice intonation. After the third change amount is obtained, the pitch frequency of the voice signal is detected, and a fourth change amount, indicating the change in pitch frequency along the time axis, is obtained. Based on the first, second, third and fourth change amounts, signals are generated that express the emotional states of anger, fear, sadness and pleasure, respectively. 3 ill.
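The abstract does not say how intensity, pitch or the "change amounts" are computed, so the following is only a minimal sketch under stated assumptions. It uses per-frame RMS amplitude as the intensity, an autocorrelation peak as the pitch frequency, and the first difference between successive frames as the change amount along the time axis. All function names and parameters (`frame_len`, `hop`, `fmin`, `fmax`) are hypothetical. Tempo and intonation would need syllable or word segmentation, which is not shown, and the mapping from change amounts to anger, fear, sadness and pleasure is not given in the abstract, so it is not sketched either.

```python
import math
from typing import List, Optional


def frames(signal: List[float], frame_len: int, hop: int) -> List[List[float]]:
    """Split the signal into overlapping frames along the time axis."""
    return [signal[i:i + frame_len]
            for i in range(0, len(signal) - frame_len + 1, hop)]


def intensity(frame: List[float]) -> float:
    """RMS amplitude of one frame (assumed stand-in for 'voice intensity')."""
    return math.sqrt(sum(x * x for x in frame) / len(frame))


def pitch_hz(frame: List[float], sample_rate: int,
             fmin: float = 60.0, fmax: float = 400.0) -> Optional[float]:
    """Pitch frequency from the highest autocorrelation peak (assumed method).

    Returns None when no positive correlation is found, e.g. in silence.
    """
    min_lag = max(1, int(sample_rate / fmax))
    max_lag = min(int(sample_rate / fmin), len(frame) - 1)
    best_lag, best_r = None, 0.0
    for lag in range(min_lag, max_lag + 1):
        r = sum(frame[i] * frame[i + lag] for i in range(len(frame) - lag))
        if r > best_r:
            best_lag, best_r = lag, r
    return sample_rate / best_lag if best_lag else None


def change_amounts(series: List[Optional[float]]) -> List[Optional[float]]:
    """First differences along the time axis; None where a value is missing."""
    return [None if a is None or b is None else b - a
            for a, b in zip(series, series[1:])]


def analyze(signal: List[float], sample_rate: int,
            frame_len: int = 400, hop: int = 200) -> dict:
    """Per-frame intensity and pitch tracks plus their change amounts."""
    fr = frames(signal, frame_len, hop)
    inten = [intensity(f) for f in fr]
    pitch = [pitch_hz(f, sample_rate) for f in fr]
    return {
        "intensity": inten,
        "intensity_change": change_amounts(inten),  # cf. "first change amount"
        "pitch": pitch,
        "pitch_change": change_amounts(pitch),      # cf. "fourth change amount"
    }


if __name__ == "__main__":
    sr = 8000
    # Synthetic 200 Hz tone standing in for a voiced segment.
    tone = [0.5 * math.sin(2 * math.pi * 200 * n / sr) for n in range(1600)]
    result = analyze(tone, sr)
    print(result["pitch"])
    print(result["pitch_change"])
```

For a steady synthetic tone the pitch track stays at about 200 Hz and both change amounts stay near zero. A real implementation would more likely use a robust pitch tracker (for example YIN) and smooth the tracks before differencing. Tempo and intonation series, once extracted, could be passed to the same `change_amounts` helper to obtain the second and third change amounts.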



Terms: Auto Speech Recognition